Chapter 67. Configuring and managing logical volumes

67.1. Overview of logical volume management

Logical Volume Manager (LVM) creates a layer of abstraction over physical storage, which helps you to create logical storage volumes. This offers more flexibility compared to direct physical storage usage.

In addition, the hardware storage configuration is hidden from the software so you can resize and move it without stopping applications or unmounting file systems. This can reduce operational costs.

67.1.1. LVM architecture

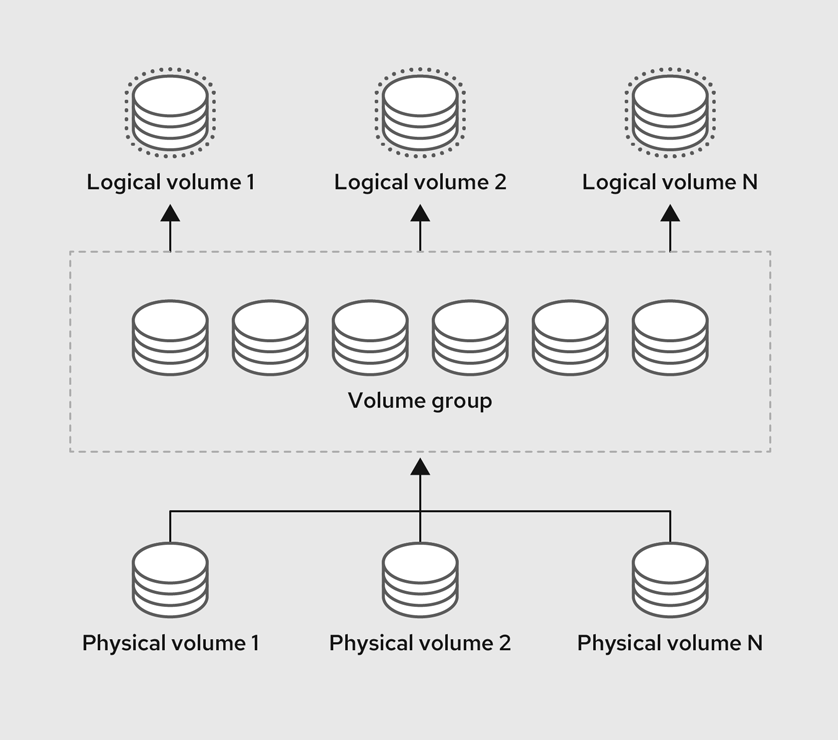

The following are the components of LVM:

- Physical volume

- A physical volume (PV) is a partition or whole disk designated for LVM use. For more information, see Managing LVM physical volumes.

- Volume group

- A volume group (VG) is a collection of physical volumes (PVs), which creates a pool of disk space out of which you can allocate logical volumes. For more information, see Managing LVM volume groups.

- Logical volume

- A logical volume represents a usable storage device. For more information, see Basic logical volume management and Advanced logical volume management.

The following diagram illustrates the components of LVM:

Figure 67.1. LVM logical volume components

67.1.2. Advantages of LVM

Logical volumes provide the following advantages over using physical storage directly:

- Flexible capacity

- When using logical volumes, you can aggregate devices and partitions into a single logical volume. With this functionality, file systems can extend across multiple devices as though they were a single, large one.

- Convenient device naming

- You can manage logical storage volumes with user-defined names.

- Resizeable storage volumes

- You can extend logical volumes or reduce logical volumes in size with simple software commands, without reformatting and repartitioning the underlying devices. For more information, see Resizing logical volumes.

- Online data relocation

- To deploy newer, faster, or more resilient storage subsystems, you can move data while your system is active using the pvmove command. Data can be rearranged on disks while the disks are in use. For example, you can empty a hot-swappable disk before removing it. For more information on how to migrate the data, see the pvmove man page and Removing physical volumes from a volume group.

- Striped volumes

- You can create a logical volume that stripes data across two or more devices. This can dramatically increase throughput. For more information, see Creating a striped logical volume.

- RAID volumes

- Logical volumes provide a convenient way to configure RAID for your data. This provides protection against device failure and improves performance. For more information, see Configuring RAID logical volumes.

- Volume snapshots

- You can take snapshots, which are point-in-time copies of logical volumes, for consistent backups or to test the effect of changes without affecting the real data. For more information, see Managing logical volume snapshots.

- Thin volumes

- Logical volumes can be thin-provisioned. This allows you to create logical volumes that are larger than the available physical space. For more information, see Creating a thin logical volume.

- Caching

- Caching uses fast devices, like SSDs, to cache data from logical volumes, boosting performance. For more information, see Caching logical volumes.

67.2. Managing LVM physical volumes

A physical volume (PV) is a physical storage device or a partition on a storage device that LVM uses.

During the initialization process, an LVM disk label and metadata are written to the device, which allows LVM to track and manage it as part of the logical volume management scheme.

You cannot increase the size of the metadata after the initialization. If you need larger metadata, you must set the appropriate size during the initialization process.

When the initialization process is complete, you can allocate the PV to a volume group (VG). You can divide this VG into logical volumes (LVs), which are the virtual block devices that operating systems and applications can use for storage.

To ensure optimal performance, partition the whole disk as a single PV for LVM use.

67.2.1. Creating an LVM physical volume

You can use the pvcreate command to initialize a physical volume for LVM usage.

Prerequisites

- Administrative access.

- The lvm2 package is installed.

Procedure

Identify the storage device you want to use as a physical volume. To list all available storage devices, use:

    $ lsblk

Create an LVM physical volume:

    # pvcreate /dev/sdb

Replace /dev/sdb with the name of the device you want to initialize as a physical volume.

Verification steps

Display the created physical volume:

    # pvs
      PV         VG  Fmt  Attr PSize  PFree
      /dev/sdb       lvm2 a--  28.87g 13.87g

67.2.2. Removing LVM physical volumes

You can use the pvremove command to wipe the LVM label from a device so that LVM no longer recognizes it as a physical volume.

Prerequisites

- Administrative access.

Procedure

List the physical volumes to identify the device you want to remove:

    # pvs
      PV         VG  Fmt  Attr PSize  PFree
      /dev/sdb1      lvm2 ---  28.87g 28.87g

Remove the physical volume:

    # pvremove /dev/sdb1

Replace /dev/sdb1 with the name of the device associated with the physical volume.

If your physical volume is part of a volume group, you need to remove it from the volume group first.

If your volume group contains more than one physical volume, use the vgreduce command:

    # vgreduce VolumeGroupName /dev/sdb1

Replace VolumeGroupName with the name of the volume group. Replace /dev/sdb1 with the name of the device.

If your volume group contains only one physical volume, use the vgremove command:

    # vgremove VolumeGroupName

Replace VolumeGroupName with the name of the volume group.
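The choice between vgreduce and vgremove described in this procedure can be sketched as a small shell helper. This is an illustrative sketch only: the function name pv_detach_command is hypothetical, and it prints the command rather than running it. The PV count is assumed to come from something like `vgs --noheadings -o pv_count VolumeGroupName`.

```shell
# Print the command that detaches a PV from its VG, following the rule
# in the procedure: vgreduce if the VG has more than one PV, else vgremove.
# Arguments: VG name, number of PVs in the VG, device path.
# (Hypothetical helper; it only prints the command, it does not run it.)
pv_detach_command() {
    vg=$1
    pv_count=$2
    device=$3
    if [ "$pv_count" -gt 1 ]; then
        echo "vgreduce $vg $device"
    else
        echo "vgremove $vg"
    fi
}

pv_detach_command VolumeGroupName 2 /dev/sdb1   # prints: vgreduce VolumeGroupName /dev/sdb1
pv_detach_command VolumeGroupName 1 /dev/sdb1   # prints: vgremove VolumeGroupName
```

Printing the command first gives you a chance to review it before running it as root, which matters because vgremove destroys the whole volume group.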

Verification

Verify the physical volume is removed:

    # pvs

67.2.3. Creating logical volumes in the web console

Logical volumes act as physical drives. You can use the RHEL 8 web console to create LVM logical volumes in a volume group.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- The volume group is created.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click Storage.

- In the Storage table, click the volume group in which you want to create logical volumes.

- On the Logical volume group page, scroll to the LVM2 logical volumes section and click .

- In the Name field, enter a name for the new logical volume. Do not include spaces in the name.

In the drop-down menu, select Block device for filesystems.

This configuration enables you to create a logical volume with the maximum volume size which is equal to the sum of the capacities of all drives included in the volume group.

Define the size of the logical volume. Consider:

- How much space the system using this logical volume will need.

- How many logical volumes you want to create.

You do not have to use the whole space. If necessary, you can grow the logical volume later.

Click .

The logical volume is created. To use the logical volume you must format and mount the volume.

Verification

On the Logical volume page, scroll to the LVM2 logical volumes section and verify whether the new logical volume is listed.

67.2.4. Formatting logical volumes in the web console

Logical volumes act as physical drives. To use them, you must format them with a file system.

Formatting logical volumes erases all data on the volume.

The file system you select determines the configuration parameters you can use for logical volumes. For example, the XFS file system does not support shrinking volumes.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- The logical volume is created.

- You have root access privileges to the system.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click .

- In the Storage table, click the volume group in which the logical volume is created.

- On the Logical volume group page, scroll to the LVM2 logical volumes section.

- Click the menu button next to the logical volume you want to format.

From the drop-down menu, select .

- In the Name field, enter a name for the file system.

In the Mount Point field, add the mount path.

In the drop-down menu, select a file system:

XFS file system supports large logical volumes, switching physical drives online without outage, and growing an existing file system. Leave this file system selected if you do not have a different strong preference.

XFS does not support reducing the size of a volume formatted with an XFS file system.

ext4 file system supports:

- Logical volumes

- Switching physical drives online without an outage

- Growing a file system

- Shrinking a file system

Select the Overwrite existing data with zeros checkbox if you want the RHEL web console to rewrite the whole disk with zeros. This option is slower because the program has to go through the whole disk, but it is more secure. Use this option if the disk includes any data and you need to overwrite it.

If you do not select the Overwrite existing data with zeros checkbox, the RHEL web console rewrites only the disk header. This increases the speed of formatting.

From the drop-down menu, select the type of encryption if you want to enable it on the logical volume.

You can select a version with either the LUKS1 (Linux Unified Key Setup) or LUKS2 encryption, which allows you to encrypt the volume with a passphrase.

- In the drop-down menu, select when you want the logical volume to mount after the system boots.

- Select the required Mount options.

Format the logical volume:

- If you want to format the volume and immediately mount it, click .

If you want to format the volume without mounting it, click .

Formatting can take several minutes depending on the volume size and which formatting options are selected.

Verification

On the Logical volume group page, scroll to the LVM2 logical volumes section and click the logical volume to check the details and additional options.

- If you selected the option, click the menu button at the end of the line of the logical volume, and select to use the logical volume.

67.2.5. Resizing logical volumes in the web console

You can extend or reduce logical volumes in the RHEL 8 web console. The example procedure demonstrates how to grow and shrink the size of a logical volume without taking the volume offline.

You cannot reduce volumes that contain a GFS2 or XFS file system.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- An existing logical volume containing a file system that supports resizing logical volumes.

Procedure

- Log in to the RHEL web console.

- Click .

- In the Storage table, click the volume group in which the logical volume is created.

On the Logical volume group page, scroll to the LVM2 logical volumes section and click the menu button next to the logical volume you want to resize.

From the menu, select Grow or Shrink to resize the volume:

Growing the Volume:

- Select Grow to increase the size of the volume.

In the Grow logical volume dialog box, adjust the size of the logical volume.

Click .

LVM grows the logical volume without causing a system outage.

Shrinking the Volume:

- Select Shrink to reduce the size of the volume.

In the Shrink logical volume dialog box, adjust the size of the logical volume.

Click .

LVM shrinks the logical volume without causing a system outage.

67.3. Managing LVM volume groups

You can create and use volume groups (VGs) to manage and resize multiple physical volumes (PVs) combined into a single storage entity.

Extents are the smallest units of space that you can allocate in LVM. Physical extents (PEs) and logical extents (LEs) have a default size of 4 MiB, which you can configure. All extents in a VG have the same size.

When you create a logical volume (LV) within a VG, LVM allocates physical extents on the PVs. The logical extents within the LV correspond one-to-one with physical extents in the VG. You do not need to specify the PEs to create LVs. LVM locates the available PEs and pieces them together to create an LV of the requested size.
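Because space is allocated in whole extents, a requested size that is not a multiple of the extent size is rounded up. The following sketch shows that arithmetic with plain shell integer math. The function names are hypothetical, sizes are in MiB, and the 4 MiB default is taken from the text above.

```shell
# Number of extents needed for a requested size, rounded up to a whole extent.
# Arguments: size in MiB, extent size in MiB (defaults to 4).
extents_for_size() {
    size_mib=$1
    extent_mib=${2:-4}
    echo $(( (size_mib + extent_mib - 1) / extent_mib ))
}

# Space actually consumed once the request is rounded up to whole extents.
allocated_size_mib() {
    extent_mib=${2:-4}
    echo $(( $(extents_for_size "$1" "$extent_mib") * extent_mib ))
}

extents_for_size 1024      # 1 GiB with 4 MiB extents: prints 256
allocated_size_mib 1001    # not a multiple of 4 MiB: prints 1004
```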

Within a VG, you can create multiple LVs, each acting like a traditional partition but with the ability to span across physical volumes and resize dynamically. VGs can manage the allocation of disk space automatically.

67.3.1. Creating an LVM volume group

You can use the vgcreate command to create a volume group (VG). You can adjust the extent size for very large or very small volumes to optimize performance and storage efficiency. You can specify the extent size when creating a VG. To change the extent size you must re-create the volume group.

Prerequisites

- Administrative access.

- The lvm2 package is installed.

- One or more physical volumes are created. For more information about creating physical volumes, see Creating LVM physical volume.

Procedure

List and identify the PV that you want to include in the VG:

    # pvs

Create a VG:

    # vgcreate VolumeGroupName PhysicalVolumeName1 PhysicalVolumeName2

Replace VolumeGroupName with the name of the volume group that you want to create. Replace PhysicalVolumeName1 and PhysicalVolumeName2 with the names of the PVs.

To specify the extent size when creating a VG, use the -s ExtentSize option. Replace ExtentSize with the size of the extent. If you provide no size suffix, the command defaults to MB.

Verification

Verify that the VG is created:

    # vgs
      VG              #PV #LV #SN Attr   VSize  VFree
      VolumeGroupName   1   0   0 wz--n- 28.87g 28.87g

67.3.2. Creating volume groups in the web console

Create volume groups from one or more physical drives or other storage devices.

Logical volumes are created from volume groups. Each volume group can include multiple logical volumes.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- Physical drives or other types of storage devices from which you want to create volume groups.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click .

- In the Storage table, click the menu button.

From the drop-down menu, select Create LVM2 volume group.

- In the Name field, enter a name for the volume group. The name must not include spaces.

Select the drives you want to combine to create the volume group.

The RHEL web console displays only unused block devices. If you do not see your device in the list, make sure that it is not being used by your system, or format it to be empty and unused. Used devices include, for example:

- Devices formatted with a file system

- Physical volumes in another volume group

- Physical volumes that are members of another software RAID device

Click .

The volume group is created.

Verification

- On the Storage page, check whether the new volume group is listed in the Storage table.

67.3.3. Renaming an LVM volume group

You can use the vgrename command to rename a volume group (VG).

Prerequisites

- Administrative access.

- The lvm2 package is installed.

- One or more physical volumes are created. For more information about creating physical volumes, see Creating LVM physical volume.

- The volume group is created. For more information about creating volume groups, see Section 67.3.1, “Creating an LVM volume group”.

Procedure

List and identify the VG that you want to rename:

    # vgs

Rename the VG:

    # vgrename OldVolumeGroupName NewVolumeGroupName

Replace OldVolumeGroupName with the name of the VG. Replace NewVolumeGroupName with the new name for the VG.

Verification

Verify that the VG has a new name:

    # vgs
      VG                 #PV #LV #SN Attr   VSize  VFree
      NewVolumeGroupName   1   0   0 wz--n- 28.87g 28.87g

67.3.4. Extending an LVM volume group

You can use the vgextend command to add physical volumes (PVs) to a volume group (VG).

Prerequisites

- Administrative access.

- The lvm2 package is installed.

- One or more physical volumes are created. For more information about creating physical volumes, see Creating LVM physical volume.

- The volume group is created. For more information about creating volume groups, see Section 67.3.1, “Creating an LVM volume group”.

Procedure

List and identify the VG that you want to extend:

    # vgs

List and identify the PVs that you want to add to the VG:

    # pvs

Extend the VG:

    # vgextend VolumeGroupName PhysicalVolumeName

Replace VolumeGroupName with the name of the VG. Replace PhysicalVolumeName with the name of the PV.

Verification

Verify that the VG now includes the new PV:

    # pvs
      PV         VG              Fmt  Attr PSize  PFree
      /dev/sda   VolumeGroupName lvm2 a--  28.87g 28.87g
      /dev/sdd   VolumeGroupName lvm2 a--   1.88g  1.88g

67.3.5. Combining LVM volume groups

You can combine two existing volume groups (VGs) with the vgmerge command. The source volume group is merged into the destination volume group.

Prerequisites

- Administrative access.

- The lvm2 package is installed.

- One or more physical volumes are created. For more information about creating physical volumes, see Creating LVM physical volume.

- Two or more volume groups are created. For more information about creating volume groups, see Section 67.3.1, “Creating an LVM volume group”.

Procedure

List and identify the VGs that you want to merge:

    # vgs
      VG               #PV #LV #SN Attr   VSize  VFree
      VolumeGroupName1   1   0   0 wz--n- 28.87g 28.87g
      VolumeGroupName2   1   0   0 wz--n-  1.88g  1.88g

Merge the source VG into the destination VG. The vgmerge command takes the destination VG as its first argument and the source VG as its second:

    # vgmerge VolumeGroupName1 VolumeGroupName2

Replace VolumeGroupName1 with the name of the destination VG. Replace VolumeGroupName2 with the name of the source VG.

Verification

Verify that the VG now includes the new PV:

    # vgs
      VG               #PV #LV #SN Attr   VSize  VFree
      VolumeGroupName1   2   0   0 wz--n- 30.75g 30.75g

67.3.6. Removing physical volumes from a volume group

To remove unused physical volumes (PVs) from a volume group (VG), use the vgreduce command. The vgreduce command shrinks a volume group’s capacity by removing one or more empty physical volumes. This frees those physical volumes to be used in different volume groups or to be removed from the system.

Procedure

If the physical volume is still being used, use the pvmove command to migrate the data to another physical volume from the same volume group.

If there are not enough free extents on the other physical volumes in the existing volume group:

Create a new physical volume from /dev/vdb4:

    # pvcreate /dev/vdb4
      Physical volume "/dev/vdb4" successfully created

Add the newly created physical volume to the volume group:

    # vgextend VolumeGroupName /dev/vdb4
      Volume group "VolumeGroupName" successfully extended

Move the data from /dev/vdb3 to /dev/vdb4:

    # pvmove /dev/vdb3 /dev/vdb4
      /dev/vdb3: Moved: 33.33%
      /dev/vdb3: Moved: 100.00%

Remove the physical volume /dev/vdb3 from the volume group:

    # vgreduce VolumeGroupName /dev/vdb3
      Removed "/dev/vdb3" from volume group "VolumeGroupName"

Verification

Verify that the /dev/vdb3 physical volume is removed from the VolumeGroupName volume group:

    # pvs
      PV         VG              Fmt  Attr PSize    PFree    Used
      /dev/vdb1  VolumeGroupName lvm2 a--  1020.00m       0  1020.00m
      /dev/vdb2  VolumeGroupName lvm2 a--  1020.00m       0  1020.00m
      /dev/vdb3                  lvm2 a--  1020.00m 1008.00m   12.00m

67.3.7. Splitting an LVM volume group

If there is enough unused space on the physical volumes, a new volume group can be created without adding new disks.

In the initial setup, the volume group VolumeGroupName1 consists of /dev/vdb1, /dev/vdb2, and /dev/vdb3. After completing this procedure, the volume group VolumeGroupName1 will consist of /dev/vdb1 and /dev/vdb2, and the second volume group, VolumeGroupName2, will consist of /dev/vdb3.

Prerequisites

- You have sufficient space in the volume group. Use the vgscan command to determine how much free space is currently available in the volume group.

- Depending on the free capacity in the existing physical volume, move all the used physical extents to another physical volume using the pvmove command. For more information, see Removing physical volumes from a volume group.

Procedure

Split the existing volume group VolumeGroupName1 to the new volume group VolumeGroupName2:

    # vgsplit VolumeGroupName1 VolumeGroupName2 /dev/vdb3
      Volume group "VolumeGroupName2" successfully split from "VolumeGroupName1"

Note: If you have created a logical volume using the existing volume group, use the following command to deactivate the logical volume:

    # lvchange -a n /dev/VolumeGroupName1/LogicalVolumeName

View the attributes of the two volume groups:

    # vgs
      VG               #PV #LV #SN Attr   VSize  VFree
      VolumeGroupName1   2   1   0 wz--n- 34.30G 10.80G
      VolumeGroupName2   1   0   0 wz--n- 17.15G 17.15G

Verification

Verify that the newly created volume group VolumeGroupName2 consists of /dev/vdb3 physical volume:

    # pvs
      PV         VG               Fmt  Attr PSize    PFree    Used
      /dev/vdb1  VolumeGroupName1 lvm2 a--  1020.00m       0  1020.00m
      /dev/vdb2  VolumeGroupName1 lvm2 a--  1020.00m       0  1020.00m
      /dev/vdb3  VolumeGroupName2 lvm2 a--  1020.00m 1008.00m   12.00m

67.3.8. Moving a volume group to another system

You can move an entire LVM volume group (VG) to another system using the following commands:

- vgexport

- Use this command on an existing system to make an inactive VG inaccessible to the system. Once the VG is inaccessible, you can detach its physical volumes (PVs).

- vgimport

- Use this command on the other system to make the VG, which was inactive in the old system, accessible in the new system.

Prerequisites

- No users are accessing files on the active volumes in the volume group that you are moving.

Procedure

Unmount the LogicalVolumeName logical volume:

    # umount /dev/mnt/LogicalVolumeName

Deactivate all logical volumes in the volume group, which prevents any further activity on the volume group:

    # vgchange -an VolumeGroupName
      vgchange -- volume group "VolumeGroupName" successfully deactivated

Export the volume group to prevent it from being accessed by the system from which you are removing it:

    # vgexport VolumeGroupName
      vgexport -- volume group "VolumeGroupName" successfully exported

View the exported volume group:

    # pvscan
      PV /dev/sda1 is in exported VG VolumeGroupName [17.15 GB / 7.15 GB free]
      PV /dev/sdc1 is in exported VG VolumeGroupName [17.15 GB / 15.15 GB free]
      PV /dev/sdd1 is in exported VG VolumeGroupName [17.15 GB / 15.15 GB free]
      ...

Shut down your system, unplug the disks that make up the volume group, and connect them to the new system.

Plug the disks into the new system and import the volume group to make it accessible to the new system:

    # vgimport VolumeGroupName

Note: You can use the --force argument of the vgimport command to import volume groups that are missing physical volumes and subsequently run the vgreduce --removemissing command.

Activate the volume group:

    # vgchange -ay VolumeGroupName

Mount the file system to make it available for use:

    # mkdir -p /mnt/VolumeGroupName/users
    # mount /dev/VolumeGroupName/users /mnt/VolumeGroupName/users

Additional resources

- vgimport(8), vgexport(8), and vgchange(8) man pages on your system

67.3.9. Removing LVM volume groups

You can remove an existing volume group using the vgremove command. Only volume groups that do not contain logical volumes can be removed.

Prerequisites

- Administrative access.

Procedure

Ensure the volume group does not contain logical volumes:

    # vgs -o vg_name,lv_count VolumeGroupName
      VG              #LV
      VolumeGroupName   0

Replace VolumeGroupName with the name of the volume group.

Remove the volume group:

    # vgremove VolumeGroupName

Replace VolumeGroupName with the name of the volume group.

67.3.10. Removing LVM volume groups in a cluster environment

In a cluster environment, LVM uses the lockspace to coordinate access to volume groups shared among multiple machines. You must stop the lockspace before removing a volume group to make sure no other node is trying to access or modify it during the removal process.

Prerequisites

- Administrative access.

- The volume group contains no logical volumes.

Procedure

Ensure the volume group does not contain logical volumes:

    # vgs -o vg_name,lv_count VolumeGroupName
      VG              #LV
      VolumeGroupName   0

Replace VolumeGroupName with the name of the volume group.

Stop the lockspace on all nodes except the node where you are removing the volume group:

    # vgchange --lockstop VolumeGroupName

Replace VolumeGroupName with the name of the volume group and wait for the lock to stop.

Remove the volume group:

    # vgremove VolumeGroupName

Replace VolumeGroupName with the name of the volume group.

67.4. Managing LVM logical volumes

A logical volume is a virtual, block storage device that a file system, database, or application can use. To create an LVM logical volume, the physical volumes (PVs) are combined into a volume group (VG). This creates a pool of disk space out of which LVM logical volumes (LVs) can be allocated.

67.4.1. Overview of logical volume features

With the Logical Volume Manager (LVM), you can manage disk storage in a flexible and efficient way that traditional partitioning schemes cannot offer. Below is a summary of key LVM features that are used for storage management and optimization.

- Concatenation

- Concatenation combines space from one or more physical volumes into a single logical volume, effectively merging the physical storage.

- Striping

- Striping optimizes data I/O efficiency by distributing data across multiple physical volumes. This method enhances performance for sequential reads and writes by allowing parallel I/O operations.

- RAID

- LVM supports RAID levels 0, 1, 4, 5, 6, and 10. When you create a RAID logical volume, LVM creates a metadata subvolume that is one extent in size for every data or parity subvolume in the array.

- Thin provisioning

- Thin provisioning enables the creation of logical volumes that are larger than the available physical storage. With thin provisioning, the system dynamically allocates storage based on actual usage instead of allocating a predetermined amount upfront.

- Snapshots

- With LVM snapshots, you can create point-in-time copies of logical volumes. A snapshot starts empty. As changes occur on the original logical volume, the snapshot captures the pre-change states through copy-on-write (CoW), growing only with changes to preserve the state of the original logical volume.

- Caching

- LVM supports the use of fast block devices, such as SSDs, as write-back or write-through caches for larger, slower block devices. You can create cache logical volumes to improve the performance of existing logical volumes, or create new cache logical volumes composed of a small, fast device coupled with a large, slow device.
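To make the striping entry in the list above more concrete, the following sketch models how consecutive chunks of a striped volume land on different devices in round-robin order. It is a simplified model and not LVM code: the function name stripe_index is hypothetical, and the 64 KiB stripe size and three devices are example values chosen for illustration.

```shell
# Simplified round-robin model of striping: which device (0-based index)
# holds the chunk that contains a given logical offset.
# Arguments: offset in KiB, stripe size in KiB, number of stripes (devices).
stripe_index() {
    offset_kib=$1
    stripe_kib=$2
    stripes=$3
    echo $(( (offset_kib / stripe_kib) % stripes ))
}

# Four consecutive 64 KiB chunks across three devices: 0, 1, 2, then 0 again.
for offset in 0 64 128 192; do
    echo "offset ${offset} KiB -> device $(stripe_index "$offset" 64 3)"
done
```

Because neighboring chunks sit on different devices, a large sequential read or write can keep several devices busy at once, which is where the throughput gain comes from.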

67.4.2. Managing logical volume snapshots

A snapshot is a logical volume (LV) that mirrors the content of another LV at a specific point in time.

67.4.2.1. Understanding logical volume snapshots

When you create a snapshot, you are creating a new LV that serves as a point-in-time copy of another LV. Initially, the snapshot LV contains no actual data. Instead, it references the data blocks of the original LV at the moment of snapshot creation.

It is important to regularly monitor the snapshot’s storage usage. If a snapshot reaches 100% of its allocated space, it will become invalid.

It is essential to extend the snapshot before it gets completely filled. This can be done manually by using the lvextend command or automatically via the /etc/lvm/lvm.conf file.

- Thick LV snapshots

- When data on the original LV changes, the copy-on-write (CoW) system copies the original, unchanged data to the snapshot before the change is made. This way, the snapshot grows in size only as changes occur, storing the state of the original volume at the time of the snapshot’s creation. Thick snapshots are a type of LV that requires you to allocate some amount of storage space upfront. You can extend or reduce this amount later, but you should consider what type of changes you intend to make to the original LV. This helps you to avoid either wasting resources by allocating too much space or needing to frequently increase the snapshot size if you allocate too little.

- Thin LV snapshots

Thin snapshots are a type of LV created from an existing thin provisioned LV. Thin snapshots do not require allocating extra space upfront. Initially, both the original LV and its snapshot share the same data blocks. When changes are made to the original LV, it writes new data to different blocks, while the snapshot continues to reference the original blocks, preserving a point-in-time view of the LV’s data at the snapshot creation.

Thin provisioning is a method of optimizing and managing storage efficiently by allocating disk space on an as-needed basis. This means that you can create multiple LVs without needing to allocate a large amount of storage upfront for each LV. The storage is shared among all LVs in a thin pool, making it a more efficient use of resources. A thin pool allocates space on-demand to its LVs.

- Choosing between thick and thin LV snapshots

- The choice between thick or thin LV snapshots is directly determined by the type of LV you are taking a snapshot of. If your original LV is a thick LV, your snapshots will be thick. If your original LV is thin, your snapshots will be thin.

67.4.2.2. Managing thick logical volume snapshots

When you create a thick LV snapshot, it is important to consider the storage requirements and the intended lifespan of your snapshot. You need to allocate enough storage for it based on the expected changes to the original volume. The snapshot must be large enough to capture changes during its intended lifespan, but it cannot exceed the size of the original LV. If you expect a low rate of change, a snapshot of 10%-15% of the original LV’s size might be sufficient. For LVs with a high rate of change, you might need to allocate 30% or more.
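The sizing guidance above can be sketched as simple arithmetic. The following is a minimal sketch, not an LVM tool: the snapshot_size_mib helper is hypothetical, and it assumes that the percentages apply to the size of the original LV, expressed in MiB.

```shell
# Hypothetical helper: estimate a thick snapshot size in MiB from the
# origin size (MiB) and the expected change rate (percent of the origin).
snapshot_size_mib() {
    origin_mib=$1
    change_percent=$2
    # Round up so that the estimate never undershoots the requested share.
    size=$(( ($origin_mib * $change_percent + 99) / 100 ))
    # The snapshot cannot exceed the size of the original LV, so cap it.
    if [ "$size" -gt "$origin_mib" ]; then
        size=$origin_mib
    fi
    echo "$size"
}

snapshot_size_mib 10240 15   # low rate of change on a 10 GiB origin: 1536
snapshot_size_mib 10240 30   # high rate of change on the same origin: 3072
```

You could pass the result to the --size option of lvcreate with an m suffix, for example 1536m.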

It is essential to extend the snapshot before it gets completely filled. If a snapshot reaches 100% of its allocated space, it becomes invalid. You can monitor the snapshot capacity with the lvs -o lv_name,data_percent,origin command.
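To act on the monitoring output, you can filter it for snapshots that are close to full. The following is a minimal sketch: the snapshots_over_threshold helper and the sample rows are hypothetical, and it assumes whitespace-separated rows in the order lv_name, data_percent, origin, as produced by lvs --noheadings with the field list shown above.

```shell
# Hypothetical helper: read "lv_name data_percent origin" rows on stdin and
# print the names of snapshots whose usage is at or above the threshold.
# Rows with fewer than three fields (LVs without an origin) are skipped.
snapshots_over_threshold() {
    awk -v t="$1" 'NF == 3 && $2 + 0 >= t { print $1 }'
}

# Sample rows standing in for real lvs output (illustrative values only).
printf '%s\n' \
    '  LogicalVolumeName' \
    '  SnapshotName 82.00 LogicalVolumeName' \
    '  OtherSnapshot 12.50 LogicalVolumeName' |
    snapshots_over_threshold 80

# On a real system, the input would come from:
#   lvs --noheadings -o lv_name,data_percent,origin | snapshots_over_threshold 80
```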

67.4.2.2.1. Creating thick logical volume snapshots

You can create a thick LV snapshot with the lvcreate command.

Prerequisites

- Administrative access.

- You have created a physical volume. For more information, see Creating LVM physical volume.

- You have created a volume group. For more information, see Creating LVM volume group.

- You have created a logical volume. For more information, see Creating logical volumes.

Procedure

Identify the LV of which you want to create a snapshot:

# lvs -o vg_name,lv_name,lv_size

VG LV LSize

VolumeGroupName LogicalVolumeName 10.00g

The size of the snapshot cannot exceed the size of the LV.

Create a thick LV snapshot:

# lvcreate --snapshot --size SnapshotSize --name SnapshotName VolumeGroupName/LogicalVolumeName

Replace SnapshotSize with the size you want to allocate for the snapshot (for example, 10G). Replace SnapshotName with the name you want to give to the snapshot logical volume. Replace VolumeGroupName with the name of the volume group that contains the original logical volume. Replace LogicalVolumeName with the name of the logical volume that you want to create a snapshot of.

Verification

Verify that the snapshot is created:

# lvs -o lv_name,origin

LV Origin

LogicalVolumeName

SnapshotName LogicalVolumeName

67.4.2.2.2. Manually extending logical volume snapshots

If a snapshot reaches 100% of its allocated space, it becomes invalid. It is essential to extend the snapshot before it gets completely filled. This can be done manually by using the lvextend command.

Prerequisites

- Administrative access.

Procedure

List the names of volume groups, logical volumes, source volumes for snapshots, their usage percentages, and sizes:

# lvs -o vg_name,lv_name,origin,data_percent,lv_size

VG LV Origin Data% LSize

VolumeGroupName LogicalVolumeName 10.00g

VolumeGroupName SnapshotName LogicalVolumeName 82.00 5.00g

Extend the thick-provisioned snapshot:

# lvextend --size +AdditionalSize VolumeGroupName/SnapshotName

Replace AdditionalSize with how much space to add to the snapshot (for example, 1G). Replace VolumeGroupName with the name of the volume group. Replace SnapshotName with the name of the snapshot.

Verification

Verify that the LV is extended:

# lvs -o vg_name,lv_name,origin,data_percent,lv_size

VG LV Origin Data% LSize

VolumeGroupName LogicalVolumeName 10.00g

VolumeGroupName SnapshotName LogicalVolumeName 68.33 6.00g
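The drop in Data% from 82.00 to 68.33 in the example output follows from the fact that extending the snapshot adds free space without changing the amount of data already stored in it. The following is a minimal sketch of that arithmetic. The usage_after_extend helper is hypothetical, and it assumes that no new writes reach the origin during the extension.

```shell
# Hypothetical helper: usage percentage of a snapshot after adding space.
# Arguments: current size (GiB), current usage (%), added size (GiB).
usage_after_extend() {
    awk -v size="$1" -v pct="$2" -v add="$3" 'BEGIN {
        used = size * pct / 100                      # space already consumed
        printf "%.2f\n", used / (size + add) * 100   # share of the new size
    }'
}

usage_after_extend 5 82 1   # 82% of 5 GiB, extended by 1 GiB: 68.33
```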

67.4.2.2.3. Automatically extending thick logical volume snapshots

If a snapshot reaches 100% of its allocated space, it becomes invalid. It is essential to extend the snapshot before it gets completely filled. This can be done automatically.

Prerequisites

- Administrative access.

Procedure

As the root user, open the /etc/lvm/lvm.conf file in an editor of your choice.

Uncomment the snapshot_autoextend_threshold and snapshot_autoextend_percent lines and set each parameter to a required value:

snapshot_autoextend_threshold = 70

snapshot_autoextend_percent = 20

snapshot_autoextend_threshold determines the percentage at which LVM starts to auto-extend the snapshot. For example, setting the parameter to 70 means that LVM will try to extend the snapshot when it reaches 70% capacity.

snapshot_autoextend_percent specifies by what percentage the snapshot should be extended when it reaches the threshold. For example, setting the parameter to 20 means the snapshot will be increased by 20% of its current size.

Save the changes and exit the editor.

Restart the lvm2-monitor service:

# systemctl restart lvm2-monitor
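To see how the two parameters interact, the following minimal sketch models a single auto-extension step. The autoextend_size helper is hypothetical and works in whole MiB. It ignores the rounding to physical extents that LVM applies, so treat the result as an approximation.

```shell
# Hypothetical model of one auto-extension step.
# Arguments: snapshot size (MiB), used space (MiB),
#            snapshot_autoextend_threshold (%), snapshot_autoextend_percent (%).
autoextend_size() {
    size=$1
    used=$2
    threshold=$3
    percent=$4
    # Integer comparison of used/size against threshold/100.
    if [ $(( $used * 100 )) -ge $(( $size * $threshold )) ]; then
        # Grow by the configured percentage of the current size.
        size=$(( $size + $size * $percent / 100 ))
    fi
    echo "$size"
}

autoextend_size 1024 717 70 20   # usage just above 70%: grows to 1228
autoextend_size 1024 500 70 20   # usage below 70%: stays at 1024
```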

67.4.2.2.4. Merging thick logical volume snapshots

You can merge a thick LV snapshot into the original logical volume from which the snapshot was created. Merging reverts the original LV to the state it was in when the snapshot was created. Once the merge is complete, the snapshot is removed.

The merge between the original and snapshot LV is postponed if either is active. It only proceeds once the LVs are reactivated and not in use.

Prerequisites

- Administrative access.

Procedure

List the LVs, their volume groups, and their paths:

# lvs -o lv_name,vg_name,lv_path

LV VG Path

LogicalVolumeName VolumeGroupName /dev/VolumeGroupName/LogicalVolumeName

SnapshotName VolumeGroupName /dev/VolumeGroupName/SnapshotName

Check where the LVs are mounted:

# findmnt -o SOURCE,TARGET /dev/VolumeGroupName/LogicalVolumeName

# findmnt -o SOURCE,TARGET /dev/VolumeGroupName/SnapshotName

Replace /dev/VolumeGroupName/LogicalVolumeName with the path to your logical volume. Replace /dev/VolumeGroupName/SnapshotName with the path to your snapshot.

Unmount the LVs:

# umount /LogicalVolume/MountPoint

# umount /Snapshot/MountPoint

Replace /LogicalVolume/MountPoint with the mounting point for your logical volume. Replace /Snapshot/MountPoint with the mounting point for your snapshot.

Deactivate the LVs:

# lvchange --activate n VolumeGroupName/LogicalVolumeName

# lvchange --activate n VolumeGroupName/SnapshotName

Replace VolumeGroupName with the name of the volume group. Replace LogicalVolumeName with the name of the logical volume. Replace SnapshotName with the name of your snapshot.

Merge the thick LV snapshot into the origin:

# lvconvert --merge VolumeGroupName/SnapshotName

Replace VolumeGroupName with the name of the volume group. Replace SnapshotName with the name of the snapshot.

Activate the LV:

# lvchange --activate y VolumeGroupName/LogicalVolumeName

Replace VolumeGroupName with the name of the volume group. Replace LogicalVolumeName with the name of the logical volume.

Mount the LV:

# mount /dev/VolumeGroupName/LogicalVolumeName /LogicalVolume/MountPoint

Replace /dev/VolumeGroupName/LogicalVolumeName with the path to your logical volume. Replace /LogicalVolume/MountPoint with the mounting point for your logical volume.

Verification

Verify that the snapshot is removed:

# lvs -o lv_name
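The order of the merge steps matters, because the merge is postponed while either LV is active. The following is a minimal dry-run sketch that only prints the command sequence for review and does not change any volumes. The merge_plan helper and the sample names (myvg, mylv, mysnap, /mnt/data, /mnt/snap) are hypothetical.

```shell
# Hypothetical dry-run helper: print the thick snapshot merge sequence.
# Arguments: VG name, origin LV, snapshot LV, origin mount point,
#            snapshot mount point.
merge_plan() {
    cat <<EOF
umount $4
umount $5
lvchange --activate n $1/$2
lvchange --activate n $1/$3
lvconvert --merge $1/$3
lvchange --activate y $1/$2
mount /dev/$1/$2 $4
EOF
}

# Print the plan for illustrative names. Nothing is executed.
merge_plan myvg mylv mysnap /mnt/data /mnt/snap
```

Printing the plan first lets you check the names and mount points before you run the commands as root.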

67.4.2.3. Managing thin logical volume snapshots

Thin provisioning is appropriate where storage efficiency is a priority. Dynamic allocation of storage space reduces initial storage costs and maximizes the use of available storage resources. In environments with dynamic workloads or where storage grows over time, thin provisioning provides flexibility. It enables the storage system to adapt to changing needs without requiring large upfront allocations of storage space. Dynamic allocation also makes over-provisioning possible: the total size of all LVs can exceed the physical size of the thin pool, under the assumption that not all space will be used at the same time.

67.4.2.3.1. Creating thin logical volume snapshots

You can create a thin LV snapshot with the lvcreate command. When creating a thin LV snapshot, avoid specifying the snapshot size. Including a size parameter results in the creation of a thick snapshot instead.

Prerequisites

- Administrative access.

- You have created a physical volume. For more information, see Creating LVM physical volume.

- You have created a volume group. For more information, see Creating LVM volume group.

- You have created a logical volume. For more information, see Creating logical volumes.

Procedure

Identify the LV of which you want to create a snapshot:

# lvs -o lv_name,vg_name,pool_lv,lv_size

LV VG Pool LSize

PoolName VolumeGroupName 152.00m

ThinVolumeName VolumeGroupName PoolName 100.00m

Create a thin LV snapshot:

# lvcreate --snapshot --name SnapshotName VolumeGroupName/ThinVolumeName

Replace SnapshotName with the name you want to give to the snapshot logical volume. Replace VolumeGroupName with the name of the volume group that contains the original logical volume. Replace ThinVolumeName with the name of the thin logical volume that you want to create a snapshot of.

Verification

Verify that the snapshot is created, for example by listing the LVs and their origins as in the thick snapshot procedure:

# lvs -o lv_name,origin

67.4.2.3.2. Merging thin logical volume snapshots

You can merge a thin LV snapshot into the original logical volume from which the snapshot was created. Merging reverts the original LV to the state it was in when the snapshot was created. Once the merge is complete, the snapshot is removed.

Prerequisites

- Administrative access.

Procedure

List the LVs, their volume groups, and their paths, for example with the same report fields as in the thick snapshot procedure:

# lvs -o lv_name,vg_name,lv_path

Check where the original LV is mounted:

# findmnt -o SOURCE,TARGET /dev/VolumeGroupName/ThinVolumeName

Replace /dev/VolumeGroupName/ThinVolumeName with the path to your logical volume.

Unmount the LV:

# umount /ThinLogicalVolume/MountPoint

Replace /ThinLogicalVolume/MountPoint with the mounting point for your logical volume.

Deactivate the LV:

# lvchange --activate n VolumeGroupName/ThinLogicalVolumeName

Replace VolumeGroupName with the name of the volume group. Replace ThinLogicalVolumeName with the name of the logical volume.

Merge the thin LV snapshot into the origin:

# lvconvert --mergethin VolumeGroupName/ThinSnapshotName

Replace VolumeGroupName with the name of the volume group. Replace ThinSnapshotName with the name of the snapshot.

Mount the LV:

# mount /dev/VolumeGroupName/ThinLogicalVolumeName /ThinLogicalVolume/MountPoint

Replace /dev/VolumeGroupName/ThinLogicalVolumeName with the path to your logical volume. Replace /ThinLogicalVolume/MountPoint with the mounting point for your logical volume.

Verification

Verify that the original LV is merged:

# lvs -o lv_name

67.4.3. Creating a RAID0 striped logical volume

A RAID0 logical volume spreads logical volume data across multiple data subvolumes in units of stripe size. The following procedure creates an LVM RAID0 logical volume called mylv that stripes data across the disks.

Prerequisites

- You have created three or more physical volumes. For more information about creating physical volumes, see Creating LVM physical volume.

- You have created the volume group. For more information, see Creating LVM volume group.

Procedure

Create a RAID0 logical volume from the existing volume group. The following command creates the 2G RAID0 volume mylv in the volume group my_vg, with three stripes and a stripe size of 4 KiB:

# lvcreate --type raid0 -L 2G --stripes 3 --stripesize 4 -n mylv my_vg

Rounding size 2.00 GiB (512 extents) up to stripe boundary size 2.00 GiB(513 extents).

Logical volume "mylv" created.

Create a file system on the RAID0 logical volume. The following command creates an ext4 file system on the logical volume:

# mkfs.ext4 /dev/my_vg/mylv

Mount the logical volume and report the file system disk space usage:

# mount /dev/my_vg/mylv /mnt

# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/my_vg-mylv 2002684 6168 1875072 1% /mnt
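The lvcreate output in this procedure rounds 512 extents up to 513 because the number of extents in a striped volume must divide evenly among the stripes. The following is a minimal sketch of that rounding. The round_to_stripe_boundary helper is hypothetical, and the 512-extent figure assumes a 4 MiB extent size, which is what the example output implies for a 2 GiB volume.

```shell
# Hypothetical helper: round an extent count up to the next multiple of
# the stripe count. Arguments: requested extents, number of stripes.
round_to_stripe_boundary() {
    echo $(( ($1 + $2 - 1) / $2 * $2 ))
}

round_to_stripe_boundary 512 3   # 2 GiB in 4 MiB extents, 3 stripes: 513
round_to_stripe_boundary 512 2   # already a multiple of 2: 512
```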

Verification

View the created RAID0 striped logical volume, for example by using the lvs command.

67.4.4. Removing a disk from a logical volume

This procedure describes how to remove a disk from an existing logical volume, either to replace the disk or to use the disk as part of a different volume.

In order to remove a disk, you must first move the extents on the LVM physical volume to a different disk or set of disks.

Procedure

View the used and free space of the physical volumes:

# pvs -o+pv_used

PV VG Fmt Attr PSize PFree Used

/dev/vdb1 myvg lvm2 a-- 1020.00m 0 1020.00m

/dev/vdb2 myvg lvm2 a-- 1020.00m 0 1020.00m

/dev/vdb3 myvg lvm2 a-- 1020.00m 1008.00m 12.00m

Move the data to another physical volume:

- If there are enough free extents on the other physical volumes in the existing volume group, use the pvmove command to move the data.

- If there are not enough free extents on the other physical volumes in the existing volume group, use the pvcreate, vgextend, and pvmove commands to add a new physical volume, extend the volume group using the newly created physical volume, and move the data to this physical volume.

Remove the physical volume:

# vgreduce myvg /dev/vdb3

Removed "/dev/vdb3" from volume group "myvg"

If a logical volume contains a physical volume that fails, you cannot use that logical volume. To remove missing physical volumes from a volume group, you can use the --removemissing parameter of the vgreduce command, if there are no logical volumes that are allocated on the missing physical volumes:

# vgreduce --removemissing myvg
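The choice between the two ways of moving the data depends on whether the other physical volumes have enough free space for the extents on the disk that you are removing. The following is a minimal dry-run sketch that prints the commands for either case without executing them. The pv_removal_plan helper is hypothetical, and /dev/vdb4 is an assumed name for a spare device that does not appear in the procedure.

```shell
# Hypothetical dry-run helper: print the commands for removing a PV.
# Arguments: VG name, PV to remove, MiB used on that PV,
#            MiB free on the other PVs in the VG, spare device to add.
pv_removal_plan() {
    if [ "$4" -ge "$3" ]; then
        # Enough room elsewhere in the VG: let LVM pick the destination.
        echo "pvmove $2"
    else
        # Not enough room: add a new PV and move the data onto it.
        echo "pvcreate $5"
        echo "vgextend $1 $5"
        echo "pvmove $2 $5"
    fi
    echo "vgreduce $1 $2"
}

# Values from the pvs output in this procedure: 12 MiB used on /dev/vdb3,
# no free space on /dev/vdb1 and /dev/vdb2.
pv_removal_plan myvg /dev/vdb3 12 0 /dev/vdb4
```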

67.4.5. Changing physical drives in volume groups using the web console

You can change the drive in a volume group using the RHEL 8 web console.

Prerequisites

- A new physical drive for replacing the old or broken one.

- The configuration expects that physical drives are organized in a volume group.

67.4.5.1. Adding physical drives to volume groups in the web console

You can add a new physical drive or other type of volume to the existing logical volume by using the RHEL 8 web console.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- A volume group must be created.

- A new drive connected to the machine.

Procedure

- Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click Storage.

- In the Storage table, click the volume group to which you want to add physical drives.

- On the LVM2 volume group page, click the button that opens the Add Disks dialog box.

- In the Add Disks dialog box, select the preferred drives and confirm your selection.

Verification

- On the LVM2 volume group page, check the Physical volumes section to verify whether the new physical drives are available in the volume group.

67.4.5.2. Removing physical drives from volume groups in the web console

If a logical volume includes multiple physical drives, you can remove one of the physical drives online.

The system automatically moves all data from the drive being removed to other drives during the removal process. Note that this can take some time.

The web console also verifies whether there is enough space for removing the physical drive.

Prerequisites

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

- The cockpit-storaged package is installed on your system.

- A volume group with more than one physical drive connected.

Procedure

- Log in to the RHEL 8 web console.

- Click Storage.

- In the Storage table, click the volume group from which you want to remove physical drives.

- On the LVM2 volume group page, scroll to the Physical volumes section.

- Click the menu button next to the physical volume you want to remove.

- From the drop-down menu, select the option to remove the physical volume.

The RHEL 8 web console verifies whether the logical volume has enough free space to remove the disk. If there is no free space to transfer the data, you cannot remove the disk and you must first add another disk to increase the capacity of the volume group. For details, see Adding physical drives to logical volumes in the web console.

67.4.6. Removing logical volumes

You can remove an existing logical volume, including snapshots, using the lvremove command.

Prerequisites

- Administrative access.

Procedure

List the logical volumes and their paths:

# lvs -o lv_name,lv_path

LV Path

LogicalVolumeName /dev/VolumeGroupName/LogicalVolumeName

Check where the logical volume is mounted:

# findmnt -o SOURCE,TARGET /dev/VolumeGroupName/LogicalVolumeName

SOURCE TARGET

/dev/mapper/VolumeGroupName-LogicalVolumeName /MountPoint

Replace /dev/VolumeGroupName/LogicalVolumeName with the path to your logical volume.

Unmount the logical volume:

# umount /MountPoint

Replace /MountPoint with the mounting point for your logical volume.

Remove the logical volume:

# lvremove VolumeGroupName/LogicalVolumeName

Replace VolumeGroupName/LogicalVolumeName with the path to your logical volume.

67.4.7. Managing LVM logical volumes by using RHEL system roles

Use the storage role to perform the following tasks:

- Create an LVM logical volume in a volume group consisting of multiple disks.

- Create an ext4 file system with a given label on the logical volume.

- Persistently mount the ext4 file system.

Prerequisites

- An Ansible playbook including the storage role

67.4.7.1. Creating or resizing a logical volume by using the storage RHEL system role

Use the storage role to perform the following tasks:

- Create an LVM logical volume in a volume group consisting of many disks

- Resize an existing file system on LVM

- Express an LVM volume size as a percentage of the pool’s total size

If the volume group does not exist, the role creates it. If a logical volume exists in the volume group, it is resized if the size does not match what is specified in the playbook.

If you are reducing a logical volume, to prevent data loss you must ensure that the file system on that logical volume is not using the space in the logical volume that is being reduced.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example ~/playbook.yml, with the following content:

The settings specified in the example playbook include the following:

- size: <size>

- You must specify the size by using units (for example, GiB) or percentage (for example, 60%).

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that the specified volume has been created or resized to the requested size:

# ansible managed-node-01.example.com -m command -a 'lvs myvg'

67.4.8. Resizing an existing file system on LVM by using the storage RHEL system role

You can use the storage RHEL system role to resize an LVM logical volume with a file system.

If the logical volume you are reducing has a file system, to prevent data loss you must ensure that the file system is not using the space in the logical volume that is being reduced.

Prerequisites

- You have prepared the control node and the managed nodes.

- You are logged in to the control node as a user who can run playbooks on the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions on them.

Procedure

Create a playbook file, for example ~/playbook.yml, with the following content:

This playbook resizes the following existing file systems:

- The Ext4 file system on the mylv1 volume, which is mounted at /opt/mount1, resizes to 10 GiB.

- The Ext4 file system on the mylv2 volume, which is mounted at /opt/mount2, resizes to 50 GiB.

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that the logical volume has been resized to the requested size:

# ansible managed-node-01.example.com -m command -a 'lvs myvg'

Verify the file system size by using file system tools. For example, for ext4, calculate the file system size by multiplying the block count and the block size reported by the dumpe2fs tool. Because this command line contains a pipe, run it with the shell module. The command module does not process shell operators:

# ansible managed-node-01.example.com -m shell -a 'dumpe2fs -h /dev/myvg/mylv | grep -E "Block count|Block size"'
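The multiplication of block count and block size can be scripted. The following is a minimal sketch: the ext4_size_bytes helper is hypothetical, and the sample values are illustrative, not output from a real system. It assumes input lines in the "Block count:" and "Block size:" form that dumpe2fs -h prints.

```shell
# Hypothetical helper: read dumpe2fs -h style lines on stdin and print the
# file system size in bytes (block count multiplied by block size).
ext4_size_bytes() {
    awk -F': *' '
        /^Block count:/ { count = $2 }
        /^Block size:/  { size = $2 }
        END { printf "%.0f\n", count * size }
    '
}

# Illustrative values: 2621440 blocks of 4096 bytes equal 10 GiB.
printf 'Block count:              2621440\nBlock size:               4096\n' |
    ext4_size_bytes
```

The sample input yields 10737418240 bytes, which matches a 10 GiB file system.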

67.5. Modifying the size of a logical volume

After you have created a logical volume, you can modify the size of the volume.

67.5.1. Extending a striped logical volume

You can extend a striped logical volume (LV) by using the lvextend command with the required size.

Prerequisites

- You have enough free space on the underlying physical volumes (PVs) that make up the volume group (VG) to support the stripe.

Procedure

Optional: Display your volume group:

# vgs

VG #PV #LV #SN Attr VSize VFree

myvg 2 1 0 wz--n- 271.31G 271.31G

Optional: Create a stripe using the entire amount of space in the volume group:

# lvcreate -n stripe1 -L 271.31G -i 2 myvg

Using default stripesize 64.00 KB

Rounding up size to full physical extent 271.31 GiB

Optional: Extend the myvg volume group by adding new physical volumes:

# vgextend myvg /dev/sdc1

Volume group "myvg" successfully extended

Repeat this step to add sufficient physical volumes depending on your stripe type and the amount of space used. For example, for a two-way stripe that uses up the entire volume group, you need to add at least two physical volumes.

Extend the striped logical volume stripe1 that is a part of the myvg VG:

# lvextend myvg/stripe1 -L 542G

Using stripesize of last segment 64.00 KB

Extending logical volume stripe1 to 542.00 GB

Logical volume stripe1 successfully resized

You can also extend the stripe1 logical volume to fill all of the unallocated space in the myvg volume group:

# lvextend -l+100%FREE myvg/stripe1

Size of logical volume myvg/stripe1 changed from 1020.00 MiB (255 extents) to <2.00 GiB (511 extents).

Logical volume myvg/stripe1 successfully resized.

Verification

Verify the new size of the extended striped LV:

# lvs

LV VG Attr LSize Pool Origin Data% Move Log Copy% Convert

stripe1 myvg wi-ao---- 542.00 GB
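The requirement to add at least as many physical volumes as there are stripes can be illustrated with a rough estimate. The following is a simplified sketch, not a description of the LVM allocator: the max_striped_extension helper is hypothetical, the extent counts are illustrative, and the model assumes that each stripe needs an equal share of free extents on a separate physical volume.

```shell
# Hypothetical estimate of how many extents a striped LV can grow by.
# Arguments: number of stripes, then the free extents on each PV.
max_striped_extension() {
    stripes=$1
    shift
    # Sort the free extents in descending order. The PV in position
    # "stripes" limits how much every stripe can grow.
    printf '%s\n' "$@" | sort -rn | awk -v n="$stripes" '
        NR == n { print $1 * n; found = 1 }
        END { if (!found) print 0 }'
}

max_striped_extension 2 0 0 34729         # one new PV only: 0
max_striped_extension 2 0 0 34729 34729   # two new PVs: 69458
```

In this model, adding a single physical volume to a full two-way stripe leaves nothing to extend into, which is why the procedure asks you to add physical volumes until each stripe has room.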

67.6. Customizing the LVM report

LVM provides a wide range of configuration and command line options to produce customized reports. You can sort the output, specify units, use selection criteria, and update the lvm.conf file to customize the LVM report.

67.6.1. Controlling the format of the LVM display

When you use the pvs, lvs, or vgs command without additional options, you see the default set of fields displayed in the default sort order. The default fields for the pvs command include the following information sorted by the name of physical volumes:

# pvs

PV VG Fmt Attr PSize PFree

/dev/vdb1 VolumeGroupName lvm2 a-- 17.14G 17.14G

/dev/vdb2 VolumeGroupName lvm2 a-- 17.14G 17.09G

/dev/vdb3 VolumeGroupName lvm2 a-- 17.14G 17.14G

- PV

- Physical volume name.

- VG

- Volume group name.

- Fmt

- Metadata format of the physical volume: lvm2 or lvm1.

- Attr

- Status of the physical volume: (a) - allocatable or (x) - exported.

- PSize

- Size of the physical volume.

- PFree

- Free space remaining on the physical volume.

Displaying custom fields

To display a different set of fields than the default, use the -o option. The following example displays only the name, size and free space of the physical volumes:

# pvs -o pv_name,pv_size,pv_free

PV PSize PFree

/dev/vdb1 17.14G 17.14G

/dev/vdb2 17.14G 17.09G

/dev/vdb3 17.14G 17.14G

Sorting the LVM display

To sort the results by specific criteria, use the -O option. The following example sorts the entries by the free space of their physical volumes in ascending order:

# pvs -o pv_name,pv_size,pv_free -O pv_free

PV PSize PFree

/dev/vdb2 17.14G 17.09G

/dev/vdb1 17.14G 17.14G

/dev/vdb3 17.14G 17.14G

To sort the results by descending order, use the -O option along with the - character:

# pvs -o pv_name,pv_size,pv_free -O -pv_free

PV PSize PFree

/dev/vdb1 17.14G 17.14G

/dev/vdb3 17.14G 17.14G

/dev/vdb2 17.14G 17.09G

67.6.2. Specifying the units for an LVM display

You can view the size of the LVM devices in base 2 or base 10 units by specifying the --units argument of an LVM display command. See the following table for all arguments:

| Units type | Description | Available options | Default |
|---|---|---|---|
| Base 2 units | Units are displayed in powers of 2 (multiples of 1024). | b, s, k, m, g, t, p, e, h, r | r |
| Base 10 units | Units are displayed in multiples of 1000. | B, S, K, M, G, T, P, E, H, R | N/A |
| Custom units | Combination of a quantity with a base 2 or base 10 unit. For example, to display the results in 4 mebibytes, use 4m. | N/A | N/A |

If you do not specify a value for the units, human-readable format (r) is used by default. The following vgs command displays the size of VGs in human-readable format. The most suitable unit is used and the rounding indicator < shows that the actual size is an approximation and it is less than 931 gibibytes.

# vgs myvg

VG #PV #LV #SN Attr VSize VFree

myvg 1 1 0 wz-n <931.00g <930.00g

The following pvs command displays the output in base 2 gibibyte units for the /dev/vdb physical volume:

# pvs --units g /dev/vdb

PV VG Fmt Attr PSize PFree

/dev/vdb myvg lvm2 a-- 931.00g 930.00g

The following pvs command displays the output in base 10 gigabyte units for the /dev/vdb physical volume:

# pvs --units G /dev/vdb

PV VG Fmt Attr PSize PFree

/dev/vdb myvg lvm2 a-- 999.65G 998.58G

The following pvs command displays the output in 512-byte sectors:

# pvs --units s

PV VG Fmt Attr PSize PFree

/dev/vdb myvg lvm2 a-- 1952440320S 1950343168S

You can specify custom units for an LVM display command. The following example displays the output of the pvs command in units of 4 mebibytes:

# pvs --units 4m

PV VG Fmt Attr PSize PFree

/dev/vdb myvg lvm2 a-- 238335.00U 238079.00U
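The different --units outputs above all describe the same device, so they can be cross-checked with plain arithmetic starting from the sector count. The following is a small sketch under stated assumptions: the function name sectors_to_unit is hypothetical, it covers only the g, G, and 4m cases shown in the examples, and it relies on awk for the floating-point division.

```shell
#!/bin/sh
# Convert a size in 512-byte sectors to another unit, rounded to two decimals.
# Lowercase letters are base 2 units, uppercase letters are base 10 units.
# Only g, G, and 4m are handled in this sketch; the function name is hypothetical.
sectors_to_unit() {
    sectors=$1
    unit=$2
    case "$unit" in
        g)  divisor=1073741824 ;;   # 1024^3 bytes (gibibyte)
        G)  divisor=1000000000 ;;   # 1000^3 bytes (gigabyte)
        4m) divisor=4194304 ;;      # 4 * 1024^2 bytes (custom unit)
        *)  echo "unsupported unit: $unit" >&2; return 1 ;;
    esac
    awk -v s="$sectors" -v d="$divisor" 'BEGIN { printf "%.2f\n", s * 512 / d }'
}

# PSize of /dev/vdb in sectors, taken from the pvs --units s example.
sectors_to_unit 1952440320 g
sectors_to_unit 1952440320 G
sectors_to_unit 1952440320 4m
```

The three calls print 931.00, 999.65, and 238335.00, which match the PSize column in the corresponding examples. The exact base 2 value is slightly below 931 gibibytes, which is why the human-readable output shows the < rounding indicator.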

67.6.3. Customizing the LVM configuration file

You can customize your LVM configuration according to your specific storage and system requirements by editing the lvm.conf file. For example, you can edit the lvm.conf file to modify filter settings, configure volume group auto activation, manage a thin pool, or automatically extend a snapshot.

Procedure

- Open the lvm.conf file in an editor of your choice.

- Customize the lvm.conf file by uncommenting and modifying the setting for which you want to modify the default display values.

  - To customize what fields you see in the lvs output, uncomment the lvs_cols parameter and modify it:

    lvs_cols="lv_name,vg_name,lv_attr"

  - To hide empty fields for the pvs, vgs, and lvs commands, uncomment the compact_output=1 setting:

    compact_output = 1

  - To set gigabytes as the default unit for the pvs, vgs, and lvs commands, replace the units = "r" setting with units = "G":

    units = "G"

- Ensure that the corresponding section of the lvm.conf file is uncommented. For example, to modify the lvs_cols parameter, the report section must be uncommented:

    report { ... }

Verification

- View the changed values after modifying the lvm.conf file:

# lvmconfig --typeconfig diff
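Before relying on lvmconfig, a quick text check can confirm that a setting is no longer commented out. The following is a rough sketch only: the helper name setting_is_active is hypothetical, the check is a plain pattern match that does not understand lvm.conf sections, and the demonstration runs against a temporary sample file rather than the real configuration.

```shell
#!/bin/sh
# Succeed if SETTING is assigned on a line of FILE that is not commented out.
# Usage: setting_is_active SETTING FILE
# This is a plain text match; it does not parse lvm.conf sections.
setting_is_active() {
    grep -Eq "^[[:space:]]*$1[[:space:]]*=" "$2"
}

# Demonstration on a temporary sample file, not on the real lvm.conf.
sample=$(mktemp)
cat > "$sample" <<'EOF'
report {
    # compact_output = 0
    lvs_cols="lv_name,vg_name,lv_attr"
}
EOF

setting_is_active lvs_cols "$sample" && echo "lvs_cols is active"
setting_is_active compact_output "$sample" || echo "compact_output is commented out"
rm -f "$sample"
```

On a real system, the second argument would be the path to the lvm.conf file that you edited.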

67.6.4. Defining LVM selection criteria

Selection criteria are a set of statements in the form of <field> <operator> <value>, which use comparison operators to define values for specific fields. Objects that match the selection criteria are then processed or displayed. Objects can be physical volumes (PVs), volume groups (VGs), or logical volumes (LVs). Statements are combined by logical and grouping operators.

To define selection criteria, use the -S or --select option followed by one or more statements.

The -S option works by describing the objects to process, rather than naming each object. This is helpful when processing many objects and it would be difficult to find and name each object separately or when searching objects that have a complex set of characteristics. The -S option can also be used as a shortcut to avoid typing many names.

To see full sets of fields and possible operators, use the lvs -S help command. Replace lvs with any reporting or processing command to see the details of that command:

- Reporting commands include pvs, vgs, lvs, pvdisplay, vgdisplay, lvdisplay, and dmsetup info -c.

- Processing commands include pvchange, vgchange, lvchange, vgimport, vgexport, vgremove, and lvremove.

Examples of selection criteria using the pvs commands

The following example of the pvs command displays only physical volumes with a name that contains the string nvme:

# pvs -S name=~nvme

PV Fmt Attr PSize PFree

/dev/nvme2n1 lvm2 --- 1.00g 1.00g

The following example of the pvs command displays only physical devices in the myvg volume group:

# pvs -S vg_name=myvg

PV VG Fmt Attr PSize PFree

/dev/vdb1 myvg lvm2 a-- 1020.00m 396.00m

/dev/vdb2 myvg lvm2 a-- 1020.00m 896.00m

Examples of selection criteria using the lvs commands

The following example of the lvs command displays only logical volumes with a size greater than 100m but less than 200m:

# lvs -S 'size > 100m && size < 200m'

LV VG Attr LSize Cpy%Sync

rr myvg rwi-a-r--- 120.00m 100.00

The following example of the lvs command displays only logical volumes with a name that contains lvol followed by either 0 or 2:

# lvs -S name=~lvol[02]

LV VG Attr LSize

lvol0 myvg -wi-a----- 100.00m

lvol2 myvg -wi------- 100.00m

The following example of the lvs command displays only logical volumes with a raid1 segment type:

# lvs -S segtype=raid1

LV VG Attr LSize Cpy%Sync

rr myvg rwi-a-r--- 120.00m 100.00
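The =~ operator takes a regular expression, so a bracket expression such as [02] matches one character from the listed set rather than a numeric range. The following sketch illustrates this on a list of sample names. It uses grep -E as a stand-in for the LVM matcher, which is an assumption of this example, and the helper name match_names is hypothetical.

```shell
#!/bin/sh
# Filter names on stdin with an unanchored extended regular expression.
# grep -E stands in for the LVM selection matcher (an assumption of this sketch).
match_names() {
    grep -E "$1"
}

# lvol1 is filtered out because [02] lists only the characters 0 and 2.
printf '%s\n' lvol0 lvol1 lvol2 mylv rr | match_names 'lvol[02]'
```

The sketch prints lvol0 and lvol2. When you pass such a pattern to an LVM command from a shell, quoting the whole selection string, for example 'name=~lvol[02]', keeps the shell from treating the brackets as a file name pattern.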

Advanced examples

You can combine selection criteria with other options.

The following example of the lvchange command adds a specific tag mytag to only active logical volumes:

# lvchange --addtag mytag -S active=1

Logical volume myvg/mylv changed.

Logical volume myvg/lvol0 changed.

Logical volume myvg/lvol1 changed.

Logical volume myvg/rr changed.

You can also use the lvs command to display all logical volumes whose name does not match _pmspare and change the default headers to custom ones.

The following example of the lvchange command clears the activation skip flag on the logical volume with role=thinsnapshot and origin=thin1, so that normal activation commands no longer skip it:

# lvchange --setactivationskip n -S 'role=thinsnapshot && origin=thin1'

Logical volume myvg/thin1s changed.

The following example of the lvs command displays only logical volumes that match all three conditions:

- Name contains _tmeta.

- Role is metadata.

- Size is less than or equal to 4m.

# lvs -a -S 'name=~_tmeta && role=metadata && size <= 4m'

LV VG Attr LSize

[tp_tmeta] myvg ewi-ao---- 4.00m
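Because a processing command acts on every object that a selection matches, it can be useful to preview the matches with a reporting command first. The following is a minimal sketch of that habit, not a procedure from this guide: the function name preview_selection and the LVS override variable are hypothetical, and the usage lines assume the --noheadings option to drop the header row.

```shell
#!/bin/sh
# Preview which LVs a selection string matches before passing the same
# string to a processing command such as lvchange.
# LVS names the reporting command; override it (for example with a stub)
# to try the function on a machine without LVM.
LVS=${LVS:-lvs}

preview_selection() {
    "$LVS" --noheadings -o vg_name,lv_name -S "$1"
}

# Intended use on a system with LVM (needs root; not executed here):
#   preview_selection 'role=thinsnapshot && origin=thin1'
#   lvchange --setactivationskip n -S 'role=thinsnapshot && origin=thin1'
```

Reusing the identical quoted string for both commands avoids a mismatch between what was previewed and what is changed.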

Additional resources

- lvmreport(7) man page on your system

67.7. Configuring RAID logical volumes

You can create and manage Redundant Array of Independent Disks (RAID) volumes by using logical volume manager (LVM). LVM supports RAID levels 0, 1, 4, 5, 6, and 10. An LVM RAID volume has the following characteristics:

- LVM creates and manages RAID logical volumes that leverage the Multiple Devices (MD) kernel drivers.

- You can temporarily split RAID1 images from the array and merge them back into the array later.

- LVM RAID volumes support snapshots.

- RAID logical volumes are not cluster-aware. Although you can create and activate RAID logical volumes exclusively on one machine, you cannot activate them simultaneously on more than one machine.

- When you create a RAID logical volume (LV), LVM creates a metadata subvolume that is one extent in size for every data or parity subvolume in the array. For example, creating a 2-way RAID1 array results in two metadata subvolumes (lv_rmeta_0 and lv_rmeta_1) and two data subvolumes (lv_rimage_0 and lv_rimage_1).

- Adding integrity to a RAID LV reduces or prevents soft corruption.
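The subvolume naming in the 2-way RAID1 example follows a simple pattern: one _rmeta_ and one _rimage_ subvolume per image, numbered from 0. The following sketch generates those names for a given image count. The function name raid1_subvolumes is hypothetical, and the sketch only prints names; it does not query or create anything in LVM.

```shell
#!/bin/sh
# List the metadata and data subvolume names for an N-image RAID1 LV.
# Usage: raid1_subvolumes LV_NAME IMAGE_COUNT
# The function name is hypothetical; it prints names only.
raid1_subvolumes() {
    lv=$1
    count=$2
    i=0
    while [ "$i" -lt "$count" ]; do
        echo "${lv}_rmeta_${i} ${lv}_rimage_${i}"
        i=$((i + 1))
    done
}

# The 2-way RAID1 example from the text, for an LV named "lv".
raid1_subvolumes lv 2
```

For the LV named lv, this prints the pairs lv_rmeta_0 lv_rimage_0 and lv_rmeta_1 lv_rimage_1, matching the names in the example.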

67.7.1. RAID levels and linear support

RAID supports the following configurations, including levels 0, 1, 4, 5, 6, 10, and linear:

- Level 0

RAID level 0, often called striping, is a performance-oriented striped data mapping technique. The data written to the array is broken down into stripes and written across the member disks of the array, which allows high I/O performance at low inherent cost but provides no redundancy.

RAID level 0 implementations only stripe the data across the member devices up to the size of the smallest device in the array. This means that if you have multiple devices with slightly different sizes, each device gets treated as though it was the same size as the smallest drive. Therefore, the common storage capacity of a level 0 array is the total capacity of all disks. If the member disks have a different size, then the RAID0 uses all the space of those disks using the available zones.
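The striping described for level 0 can be pictured as dealing chunks of data to the member disks in turn. The following sketch shows that idea with a simplified round-robin model. It is an illustration only: the function name stripe_layout is hypothetical, and the model ignores stripe size and members of different sizes.

```shell
#!/bin/sh
# Show which member disk receives each chunk when data is striped round-robin.
# Usage: stripe_layout CHUNK_COUNT DISK_COUNT
# Simplified model: it ignores stripe size and differently sized members.
stripe_layout() {
    chunks=$1
    disks=$2
    i=0
    while [ "$i" -lt "$chunks" ]; do
        echo "chunk $i -> disk $((i % disks))"
        i=$((i + 1))
    done
}

# Six chunks spread over a three-disk array.
stripe_layout 6 3
```

Consecutive chunks land on different disks, which is why sequential I/O can be served by all members in parallel, and also why losing one disk affects the whole array.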

- Level 1

RAID level 1, or mirroring, provides redundancy by writing identical data to each member disk of the array, leaving a mirrored copy on each disk. Mirroring remains popular due to its simplicity and high level of data availability. Level 1 operates with two or more disks, and provides very good data reliability and improves performance for read-intensive applications but at relatively high costs.

RAID level 1 is costly because you write the same information to all of the disks in the array, which provides data reliability, but in a much less space-efficient manner than parity-based RAID levels such as level 5. However, this space inefficiency comes with a performance benefit: parity-based RAID levels consume considerably more CPU power to generate the parity, while RAID level 1 simply writes the same data more than once to the multiple RAID members with very little CPU overhead. As such, RAID level 1 can outperform the parity-based RAID levels on machines where software RAID is employed and CPU resources on the machine are consistently taxed with operations other than RAID activities.

The storage capacity of the level 1 array is equal to the capacity of the smallest mirrored hard disk in a hardware RAID or the smallest mirrored partition in a software RAID. Level 1 redundancy is the highest possible among all RAID types, with the array being able to operate with only a single disk present.
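The capacity rule for level 1 reduces to taking the minimum of the member sizes. The following sketch expresses that rule. The function name raid1_capacity is hypothetical, and the sketch assumes whole-number sizes that are all given in the same unit.

```shell
#!/bin/sh
# Usable capacity of a mirror: the size of its smallest member.
# Usage: raid1_capacity SIZE [SIZE...]   (whole numbers, all in the same unit)
# The function name is hypothetical.
raid1_capacity() {
    min=$1
    for size in "$@"; do
        if [ "$size" -lt "$min" ]; then
            min=$size
        fi
    done
    echo "$min"
}

# Three mirrored members of 500, 480, and 512 units.
raid1_capacity 500 480 512
```

The example prints 480: adding larger members to a mirror increases redundancy, not usable space.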

- Level 4

Level 4 uses parity concentrated on a single disk drive to protect data. Parity information is calculated based on the content of the rest of the member disks in the array. This information can then be used to reconstruct data when one disk in the array fails. The reconstructed data can then be used to satisfy I/O requests to the failed disk before it is replaced and to repopulate the failed disk after it has been replaced.

Because the dedicated parity disk represents an inherent bottleneck on all write transactions to the RAID array, level 4 is seldom used without accompanying technologies such as write-back caching. It is also used in specific circumstances where the system administrator intentionally designs the software RAID device with this bottleneck in mind, such as an array that has little to no write transactions once the array is populated with data. RAID level 4 is so rarely used that it is not available as an option in Anaconda. However, you can create it manually if needed.
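The parity-based reconstruction described for level 4 can be illustrated with exclusive OR (XOR), the operation commonly used for single-parity RAID. The text above does not name the operation, so XOR is an assumption of this sketch, as are the function name xor_all and the use of small integers in place of disk blocks.

```shell
#!/bin/sh
# XOR all arguments together. With the data blocks as input this yields the
# parity block; with the parity and the surviving blocks it yields the
# missing block. Small integers stand in for disk blocks in this sketch.
xor_all() {
    result=0
    for value in "$@"; do
        result=$((result ^ value))
    done
    echo "$result"
}

parity=$(xor_all 90 60 240)   # parity of three data blocks
echo "parity: $parity"

# The disk holding 60 fails; rebuild it from the parity and the survivors.
xor_all "$parity" 90 240
```

The parity of 90, 60, and 240 is 150, and combining 150 with the two surviving values returns 60, the lost block. Every write must also update the parity, which is the source of the bottleneck on the dedicated parity disk.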