Chapter 16. Scanning the system for security compliance and vulnerabilities

16.1. Configuration compliance tools in RHEL

Red Hat Enterprise Linux provides tools that enable you to perform a fully automated compliance audit. These tools are based on the Security Content Automation Protocol (SCAP) standard and are designed for automated tailoring of compliance policies.

- SCAP Workbench - The scap-workbench graphical utility is designed to perform configuration and vulnerability scans on a single local or remote system. You can also use it to generate security reports based on these scans and evaluations.

- OpenSCAP - The OpenSCAP library, with the accompanying oscap command-line utility, is designed to perform configuration and vulnerability scans on a local system, to validate configuration compliance content, and to generate reports and guides based on these scans and evaluations.

  You can experience memory-consumption problems while using OpenSCAP, which can stop the program prematurely and prevent it from generating any result files. See the OpenSCAP memory-consumption problems Knowledgebase article for details.

- SCAP Security Guide (SSG) - The scap-security-guide package provides the latest collection of security policies for Linux systems. The guidance consists of a catalog of practical hardening advice, linked to government requirements where applicable. The project bridges the gap between generalized policy requirements and specific implementation guidelines.

- Script Check Engine (SCE) - SCE is an extension to the SCAP protocol that enables administrators to write their security content using a scripting language, such as Bash, Python, or Ruby. The SCE extension is provided in the openscap-engine-sce package. The SCE itself is not part of the SCAP standard.

To perform automated compliance audits on multiple systems remotely, you can use the OpenSCAP solution for Red Hat Satellite.

Additional resources

- oscap(8), scap-workbench(8), and scap-security-guide(8) man pages

- Red Hat Security Demos: Creating Customized Security Policy Content to Automate Security Compliance

- Red Hat Security Demos: Defend Yourself with RHEL Security Technologies

- Security Compliance Management in the Administering Red Hat Satellite Guide

16.2. Red Hat Security Advisories OVAL feed

Red Hat Enterprise Linux security auditing capabilities are based on the Security Content Automation Protocol (SCAP) standard. SCAP is a multi-purpose framework of specifications that supports automated configuration, vulnerability and patch checking, technical control compliance activities, and security measurement.

SCAP specifications create an ecosystem where the format of security content is well-known and standardized although the implementation of the scanner or policy editor is not mandated. This enables organizations to build their security policy (SCAP content) once, no matter how many security vendors they employ.

The Open Vulnerability Assessment Language (OVAL) is the essential and oldest component of SCAP. Unlike other tools and custom scripts, OVAL describes a required state of resources in a declarative manner. OVAL code is never executed directly; instead, an OVAL interpreter tool called a scanner evaluates it. The declarative nature of OVAL ensures that the state of the assessed system is not accidentally modified.

Like all other SCAP components, OVAL is based on XML. The SCAP standard defines several document formats. Each of them includes a different kind of information and serves a different purpose.

Red Hat Product Security helps customers evaluate and manage risk by tracking and investigating all security issues affecting Red Hat customers. It provides timely and concise patches and security advisories on the Red Hat Customer Portal. Red Hat creates and supports OVAL patch definitions, providing machine-readable versions of our security advisories.

Because of differences between platforms, versions, and other factors, Red Hat Product Security qualitative severity ratings of vulnerabilities do not directly align with the Common Vulnerability Scoring System (CVSS) baseline ratings provided by third parties. Therefore, we recommend that you use the RHSA OVAL definitions instead of those provided by third parties.

The RHSA OVAL definitions are available individually and as a complete package, and are updated within an hour of a new security advisory being made available on the Red Hat Customer Portal.

Each OVAL patch definition maps one-to-one to a Red Hat Security Advisory (RHSA). Because an RHSA can contain fixes for multiple vulnerabilities, each vulnerability is listed separately by its Common Vulnerabilities and Exposures (CVE) name and has a link to its entry in our public bug database.

The RHSA OVAL definitions are designed to check for vulnerable versions of RPM packages installed on a system. It is possible to extend these definitions to include further checks, for example, to find out if the packages are being used in a vulnerable configuration. These definitions are designed to cover software and updates shipped by Red Hat. Additional definitions are required to detect the patch status of third-party software.

The Red Hat Insights for Red Hat Enterprise Linux compliance service helps IT security and compliance administrators to assess, monitor, and report on the security policy compliance of Red Hat Enterprise Linux systems. You can also create and manage your SCAP security policies entirely within the compliance service UI.

16.3. Vulnerability scanning

16.3.2. Scanning the system for vulnerabilities

The oscap command-line utility enables you to scan local systems, validate configuration compliance content, and generate reports and guides based on these scans and evaluations. This utility serves as a front end to the OpenSCAP library and groups its functionality into modules (sub-commands) based on the type of SCAP content it processes.

Prerequisites

- The openscap-scanner and bzip2 packages are installed.

Procedure

Download the latest RHSA OVAL definitions for your system:

# wget -O - https://www.redhat.com/security/data/oval/v2/RHEL8/rhel-8.oval.xml.bz2 | bzip2 --decompress > rhel-8.oval.xml

Scan the system for vulnerabilities and save results to the vulnerability.html file:

# oscap oval eval --report vulnerability.html rhel-8.oval.xml
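The download and scan steps above can be combined in a small script. The following is a minimal sketch, not an official Red Hat tool: the function names oval_feed_url and scan_for_vulnerabilities are hypothetical, and the idea that feeds for other major versions follow the same URL pattern as the RHEL 8 URL is an assumption to verify before use. Unlike the single pipeline, the sketch downloads to a file first, so a failed download stops the run instead of producing an empty definitions file.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of OpenSCAP.

# Print the RHSA OVAL feed URL for a RHEL major version.
# Assumption: other major versions follow the same pattern as the RHEL 8 URL.
oval_feed_url() {
    printf 'https://www.redhat.com/security/data/oval/v2/RHEL%s/rhel-%s.oval.xml.bz2\n' "$1" "$1"
}

# Download the feed, decompress it, and scan the local system.
# Run as root. The body runs in a subshell, so "exit" only leaves the function.
scan_for_vulnerabilities() (
    major="$1"
    oval="rhel-${major}.oval.xml"
    wget -O "${oval}.bz2" "$(oval_feed_url "$major")" || exit 1
    # --force overwrites a definitions file left over from an earlier run.
    bzip2 --decompress --force "${oval}.bz2" || exit 1
    oscap oval eval --report vulnerability.html "$oval"
)

# Example (as root): scan_for_vulnerabilities 8
```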

Verification

Check the results in a browser of your choice, for example:

$ firefox vulnerability.html &

Additional resources

- oscap(8) man page

- Red Hat OVAL definitions

- OpenSCAP memory consumption problems

16.3.3. Scanning remote systems for vulnerabilities

You can also check remote systems for vulnerabilities with the OpenSCAP scanner by using the oscap-ssh tool over the SSH protocol.

Prerequisites

- The openscap-utils and bzip2 packages are installed on the system you use for scanning.

- The openscap-scanner package is installed on the remote systems.

- The SSH server is running on the remote systems.

Procedure

Download the latest RHSA OVAL definitions for your system:

# wget -O - https://www.redhat.com/security/data/oval/v2/RHEL8/rhel-8.oval.xml.bz2 | bzip2 --decompress > rhel-8.oval.xml

Scan a remote system with the machine1 host name, SSH running on port 22, and the joesec user name for vulnerabilities and save results to the remote-vulnerability.html file:

# oscap-ssh joesec@machine1 22 oval eval --report remote-vulnerability.html rhel-8.oval.xml
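To scan more than one remote system, the oscap-ssh command above can be repeated in a loop. The following is a minimal sketch under stated assumptions: scan_remote_hosts and the OSCAP_SSH variable are hypothetical names introduced for illustration, every host is assumed to accept the same user name and port, and each report is named after its host so that results do not overwrite one another.

```shell
#!/bin/sh
# Sketch only: scan_remote_hosts is a hypothetical helper, not part of OpenSCAP.
# Set OSCAP_SSH="echo oscap-ssh" to print the commands instead of running them.
OSCAP_SSH="${OSCAP_SSH:-oscap-ssh}"

# Usage: scan_remote_hosts USER PORT OVAL_FILE HOST...
scan_remote_hosts() (
    user="$1"; port="$2"; oval="$3"
    shift 3
    for host in "$@"; do
        # $OSCAP_SSH is intentionally unquoted so "echo oscap-ssh" splits into two words.
        $OSCAP_SSH "${user}@${host}" "$port" oval eval \
            --report "${host}-vulnerability.html" "$oval" \
            || echo "scan of ${host} failed" >&2
    done
)

# Example: scan_remote_hosts joesec 22 rhel-8.oval.xml machine1 machine2
```

Running the loop once with OSCAP_SSH set to "echo oscap-ssh" is a cheap way to review the exact commands before any connection is made.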

Additional resources

16.4. Configuration compliance scanning

16.4.1. Configuration compliance in RHEL

You can use configuration compliance scanning to conform to a baseline defined by a specific organization. For example, if you work with the US government, you might have to align your systems with the Operating System Protection Profile (OSPP), and if you are a payment processor, you might have to align your systems with the Payment Card Industry Data Security Standard (PCI-DSS). You can also perform configuration compliance scanning to harden your system security.

Red Hat recommends you follow the Security Content Automation Protocol (SCAP) content provided in the SCAP Security Guide package because it is in line with Red Hat best practices for affected components.

The SCAP Security Guide package provides content which conforms to the SCAP 1.2 and SCAP 1.3 standards. The openscap scanner utility is compatible with both SCAP 1.2 and SCAP 1.3 content provided in the SCAP Security Guide package.

Performing a configuration compliance scan does not guarantee that the system is compliant.

The SCAP Security Guide suite provides profiles for several platforms in the form of data stream documents. A data stream is a file that contains definitions, benchmarks, profiles, and individual rules. Each rule specifies the applicability and requirements for compliance. RHEL provides several profiles for compliance with security policies. In addition to the industry standard, Red Hat data streams also contain information for remediation of failed rules.

Structure of compliance scanning resources

Data stream
├── xccdf
|   └── benchmark
|       ├── profile
|       |   ├── rule reference
|       |   └── variable
|       └── rule
|           ├── human readable data
|           ├── oval reference
|           ├── ocil reference
|           ├── cpe reference
|           └── remediation
├── oval
├── ocil
└── cpe

A profile is a set of rules based on a security policy, such as OSPP, PCI-DSS, and Health Insurance Portability and Accountability Act (HIPAA). This enables you to audit the system in an automated way for compliance with security standards.

You can modify (tailor) a profile to customize certain rules, for example, password length. For more information on profile tailoring, see Customizing a security profile with SCAP Workbench.

16.4.2. Possible results of an OpenSCAP scan

Depending on various properties of your system and the data stream and profile applied to an OpenSCAP scan, each rule may produce a specific result. This is a list of possible results with brief explanations of what they mean.

| Result | Explanation |
|---|---|
| Pass | The scan did not find any conflicts with this rule. |
| Fail | The scan found a conflict with this rule. |
| Not checked | OpenSCAP does not perform an automatic evaluation of this rule. Check whether your system conforms to this rule manually. |
| Not applicable | This rule does not apply to the current configuration. |
| Not selected | This rule is not part of the profile. OpenSCAP does not evaluate this rule and does not display these rules in the results. |
| Error | The scan encountered an error. For additional information, you can enter the |
| Unknown | The scan encountered an unexpected situation. For additional information, you can enter the |
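To see how many rules ended in each of these states, you can tally the result values in a results file saved with the --results option of oscap xccdf eval. The following is a minimal sketch, assuming that the file records each outcome as a lowercase result element without a namespace prefix (for example pass, fail, or notapplicable); summarize_results is a hypothetical helper name, and a real XML parser is the safer choice for anything beyond a quick overview.

```shell
#!/bin/sh
# Sketch only: summarize_results is a hypothetical helper, not part of OpenSCAP.
# Assumption: outcomes appear as <result>value</result> with lowercase values.

# Usage: summarize_results RESULTS_FILE
# Prints one "value=count" line per distinct result, sorted by value.
summarize_results() {
    grep -o '<result>[a-z]*</result>' "$1" \
        | sed -e 's/<result>//' -e 's/<\/result>//' \
        | sort | uniq -c | awk '{ print $2 "=" $1 }'
}

# Example: summarize_results hipaa-results.xml
```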

16.4.3. Viewing profiles for configuration compliance

Before you decide to use profiles for scanning or remediation, you can list them and check their detailed descriptions using the oscap info subcommand.

Prerequisites

- The openscap-scanner and scap-security-guide packages are installed.

Procedure

List all available files with security compliance profiles provided by the SCAP Security Guide project:

$ ls /usr/share/xml/scap/ssg/content/
ssg-firefox-cpe-dictionary.xml  ssg-rhel6-ocil.xml
ssg-firefox-cpe-oval.xml        ssg-rhel6-oval.xml
...
ssg-rhel6-ds-1.2.xml            ssg-rhel8-oval.xml
ssg-rhel8-ds.xml                ssg-rhel8-xccdf.xml
...

Display detailed information about a selected data stream using the oscap info subcommand. XML files containing data streams are indicated by the -ds string in their names. In the Profiles section, you can find a list of available profiles and their IDs:

$ oscap info /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml
Profiles:
...
  Title: Health Insurance Portability and Accountability Act (HIPAA)
    Id: xccdf_org.ssgproject.content_profile_hipaa
  Title: PCI-DSS v3.2.1 Control Baseline for Red Hat Enterprise Linux 8
    Id: xccdf_org.ssgproject.content_profile_pci-dss
  Title: OSPP - Protection Profile for General Purpose Operating Systems
    Id: xccdf_org.ssgproject.content_profile_ospp
...

Select a profile from the data-stream file and display additional details about the selected profile. To do so, use oscap info with the --profile option followed by the last section of the ID displayed in the output of the previous command. For example, the ID of the HIPAA profile is xccdf_org.ssgproject.content_profile_hipaa, and the value for the --profile option is hipaa:

$ oscap info --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml
...
Profile
  Title: Health Insurance Portability and Accountability Act (HIPAA)
  Description: The HIPAA Security Rule establishes U.S. national standards to protect individuals’ electronic personal health information that is created, received, used, or maintained by a covered entity.
...
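The mapping from a full profile ID to the short value accepted by the --profile option can be scripted. The following is a minimal sketch: list_profile_ids and profile_short_name are hypothetical helper names, and the first helper assumes that oscap info prints each profile ID on its own line after an Id: label, as in the output shown above.

```shell
#!/bin/sh
# Sketch only: both helpers are hypothetical, not part of OpenSCAP.

# Read "oscap info" output on standard input and print the profile IDs.
# Assumption: each ID is printed as "Id: xccdf_..._profile_...".
list_profile_ids() {
    awk '$1 == "Id:" && $2 ~ /_profile_/ { print $2 }'
}

# Strip the SCAP Security Guide prefix to get the value for --profile.
profile_short_name() {
    printf '%s\n' "${1#xccdf_org.ssgproject.content_profile_}"
}

# Example:
#   oscap info /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml | list_profile_ids
#   profile_short_name xccdf_org.ssgproject.content_profile_hipaa
```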

Additional resources

- scap-security-guide(8) man page

- OpenSCAP memory consumption problems

16.4.4. Assessing configuration compliance with a specific baseline

To determine whether your system conforms to a specific baseline, follow these steps.

Prerequisites

- The openscap-scanner and scap-security-guide packages are installed.

- You know the ID of the profile within the baseline with which the system should comply. To find the ID, see Viewing profiles for configuration compliance.

Procedure

Evaluate the compliance of the system with the selected profile and save the scan results in the report.html HTML file, for example:

$ oscap xccdf eval --report report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Optional: Scan a remote system with the machine1 host name, SSH running on port 22, and the joesec user name for compliance and save results to the remote_report.html file:

$ oscap-ssh joesec@machine1 22 xccdf eval --report remote_report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml
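When you run the evaluation from a script, the exit status of oscap tells you the overall outcome without opening the report. According to the oscap(8) man page, an evaluation returns 0 when all rules pass, 2 when at least one rule has a fail or unknown result, and 1 when an error occurred. The following is a minimal sketch that turns the status into a message; describe_oscap_status is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: describe_oscap_status is a hypothetical helper, not part of OpenSCAP.

# Usage: describe_oscap_status EXIT_STATUS
describe_oscap_status() {
    case "$1" in
        0) echo "compliant: every evaluated rule passed" ;;
        2) echo "not compliant: at least one rule failed or returned unknown" ;;
        *) echo "error: the scan did not finish (exit status $1)" ;;
    esac
}

# Example:
#   oscap xccdf eval --report report.html --profile hipaa \
#       /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml
#   describe_oscap_status $?
```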

Additional resources

- scap-security-guide(8) man page

- SCAP Security Guide documentation in the /usr/share/doc/scap-security-guide/ directory

- /usr/share/doc/scap-security-guide/guides/ssg-rhel8-guide-index.html - [Guide to the Secure Configuration of Red Hat Enterprise Linux 8] installed with the scap-security-guide-doc package

- OpenSCAP memory consumption problems

16.5. Remediating the system to align with a specific baseline

Use this procedure to remediate the RHEL system to align with a specific baseline. This example uses the Health Insurance Portability and Accountability Act (HIPAA) profile.

If not used carefully, running the system evaluation with the Remediate option enabled might render the system non-functional. Red Hat does not provide any automated method to revert changes made by security-hardening remediations. Remediations are supported on RHEL systems in the default configuration. If your system has been altered after the installation, running remediation might not make it compliant with the required security profile.

Prerequisites

- The scap-security-guide package is installed on your RHEL system.

Procedure

Use the oscap command with the --remediate option:

# oscap xccdf eval --profile hipaa --remediate /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Restart your system.

Verification

Evaluate compliance of the system with the HIPAA profile, and save scan results in the hipaa_report.html file:

$ oscap xccdf eval --report hipaa_report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Additional resources

- scap-security-guide(8) and oscap(8) man pages

16.6. Remediating the system to align with a specific baseline using an SSG Ansible playbook

Use this procedure to remediate your system to align with a specific baseline by using an Ansible playbook file from the SCAP Security Guide project. This example uses the Health Insurance Portability and Accountability Act (HIPAA) profile.

If not used carefully, running the system evaluation with the Remediate option enabled might render the system non-functional. Red Hat does not provide any automated method to revert changes made by security-hardening remediations. Remediations are supported on RHEL systems in the default configuration. If your system has been altered after the installation, running remediation might not make it compliant with the required security profile.

Prerequisites

- The scap-security-guide package is installed.

- The ansible-core package is installed. See the Ansible Installation Guide for more information.

In RHEL 8.6 and later versions, Ansible Engine is replaced by the ansible-core package, which contains only built-in modules. Note that many Ansible remediations use modules from the community and Portable Operating System Interface (POSIX) collections, which are not included in the built-in modules. In this case, you can use Bash remediations as a substitute for Ansible remediations. The Red Hat Connector in RHEL 8 includes the necessary Ansible modules to enable the remediation playbooks to function with Ansible Core.

Procedure

Remediate your system to align with HIPAA using Ansible:

# ansible-playbook -i localhost, -c local /usr/share/scap-security-guide/ansible/rhel8-playbook-hipaa.yml

Restart the system.

Verification

Evaluate compliance of the system with the HIPAA profile, and save scan results in the hipaa_report.html file:

# oscap xccdf eval --profile hipaa --report hipaa_report.html /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Additional resources

- scap-security-guide(8) and oscap(8) man pages

- Ansible Documentation

16.7. Creating a remediation Ansible playbook to align the system with a specific baseline

You can create an Ansible playbook containing only the remediations that are required to align your system with a specific baseline. This example uses the Health Insurance Portability and Accountability Act (HIPAA) profile. With this procedure, you create a smaller playbook that does not cover already satisfied requirements. By following these steps, you do not modify your system in any way; you only prepare a file for later application.

In RHEL 8.6, Ansible Engine is replaced by the ansible-core package, which contains only built-in modules. Note that many Ansible remediations use modules from the community and Portable Operating System Interface (POSIX) collections, which are not included in the built-in modules. In this case, you can use Bash remediations as a substitute for Ansible remediations. The Red Hat Connector in RHEL 8.6 includes the necessary Ansible modules to enable the remediation playbooks to function with Ansible Core.

Prerequisites

- The scap-security-guide package is installed.

Procedure

Scan the system and save the results:

# oscap xccdf eval --profile hipaa --results hipaa-results.xml /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Generate an Ansible playbook based on the file generated in the previous step:

# oscap xccdf generate fix --fix-type ansible --profile hipaa --output hipaa-remediations.yml hipaa-results.xml

The hipaa-remediations.yml file contains Ansible remediations for rules that failed during the scan performed in step 1. After reviewing this generated file, you can apply it with the ansible-playbook hipaa-remediations.yml command.

Verification

- In a text editor of your choice, review that the hipaa-remediations.yml file contains rules that failed in the scan performed in step 1.

Additional resources

- scap-security-guide(8) and oscap(8) man pages

- Ansible Documentation

16.8. Creating a remediation Bash script for a later application

Use this procedure to create a Bash script containing remediations that align your system with a security profile such as HIPAA. By following these steps, you do not modify your system in any way; you only prepare a file for later application.

Prerequisites

- The scap-security-guide package is installed on your RHEL system.

Procedure

Use the oscap command to scan the system and to save the results to an XML file. In the following example, oscap evaluates the system against the hipaa profile:

# oscap xccdf eval --profile hipaa --results hipaa-results.xml /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Generate a Bash script based on the results file generated in the previous step:

# oscap xccdf generate fix --profile hipaa --fix-type bash --output hipaa-remediations.sh hipaa-results.xml

The hipaa-remediations.sh file contains remediations for rules that failed during the scan performed in step 1. After reviewing this generated file, you can apply it with the ./hipaa-remediations.sh command when you are in the same directory as this file.

Verification

- In a text editor of your choice, review that the hipaa-remediations.sh file contains rules that failed in the scan performed in step 1.
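As a complement to reading the script in an editor, you can list the rules it remediates. The following is a minimal sketch built on an assumption about the generated file rather than on a documented interface: it expects each remediation to start with a comment of the form # BEGIN fix (n / total) for 'rule_id'. If your OpenSCAP version writes a different marker, adjust the pattern. The list_remediated_rules name is hypothetical.

```shell
#!/bin/sh
# Sketch only: list_remediated_rules is a hypothetical helper, not part of OpenSCAP.
# Assumption: each remediation begins with "# BEGIN fix (n / total) for 'rule_id'".

# Usage: list_remediated_rules SCRIPT_FILE
# Prints one rule ID per line, in the order the fixes appear in the script.
list_remediated_rules() {
    sed -n "s/^# BEGIN fix ([^)]*) for '\([^']*\)'.*/\1/p" "$1"
}

# Example: list_remediated_rules hipaa-remediations.sh
```

Comparing this list with the failed rules in the HTML report is a quick consistency check before you run the script.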

Additional resources

- scap-security-guide(8), oscap(8), and bash(1) man pages

16.9. Scanning the system with a customized profile using SCAP Workbench

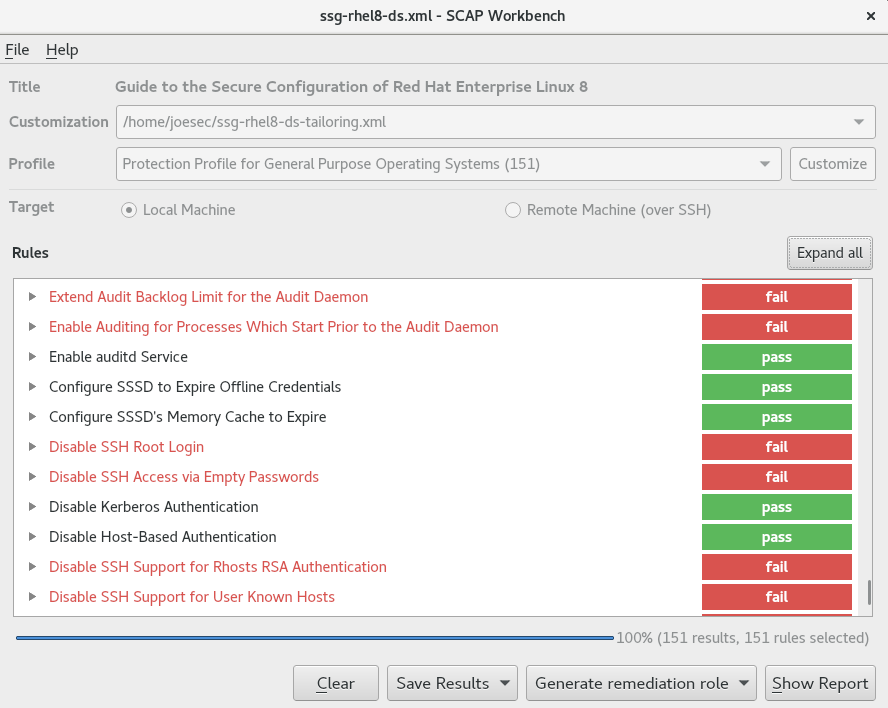

SCAP Workbench, which is contained in the scap-workbench package, is a graphical utility that enables users to perform configuration and vulnerability scans on a single local or a remote system, perform remediation of the system, and generate reports based on scan evaluations. Note that SCAP Workbench has limited functionality compared with the oscap command-line utility. SCAP Workbench processes security content in the form of data-stream files.

16.9.1. Using SCAP Workbench to scan and remediate the system

To evaluate your system against the selected security policy, use the following procedure.

Prerequisites

- The scap-workbench package is installed on your system.

Procedure

To run SCAP Workbench from the GNOME Classic desktop environment, press the Super key to enter the Activities Overview, type scap-workbench, and then press Enter. Alternatively, use:

$ scap-workbench &

Select a security policy using either of the following options:

- Load Content button on the starting window

- Open content from SCAP Security Guide or Open Other Content in the File menu, and search for the respective XCCDF, SCAP RPM, or data stream file.

You can allow automatic correction of the system configuration by selecting the check box. With this option enabled, SCAP Workbench attempts to change the system configuration in accordance with the security rules applied by the policy. This process should fix the related checks that fail during the system scan.

Warning: If not used carefully, running the system evaluation with the Remediate option enabled might render the system non-functional. Red Hat does not provide any automated method to revert changes made by security-hardening remediations. Remediations are supported on RHEL systems in the default configuration. If your system has been altered after the installation, running remediation might not make it compliant with the required security profile.

Scan your system with the selected profile by clicking the button.

To store the scan results in the form of an XCCDF, ARF, or HTML file, click the combo box. Choose the HTML Report option to generate the scan report in human-readable format. The XCCDF and ARF (data stream) formats are suitable for further automatic processing. You can repeatedly choose all three options.

To export results-based remediations to a file, use the pop-up menu.

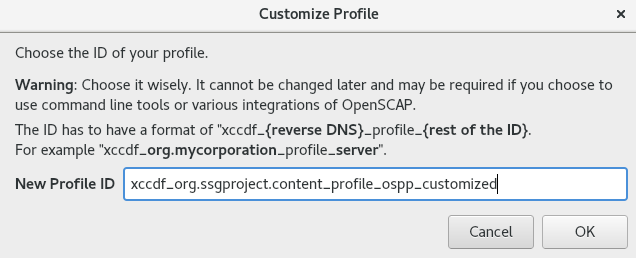

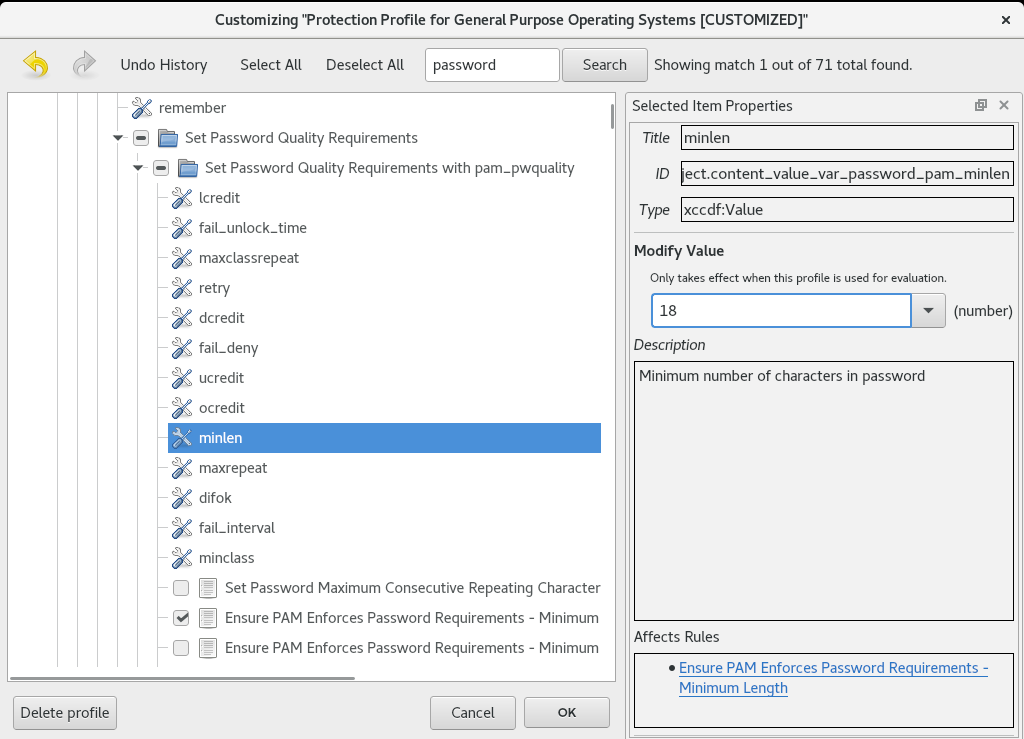

16.9.2. Customizing a security profile with SCAP Workbench

You can customize a security profile by changing parameters in certain rules (for example, minimum password length), removing rules that you cover in a different way, and selecting additional rules, to implement internal policies. You cannot define new rules by customizing a profile.

The following procedure demonstrates the use of SCAP Workbench for customizing (tailoring) a profile. You can also save the tailored profile for use with the oscap command-line utility.

Prerequisites

- The scap-workbench package is installed on your system.

Procedure

Run SCAP Workbench, and select the profile to customize by using either Open content from SCAP Security Guide or Open Other Content in the File menu.

To adjust the selected security profile according to your needs, click the button.

This opens the new Customization window that enables you to modify the currently selected profile without changing the original data stream file. Choose a new profile ID.

Find a rule to modify using either the tree structure with rules organized into logical groups or the field.

Include or exclude rules using check boxes in the tree structure, or modify values in rules where applicable.

Confirm the changes by clicking the button.

To store your changes permanently, use one of the following options:

- Save a customization file separately by using Save Customization Only in the File menu.

- Save all security content at once by using Save All in the File menu.

  If you select the Into a directory option, SCAP Workbench saves both the data stream file and the customization file to the specified location. You can use this as a backup solution.

  By selecting the As RPM option, you can instruct SCAP Workbench to create an RPM package containing the data stream file and the customization file. This is useful for distributing the security content to systems that cannot be scanned remotely, and for delivering the content for further processing.

Because SCAP Workbench does not support results-based remediations for tailored profiles, use the exported remediations with the oscap command-line utility.

16.10. Scanning container and container images for vulnerabilities

Use this procedure to find security vulnerabilities in a container or a container image.

The oscap-podman command is available from RHEL 8.2. For RHEL 8.1 and 8.0, use the workaround described in the Using OpenSCAP for scanning containers in RHEL 8 Knowledgebase article.

Prerequisites

- The openscap-utils and bzip2 packages are installed.

Procedure

Download the latest RHSA OVAL definitions for your system:

# wget -O - https://www.redhat.com/security/data/oval/v2/RHEL8/rhel-8.oval.xml.bz2 | bzip2 --decompress > rhel-8.oval.xml

Get the ID of a container or a container image, for example:

# podman images
REPOSITORY                            TAG      IMAGE ID       CREATED       SIZE
registry.access.redhat.com/ubi8/ubi   latest   096cae65a207   7 weeks ago   239 MB

Scan the container or the container image for vulnerabilities and save results to the vulnerability.html file:

# oscap-podman 096cae65a207 oval eval --report vulnerability.html rhel-8.oval.xml

Note that the oscap-podman command requires root privileges, and the ID of a container is the first argument.

Verification

Check the results in a browser of your choice, for example:

$ firefox vulnerability.html &

Additional resources

- For more information, see the oscap-podman(8) and oscap(8) man pages.

16.11. Assessing security compliance of a container or a container image with a specific baseline

Follow these steps to assess compliance of your container or a container image with a specific security baseline, such as Operating System Protection Profile (OSPP), Payment Card Industry Data Security Standard (PCI-DSS), and Health Insurance Portability and Accountability Act (HIPAA).

The oscap-podman command is available from RHEL 8.2. For RHEL 8.1 and 8.0, use the workaround described in the Using OpenSCAP for scanning containers in RHEL 8 Knowledgebase article.

Prerequisites

- The openscap-utils and scap-security-guide packages are installed.

Procedure

Get the ID of a container or a container image, for example:

# podman images
REPOSITORY                            TAG      IMAGE ID       CREATED       SIZE
registry.access.redhat.com/ubi8/ubi   latest   096cae65a207   7 weeks ago   239 MB

Evaluate the compliance of the container image with the HIPAA profile and save scan results into the report.html HTML file:

# oscap-podman 096cae65a207 xccdf eval --report report.html --profile hipaa /usr/share/xml/scap/ssg/content/ssg-rhel8-ds.xml

Replace 096cae65a207 with the ID of your container image and the hipaa value with ospp or pci-dss if you assess security compliance with the OSPP or PCI-DSS baseline. Note that the oscap-podman command requires root privileges.

Verification

Check the results in a browser of your choice, for example:

$ firefox report.html &

The rules marked as notapplicable are rules that do not apply to containerized systems. These rules apply only to bare-metal and virtualized systems.

Additional resources

- oscap-podman(8) and scap-security-guide(8) man pages

- /usr/share/doc/scap-security-guide/ directory

16.12. Checking integrity with AIDE

Advanced Intrusion Detection Environment (AIDE) is a utility that creates a database of files on the system, and then uses that database to ensure file integrity and detect system intrusions.

16.12.1. Installing AIDE

The following steps are necessary to install AIDE and to initiate its database.

Prerequisites

- The AppStream repository is enabled.

Procedure

To install the aide package:

# yum install aide

To generate an initial database:

# aide --init

Note: In the default configuration, the aide --init command checks just a set of directories and files defined in the /etc/aide.conf file. To include additional directories or files in the AIDE database, and to change their watched parameters, edit /etc/aide.conf accordingly.

To start using the database, remove the .new substring from the initial database file name:

# mv /var/lib/aide/aide.db.new.gz /var/lib/aide/aide.db.gz

To change the location of the AIDE database, edit the /etc/aide.conf file and modify the DBDIR value. For additional security, store the database, configuration, and the /usr/sbin/aide binary file in a secure location such as read-only media.

16.12.2. Performing integrity checks with AIDE

Prerequisites

- AIDE is properly installed and its database is initialized. See Installing AIDE.

Procedure

To initiate a manual check:

# aide --check
Start timestamp: 2018-07-11 12:41:20 +0200 (AIDE 0.16)
AIDE found differences between database and filesystem!!
...
[trimmed for clarity]

At a minimum, configure the system to run AIDE weekly. Optimally, run AIDE daily. For example, to schedule a daily execution of AIDE at 04:05 a.m. using the cron command, add the following line to the /etc/crontab file:

05 4 * * * root /usr/sbin/aide --check
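When a check runs unattended from cron, it can help to turn the exit status into a short message. The sketch below assumes the exit-status convention described in the aide(1) man page for --check (a sum of 1 for new files, 2 for removed files, and 4 for changed files, with higher values reserved for errors); verify this against the man page of your AIDE version. The function name is hypothetical.

```shell
# Sketch: translate the exit status of "aide --check" into words.
# Assumes the bitmask convention from aide(1): 1 = new files,
# 2 = removed files, 4 = changed files; larger values are treated as errors.
describe_aide_status() {
    local status=$1 parts=""
    if [ "$status" -eq 0 ]; then
        echo "no differences"
        return 0
    fi
    if [ "$status" -gt 7 ]; then
        echo "error (status $status)"
        return 0
    fi
    if [ $((status & 1)) -ne 0 ]; then parts="$parts new"; fi
    if [ $((status & 2)) -ne 0 ]; then parts="$parts removed"; fi
    if [ $((status & 4)) -ne 0 ]; then parts="$parts changed"; fi
    # Strip the leading space left by the first appended word.
    echo "${parts# }"
}

# Example wrapper for a cron job:
# /usr/sbin/aide --check; describe_aide_status $?
```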

16.12.3. Updating an AIDE database

After verifying changes to your system, such as package updates or configuration file adjustments, Red Hat recommends updating your baseline AIDE database.

Prerequisites

- AIDE is properly installed and its database is initialized. See Installing AIDE.

Procedure

Update your baseline AIDE database:

# aide --update

The aide --update command creates the /var/lib/aide/aide.db.new.gz database file.

To start using the updated database for integrity checks, remove the .new substring from the file name.
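The renaming step can be scripted so that the previous baseline is not silently lost. This is a minimal sketch under stated assumptions: the function name and the .old suffix for the previous baseline are illustrative choices, not AIDE conventions.

```shell
# Sketch: promote a freshly generated AIDE database to be the new baseline.
# The ".old" backup name is an illustrative choice, not an AIDE convention.
promote_aide_db() {
    local dbdir=${1:-/var/lib/aide}
    local new="$dbdir/aide.db.new.gz"
    local cur="$dbdir/aide.db.gz"

    if [ ! -f "$new" ]; then
        echo "no new database at $new" >&2
        return 1
    fi
    # Keep the previous baseline so that it can be inspected later.
    if [ -f "$cur" ]; then
        mv -f "$cur" "$cur.old"
    fi
    mv "$new" "$cur"
}

# Example (as root): aide --update; promote_aide_db
```

Note that aide --update can return a non-zero status when it finds differences, so the example does not chain the two commands with &&.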

16.12.4. File-integrity tools: AIDE and IMA

Red Hat Enterprise Linux provides several tools for checking and preserving the integrity of files and directories on your system. The following table helps you decide which tool better fits your scenario.

| Question | Advanced Intrusion Detection Environment (AIDE) | Integrity Measurement Architecture (IMA) |
|---|---|---|
| What | AIDE is a utility that creates a database of files and directories on the system. This database serves for checking file integrity and detecting intrusions. | IMA detects if a file is altered by checking file measurement (hash values) compared to previously stored extended attributes. |
| How | AIDE uses rules to compare the integrity state of the files and directories. | IMA uses file hash values to detect the intrusion. |
| Why | Detection - AIDE detects if a file is modified by verifying the rules. | Detection and Prevention - IMA detects and prevents an attack by replacing the extended attribute of a file. |
| Usage | AIDE detects a threat when the file or directory is modified. | IMA detects a threat when someone tries to alter the entire file. |
| Extension | AIDE checks the integrity of files and directories on the local system. | IMA ensures security on the local and remote systems. |

16.13. Encrypting block devices using LUKS

Disk encryption protects the data on a block device by encrypting it. To access the device’s decrypted contents, a user must provide a passphrase or key as authentication. This is particularly important when it comes to mobile computers and removable media: it helps to protect the device’s contents even if it has been physically removed from the system. The LUKS format is a default implementation of block device encryption in RHEL.

16.13.1. LUKS disk encryption

The Linux Unified Key Setup-on-disk-format (LUKS) enables you to encrypt block devices and it provides a set of tools that simplifies managing the encrypted devices. LUKS allows multiple user keys to decrypt a master key, which is used for the bulk encryption of the partition.

RHEL uses LUKS to perform block device encryption. By default, the option to encrypt the block device is unchecked during the installation. If you select the option to encrypt your disk, the system prompts you for a passphrase every time you boot the computer. This passphrase “unlocks” the bulk encryption key that decrypts your partition. If you choose to modify the default partition table, you can choose which partitions you want to encrypt. This is set in the partition table settings.

What LUKS does

- LUKS encrypts entire block devices and is therefore well-suited for protecting contents of mobile devices such as removable storage media or laptop disk drives.

- The underlying contents of the encrypted block device are arbitrary, which makes it useful for encrypting swap devices. This can also be useful with certain databases that use specially formatted block devices for data storage.

- LUKS uses the existing device mapper kernel subsystem.

- LUKS provides passphrase strengthening, which protects against dictionary attacks.

- LUKS devices contain multiple key slots, allowing users to add backup keys or passphrases.

What LUKS does not do

- Disk-encryption solutions like LUKS protect the data only when your system is off. Once the system is on and LUKS has decrypted the disk, the files on that disk are available to anyone who would normally have access to them.

- LUKS is not well-suited for scenarios that require many users to have distinct access keys to the same device. The LUKS1 format provides eight key slots, and LUKS2 provides up to 32 key slots.

- LUKS is not well-suited for applications requiring file-level encryption.

Ciphers

The default cipher used for LUKS is aes-xts-plain64. The default key size for LUKS is 512 bits. The default key size for LUKS with Anaconda (XTS mode) is 512 bits. Ciphers that are available are:

- AES - Advanced Encryption Standard

- Twofish (a 128-bit block cipher)

- Serpent

16.13.2. LUKS versions in RHEL

In RHEL, the default format for LUKS encryption is LUKS2. The legacy LUKS1 format remains fully supported and it is provided as a format compatible with earlier RHEL releases.

The LUKS2 format is designed to enable future updates of various parts without a need to modify binary structures. LUKS2 internally uses JSON text format for metadata, provides redundancy of metadata, detects metadata corruption and allows automatic repairs from a metadata copy.

Do not use LUKS2 in systems that must be compatible with legacy systems that support only LUKS1. Note that RHEL 7 supports the LUKS2 format since version 7.6.

LUKS2 and LUKS1 use different commands to encrypt the disk. Using the wrong command for a LUKS version might cause data loss.

| LUKS version | Encryption command |
|---|---|
| LUKS2 | cryptsetup reencrypt |
| LUKS1 | cryptsetup-reencrypt |

Online re-encryption

The LUKS2 format supports re-encrypting encrypted devices while the devices are in use. For example, you do not have to unmount the file system on the device to perform the following tasks:

- Change the volume key

- Change the encryption algorithm

When encrypting a non-encrypted device, you must still unmount the file system. You can remount the file system after a short initialization of the encryption.

The LUKS1 format does not support online re-encryption.

Conversion

The LUKS2 format is inspired by LUKS1. In certain situations, you can convert LUKS1 to LUKS2. The conversion is not possible specifically in the following scenarios:

- A LUKS1 device is marked as being used by a Policy-Based Decryption (PBD - Clevis) solution. The cryptsetup tool refuses to convert the device when some luksmeta metadata are detected.
- A device is active. The device must be in the inactive state before any conversion is possible.

16.13.3. Options for data protection during LUKS2 re-encryption

LUKS2 provides several options that prioritize performance or data protection during the re-encryption process:

- checksum - This is the default mode. It balances data protection and performance. This mode stores individual checksums of the sectors in the re-encryption area, so the recovery process can detect which sectors LUKS2 already re-encrypted. The mode requires that the block device sector write is atomic.
- journal - This is the safest mode but also the slowest. This mode journals the re-encryption area in the binary area, so LUKS2 writes the data twice.
- none - This mode prioritizes performance and provides no data protection. It protects the data only against safe process termination, such as the SIGTERM signal or the user pressing Ctrl+C. Any unexpected system crash or application crash might result in data corruption.

You can select the mode using the --resilience option of cryptsetup.
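As an illustration of the --resilience option, the following sketch validates the mode name against the three modes listed above before printing the resulting command line. The function name is hypothetical, and the sketch only prints the command rather than running it.

```shell
# Sketch: build a "cryptsetup reencrypt" command line with a checked
# resilience mode. Only prints the command; it does not touch any device.
reencrypt_with_resilience() {
    local mode=$1 device=$2
    case "$mode" in
        checksum|journal|none)
            ;;
        *)
            echo "unknown resilience mode: $mode" >&2
            return 1
            ;;
    esac
    printf 'cryptsetup reencrypt --resilience %s %s\n' "$mode" "$device"
}

# Example: reencrypt_with_resilience journal /dev/sdb1
```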

If a LUKS2 re-encryption process terminates unexpectedly by force, LUKS2 can perform the recovery in one of the following ways:

- Automatically, during the next LUKS2 device open action. This action is triggered either by the cryptsetup open command or by attaching the device with systemd-cryptsetup.
- Manually, by using the cryptsetup repair command on the LUKS2 device.

16.13.4. Encrypting existing data on a block device using LUKS2

This procedure encrypts existing data on a not yet encrypted device using the LUKS2 format. A new LUKS header is stored in the head of the device.

Prerequisites

- The block device contains a file system.

- You have backed up your data.

Warning: You might lose your data during the encryption process due to a hardware, kernel, or human failure. Ensure that you have a reliable backup before you start encrypting the data.

Procedure

Unmount all file systems on the device that you plan to encrypt. For example:

# umount /dev/sdb1

Make free space for storing a LUKS header. Choose one of the following options that suits your scenario:

- In the case of encrypting a logical volume, you can extend the logical volume without resizing the file system. For example:

# lvextend -L+32M vg00/lv00

- Extend the partition using partition management tools, such as parted.
- Shrink the file system on the device. You can use the resize2fs utility for the ext2, ext3, or ext4 file systems. Note that you cannot shrink the XFS file system.

Initialize the encryption. For example:

# cryptsetup reencrypt \
    --encrypt \
    --init-only \
    --reduce-device-size 32M \
    /dev/sdb1 sdb1_encrypted

The command asks you for a passphrase and starts the encryption process.

Mount the device:

# mount /dev/mapper/sdb1_encrypted /mnt/sdb1_encrypted

Add an entry for a persistent mapping to /etc/crypttab:

- Find the luksUUID. Query the underlying block device that holds the LUKS header, not the decrypted mapping:

# cryptsetup luksUUID /dev/sdb1

This displays the luksUUID of the selected device.

- Open the /etc/crypttab file in a text editor of your choice and add the device to this file. The first field is the mapping name and the second field identifies the encrypted device:

# vi /etc/crypttab

sdb1_encrypted UUID=luks_uuid none

Replace luks_uuid with the value displayed in the previous step.

- Refresh initramfs with dracut:

# dracut -f --regenerate-all

Add an entry for a persistent mounting to the /etc/fstab file:

- Find the FS UUID of the active LUKS block device:

# blkid -p /dev/mapper/sdb1_encrypted

- Open the /etc/fstab file in a text editor of your choice and add the device to this file, for example:

# vi /etc/fstab

UUID=fs_uuid /home auto rw,user,auto 0 0

Replace fs_uuid with the file system UUID displayed in the previous step.

Start the online encryption:

# cryptsetup reencrypt --resume-only /dev/sdb1
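The two persistent entries in this procedure can also be generated rather than typed by hand. The sketch below is an assumption-laden illustration: the function names are hypothetical, the crypttab line follows the field order described in crypttab(5) (mapping name, encrypted device, key file), and the fstab options are copied from the example in this procedure.

```shell
# Sketch: print /etc/crypttab and /etc/fstab lines for an encrypted device.
# Function names are illustrative; review the output before appending it.

# Usage: crypttab_entry MAPPING_NAME LUKS_UUID
crypttab_entry() {
    printf '%s UUID=%s none\n' "$1" "$2"
}

# Usage: fstab_entry FS_UUID MOUNT_POINT
fstab_entry() {
    printf 'UUID=%s %s auto rw,user,auto 0 0\n' "$1" "$2"
}

# Example (as root):
#   luks_uuid=$(cryptsetup luksUUID /dev/sdb1)
#   fs_uuid=$(blkid -s UUID -o value /dev/mapper/sdb1_encrypted)
#   crypttab_entry sdb1_encrypted "$luks_uuid" >> /etc/crypttab
#   fstab_entry "$fs_uuid" /home >> /etc/fstab
```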

Additional resources

- cryptsetup(8), lvextend(8), resize2fs(8), and parted(8) man pages

16.13.5. Encrypting existing data on a block device using LUKS2 with a detached header

This procedure encrypts existing data on a block device without creating free space for storing a LUKS header. The header is stored in a detached location, which also serves as an additional layer of security. The procedure uses the LUKS2 encryption format.

Prerequisites

- The block device contains a file system.

- You have backed up your data.

Warning: You might lose your data during the encryption process due to a hardware, kernel, or human failure. Ensure that you have a reliable backup before you start encrypting the data.

Procedure

Unmount all file systems on the device. For example:

# umount /dev/sdb1

Initialize the encryption:

# cryptsetup reencrypt \
    --encrypt \
    --init-only \
    --header /path/to/header \
    /dev/sdb1 sdb1_encrypted

Replace /path/to/header with a path to the file with a detached LUKS header. The detached LUKS header has to be accessible so that the encrypted device can be unlocked later.

The command asks you for a passphrase and starts the encryption process.

Mount the device:

# mount /dev/mapper/sdb1_encrypted /mnt/sdb1_encrypted

Start the online encryption:

# cryptsetup reencrypt --resume-only --header /path/to/header /dev/sdb1

Additional resources

- cryptsetup(8) man page

16.13.6. Encrypting a blank block device using LUKS2

This procedure provides information about encrypting a blank block device using the LUKS2 format.

Prerequisites

- A blank block device.

Procedure

Set up a partition as an encrypted LUKS partition:

# cryptsetup luksFormat /dev/sdb1

Open an encrypted LUKS partition:

# cryptsetup open /dev/sdb1 sdb1_encrypted

This unlocks the partition and maps it to a new device using the device mapper. This alerts the kernel that the device is an encrypted device and should be addressed through LUKS using /dev/mapper/device_mapped_name so as not to overwrite the encrypted data.

To write encrypted data to the partition, it must be accessed through the device mapped name. To do this, you must create a file system. For example:

# mkfs -t ext4 /dev/mapper/sdb1_encrypted

Mount the device:

# mount /dev/mapper/sdb1_encrypted mount-point
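Because luksFormat destroys any existing data on the device, it can be useful to preview the whole sequence before running it. The following sketch chains the four steps of this procedure and supports a dry-run mode. The function names and the DRY_RUN variable are conventions invented for this example.

```shell
# Sketch: the four steps of this procedure with an optional dry run.
# DRY_RUN=1 prints each command instead of executing it (illustrative
# convention, not a cryptsetup feature).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: encrypt_blank_device DEVICE MAPPING_NAME MOUNT_POINT
encrypt_blank_device() {
    local device=$1 name=$2 mount_point=$3
    # Stop at the first step that fails.
    run cryptsetup luksFormat "$device" &&
    run cryptsetup open "$device" "$name" &&
    run mkfs -t ext4 "/dev/mapper/$name" &&
    run mount "/dev/mapper/$name" "$mount_point"
}

# Preview: DRY_RUN=1; encrypt_blank_device /dev/sdb1 sdb1_encrypted /mnt/data
```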

Additional resources

- cryptsetup(8) man page

16.13.7. Creating a LUKS encrypted volume using the storage RHEL System Role

You can use the storage role to create and configure a volume encrypted with LUKS by running an Ansible playbook.

Prerequisites

- Access and permissions to one or more managed nodes, which are systems you want to configure with the storage System Role.
- Access and permissions to a control node, which is a system from which Red Hat Ansible Core configures other systems.

On the control node:

- The ansible-core and rhel-system-roles packages are installed.

RHEL 8.0-8.5 provided access to a separate Ansible repository that contains Ansible Engine 2.9 for automation based on Ansible. Ansible Engine contains command-line utilities such as ansible, ansible-playbook, connectors such as docker and podman, and many plugins and modules. For information on how to obtain and install Ansible Engine, see the How to download and install Red Hat Ansible Engine Knowledgebase article.

RHEL 8.6 and 9.0 have introduced Ansible Core (provided as the ansible-core package), which contains the Ansible command-line utilities, commands, and a small set of built-in Ansible plugins. RHEL provides this package through the AppStream repository, and it has a limited scope of support. For more information, see the Scope of support for the Ansible Core package included in the RHEL 9 and RHEL 8.6 and later AppStream repositories Knowledgebase article.

- An inventory file which lists the managed nodes.

Procedure

Create a new playbook.yml file with the following content:

- hosts: all
  vars:
    storage_volumes:
      - name: barefs
        type: disk
        disks:
          - sdb
        fs_type: xfs
        fs_label: label-name
        mount_point: /mnt/data
        encryption: true
        encryption_password: your-password
  roles:
    - rhel-system-roles.storage

Optional: Verify playbook syntax:

# ansible-playbook --syntax-check playbook.yml

Run the playbook on your inventory file:

# ansible-playbook -i inventory.file /path/to/file/playbook.yml

Additional resources

- Encrypting block devices using LUKS

- /usr/share/ansible/roles/rhel-system-roles.storage/README.md file

16.14. Configuring automated unlocking of encrypted volumes using policy-based decryption

Policy-Based Decryption (PBD) is a collection of technologies that enable unlocking encrypted root and secondary volumes of hard drives on physical and virtual machines. PBD uses a variety of unlocking methods, such as user passwords, a Trusted Platform Module (TPM) device, a PKCS #11 device connected to a system, for example, a smart card, or a special network server.

PBD allows combining different unlocking methods into a policy, which makes it possible to unlock the same volume in different ways. The current implementation of the PBD in RHEL consists of the Clevis framework and plug-ins called pins. Each pin provides a separate unlocking capability. Currently, the following pins are available:

- tang - allows unlocking volumes using a network server
- tpm2 - allows unlocking volumes using a TPM2 policy
- sss - allows deploying high-availability systems using the Shamir’s Secret Sharing (SSS) cryptographic scheme
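To illustrate how pins combine into a policy, the following sketch builds a configuration for the sss pin that accepts any t of several Tang servers. The JSON layout follows the form documented in clevis-encrypt-sss(1); the function name and the server URLs are assumptions, and the helper does not escape special characters in URLs.

```shell
# Sketch: build an sss pin configuration that combines several Tang servers.
# Usage: sss_tang_config THRESHOLD URL...
# THRESHOLD is the number of servers that must be reachable to decrypt.
sss_tang_config() {
    local threshold=$1 url list=""
    shift
    for url in "$@"; do
        # Append a comma only between entries, not before the first one.
        list="$list${list:+,}{\"url\":\"$url\"}"
    done
    printf '{"t":%s,"pins":{"tang":[%s]}}\n' "$threshold" "$list"
}

# Example:
#   clevis encrypt sss "$(sss_tang_config 1 http://tang1.srv http://tang2.srv)" \
#       < input-plain.txt > secret.jwe
```

With a threshold of 1 and two servers, the data stays recoverable when either server is unavailable, which is the high-availability case mentioned in the list above.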

16.14.1. Network-bound disk encryption

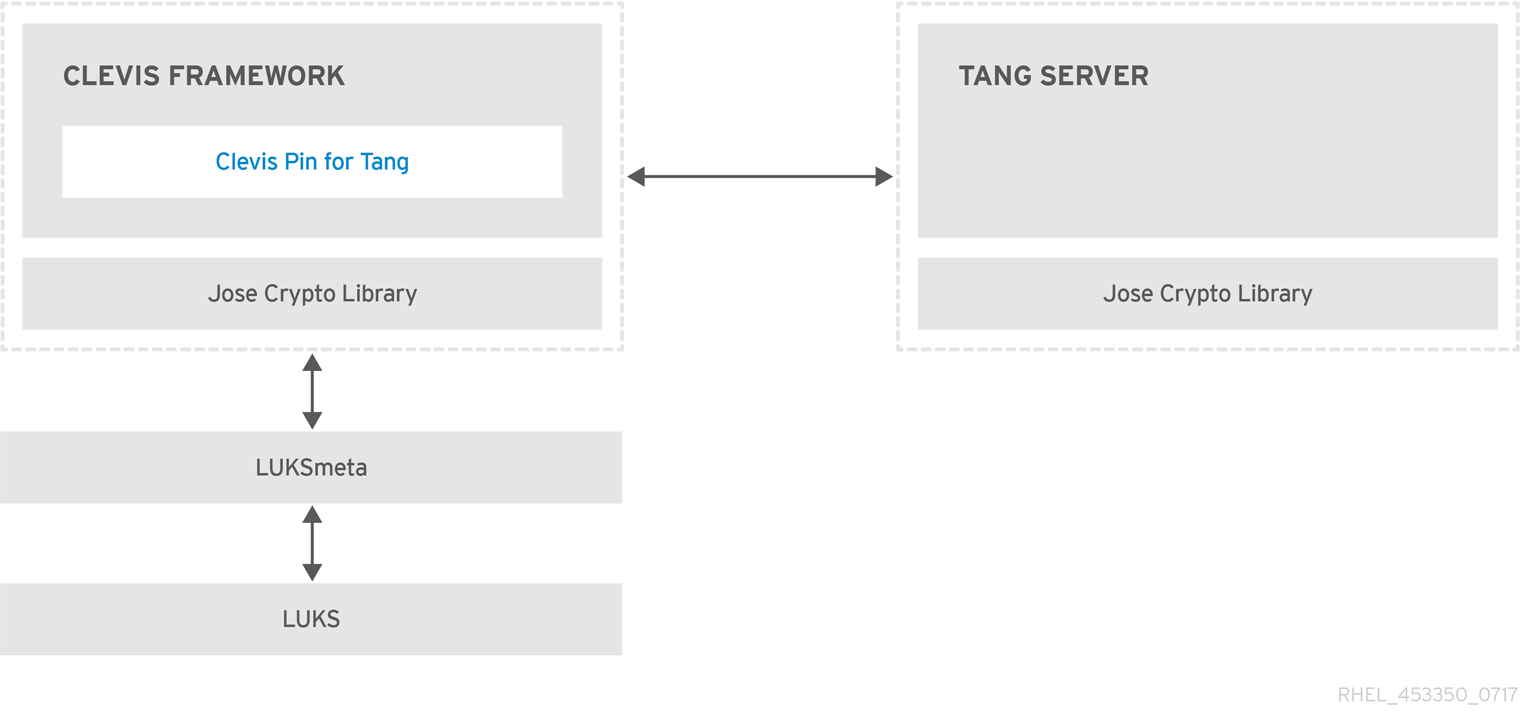

The Network Bound Disc Encryption (NBDE) is a subcategory of Policy-Based Decryption (PBD) that allows binding encrypted volumes to a special network server. The current implementation of the NBDE includes a Clevis pin for the Tang server and the Tang server itself.

In RHEL, NBDE is implemented through the following components and technologies:

Figure 16.1. NBDE scheme when using a LUKS1-encrypted volume. The luksmeta package is not used for LUKS2 volumes.

Tang is a server for binding data to network presence. It makes a system containing your data available when the system is bound to a certain secure network. Tang is stateless and does not require TLS or authentication. Unlike escrow-based solutions, where the server stores all encryption keys and has knowledge of every key ever used, Tang never interacts with any client keys, so it never gains any identifying information from the client.

Clevis is a pluggable framework for automated decryption. In NBDE, Clevis provides automated unlocking of LUKS volumes. The clevis package provides the client side of the feature.

A Clevis pin is a plug-in into the Clevis framework. One of such pins is a plug-in that implements interactions with the NBDE server — Tang.

Clevis and Tang are generic client and server components that provide network-bound encryption. In RHEL, they are used in conjunction with LUKS to encrypt and decrypt root and non-root storage volumes to accomplish Network-Bound Disk Encryption.

Both client- and server-side components use the José library to perform encryption and decryption operations.

When you begin provisioning NBDE, the Clevis pin for Tang server gets a list of the Tang server’s advertised asymmetric keys. Alternatively, since the keys are asymmetric, a list of Tang’s public keys can be distributed out of band so that clients can operate without access to the Tang server. This mode is called offline provisioning.

The Clevis pin for Tang uses one of the public keys to generate a unique, cryptographically-strong encryption key. Once the data is encrypted using this key, the key is discarded. The Clevis client should store the state produced by this provisioning operation in a convenient location. This process of encrypting data is the provisioning step.

LUKS version 2 (LUKS2) is the default disk-encryption format in RHEL. Therefore, the provisioning state for NBDE is stored as a token in a LUKS2 header. The luksmeta package stores the provisioning state for NBDE only for volumes encrypted with LUKS1.

The Clevis pin for Tang supports both LUKS1 and LUKS2 without the need to specify the version. Clevis can encrypt plain-text files, but you have to use the cryptsetup tool for encrypting block devices. See Encrypting block devices using LUKS for more information.

When the client is ready to access its data, it loads the metadata produced in the provisioning step and it responds to recover the encryption key. This process is the recovery step.

In NBDE, Clevis binds a LUKS volume using a pin so that it can be automatically unlocked. After successful completion of the binding process, the disk can be unlocked using the provided Dracut unlocker.

If the kdump kernel crash dumping mechanism is set to save the content of the system memory to a LUKS-encrypted device, you are prompted for entering a password during the second kernel boot.

Additional resources

- NBDE (Network-Bound Disk Encryption) Technology Knowledgebase article

- tang(8), clevis(1), jose(1), and clevis-luks-unlockers(7) man pages
- How to set up Network-Bound Disk Encryption with multiple LUKS devices (Clevis + Tang unlocking) Knowledgebase article

16.14.2. Installing an encryption client - Clevis

Use this procedure to deploy and start using the Clevis pluggable framework on your system.

Procedure

To install Clevis and its pins on a system with an encrypted volume:

# yum install clevis

To decrypt data, use the clevis decrypt command and provide a cipher text in the JSON Web Encryption (JWE) format, for example:

$ clevis decrypt < secret.jwe

Additional resources

- clevis(1) man page
- Built-in CLI help after entering the clevis command without any argument:

$ clevis
Usage: clevis COMMAND [OPTIONS]

clevis decrypt      Decrypts using the policy defined at encryption time
clevis encrypt sss  Encrypts using a Shamir's Secret Sharing policy
clevis encrypt tang Encrypts using a Tang binding server policy
clevis encrypt tpm2 Encrypts using a TPM2.0 chip binding policy
clevis luks bind    Binds a LUKS device using the specified policy
clevis luks edit    Edit a binding from a clevis-bound slot in a LUKS device
clevis luks list    Lists pins bound to a LUKSv1 or LUKSv2 device
clevis luks pass    Returns the LUKS passphrase used for binding a particular slot.
clevis luks regen   Regenerate clevis binding
clevis luks report  Report tang keys' rotations
clevis luks unbind  Unbinds a pin bound to a LUKS volume
clevis luks unlock  Unlocks a LUKS volume

16.14.3. Deploying a Tang server with SELinux in enforcing mode

Use this procedure to deploy a Tang server running on a custom port as a confined service in SELinux enforcing mode.

Prerequisites

- The policycoreutils-python-utils package and its dependencies are installed.
- The firewalld service is running.

Procedure

To install the tang package and its dependencies, enter the following command as root:

# yum install tang

Pick an unoccupied port, for example, 7500/tcp, and allow the tangd service to bind to that port:

# semanage port -a -t tangd_port_t -p tcp 7500

Note that a port can be used only by one service at a time, and thus an attempt to use an already occupied port results in the ValueError: Port already defined error message.

Open the port in the firewall:

# firewall-cmd --add-port=7500/tcp
# firewall-cmd --runtime-to-permanent

Enable the tangd service:

# systemctl enable tangd.socket

Create an override file:

# systemctl edit tangd.socket

In the following editor screen, which opens an empty override.conf file located in the /etc/systemd/system/tangd.socket.d/ directory, change the default port for the Tang server from 80 to the previously picked number by adding the following lines:

[Socket]
ListenStream=
ListenStream=7500

Save the file and exit the editor.

Reload the changed configuration:

# systemctl daemon-reload

Check that your configuration is working:

# systemctl show tangd.socket -p Listen
Listen=[::]:7500 (Stream)

Start the tangd service:

# systemctl restart tangd.socket

Because tangd uses the systemd socket activation mechanism, the server starts as soon as the first connection comes in. A new set of cryptographic keys is automatically generated at the first start. To perform cryptographic operations such as manual key generation, use the jose utility.
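For unattended deployments, the override file can be written directly instead of through the interactive systemctl edit session. The following is a minimal sketch: the function name is hypothetical, and the optional second argument exists only so that the function can be tried against a scratch directory.

```shell
# Sketch: write the tangd.socket drop-in without an interactive editor.
# Usage: write_tangd_override PORT [DROPIN_DIR]
write_tangd_override() {
    local port=$1
    local dir=${2:-/etc/systemd/system/tangd.socket.d}
    mkdir -p "$dir"
    # The empty ListenStream= line clears the default port (80)
    # before the new port is added.
    printf '[Socket]\nListenStream=\nListenStream=%s\n' "$port" \
        > "$dir/override.conf"
}

# Example (as root):
#   write_tangd_override 7500
#   systemctl daemon-reload
#   systemctl restart tangd.socket
#   curl -sf http://localhost:7500/adv    # should return the advertisement
```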

Additional resources

- tang(8), semanage(8), firewall-cmd(1), jose(1), systemd.unit(5), and systemd.socket(5) man pages

16.14.4. Rotating Tang server keys and updating bindings on clients

Use the following steps to rotate your Tang server keys and update existing bindings on clients. The precise interval at which you should rotate them depends on your application, key sizes, and institutional policy.

Alternatively, you can rotate Tang keys by using the nbde_server RHEL system role. See Using the nbde_server system role for setting up multiple Tang servers for more information.

Prerequisites

- A Tang server is running.

- The clevis and clevis-luks packages are installed on your clients.
- Note that clevis luks list, clevis luks report, and clevis luks regen have been introduced in RHEL 8.2.

Procedure

Rename all keys in the /var/db/tang key database directory to have a leading . to hide them from advertisement. Note that the file names in the following example differ from the unique file names in the key database directory of your Tang server:

# cd /var/db/tang
# ls -l
-rw-r--r--. 1 root root 349 Feb  7 14:55 UV6dqXSwe1bRKG3KbJmdiR020hY.jwk
-rw-r--r--. 1 root root 354 Feb  7 14:55 y9hxLTQSiSB5jSEGWnjhY8fDTJU.jwk
# mv UV6dqXSwe1bRKG3KbJmdiR020hY.jwk .UV6dqXSwe1bRKG3KbJmdiR020hY.jwk
# mv y9hxLTQSiSB5jSEGWnjhY8fDTJU.jwk .y9hxLTQSiSB5jSEGWnjhY8fDTJU.jwk

Check that you renamed and therefore hid all keys from the Tang server advertisement:

# ls -l
total 0

Generate new keys using the /usr/libexec/tangd-keygen command in /var/db/tang on the Tang server:

# /usr/libexec/tangd-keygen /var/db/tang
# ls /var/db/tang
3ZWS6-cDrCG61UPJS2BMmPU4I54.jwk zyLuX6hijUy_PSeUEFDi7hi38.jwk

Check that your Tang server advertises the signing key from the new key pair, for example:

# tang-show-keys 7500
3ZWS6-cDrCG61UPJS2BMmPU4I54

On your NBDE clients, use the clevis luks report command to check if the keys advertised by the Tang server remain the same. You can identify slots with the relevant binding using the clevis luks list command, for example:

# clevis luks list -d /dev/sda2
1: tang '{"url":"http://tang.srv"}'
# clevis luks report -d /dev/sda2 -s 1
...
Report detected that some keys were rotated.
Do you want to regenerate luks metadata with "clevis luks regen -d /dev/sda2 -s 1"? [ynYN]

To regenerate LUKS metadata for the new keys, either press y at the prompt of the previous command, or use the clevis luks regen command:

# clevis luks regen -d /dev/sda2 -s 1

When you are sure that all old clients use the new keys, you can remove the old keys from the Tang server, for example:

# cd /var/db/tang
# rm .*.jwk

Removing the old keys while clients are still using them can result in data loss. If you accidentally remove such keys, use the clevis luks regen command on the clients, and provide your LUKS password manually.
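The renaming step at the start of this procedure can be scripted so that no key is missed when the directory holds more than two files. This is a sketch only; the function name is hypothetical, and the directory argument defaults to the key database path used in this procedure.

```shell
# Sketch: hide every advertised Tang key by adding a leading dot.
# Usage: hide_tang_keys [KEY_DIR]
hide_tang_keys() {
    local dir=${1:-/var/db/tang}
    local key
    # The *.jwk pattern does not match names that already start with a dot,
    # so keys hidden earlier are left alone.
    for key in "$dir"/*.jwk; do
        # Skip the unexpanded pattern when no visible keys exist.
        [ -e "$key" ] || continue
        mv "$key" "$dir/.$(basename "$key")"
    done
}

# Example (as root, on the Tang server):
#   hide_tang_keys
#   /usr/libexec/tangd-keygen /var/db/tang
```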

Additional resources

- tang-show-keys(1), clevis-luks-list(1), clevis-luks-report(1), and clevis-luks-regen(1) man pages

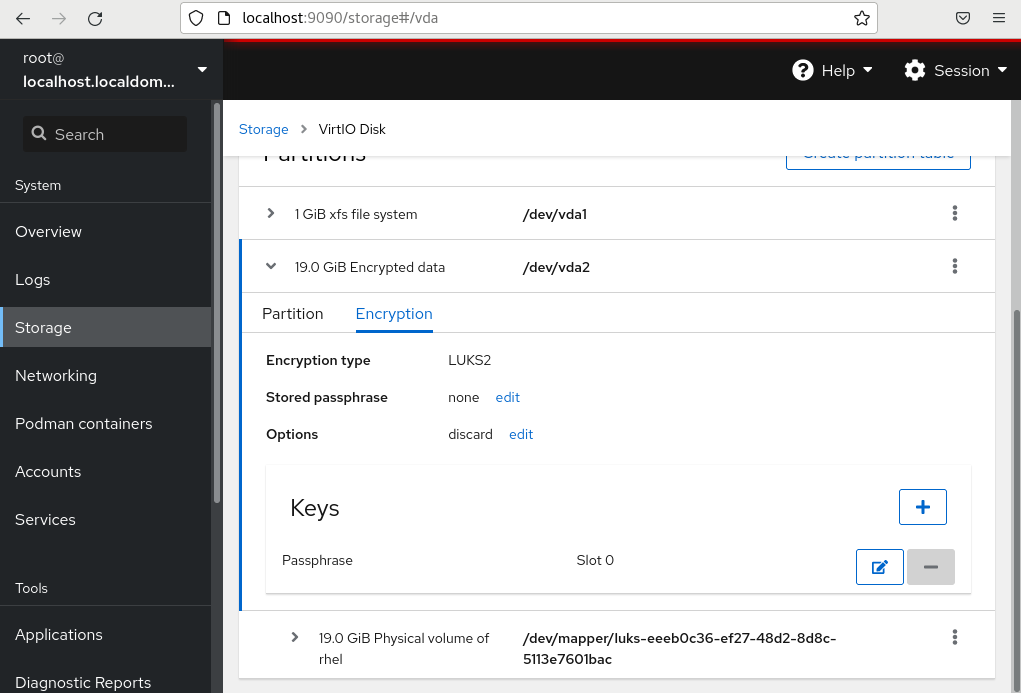

16.14.5. Configuring automated unlocking using a Tang key in the web console

Configure automated unlocking of a LUKS-encrypted storage device using a key provided by a Tang server.

Prerequisites

- The RHEL 8 web console has been installed. For details, see Installing the web console.
- The cockpit-storaged package is installed on your system.
- The cockpit.socket service is running at port 9090.
- The clevis, tang, and clevis-dracut packages are installed.
- A Tang server is running.

Procedure

Open the RHEL web console by entering the following address in a web browser:

https://localhost:9090

Replace the localhost part with the remote server’s host name or IP address when you connect to a remote system.

- Provide your credentials and click . Click to expand details of the encrypted device you want to unlock using the Tang server, and click .

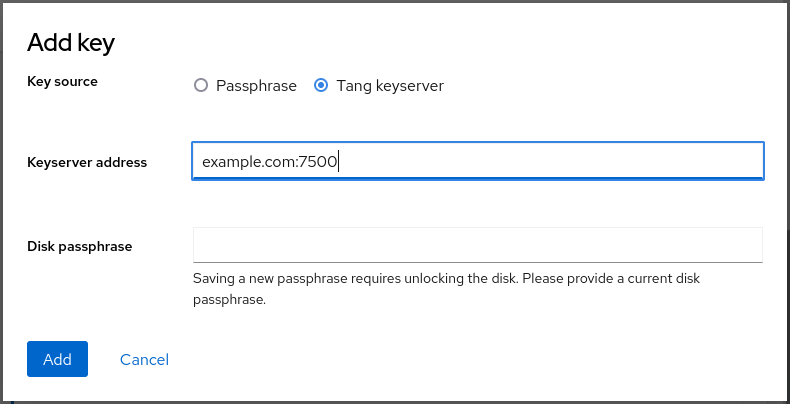

Click in the Keys section to add a Tang key:

Provide the address of your Tang server and a password that unlocks the LUKS-encrypted device. Click to confirm:

The following dialog window provides a command to verify that the key hash matches.

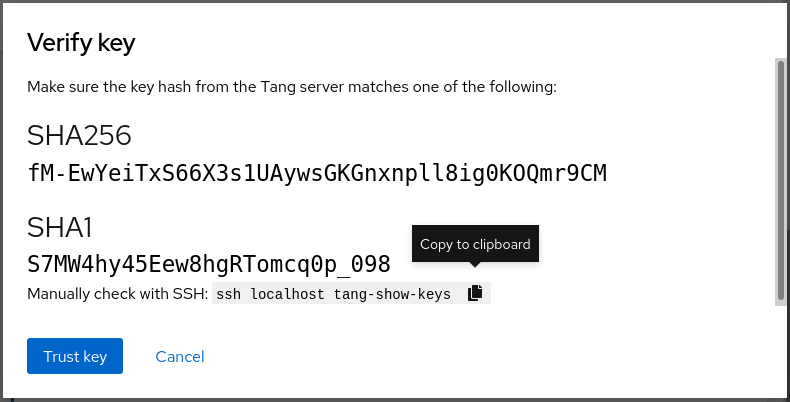

In a terminal on the Tang server, use the tang-show-keys command to display the key hash for comparison. In this example, the Tang server is running on the port 7500:

# tang-show-keys 7500
fM-EwYeiTxS66X3s1UAywsGKGnxnpll8ig0KOQmr9CM

Click when the key hashes in the web console and in the output of previously listed commands are the same:

To enable the early boot system to process the disk binding, click at the bottom of the left navigation bar and enter the following commands:

# yum install clevis-dracut
# grubby --update-kernel=ALL --args="rd.neednet=1"
# dracut -fv --regenerate-all

Verification

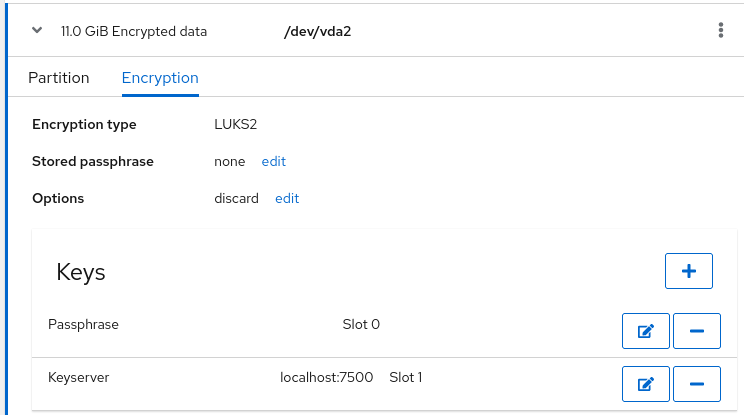

Check that the newly added Tang key is now listed in the Keys section with the Keyserver type:

Verify that the bindings are available for the early boot, for example:

# lsinitrd | grep clevis
clevis
clevis-pin-sss
clevis-pin-tang
clevis-pin-tpm2
-rwxr-xr-x   1 root     root         1600 Feb 11 16:30 usr/bin/clevis
-rwxr-xr-x   1 root     root         1654 Feb 11 16:30 usr/bin/clevis-decrypt
...
-rwxr-xr-x   2 root     root           45 Feb 11 16:30 usr/lib/dracut/hooks/initqueue/settled/60-clevis-hook.sh
-rwxr-xr-x   1 root     root         2257 Feb 11 16:30 usr/libexec/clevis-luks-askpass

16.14.6. Basic NBDE and TPM2 encryption-client operations

The Clevis framework can encrypt plain-text files and decrypt both ciphertexts in the JSON Web Encryption (JWE) format and LUKS-encrypted block devices. Clevis clients can use either Tang network servers or Trusted Platform Module 2.0 (TPM 2.0) chips for cryptographic operations.

The following commands demonstrate the basic functionality provided by Clevis on examples containing plain-text files. You can also use them for troubleshooting your NBDE or Clevis+TPM deployments.

Encryption client bound to a Tang server

To check that a Clevis encryption client binds to a Tang server, use the clevis encrypt tang sub-command:

$ clevis encrypt tang '{"url":"http://tang.srv:port"}' < input-plain.txt > secret.jwe
The advertisement contains the following signing keys:

_OsIk0T-E2l6qjfdDiwVmidoZjA

Do you wish to trust these keys? [ynYN] y

Change the http://tang.srv:port URL in the previous example to match the URL of the server where tang is installed. The secret.jwe output file contains your encrypted cipher text in the JWE format. The plain text is read from the input-plain.txt input file.

Alternatively, if your configuration requires a non-interactive communication with a Tang server without SSH access, you can download an advertisement and save it to a file:

$ curl -sfg http://tang.srv:port/adv -o adv.jws

Use the advertisement in the adv.jws file for any following tasks, such as encryption of files or messages:

$ echo 'hello' | clevis encrypt tang '{"url":"http://tang.srv:port","adv":"adv.jws"}'

To decrypt data, use the clevis decrypt command and provide the cipher text (JWE):

$ clevis decrypt < secret.jwe > output-plain.txt

Encryption client using TPM 2.0

To encrypt using a TPM 2.0 chip, use the clevis encrypt tpm2 sub-command with a single argument in the form of a JSON configuration object:

$ clevis encrypt tpm2 '{}' < input-plain.txt > secret.jwe

To choose a different hierarchy, hash, and key algorithms, specify configuration properties, for example:

$ clevis encrypt tpm2 '{"hash":"sha256","key":"rsa"}' < input-plain.txt > secret.jwe

To decrypt the data, provide the ciphertext in the JSON Web Encryption (JWE) format:

$ clevis decrypt < secret.jwe > output-plain.txt

The pin also supports sealing data to a Platform Configuration Registers (PCR) state. That way, the data can only be unsealed if the PCR hash values match the policy used when sealing.

For example, to seal the data to the PCR with index 0 and 7 for the SHA-256 bank:

$ clevis encrypt tpm2 '{"pcr_bank":"sha256","pcr_ids":"0,7"}' < input-plain.txt > secret.jwe

Warning: Hashes in PCRs can be rewritten, after which you can no longer unlock your encrypted volume automatically. For this reason, add a strong passphrase that enables you to unlock the encrypted volume manually even when a value in a PCR changes.

If the system cannot automatically unlock your encrypted volume after an upgrade of the shim-x64 package, follow the steps in the Clevis TPM2 no longer decrypts LUKS devices after a restart KCS article.
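When the set of PCR indexes varies between systems, the JSON configuration for the tpm2 pin can be assembled from arguments. The sketch below reproduces the pcr_bank and pcr_ids properties from the sealing example above; the function name is hypothetical.

```shell
# Sketch: build a tpm2 pin configuration that seals data to a PCR state.
# Usage: tpm2_pcr_config BANK PCR_ID...
tpm2_pcr_config() {
    local bank=$1 ids
    shift
    # Join the remaining arguments with commas inside a subshell,
    # so that the caller's IFS is left unchanged.
    ids=$(IFS=,; printf '%s' "$*")
    printf '{"pcr_bank":"%s","pcr_ids":"%s"}\n' "$bank" "$ids"
}

# Example:
#   clevis encrypt tpm2 "$(tpm2_pcr_config sha256 0 7)" \
#       < input-plain.txt > secret.jwe
```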

Additional resources

- clevis-encrypt-tang(1), clevis-luks-unlockers(7), clevis(1), and clevis-encrypt-tpm2(1) man pages
- The clevis, clevis decrypt, and clevis encrypt tang commands without any arguments show the built-in CLI help, for example:

$ clevis encrypt tang
Usage: clevis encrypt tang CONFIG < PLAINTEXT > JWE
...

16.14.7. Configuring manual enrollment of LUKS-encrypted volumes

Use the following steps to configure unlocking of LUKS-encrypted volumes with NBDE.

Prerequisites

- A Tang server is running and available.

Procedure

To automatically unlock an existing LUKS-encrypted volume, install the clevis-luks subpackage:

# yum install clevis-luks

Identify the LUKS-encrypted volume for PBD. In the following example, the block device is referred to as /dev/sda2:

# lsblk
NAME                                          MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
sda                                             8:0    0    12G  0 disk
├─sda1                                          8:1    0     1G  0 part  /boot
└─sda2                                          8:2    0    11G  0 part
  └─luks-40e20552-2ade-4954-9d56-565aa7994fb6 253:0    0    11G  0 crypt
    ├─rhel-root                               253:0    0   9.8G  0 lvm   /
    └─rhel-swap                               253:1    0   1.2G  0 lvm   [SWAP]

Bind the volume to a Tang server using the clevis luks bind command:

# clevis luks bind -d /dev/sda2 tang '{"url":"http://tang.srv"}'
The advertisement contains the following signing keys:

_OsIk0T-E2l6qjfdDiwVmidoZjA

Do you wish to trust these keys? [ynYN] y
You are about to initialize a LUKS device for metadata storage.
Attempting to initialize it may result in data loss if data was
already written into the LUKS header gap in a different format.
A backup is advised before initialization is performed.

Do you wish to initialize /dev/sda2? [yn] y
Enter existing LUKS password:

This command performs four steps:

- Creates a new key with the same entropy as the LUKS master key.

- Encrypts the new key with Clevis.

- Stores the Clevis JWE object in the LUKS2 header token or uses LUKSMeta if the non-default LUKS1 header is used.

- Enables the new key for use with LUKS.

Note: The binding procedure assumes that there is at least one free LUKS password slot. The clevis luks bind command takes one of the slots.

The volume can now be unlocked with your existing password as well as with the Clevis policy.

To enable the early boot system to process the disk binding, use the dracut tool on an already installed system:

# yum install clevis-dracut

In RHEL, Clevis produces a generic initrd (initial ramdisk) without host-specific configuration options and does not automatically add parameters such as rd.neednet=1 to the kernel command line. If your configuration relies on a Tang pin that requires network during early boot, use the --hostonly-cmdline argument so that dracut adds rd.neednet=1 when it detects a Tang binding:

# dracut -fv --regenerate-all --hostonly-cmdline

Alternatively, create a .conf file in the /etc/dracut.conf.d/ directory, and add the hostonly_cmdline=yes option to the file, for example:

# echo "hostonly_cmdline=yes" > /etc/dracut.conf.d/clevis.conf

Note: You can also ensure that networking for a Tang pin is available during early boot by using the grubby tool on the system where Clevis is installed:

# grubby --update-kernel=ALL --args="rd.neednet=1"

Then you can use dracut without --hostonly-cmdline:

# dracut -fv --regenerate-all
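If you used the grubby approach, you might want to confirm after a reboot that the parameter actually reached the kernel command line. The following is a minimal sketch under that assumption; the function name has_neednet is hypothetical, and the check is likely not meaningful for the --hostonly-cmdline approach, where the parameter is expected to be stored in the initramfs rather than in the boot loader entry.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a kernel command line string contains
# the rd.neednet=1 parameter as a whole word.
has_neednet() {
    case " $1 " in
        *" rd.neednet=1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example with a made-up command line. On a running system, pass the
# contents of /proc/cmdline instead: has_neednet "$(cat /proc/cmdline)"
if has_neednet "ro crashkernel=auto rd.neednet=1 rhgb quiet"; then
    echo "rd.neednet=1 is set"
else
    echo "rd.neednet=1 is missing"
fi
```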

Verification

To verify that the Clevis JWE object is successfully placed in a LUKS header, use the clevis luks list command:

# clevis luks list -d /dev/sda2
1: tang '{"url":"http://tang.srv:port"}'
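In a script, the same verification can be reduced to extracting the key slots bound to a particular pin. The following is a minimal sketch that assumes the "SLOT: PIN 'CONFIG'" line format shown in the example output above; the function name slots_for_pin is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "clevis luks list" output on standard input and
# print the key slot numbers that are bound to the given pin, for example
# tang or tpm2. Assumes one "SLOT: PIN 'CONFIG'" entry per line.
slots_for_pin() {
    awk -v pin="$1" '$2 == pin { sub(/:$/, "", $1); print $1 }'
}

# Example with the sample output from this section. On a real system,
# pipe in the output of: clevis luks list -d /dev/sda2
printf '%s\n' "1: tang '{\"url\":\"http://tang.srv:port\"}'" | slots_for_pin tang
```

An empty result means that no slot is bound to the requested pin.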

To use NBDE for clients with static IP configuration (without DHCP), pass your network configuration to the dracut tool manually, for example:

# dracut -fv --regenerate-all --kernel-cmdline "ip=192.0.2.10::192.0.2.1:255.255.255.0::ens3:none"
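The ip= value in the command above follows the dracut field order of client address, peer, gateway, netmask, host name, interface, and autoconfiguration method. The following is a minimal sketch that assembles the value from its parts; the function name static_ip_arg is hypothetical, and the peer and host name fields are left empty as in the example.

```shell
#!/bin/sh
# Hypothetical helper: assemble a dracut "ip=" argument for a static IPv4
# configuration. The peer and host name fields are left empty, and the
# final "none" field disables automatic configuration.
static_ip_arg() {
    client=$1 gateway=$2 netmask=$3 iface=$4
    printf 'ip=%s::%s:%s::%s:none\n' "$client" "$gateway" "$netmask" "$iface"
}

# Example: reproduce the value used in this section.
static_ip_arg 192.0.2.10 192.0.2.1 255.255.255.0 ens3
```

The result can be passed to dracut directly, for example: dracut -fv --regenerate-all --kernel-cmdline "$(static_ip_arg 192.0.2.10 192.0.2.1 255.255.255.0 ens3)"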

Alternatively, create a .conf file in the /etc/dracut.conf.d/ directory with the static network information. For example:

# cat /etc/dracut.conf.d/static_ip.conf
kernel_cmdline="ip=192.0.2.10::192.0.2.1:255.255.255.0::ens3:none"

Regenerate the initial RAM disk image:

# dracut -fv --regenerate-all

Additional resources

- clevis-luks-bind(1) and dracut.cmdline(7) man pages
- RHEL Network boot options

16.14.8. Configuring manual enrollment of LUKS-encrypted volumes using a TPM 2.0 policy

Use the following steps to configure unlocking of LUKS-encrypted volumes by using a Trusted Platform Module 2.0 (TPM 2.0) policy.

Prerequisites

- An accessible TPM 2.0-compatible device.

- A system with the 64-bit Intel or 64-bit AMD architecture.

Procedure

To automatically unlock an existing LUKS-encrypted volume, install the clevis-luks subpackage:

# yum install clevis-luks

Identify the LUKS-encrypted volume for PBD. In the following example, the block device is referred to as /dev/sda2:

# lsblk
NAME                                          MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
sda                                             8:0    0    12G  0 disk
├─sda1                                          8:1    0     1G  0 part  /boot
└─sda2                                          8:2    0    11G  0 part
  └─luks-40e20552-2ade-4954-9d56-565aa7994fb6 253:0    0    11G  0 crypt
    ├─rhel-root                               253:0    0   9.8G  0 lvm   /
    └─rhel-swap                               253:1    0   1.2G  0 lvm   [SWAP]

Bind the volume to a TPM 2.0 device using the clevis luks bind command, for example:

# clevis luks bind -d /dev/sda2 tpm2 '{"hash":"sha256","key":"rsa"}'
...
Do you wish to initialize /dev/sda2? [yn] y
Enter existing LUKS password:

This command performs four steps:

- Creates a new key with the same entropy as the LUKS master key.

- Encrypts the new key with Clevis.

- Stores the Clevis JWE object in the LUKS2 header token or uses LUKSMeta if the non-default LUKS1 header is used.

- Enables the new key for use with LUKS.

Note: The binding procedure assumes that there is at least one free LUKS password slot. The clevis luks bind command takes one of the slots.

Alternatively, if you want to seal data to specific Platform Configuration Registers (PCR) states, add the pcr_bank and pcr_ids values to the clevis luks bind command, for example:

# clevis luks bind -d /dev/sda2 tpm2 '{"hash":"sha256","key":"rsa","pcr_bank":"sha256","pcr_ids":"0,1"}'

Warning: Because the data can be unsealed only if the PCR hash values match the policy used when sealing, and the hashes can be rewritten, add a strong passphrase that enables you to unlock the encrypted volume manually when a value in a PCR changes.

If the system cannot automatically unlock your encrypted volume after an upgrade of the shim-x64 package, follow the steps in the Clevis TPM2 no longer decrypts LUKS devices after a restart KCS article.

The volume can now be unlocked with your existing password as well as with the Clevis policy.

To enable the early boot system to process the disk binding, use the dracut tool on an already installed system:

# yum install clevis-dracut
# dracut -fv --regenerate-all

Verification

To verify that the Clevis JWE object is successfully placed in a LUKS header, use the clevis luks list command:

# clevis luks list -d /dev/sda2
1: tpm2 '{"hash":"sha256","key":"rsa"}'

Additional resources

- clevis-luks-bind(1), clevis-encrypt-tpm2(1), and dracut.cmdline(7) man pages

16.14.9. Removing a Clevis pin from a LUKS-encrypted volume manually

Use the following procedure to manually remove the metadata created by the clevis luks bind command and to wipe the key slot that contains the passphrase added by Clevis.

The recommended way to remove a Clevis pin from a LUKS-encrypted volume is through the clevis luks unbind command. The removal procedure using clevis luks unbind consists of only one step and works for both LUKS1 and LUKS2 volumes. The following example command removes the metadata created by the binding step and wipes key slot 1 on the /dev/sda2 device:

# clevis luks unbind -d /dev/sda2 -s 1

Prerequisites

- A LUKS-encrypted volume with a Clevis binding.

Procedure

Check which LUKS version the volume, for example /dev/sda2, is encrypted with, and identify the key slot and the token that are bound to Clevis:

# cryptsetup luksDump /dev/sda2
LUKS header information
Version:        2
...
Keyslots:
  0: luks2
...
  1: luks2
      Key:        512 bits
      Priority:   normal
      Cipher:     aes-xts-plain64
...
Tokens:
  0: clevis
        Keyslot:  1
...

In the previous example, the Clevis token is identified by 0 and the associated key slot is 1.

In case of LUKS2 encryption, remove the token:

# cryptsetup token remove --token-id 0 /dev/sda2

If your device is encrypted by LUKS1, which is indicated by the Version: 1 string in the output of the cryptsetup luksDump command, perform this additional step with the luksmeta wipe command:

# luksmeta wipe -d /dev/sda2 -s 1

Wipe the key slot containing the Clevis passphrase:

# cryptsetup luksKillSlot /dev/sda2 1
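Reading the token ID and key slot out of the cryptsetup luksDump output by eye is error-prone, and wiping the wrong slot can lock you out of the volume. The following is a minimal sketch that extracts both numbers for LUKS2 volumes. The function name clevis_tokens is hypothetical, and the parser assumes the dump layout shown in the example above: a top-level Tokens: heading, "ID: clevis" entries, and an indented Keyslot: line under each entry.

```shell
#!/bin/sh
# Hypothetical helper: read "cryptsetup luksDump" output for a LUKS2 volume
# on standard input and print "TOKEN_ID KEYSLOT" for every Clevis token.
clevis_tokens() {
    awk '
        /^Tokens:/  { in_tokens = 1; next }   # start of the Tokens section
        /^[^ \t]/   { in_tokens = 0 }         # any other top-level heading ends it
        in_tokens && $1 ~ /^[0-9]+:$/ {
            # Remember the ID only if this token entry belongs to Clevis.
            token = ($2 == "clevis") ? substr($1, 1, length($1) - 1) : ""
            next
        }
        in_tokens && token != "" && $1 == "Keyslot:" {
            print token, $2
            token = ""
        }
    '
}

# Example with abbreviated sample output. On a real system, pipe in the
# output of: cryptsetup luksDump /dev/sda2
clevis_tokens <<'EOF'
LUKS header information
Version:        2
Keyslots:
  0: luks2
  1: luks2
Tokens:
  0: clevis
        Keyslot:    1
Digests:
  0: pbkdf2
EOF
```

For the sample input, the first printed number is the value for --token-id and the second is the key slot to pass to cryptsetup luksKillSlot. Review the output before running either destructive command.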

Additional resources

- clevis-luks-unbind(1), cryptsetup(8), and luksmeta(8) man pages

16.14.10. Configuring automated enrollment of LUKS-encrypted volumes using Kickstart

Follow the steps in this procedure to configure an automated installation process that uses Clevis for the enrollment of LUKS-encrypted volumes.

Procedure

Instruct Kickstart to partition the disk such that LUKS encryption is enabled for all mount points, other than /boot, with a temporary password. The password is temporary for this step of the enrollment process.

part /boot --fstype="xfs" --ondisk=vda --size=256
part / --fstype="xfs" --ondisk=vda --grow --encrypted --passphrase=temppass

Note that OSPP-compliant systems require a more complex configuration, for example:

part /boot --fstype="xfs" --ondisk=vda --size=256
part / --fstype="xfs" --ondisk=vda --size=2048 --encrypted --passphrase=temppass
part /var --fstype="xfs" --ondisk=vda --size=1024 --encrypted --passphrase=temppass
part /tmp --fstype="xfs" --ondisk=vda --size=1024 --encrypted --passphrase=temppass
part /home --fstype="xfs" --ondisk=vda --size=2048 --grow --encrypted --passphrase=temppass
part /var/log --fstype="xfs" --ondisk=vda --size=1024 --encrypted --passphrase=temppass
part /var/log/audit --fstype="xfs" --ondisk=vda --size=1024 --encrypted --passphrase=temppass

Install the related Clevis packages by listing them in the %packages section:

%packages
clevis-dracut
clevis-luks
clevis-systemd
%end

Optionally, to ensure that you can unlock the encrypted volume manually when required, add a strong passphrase before you remove the temporary passphrase. See the How to add a passphrase, key, or keyfile to an existing LUKS device article for more information.

Call clevis luks bind to perform binding in the %post section. Afterward, remove the temporary password:

%post
clevis luks bind -y -k - -d /dev/vda2 \
tang '{"url":"http://tang.srv"}' <<< "temppass"
cryptsetup luksRemoveKey /dev/vda2 <<< "temppass"
dracut -fv --regenerate-all
%end

If your configuration relies on a Tang pin that requires network during early boot or you use NBDE clients with static IP configurations, you have to modify the dracut command as described in Configuring manual enrollment of LUKS-encrypted volumes.

Note that the -y option for the clevis luks bind command is available from RHEL 8.3. In RHEL 8.2 and older, replace -y by -f in the clevis luks bind command and download the advertisement from the Tang server:

%post
curl -sfg http://tang.srv/adv -o adv.jws
clevis luks bind -f -k - -d /dev/vda2 \
tang '{"url":"http://tang.srv","adv":"adv.jws"}' <<< "temppass"
cryptsetup luksRemoveKey /dev/vda2 <<< "temppass"
dracut -fv --regenerate-all
%end

Warning: The cryptsetup luksRemoveKey command prevents any further administration of a LUKS2 device on which you apply it. You can recover a removed master key using the dmsetup command only for LUKS1 devices.

You can use an analogous procedure when using a TPM 2.0 policy instead of a Tang server.

Additional resources

- clevis(1), clevis-luks-bind(1), cryptsetup(8), and dmsetup(8) man pages
- Installing Red Hat Enterprise Linux 8 using Kickstart

16.14.11. Configuring automated unlocking of a LUKS-encrypted removable storage device

Use this procedure to set up an automated unlocking process of a LUKS-encrypted USB storage device.

Procedure

To automatically unlock a LUKS-encrypted removable storage device, such as a USB drive, install the clevis-udisks2 package:

# yum install clevis-udisks2

Reboot the system, and then perform the binding step using the clevis luks bind command as described in Configuring manual enrollment of LUKS-encrypted volumes, for example:

# clevis luks bind -d /dev/sdb1 tang '{"url":"http://tang.srv"}'

The LUKS-encrypted removable device can now be unlocked automatically in your GNOME desktop session. The device bound to a Clevis policy can also be unlocked by the clevis luks unlock command:

# clevis luks unlock -d /dev/sdb1

You can use an analogous procedure when using a TPM 2.0 policy instead of a Tang server.

Additional resources

- clevis-luks-unlockers(7) man page

16.14.12. Deploying high-availability NBDE systems

Tang provides two methods for building a high-availability deployment:

- Client redundancy (recommended)

-