
11.16. Recommended Configurations - Dispersed Volume

The following table lists the brick layout details of multiple server/disk configurations for dispersed and distributed dispersed volumes.

Table 11.3. Brick Configurations for Dispersed and Distributed Dispersed Volumes

| Redundancy Level | Supported Configurations | Bricks per Server per Subvolume | Node Loss | Max brick failure count within a subvolume | Compatible Server Node count | Increment Size (no. of nodes) | Min number of sub-volumes | Total Spindles | Tolerated HDD Failure Percentage |
|---|---|---|---|---|---|---|---|---|---|
| 12 HDD Chassis | | | | | | | | | |
| 2 | 4 + 2 | 2 | 1 | 2 | 3 | 3 | 6 | 36 | 33.33% |
| 2 | 4 + 2 | 1 | 2 | 2 | 6 | 6 | 12 | 72 | 33.33% |
| 2 | 8 + 2 | 2 | 1 | 2 | 5 | 5 | 6 | 60 | 20.00% |
| 2 | 8 + 2 | 1 | 2 | 2 | 10 | 10 | 12 | 120 | 20.00% |
| 3 | 8 + 3 | 1-2 | 1 | 3 | 6 | 6 | 6 | 72 | 25.00% |
| 4 | 8 + 4 | 4 | 1 | 4 | 3 | 3 | 3 | 36 | 33.33% |
| 4 | 8 + 4 | 2 | 2 | 4 | 6 | 6 | 6 | 72 | 33.33% |
| 4 | 8 + 4 | 1 | 4 | 4 | 12 | 12 | 12 | 144 | 33.33% |
| 4 | 16 + 4 | 4 | 1 | 4 | 5 | 5 | 3 | 60 | 20.00% |
| 4 | 16 + 4 | 2 | 2 | 4 | 10 | 10 | 6 | 120 | 20.00% |
| 4 | 16 + 4 | 1 | 4 | 4 | 20 | 20 | 12 | 240 | 20.00% |
| 24 HDD Chassis | | | | | | | | | |
| 2 | 4 + 2 | 2 | 1 | 2 | 3 | 3 | 12 | 72 | 33.33% |
| 2 | 4 + 2 | 1 | 2 | 2 | 6 | 6 | 24 | 144 | 33.33% |
| 2 | 8 + 2 | 2 | 1 | 2 | 5 | 5 | 12 | 120 | 20.00% |
| 2 | 8 + 2 | 1 | 2 | 2 | 10 | 10 | 24 | 240 | 20.00% |
| 4 | 8 + 4 | 4 | 1 | 4 | 3 | 3 | 6 | 72 | 33.33% |
| 4 | 8 + 4 | 2 | 2 | 4 | 6 | 6 | 12 | 144 | 33.33% |
| 4 | 8 + 4 | 1 | 4 | 4 | 12 | 12 | 24 | 288 | 33.33% |
| 4 | 16 + 4 | 4 | 1 | 4 | 5 | 5 | 6 | 120 | 20.00% |
| 4 | 16 + 4 | 2 | 2 | 4 | 10 | 10 | 12 | 240 | 20.00% |
| 4 | 16 + 4 | 1 | 4 | 4 | 20 | 20 | 24 | 480 | 20.00% |
| 36 HDD Chassis | | | | | | | | | |
| 2 | 4 + 2 | 2 | 1 | 2 | 3 | 3 | 18 | 108 | 33.33% |
| 2 | 4 + 2 | 1 | 2 | 2 | 6 | 6 | 36 | 216 | 33.33% |
| 2 | 8 + 2 | 2 | 1 | 2 | 5 | 5 | 18 | 180 | 20.00% |
| 2 | 8 + 2 | 1 | 2 | 2 | 10 | 10 | 36 | 360 | 20.00% |
| 3 | 8 + 3 | 1-2 | 1 | 3 | 6 | 6 | 19 | 216 | 26.39% |
| 4 | 8 + 4 | 4 | 1 | 4 | 3 | 3 | 9 | 108 | 33.33% |
| 4 | 8 + 4 | 2 | 2 | 4 | 6 | 6 | 18 | 216 | 33.33% |
| 4 | 8 + 4 | 1 | 4 | 4 | 12 | 12 | 36 | 432 | 33.33% |
| 4 | 16 + 4 | 4 | 1 | 4 | 5 | 5 | 9 | 180 | 20.00% |
| 4 | 16 + 4 | 2 | 2 | 4 | 10 | 10 | 18 | 360 | 20.00% |
| 4 | 16 + 4 | 1 | 4 | 4 | 20 | 20 | 36 | 720 | 20.00% |
| 60 HDD Chassis | | | | | | | | | |
| 2 | 4 + 2 | 2 | 1 | 2 | 3 | 3 | 30 | 180 | 33.33% |
| 2 | 4 + 2 | 1 | 2 | 2 | 6 | 6 | 60 | 360 | 33.33% |
| 2 | 8 + 2 | 2 | 1 | 2 | 5 | 5 | 30 | 300 | 20.00% |
| 2 | 8 + 2 | 1 | 2 | 2 | 10 | 10 | 60 | 600 | 20.00% |
| 3 | 8 + 3 | 1-2 | 1 | 3 | 6 | 6 | 32 | 360 | 26.67% |
| 4 | 8 + 4 | 4 | 1 | 4 | 3 | 3 | 15 | 180 | 33.33% |
| 4 | 8 + 4 | 2 | 2 | 4 | 6 | 6 | 30 | 360 | 33.33% |
| 4 | 8 + 4 | 1 | 4 | 4 | 12 | 12 | 60 | 720 | 33.33% |
| 4 | 16 + 4 | 4 | 1 | 4 | 5 | 5 | 15 | 300 | 20.00% |
| 4 | 16 + 4 | 2 | 2 | 4 | 10 | 10 | 30 | 600 | 20.00% |
| 4 | 16 + 4 | 1 | 4 | 4 | 20 | 20 | 60 | 1200 | 20.00% |
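The derived columns in the table above can be reproduced with simple arithmetic. The sketch below is inferred from the table values rather than taken from a formula stated in this guide. It assumes one brick per HDD, that only whole subvolumes of k+m bricks are created, and that the tolerated failure percentage is the share of all spindles that can fail when every subvolume loses exactly m bricks. The function name `layout_row` is hypothetical.

```shell
# layout_row K M NODES HDDS_PER_NODE
# Prints: "<sub-volumes> <total spindles> <tolerated HDD failure %>"
# Assumption: every HDD hosts exactly one brick.
layout_row() {
    local k=$1 m=$2 nodes=$3 hdds=$4
    local spindles=$(( nodes * hdds ))
    # Integer division: leftover disks cannot form a partial subvolume.
    local subvols=$(( spindles / (k + m) ))
    local pct
    # Up to m bricks may fail in each subvolume.
    pct=$(awk -v s="$subvols" -v m="$m" -v t="$spindles" \
        'BEGIN { printf "%.2f", 100 * s * m / t }')
    echo "$subvols $spindles $pct"
}

layout_row 4 2 3 12    # 4+2 on three 12 HDD nodes, prints: 6 36 33.33
layout_row 8 3 6 36    # 8+3 on six 36 HDD nodes,   prints: 19 216 26.39
```

In the 8+3 case, 216 spindles do not divide evenly by 11 bricks, which would explain why that row lists 19 sub-volumes and 26.39% rather than 3/11 of the disks.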

This example describes a compact configuration of three servers, with each server attached to a 12 HDD chassis to create a dispersed volume. In this example, each HDD is assumed to contain a single brick.

gluster volume create test_vol disperse-data 4 redundancy 2 transport tcp server1:/rhgs/brick1 server1:/rhgs/brick2 server2:/rhgs/brick3 server2:/rhgs/brick4 server3:/rhgs/brick5 server3:/rhgs/brick6 --force

The --force parameter is required because this configuration is not optimal in terms of fault tolerance. Because each server provides two bricks, a server going offline puts data availability at greater risk than it would if each brick were provided by a separate server.

Run the gluster volume info command to view the volume information. Then add six more bricks to expand it into a distributed dispersed volume:

gluster volume add-brick test_vol server1:/rhgs/brick7 server1:/rhgs/brick8 server2:/rhgs/brick9 server2:/rhgs/brick10 server3:/rhgs/brick11 server3:/rhgs/brick12

Run the gluster volume info command to view the distributed dispersed volume information.
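Adding six bricks to the 4+2 volume yields a second subvolume because bricks are grouped k+m at a time in the order they are listed. The following is a minimal sketch of that grouping. `list_subvolumes` is a hypothetical helper, not a gluster command, and the ordering rule is assumed from how the examples in this section lay out their bricks.

```shell
# list_subvolumes SET_SIZE BRICK...
# Prints one line per dispersed subvolume, taking consecutive groups of
# SET_SIZE (k + m) bricks in the order given.
list_subvolumes() {
    local set_size=$1 index=0 line="" brick
    shift
    # A partial group cannot form a subvolume.
    if [ $(( $# % set_size )) -ne 0 ]; then
        echo "error: $# bricks is not a multiple of $set_size" >&2
        return 1
    fi
    for brick in "$@"; do
        line="${line:+$line }$brick"
        index=$(( index + 1 ))
        if [ $(( index % set_size )) -eq 0 ]; then
            echo "subvolume $(( index / set_size )): $line"
            line=""
        fi
    done
}

# The six original bricks followed by the six added bricks.
list_subvolumes 6 \
    server1:/rhgs/brick1 server1:/rhgs/brick2 server2:/rhgs/brick3 \
    server2:/rhgs/brick4 server3:/rhgs/brick5 server3:/rhgs/brick6 \
    server1:/rhgs/brick7 server1:/rhgs/brick8 server2:/rhgs/brick9 \
    server2:/rhgs/brick10 server3:/rhgs/brick11 server3:/rhgs/brick12
```

The output has two lines, one per 4+2 subvolume, each holding two bricks from every server.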

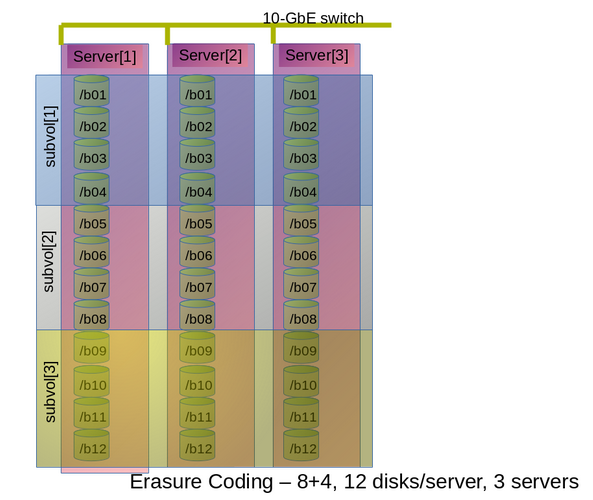

The following diagram illustrates a dispersed 8+4 configuration on three servers, as explained in row 3 of Table 11.3, “Brick Configurations for Dispersed and Distributed Dispersed Volumes”. The command to create the dispersed volume for this configuration is:

gluster volume create test_vol disperse-data 8 redundancy 4 transport tcp server1:/rhgs/brick1 server1:/rhgs/brick2 server1:/rhgs/brick3 server1:/rhgs/brick4 server2:/rhgs/brick1 server2:/rhgs/brick2 server2:/rhgs/brick3 server2:/rhgs/brick4 server3:/rhgs/brick1 server3:/rhgs/brick2 server3:/rhgs/brick3 server3:/rhgs/brick4 server1:/rhgs/brick5 server1:/rhgs/brick6 server1:/rhgs/brick7 server1:/rhgs/brick8 server2:/rhgs/brick5 server2:/rhgs/brick6 server2:/rhgs/brick7 server2:/rhgs/brick8 server3:/rhgs/brick5 server3:/rhgs/brick6 server3:/rhgs/brick7 server3:/rhgs/brick8 server1:/rhgs/brick9 server1:/rhgs/brick10 server1:/rhgs/brick11 server1:/rhgs/brick12 server2:/rhgs/brick9 server2:/rhgs/brick10 server2:/rhgs/brick11 server2:/rhgs/brick12 server3:/rhgs/brick9 server3:/rhgs/brick10 server3:/rhgs/brick11 server3:/rhgs/brick12 --force

The --force parameter is required because this configuration is not optimal in terms of fault tolerance. Because each server provides more than one brick, a server going offline puts data availability at greater risk than it would if each brick were provided by a separate server.
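Typing a 36-brick list by hand is error-prone, so the brick order used in the command above can be generated instead. This is a sketch only: `brick_list` and its parameters are hypothetical, the server names and /rhgs/brickN paths follow the example, and the script prints the command rather than running it.

```shell
# brick_list SERVERS BRICKS_PER_SERVER PER_SET
# PER_SET is the number of bricks each server contributes to one subvolume.
# Prints the bricks in subvolume order, separated by spaces.
brick_list() {
    local servers=$1 per_server=$2 per_set=$3
    local first=1 s b out=""
    while [ "$first" -le "$per_server" ]; do          # one pass per subvolume
        s=1
        while [ "$s" -le "$servers" ]; do
            b=$first
            while [ "$b" -lt $(( first + per_set )) ]; do
                out="${out:+$out }server${s}:/rhgs/brick${b}"
                b=$(( b + 1 ))
            done
            s=$(( s + 1 ))
        done
        first=$(( first + per_set ))
    done
    echo "$out"
}

# 8+4 on three servers with 12 bricks each: 4 bricks per server per subvolume.
echo "gluster volume create test_vol disperse-data 8 redundancy 4 transport tcp $(brick_list 3 12 4) --force"
```

Calling `brick_list 6 12 1` produces the one-brick-per-server order used for a 4+2 layout on six servers.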

Figure 11.1. Example Configuration of 8+4 Dispersed Volume Configuration

In this example, each server holds m bricks from each dispersed subvolume (refer to Section 5.9, “Creating Dispersed Volumes” for information on the n = k+m equation). If you add more than m bricks from a dispersed subvolume on server S, and server S goes down, data will be unavailable.

If server S (a single column in the above diagram) goes down, there is no data loss. However, any additional hardware failure, whether another node going down or a storage device failing, would cause immediate data loss.
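The rule above can be checked before creating a volume by counting how many bricks of one subvolume sit on the same server and comparing that number with m. A minimal sketch, assuming subvolumes are formed from consecutive groups of k+m bricks and that the host is the part of each brick before the colon; `max_bricks_per_host` is a hypothetical helper, not a gluster command.

```shell
# max_bricks_per_host SET_SIZE BRICK...
# Prints the largest number of bricks that any single host contributes to
# one subvolume, which is also the number of bricks that subvolume loses
# if that host goes down.
max_bricks_per_host() {
    local set_size=$1
    shift
    printf '%s\n' "$@" | awk -F: -v n="$set_size" '
        {
            subvol = int((NR - 1) / n)     # subvolume this brick belongs to
            c = ++count[subvol, $1]        # bricks from this host in it
            if (c > max) max = c
        }
        END { print max + 0 }'
}

# First subvolume of the 8+4 example: four bricks on each of three servers.
set -- \
    server1:/rhgs/brick1 server1:/rhgs/brick2 server1:/rhgs/brick3 server1:/rhgs/brick4 \
    server2:/rhgs/brick1 server2:/rhgs/brick2 server2:/rhgs/brick3 server2:/rhgs/brick4 \
    server3:/rhgs/brick1 server3:/rhgs/brick2 server3:/rhgs/brick3 server3:/rhgs/brick4
m=4
worst=$(max_bricks_per_host 12 "$@")
if [ "$worst" -le "$m" ]; then
    echo "one server down: $worst bricks offline, $(( m - worst )) further failures tolerated"
else
    echo "one server down: $worst bricks offline, data unavailable"
fi
```

For the 8+4 layout this reports 4 bricks offline and 0 further failures tolerated, which matches the warning that any additional failure after losing a server affects the data.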

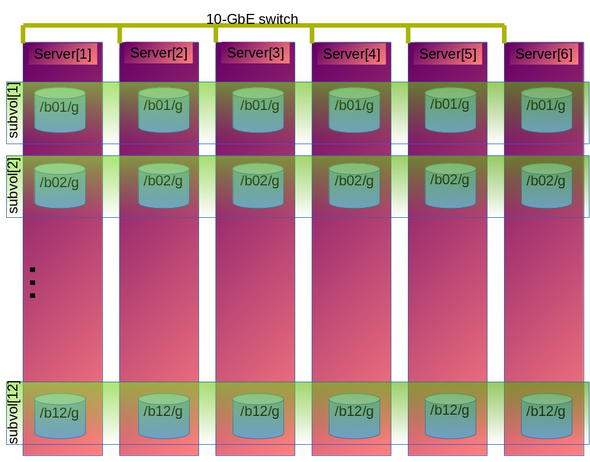

The following diagram illustrates a dispersed 4+2 configuration on six servers, each with 12 disks, as explained in row 2 of Table 11.3, “Brick Configurations for Dispersed and Distributed Dispersed Volumes”. The command to create the dispersed volume for this configuration is:

gluster volume create test_vol disperse-data 4 redundancy 2 transport tcp server1:/rhgs/brick1 server2:/rhgs/brick1 server3:/rhgs/brick1 server4:/rhgs/brick1 server5:/rhgs/brick1 server6:/rhgs/brick1 server1:/rhgs/brick2 server2:/rhgs/brick2 server3:/rhgs/brick2 server4:/rhgs/brick2 server5:/rhgs/brick2 server6:/rhgs/brick2 server1:/rhgs/brick3 server2:/rhgs/brick3 server3:/rhgs/brick3 server4:/rhgs/brick3 server5:/rhgs/brick3 server6:/rhgs/brick3 server1:/rhgs/brick4 server2:/rhgs/brick4 server3:/rhgs/brick4 server4:/rhgs/brick4 server5:/rhgs/brick4 server6:/rhgs/brick4 server1:/rhgs/brick5 server2:/rhgs/brick5 server3:/rhgs/brick5 server4:/rhgs/brick5 server5:/rhgs/brick5 server6:/rhgs/brick5 server1:/rhgs/brick6 server2:/rhgs/brick6 server3:/rhgs/brick6 server4:/rhgs/brick6 server5:/rhgs/brick6 server6:/rhgs/brick6 server1:/rhgs/brick7 server2:/rhgs/brick7 server3:/rhgs/brick7 server4:/rhgs/brick7 server5:/rhgs/brick7 server6:/rhgs/brick7 server1:/rhgs/brick8 server2:/rhgs/brick8 server3:/rhgs/brick8 server4:/rhgs/brick8 server5:/rhgs/brick8 server6:/rhgs/brick8 server1:/rhgs/brick9 server2:/rhgs/brick9 server3:/rhgs/brick9 server4:/rhgs/brick9 server5:/rhgs/brick9 server6:/rhgs/brick9 server1:/rhgs/brick10 server2:/rhgs/brick10 server3:/rhgs/brick10 server4:/rhgs/brick10 server5:/rhgs/brick10 server6:/rhgs/brick10 server1:/rhgs/brick11 server2:/rhgs/brick11 server3:/rhgs/brick11 server4:/rhgs/brick11 server5:/rhgs/brick11 server6:/rhgs/brick11 server1:/rhgs/brick12 server2:/rhgs/brick12 server3:/rhgs/brick12 server4:/rhgs/brick12 server5:/rhgs/brick12 server6:/rhgs/brick12

Figure 11.2. Example Configuration of 4+2 Dispersed Volume Configuration

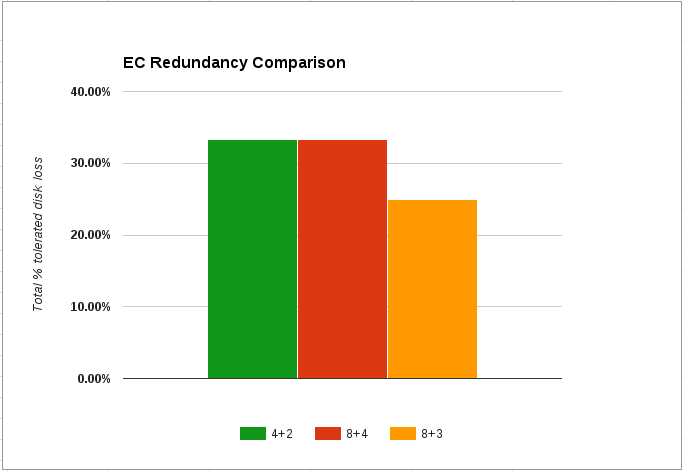

The following chart illustrates the redundancy comparison of all supported dispersed volume configurations.

Figure 11.3. Illustration of the redundancy comparison