Chapter 22. Workflows in automation controller

Workflows enable you to configure a sequence of disparate job templates (or workflow templates) that may or may not share inventory, playbooks, or permissions.

Workflows have admin and execute permissions, similar to job templates. A workflow accomplishes the task of tracking the full set of jobs that were part of the release process as a single unit.

Job or workflow templates are linked together in a graph-like structure whose elements are called nodes. These nodes can be jobs, project syncs, or inventory syncs. A template can be part of different workflows or used multiple times in the same workflow. A copy of the graph structure is saved to a workflow job when you launch the workflow.

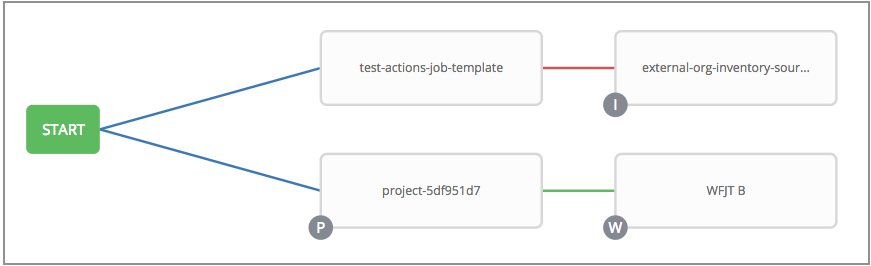

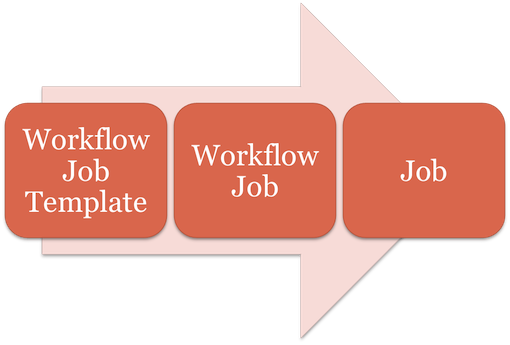

The following example shows a workflow that contains all three, as well as a workflow job template:

As the workflow runs, jobs are spawned from each node's linked template. Nodes that link to a job template with prompt-driven fields (job_type, job_tags, skip_tags, limit) can contain values for those fields, so the job template does not prompt on launch. Job templates that prompt for a credential or inventory, without defaults, are not available for inclusion in a workflow.
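As a hedged illustration of node-level prompt values, the following sketch uses the `awx.awx.workflow_job_template_node` module to add a node that stores `limit` and `job_tags` for a job template that prompts for them. It assumes the `awx.awx` collection is installed and that controller credentials are supplied through environment variables. The workflow, organization, job template, and identifier names are hypothetical; check the parameter names against the collection version you use.

```yaml
---
# Sketch only: every resource name below is a hypothetical placeholder.
- hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: "Add a workflow node that carries its own prompt values"
      awx.awx.workflow_job_template_node:
        identifier: "deploy-web"                  # hypothetical node identifier
        workflow_job_template: "Release workflow" # hypothetical workflow
        organization: "Default"
        unified_job_template: "Deploy web tier"   # hypothetical job template that prompts for limit and tags
        limit: "webservers"                       # stored on the node, not asked at launch
        job_tags: "deploy"
        state: present
```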

22.1. Workflow scenarios and considerations

When building workflows, consider the following:

- A root node is set to ALWAYS by default and cannot be edited.

- A node can have multiple parents, and children can be linked to any of the states of success, failure, or always. If always, then the state is neither success nor failure. States apply at the node level, not at the workflow job template level. A workflow job is marked as successful unless it is canceled or encounters an error.

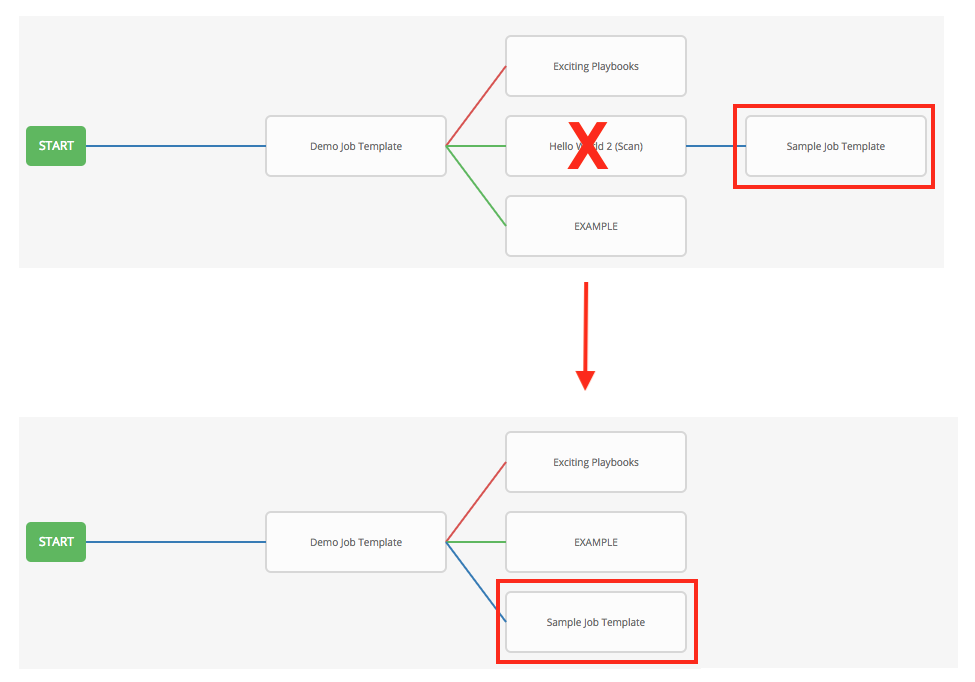

- If you remove a job or workflow template within the workflow, the nodes previously connected to the deleted nodes are automatically connected upstream and retain their edge type, as in the following example:

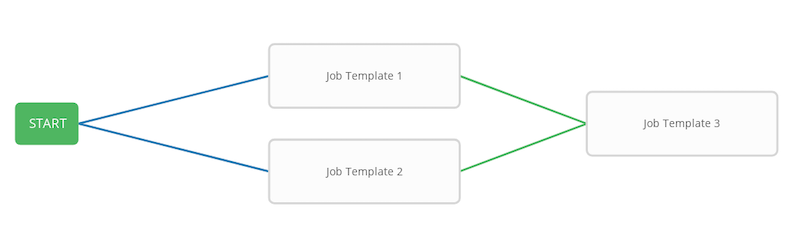

- You can have a convergent workflow, where multiple jobs converge into one. In this scenario, any of the jobs or all of them must complete before the next one runs, as shown in the following example:

  In this example, automation controller runs the first two job templates in parallel. When they both finish and succeed as specified, the third, downstream job template (the convergence node) triggers.

- Prompts for inventory and surveys apply to workflow nodes in workflow job templates.

-

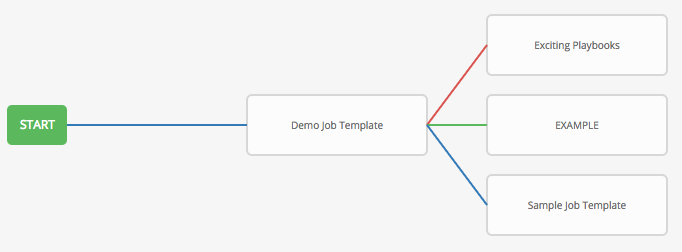

If you launch from the API, running a

getcommand displays a list of warnings and highlights missing components. The following image illustrates a basic workflow for a workflow job template:

- It is possible to launch several workflows simultaneously, and set a schedule for when to launch them. You can set notifications on workflows, such as when a job completes, similar to that of job templates.

Job slicing is intended to scale job executions horizontally.

If you enable job slicing on a job template, it divides the inventory to be acted on into the number of slices configured at launch time, then starts a job for each slice.

For more information, see the Job slicing section.
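A minimal sketch of enabling job slicing on a job template, assuming the `awx.awx` collection is installed and controller credentials come from environment variables. The job template, project, playbook, and inventory names are hypothetical.

```yaml
---
# Sketch only: every resource name below is a hypothetical placeholder.
- hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: "Create a job template that runs as three slices"
      awx.awx.job_template:
        name: "Patch all hosts"      # hypothetical job template
        organization: "Default"
        project: "Ops playbooks"     # hypothetical project
        playbook: "patch.yml"        # hypothetical playbook
        inventory: "Production"      # hypothetical inventory
        job_slice_count: 3           # inventory is divided into 3 slices, one job per slice
        state: present
```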

- You can build a recursive workflow, but if automation controller detects an error, it stops at the time the nested workflow attempts to run.

- Artifacts gathered in jobs in the sub-workflow are passed to downstream nodes.

- You can set an inventory at the workflow level, or configure the workflow to prompt for an inventory on launch.

- When launched, all job templates in the workflow that have `ask_inventory_on_launch=true` use the workflow level inventory.

- Job templates that do not prompt for inventory ignore the workflow inventory and run against their own inventory.

- If a workflow prompts for inventory, schedules and other workflow nodes can provide the inventory.

- In a workflow convergence scenario, `set_stats` data is merged in an undefined way, therefore you must set unique keys.
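One way to keep `set_stats` keys unique when several parent jobs converge on the same node is to prefix each key with the name of the job that produces it. The following sketch shows one such parent playbook. The file name, key name, and value are hypothetical.

```yaml
---
# parent_a.yml (hypothetical): one of two parents of a convergence node.
- hosts: localhost
  gather_facts: false
  tasks:
    - name: "Publish an artifact under a key that only this job uses"
      set_stats:
        data:
          # A second parent, for example parent_b.yml, would publish
          # parent_b_result instead, so the merged artifacts never collide.
          parent_a_result: "passed"
```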

22.2. Workflow extra variables

Workflows use surveys to specify variables to be used in the playbooks in the workflow, called extra_vars. Survey variables are combined with extra_vars defined on the workflow job template, and saved to the workflow job extra_vars. extra_vars in the workflow job are combined with job template variables when spawning jobs within the workflow.

Workflows use the same behavior (hierarchy) of variable precedence as job templates with the exception of three additional variables. See the Automation controller Variable Precedence Hierarchy in the Extra variables section of Job templates. The three additional variables include:

- Workflow job template extra variables

- Workflow job template survey (defaults)

- Workflow job launch extra variables

Workflows included in a workflow follow the same variable precedence. They only inherit variables if they specifically prompt for them, or if the variables are defined as part of a survey.
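To show where two of these sources are defined, the following sketch sets extra variables on a workflow job template and then supplies a different value at launch. It assumes the `awx.awx` collection is installed and controller credentials come from environment variables. The workflow name and the `release_channel` variable are hypothetical, and which value a spawned job finally sees depends on the precedence hierarchy referenced above.

```yaml
---
# Sketch only: the workflow name and variable are hypothetical placeholders.
- hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: "Define workflow job template extra variables"
      awx.awx.workflow_job_template:
        name: "Release workflow"
        organization: "Default"
        extra_vars:
          release_channel: "staging"
        ask_variables_on_launch: true   # allow extra variables at launch
        state: present

    - name: "Launch the workflow with launch-time extra variables"
      awx.awx.workflow_launch:
        workflow_template: "Release workflow"
        organization: "Default"
        extra_vars:
          release_channel: "production"
```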

In addition to the workflow extra_vars, jobs and workflows run as part of a workflow can inherit variables in the artifacts dictionary of a parent job in the workflow (also combining with ancestors further upstream in its branch). These can be defined by the set_stats Ansible module.

If you use the set_stats module in your playbook, you can produce results that can be consumed downstream by another job.

Example

Notifying users as to the success or failure of an integration run. In this example, there are two playbooks that can be combined in a workflow to exercise artifact passing:

- invoke_set_stats.yml: first playbook in the workflow:

      ---
      - hosts: localhost
        tasks:
          - name: "Artifact integration test results to the web"
            local_action: 'shell curl -F "file=@integration_results.txt" https://file.io'
            register: result

          - name: "Artifact URL of test results to Workflows"
            set_stats:
              data:
                integration_results_url: "{{ (result.stdout|from_json).link }}"

- use_set_stats.yml: second playbook in the workflow:

      ---
      - hosts: localhost
        tasks:
          - name: "Get test results from the web"
            uri:
              url: "{{ integration_results_url }}"
              return_content: true
            register: results

          - name: "Output test results"
            debug:
              msg: "{{ results.content }}"

The set_stats module processes this workflow as follows:

- The contents of an integration result are uploaded to the web.

- Through the `invoke_set_stats` playbook, `set_stats` is then invoked to artifact the URL of the uploaded `integration_results.txt` into the Ansible variable "integration_results_url".

- The second playbook in the workflow consumes the Ansible extra variable "integration_results_url". It calls out to the web using the uri module to get the contents of the file uploaded by the previous job template job. Then, it prints out the contents of the obtained file.

For artifacts to work, keep the default setting, per_host = False in the set_stats module.
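As a small illustration, the following sketch writes the default out explicitly so that the intent is visible in the playbook. The `build_number` key and its value are hypothetical.

```yaml
---
- hosts: localhost
  gather_facts: false
  tasks:
    - name: "Record an artifact for downstream workflow nodes"
      set_stats:
        data:
          build_number: 42   # hypothetical artifact value
        per_host: false      # the default, stated explicitly
```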

22.3. Workflow states

The workflow job can have the following states:

- Waiting

- Running

- Success (finished)

- Cancel

- Error

- Failed

In the workflow scheme, canceling a job cancels the branch, while canceling the workflow job cancels the entire workflow.

22.4. Role-based access controls

To edit and delete a workflow job template, you must have the administrator role. To create a workflow job template, you must be an organization administrator or a system administrator. However, you can run a workflow job template that contains job templates that you do not have permissions for. System administrators can create a blank workflow and then grant an admin_role to a low-level user, after which they can delegate more access and build the graph. You must have execute access to a job template to add it to a workflow job template.
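The delegation described above might be sketched as follows with the `awx.awx.role` module, assuming the collection is installed and the play runs with system administrator credentials from environment variables. The user, workflow, and job template names are hypothetical, and the available parameters can differ between collection versions.

```yaml
---
# Sketch only: the user and resource names are hypothetical placeholders.
- hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: "Grant the admin role on a workflow job template"
      awx.awx.role:
        user: "jdoe"
        role: admin
        workflow: "Release workflow"
        state: present

    - name: "Grant execute access so the user can add this job template to the workflow"
      awx.awx.role:
        user: "jdoe"
        role: execute
        job_template: "Deploy web tier"
        state: present
```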

You can also perform other tasks, such as making a duplicate copy or re-launching a workflow, depending on which permissions are granted to a user. You must have permissions to all the resources used in a workflow, such as job templates, before relaunching or making a copy.

For more information, see Role-based access controls.

For more information on performing the tasks described in this section, see the Administration Guide.