16.2. PCI Device Assignment with SR-IOV Devices

A PCI network device (specified in the domain XML by the <source> element) can be directly connected to the guest using direct device assignment (sometimes referred to as passthrough). Due to limitations in standard single-port PCI Ethernet card driver design, only Single Root I/O Virtualization (SR-IOV) virtual function (VF) devices can be assigned in this manner; to assign a standard single-port PCI or PCIe Ethernet card to a guest, use the traditional <hostdev> device definition.

Figure 16.9. XML example for PCI device assignment

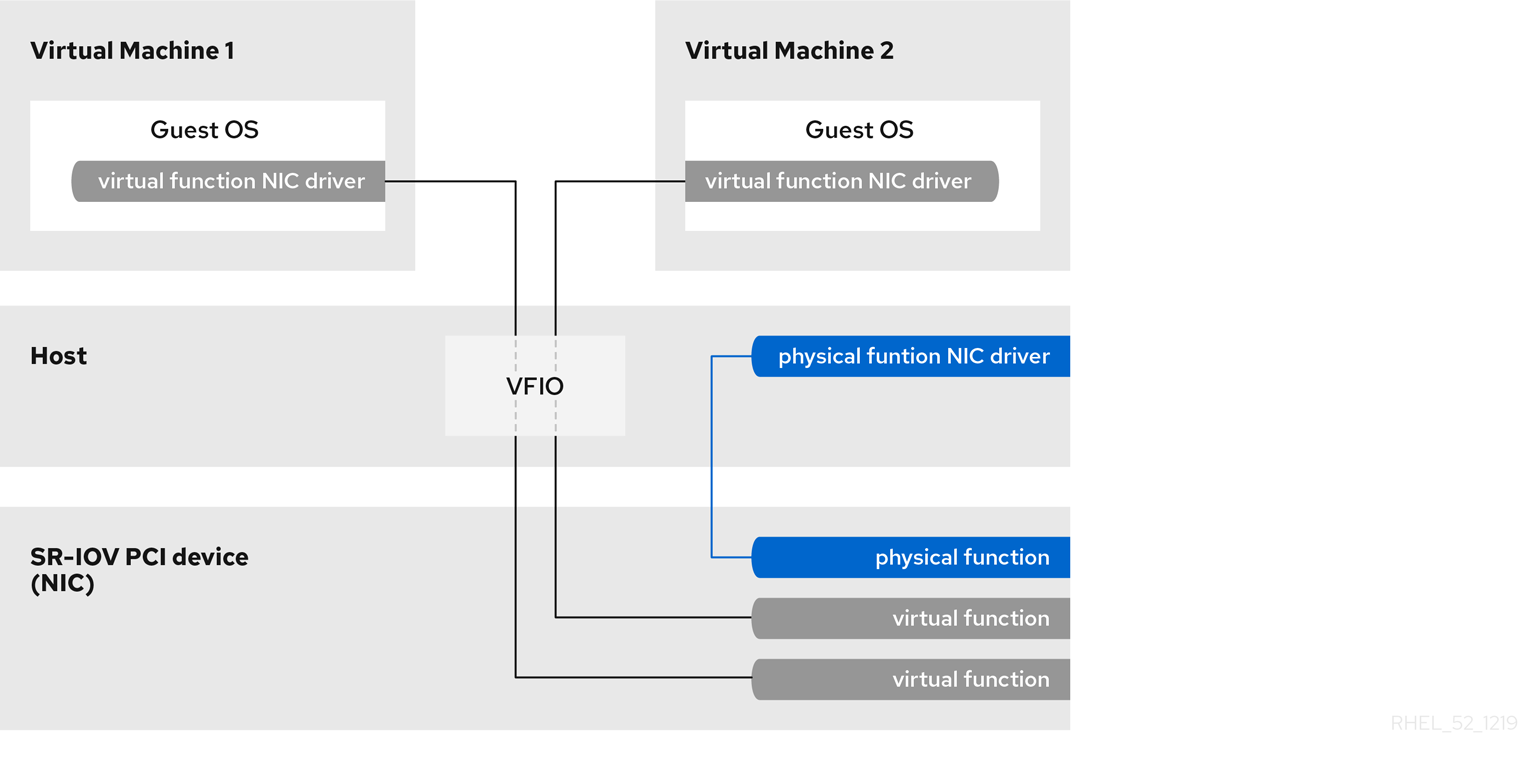

Figure 16.10. How SR-IOV works

- Physical Functions (PFs) are full PCIe devices that include the SR-IOV capabilities. Physical Functions are discovered, managed, and configured as normal PCI devices. Physical Functions configure and manage the SR-IOV functionality by assigning Virtual Functions.

- Virtual Functions (VFs) are simple PCIe functions that only process I/O. Each Virtual Function is derived from a Physical Function. The number of Virtual Functions a device may have is limited by the device hardware. A single Ethernet port, the Physical Device, may map to many Virtual Functions that can be shared with virtual machines.
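The relationship between a Physical Function and its Virtual Functions is visible in sysfs: the PF's device directory holds one `virtfnN` symlink per active VF. The following is a minimal sketch, not a definitive tool. The function name `list_vfs` and the interface name in the usage note are assumptions made for illustration.

```shell
#!/bin/sh
# List the PCI addresses of the VFs that belong to one PF.
# Argument: the PF's sysfs device directory.
list_vfs() {
    pf_dir=$1
    for link in "$pf_dir"/virtfn*; do
        # With no VFs the glob stays unexpanded; skip that case.
        [ -L "$link" ] || continue
        # Each virtfnN symlink points at the VF's own PCI device directory.
        basename "$(readlink "$link")"
    done
}
```

On a host whose PF is named enp14s0f0 (an assumed name), it could be called as `list_vfs /sys/class/net/enp14s0f0/device`.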

16.2.1. Advantages of SR-IOV

16.2.2. Using SR-IOV

An SR-IOV Virtual Function (VF) can be assigned to a virtual machine by adding a device entry in <hostdev> with the virsh edit or virsh attach-device command. However, this can be problematic because, unlike a regular network device, an SR-IOV VF network device does not have a permanent unique MAC address and is assigned a new MAC address each time the host is rebooted. Because of this, even if the guest is assigned the same VF after a host reboot, the guest sees its network adapter with a new MAC address. As a result, the guest believes there is new hardware connected each time, and will usually require re-configuration of the guest's network settings.

To avoid this problem, libvirt provides the <interface type='hostdev'> interface device. Using this interface device, libvirt will first perform any network-specific hardware/switch initialization indicated (such as setting the MAC address, VLAN tag, or 802.1Qbh virtualport parameters), then perform the PCI device assignment to the guest.

Using the <interface type='hostdev'> interface device requires:

- an SR-IOV-capable network card,

- host hardware that supports either the Intel VT-d or the AMD IOMMU extensions,

- the PCI address of the VF to be assigned.
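The IOMMU requirement in this list can be checked on a running host, because the kernel exposes one directory per IOMMU group under /sys/kernel/iommu_groups when the IOMMU is active. The sketch below only counts those directories. The function name `iommu_group_count` is an assumption, and a count of zero is a hint rather than proof that VT-d or AMD IOMMU is disabled.

```shell
#!/bin/sh
# Count IOMMU groups under a sysfs root (default: the live /sys).
# Zero groups usually means the IOMMU is disabled in firmware or kernel.
iommu_group_count() {
    root=${1:-/sys}
    count=0
    for group in "$root"/kernel/iommu_groups/*; do
        # An unexpanded glob is not a directory, so an empty tree counts as 0.
        [ -d "$group" ] && count=$((count + 1))
    done
    echo "$count"
}
```

A caller might use it as `[ "$(iommu_group_count)" -gt 0 ] || echo "IOMMU appears to be disabled"`.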

Important

Procedure 16.8. Attach an SR-IOV network device on an Intel or AMD system

Enable Intel VT-d or the AMD IOMMU specifications in the BIOS and kernel

On an Intel system, enable Intel VT-d in the BIOS if it is not enabled already. See Procedure 16.1, “Preparing an Intel system for PCI device assignment” for procedural help on enabling Intel VT-d in the BIOS and kernel. Skip this step if Intel VT-d is already enabled and working.

On an AMD system, enable the AMD IOMMU specifications in the BIOS if they are not enabled already. See Procedure 16.2, “Preparing an AMD system for PCI device assignment” for procedural help on enabling IOMMU in the BIOS.

Verify support

Verify that the PCI device with SR-IOV capabilities is detected. This example lists an Intel 82576 network interface card, which supports SR-IOV. Use the lspci command to verify whether the device was detected.

    # lspci
    03:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)
    03:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

Note that the output has been modified to remove all other devices.

Activate Virtual Functions
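The sriov_numvfs attribute written in this step sits next to sriov_totalvfs, which reports the hardware limit. As a hedged sketch, the helper below checks the requested count against that limit before writing. The name `set_num_vfs` is hypothetical, and the helper assumes no VFs are currently enabled on the PF.

```shell
#!/bin/sh
# Write a VF count to a PF only if the hardware limit allows it.
# Arguments: PF sysfs device directory, requested number of VFs.
set_num_vfs() {
    pf_dir=$1
    want=$2
    max=$(cat "$pf_dir/sriov_totalvfs") || return 1
    if [ "$want" -gt "$max" ]; then
        echo "requested $want VFs, device supports at most $max" >&2
        return 1
    fi
    # If VFs are already enabled, the kernel typically expects the count
    # to be reset to 0 first; that reset is deliberately left to the caller.
    echo "$want" > "$pf_dir/sriov_numvfs"
}
```

Run as root, a call such as `set_num_vfs /sys/class/net/enp14s0f0/device 2` would have the same effect as the echo command in this step, with the extra limit check.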

Run the following command:

    # echo ${num_vfs} > /sys/class/net/enp14s0f0/device/sriov_numvfs

Make the Virtual Functions persistent

To make the Virtual Functions persistent across reboots, use the editor of your choice to create a udev rule similar to the following, where you specify the intended number of VFs (in this example, 2), up to the limit supported by the network interface card. In the following example, replace enp14s0f0 with the PF network device name(s) and adjust the value of ENV{ID_NET_DRIVER} to match the driver in use:

    # vim /etc/udev/rules.d/enp14s0f0.rules

    ACTION=="add", SUBSYSTEM=="net", ENV{ID_NET_DRIVER}=="ixgbe", ATTR{device/sriov_numvfs}="2"

This ensures the feature is enabled at boot time.

Inspect the new Virtual Functions

Using the lspci command, list the newly added Virtual Functions attached to the Intel 82576 network device. (Alternatively, use grep to search for Virtual Function, to search for devices that support Virtual Functions.)

The identifier for the PCI device is found with the -n parameter of the lspci command. The Physical Functions correspond to 0b:00.0 and 0b:00.1. All Virtual Functions have Virtual Function in the description.

Verify devices exist with virsh

The libvirt service must recognize the device before adding a device to a virtual machine. libvirt uses a similar notation to the lspci output. All punctuation characters, : and ., in lspci output are changed to underscores (_).

Use the virsh nodedev-list command and the grep command to filter the Intel 82576 network device from the list of available host devices. 0b is the filter for the Intel 82576 network devices in this example. This may vary for your system and may result in additional devices.

The PCI addresses for the Virtual Functions and Physical Functions should be in the list.

Get device details with virsh

pci_0000_0b_00_0 is one of the Physical Functions and pci_0000_0b_10_0 is the first corresponding Virtual Function for that Physical Function. Use the virsh nodedev-dumpxml command to get device details for both devices.

This example adds the Virtual Function pci_0000_03_10_2 to the virtual machine in the last step of this procedure. Note the bus, slot, and function parameters of the Virtual Function: these are required for adding the device.

Copy these parameters into a temporary XML file, such as /tmp/new-interface.xml.

    <interface type='hostdev' managed='yes'>
      <source>
        <address type='pci' domain='0x0000' bus='0x03' slot='0x10' function='0x2'/>
      </source>
    </interface>

Note

When the virtual machine starts, it should see a network device of the type provided by the physical adapter, with the configured MAC address. This MAC address will remain unchanged across host and guest reboots.

The following <interface> example shows the syntax for the optional <mac address>, <virtualport>, and <vlan> elements. In practice, use either the <vlan> or <virtualport> element, not both simultaneously as shown in the example:

If you do not specify a MAC address, one will be automatically generated. The <virtualport> element is only used when connecting to an 802.1Qbh hardware switch. The <vlan> element will transparently put the guest's device on the VLAN tagged 42.

Add the Virtual Function to the virtual machine

Add the Virtual Function to the virtual machine using the following command with the temporary file created in the previous step. This attaches the new device immediately and saves it for subsequent guest restarts.

    # virsh attach-device MyGuest /tmp/new-interface.xml --live --config

Specifying the --live option with virsh attach-device attaches the new device to the running guest. Using the --config option ensures the new device is available after future guest restarts.

Note

The --live option is only accepted when the guest is running. virsh will return an error if the --live option is used on a non-running guest.
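The address handling in this procedure (lspci notation, the libvirt node device name, and the bus, slot, and function attributes in the XML file) is mechanical, so it can be scripted. The sketch below assumes PCI domain 0000 and an lspci-style address of the form bus:slot.function. The function names `nodedev_name` and `vf_interface_xml` are hypothetical helpers, not part of libvirt.

```shell
#!/bin/sh
# Convert an lspci-style address (for example 03:10.2) into the node
# device name libvirt uses, assuming PCI domain 0000.
nodedev_name() {
    echo "pci_0000_$1" | tr ':.' '__'
}

# Emit an <interface type='hostdev'> definition for the same address.
vf_interface_xml() {
    bus=${1%%:*}        # text before the colon
    rest=${1#*:}
    slot=${rest%%.*}    # text between the colon and the dot
    func=${rest#*.}     # text after the dot
    cat <<EOF
<interface type='hostdev' managed='yes'>
  <source>
    <address type='pci' domain='0x0000' bus='0x$bus' slot='0x$slot' function='0x$func'/>
  </source>
</interface>
EOF
}
```

For example, `vf_interface_xml 03:10.2 > /tmp/new-interface.xml` would produce a file of the shape used with virsh attach-device in this procedure.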

16.2.3. Configuring PCI Assignment with SR-IOV Devices

SR-IOV VFs can be assigned to guest virtual machines in the traditional manner using the <hostdev> element. However, SR-IOV VF network devices do not have permanent unique MAC addresses, which causes problems where the guest virtual machine's network settings need to be re-configured each time the host physical machine is rebooted. To fix this, you need to set the MAC address prior to assigning the VF to the guest virtual machine after every boot of the host physical machine. To assign this MAC address, as well as other options, see the following procedure:

Procedure 16.9. Configuring MAC addresses, vLAN, and virtual ports for assigning PCI devices on SR-IOV

The <hostdev> element cannot be used for function-specific items like MAC address assignment, vLAN tag ID assignment, or virtual port assignment, because the <mac>, <vlan>, and <virtualport> elements are not valid children for <hostdev>. Instead, these elements can be used with the hostdev interface type: <interface type='hostdev'>. This device type behaves as a hybrid of an <interface> and <hostdev>. Thus, before assigning the PCI device to the guest virtual machine, libvirt initializes the network-specific hardware/switch that is indicated in the guest virtual machine's XML configuration file (such as setting the MAC address, setting a vLAN tag, or associating with an 802.1Qbh switch). For information on setting the vLAN tag, see Section 17.16, “Setting vLAN Tags”.

Gather information

In order to use <interface type='hostdev'>, you must have an SR-IOV-capable network card, host physical machine hardware that supports either the Intel VT-d or AMD IOMMU extensions, and you must know the PCI address of the VF that you wish to assign.

Shut down the guest virtual machine

Using the virsh shutdown command, shut down the guest virtual machine (here named guestVM).

    # virsh shutdown guestVM

Open the XML file for editing

    # virsh edit guestVM

Optional: For the XML configuration file that was created by the virsh save command, run:

    # virsh save-image-edit guestVM.xml --running

The guest's XML configuration (referred to in this example as guestVM.xml) opens in your default editor. For more information, see Section 20.7.5, “Editing the Guest Virtual Machine Configuration”.

Edit the XML file

Update the configuration file (guestVM.xml) to have a <devices> entry similar to the following:

Figure 16.11. Sample domain XML for hostdev interface type
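As an illustration of what such a <devices> entry might contain, the sketch below prints a hostdev interface with a fixed MAC address and exactly one of <vlan> or <virtualport>. The function name `hostdev_interface`, the PCI address, and the values in the usage note (MAC address, profile ID) are placeholders chosen for this example, not values from a real host.

```shell
#!/bin/sh
# Print a <devices> fragment with a hostdev interface.
# Arguments: MAC address, then "vlan <tag>" or "virtualport <profileid>".
# The PCI address below is a placeholder.
hostdev_interface() {
    mac=$1
    kind=$2
    value=$3
    case $kind in
        vlan)
            extra="<vlan><tag id='$value'/></vlan>" ;;
        virtualport)
            extra="<virtualport type='802.1Qbh'><parameters profileid='$value'/></virtualport>" ;;
        *)
            # Refuse anything else so the two elements are never combined.
            echo "use vlan or virtualport" >&2
            return 1 ;;
    esac
    cat <<EOF
<devices>
  <interface type='hostdev' managed='yes'>
    <source>
      <address type='pci' domain='0x0000' bus='0x03' slot='0x10' function='0x2'/>
    </source>
    <mac address='$mac'/>
    $extra
  </interface>
</devices>
EOF
}
```

Calling `hostdev_interface 52:54:00:6d:90:02 vlan 42` prints an entry that pins the MAC address and tags the guest's traffic with VLAN 42. Passing `virtualport finance` instead would select an 802.1Qbh profile with the hypothetical ID finance.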

Note

If you do not provide a MAC address, one will be automatically generated, just as with any other type of interface device. In addition, the <virtualport> element is only used if you are connecting to an 802.1Qbh hardware switch. 802.1Qbg (also known as "VEPA") switches are currently not supported.

Restart the guest virtual machine

Run the virsh start command to restart the guest virtual machine you shut down earlier in this procedure. See Section 20.6, “Starting, Resuming, and Restoring a Virtual Machine” for more information.

    # virsh start guestVM

When the guest virtual machine starts, it sees the network device provided to it by the physical host machine's adapter, with the configured MAC address. This MAC address remains unchanged across guest virtual machine and host physical machine reboots.

16.2.4. Setting PCI device assignment from a pool of SR-IOV virtual functions

- The specified VF must be available any time the guest virtual machine is started. Therefore, the administrator must permanently assign each VF to a single guest virtual machine (or modify the configuration file for every guest virtual machine to specify a currently unused VF's PCI address each time every guest virtual machine is started).

- If the guest virtual machine is moved to another host physical machine, that host physical machine must have exactly the same hardware in the same location on the PCI bus (or the guest virtual machine configuration must be modified prior to start).

Procedure 16.10. Creating a device pool

Shut down the guest virtual machine

Using the virsh shutdown command, shut down the guest virtual machine, here named guestVM.

    # virsh shutdown guestVM

Create a configuration file

Using your editor of choice, create an XML file (named passthrough.xml, for example) in the /tmp directory. Make sure to replace pf dev='eth3' with the netdev name of your own SR-IOV device's Physical Function (PF).

The following is an example network definition that will make available a pool of all VFs for the SR-IOV adapter with its PF at 'eth3' on the host physical machine:

Figure 16.12. Sample network definition domain XML
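A network definition of the kind this step describes might be produced as in the following sketch. It relies on libvirt's hostdev forward mode with a <pf> element. The function name `vf_pool_network` is an assumption, and both the network name and the PF netdev name are supplied by the caller.

```shell
#!/bin/sh
# Print a libvirt network definition that exposes all VFs of one PF
# as a pool. Arguments: network name, PF netdev name.
vf_pool_network() {
    cat <<EOF
<network>
  <name>$1</name>
  <forward mode='hostdev' managed='yes'>
    <pf dev='$2'/>
  </forward>
</network>
EOF
}
```

For the names used in this procedure, `vf_pool_network passthrough eth3 > /tmp/passthrough.xml` would write the file that the next step loads.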

Load the new XML file

Enter the following command, replacing /tmp/passthrough.xml with the name and location of the XML file you created in the previous step:

    # virsh net-define /tmp/passthrough.xml

Start the network

Run the following commands, replacing passthrough with the name of the network defined in the XML file you created earlier:

    # virsh net-autostart passthrough
    # virsh net-start passthrough

Re-start the guest virtual machine

Run the virsh start command to restart the guest virtual machine you shut down in the first step (this example uses guestVM as the guest virtual machine's domain name). See Section 20.6, “Starting, Resuming, and Restoring a Virtual Machine” for more information.

    # virsh start guestVM

Initiating passthrough for devices

Although only a single device is shown, libvirt will automatically derive the list of all VFs associated with that PF the first time a guest virtual machine is started with an interface definition in its domain XML like the following:

Figure 16.13. Sample domain XML for interface network definition
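An interface definition that draws a VF from the pool, rather than naming a fixed PCI address, might look like the output of the following sketch. The function name `pool_interface` is hypothetical, and the network name is whatever was given in the network definition.

```shell
#!/bin/sh
# Print an <interface> that takes a VF from a named libvirt network
# instead of hard-coding a PCI address. Argument: network name.
pool_interface() {
    cat <<EOF
<interface type='network'>
  <source network='$1'/>
</interface>
EOF
}
```

With the network from this procedure, `pool_interface passthrough` prints the element to place inside the guest's <devices> section.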

Verification

You can verify this by running the virsh net-dumpxml passthrough command after starting the first guest that uses the network; you will get output similar to the following:

Figure 16.14. XML dump file passthrough contents

16.2.5. SR-IOV Restrictions

- Intel® 82576NS Gigabit Ethernet Controller (igb driver)

- Intel® 82576EB Gigabit Ethernet Controller (igb driver)

- Intel® 82599ES 10 Gigabit Ethernet Controller (ixgbe driver)

- Intel® 82599EB 10 Gigabit Ethernet Controller (ixgbe driver)