Chapter 23. OVN-Kubernetes network plugin

23.1. About the OVN-Kubernetes network plugin

The OpenShift Container Platform cluster uses a virtualized network for pod and service networks.

Part of Red Hat OpenShift Networking, the OVN-Kubernetes network plugin is the default network provider for OpenShift Container Platform. OVN-Kubernetes is based on Open Virtual Network (OVN) and provides an overlay-based networking implementation. A cluster that uses the OVN-Kubernetes plugin also runs Open vSwitch (OVS) on each node. OVN configures OVS on each node to implement the declared network configuration.

OVN-Kubernetes is the default networking solution for OpenShift Container Platform and single-node OpenShift deployments.

OVN-Kubernetes, which arose from the OVS project, uses many of the same constructs, such as OpenFlow rules, to determine how packets travel through the network. For more information, see the Open Virtual Network website.

OVN-Kubernetes is a series of daemons for OVS that translate virtual network configurations into OpenFlow rules. OpenFlow is a protocol for communicating with network switches and routers, providing a means for remotely controlling the flow of network traffic on a network device, allowing network administrators to configure, manage, and monitor the flow of network traffic.

OVN-Kubernetes provides advanced functionality that is not available with OpenFlow alone. OVN supports distributed virtual routing, distributed logical switches, access control, DHCP, and DNS. OVN implements distributed virtual routing within logical flows, which equate to OpenFlow flows. For example, if a pod broadcasts a DHCP request on the network, a logical flow rule matches that packet and responds with a gateway, a DNS server, an IP address, and so on.

OVN-Kubernetes runs a daemon on each node. There are daemon sets for the databases and for the OVN controller that run on every node. The OVN controller programs the Open vSwitch daemon on the nodes to support the network provider features: egress IPs, firewalls, routers, hybrid networking, IPsec encryption, IPv6, network policy, network policy logs, hardware offloading, and multicast.

23.1.1. OVN-Kubernetes purpose

The OVN-Kubernetes network plugin is an open-source, fully-featured Kubernetes CNI plugin. The OVN-Kubernetes network plugin:

- Uses OVN (Open Virtual Network) to manage network traffic flows. OVN is a community-developed, vendor-agnostic network virtualization solution.

- Implements Kubernetes network policy support, including ingress and egress rules.

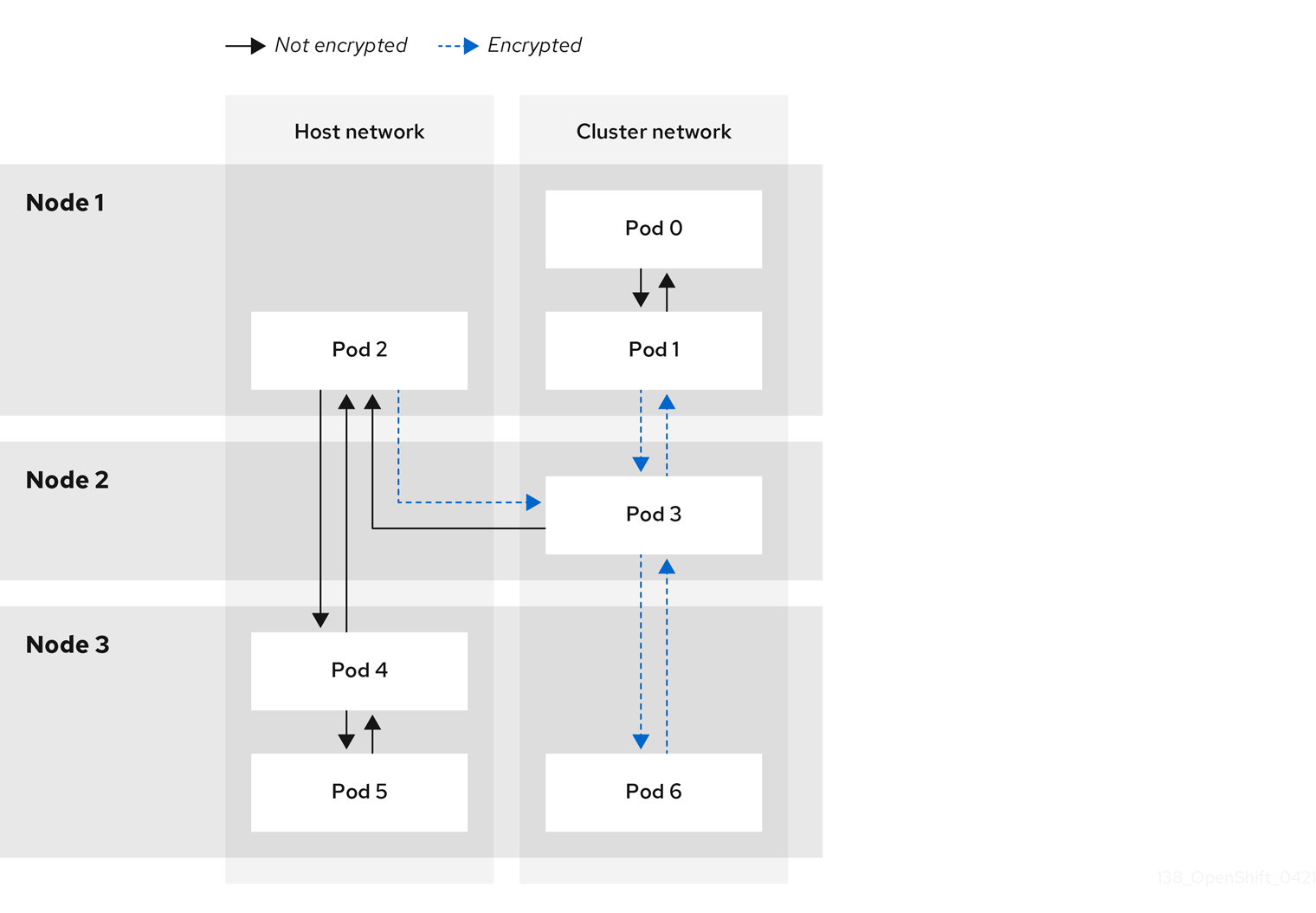

- Uses the Geneve (Generic Network Virtualization Encapsulation) protocol rather than VXLAN to create an overlay network between nodes.

The OVN-Kubernetes network plugin provides the following advantages over OpenShift SDN:

- Full support for IPv6 single-stack and IPv4/IPv6 dual-stack networking on supported platforms

- Support for hybrid clusters with both Linux and Microsoft Windows workloads

- Optional IPsec encryption of intra-cluster communications

- Offload of network data processing from host CPU to compatible network cards and data processing units (DPUs)

23.1.2. Supported network plugin feature matrix

Red Hat OpenShift Networking offers two options for the network plugin: OpenShift SDN and OVN-Kubernetes. The following table summarizes the current feature support for both network plugins:

| Feature | OpenShift SDN | OVN-Kubernetes |
|---|---|---|
| Egress IPs | Supported | Supported |
| Egress firewall | Supported | Supported [1] |
| Egress router | Supported | Supported [2] |
| Hybrid networking | Not supported | Supported |
| IPsec encryption for intra-cluster communication | Not supported | Supported |
| IPv4 single-stack | Supported | Supported |
| IPv6 single-stack | Not supported | Supported [3] |
| IPv4/IPv6 dual-stack | Not supported | Supported [4] |
| IPv6/IPv4 dual-stack | Not supported | Supported [5] |
| Kubernetes network policy | Supported | Supported |
| Kubernetes network policy logs | Not supported | Supported |
| Hardware offloading | Not supported | Supported |
| Multicast | Supported | Supported |

- [1] Egress firewall is also known as egress network policy in OpenShift SDN. This is not the same as network policy egress.

- [2] Egress router for OVN-Kubernetes supports only redirect mode.

- [3] IPv6 single-stack networking on a bare-metal platform.

- [4] IPv4/IPv6 dual-stack networking on bare-metal, IBM Power®, and IBM Z® platforms.

- [5] IPv6/IPv4 dual-stack networking on bare-metal and IBM Power® platforms.

23.1.3. OVN-Kubernetes IPv6 and dual-stack limitations

The OVN-Kubernetes network plugin has the following limitations:

- For clusters configured for dual-stack networking, both IPv4 and IPv6 traffic must use the same network interface as the default gateway. If this requirement is not met, pods on the host in the ovnkube-node daemon set enter the CrashLoopBackOff state. If you display a pod with a command such as oc get pod -n openshift-ovn-kubernetes -l app=ovnkube-node -o yaml, the status field contains more than one message about the default gateway, as shown in the following output:

  I1006 16:09:50.985852 60651 helper_linux.go:73] Found default gateway interface br-ex 192.168.127.1
  I1006 16:09:50.985923 60651 helper_linux.go:73] Found default gateway interface ens4 fe80::5054:ff:febe:bcd4
  F1006 16:09:50.985939 60651 ovnkube.go:130] multiple gateway interfaces detected: br-ex ens4

  The only resolution is to reconfigure the host networking so that both IP families use the same network interface for the default gateway.

- For clusters configured for dual-stack networking, both the IPv4 and IPv6 routing tables must contain the default gateway. If this requirement is not met, pods on the host in the ovnkube-node daemon set enter the CrashLoopBackOff state. If you display a pod with a command such as oc get pod -n openshift-ovn-kubernetes -l app=ovnkube-node -o yaml, the status field contains more than one message about the default gateway, as shown in the following output:

  I0512 19:07:17.589083 108432 helper_linux.go:74] Found default gateway interface br-ex 192.168.123.1
  F0512 19:07:17.589141 108432 ovnkube.go:133] failed to get default gateway interface

  The only resolution is to reconfigure the host networking so that both IP families contain the default gateway.
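Both dual-stack requirements can be checked on a host before the ovnkube-node pods start failing. The following is a minimal sketch, not part of the product: the helper names default_route_devs and check_dual_stack_gateway are hypothetical, and the sketch assumes that the default-route lines printed by ip route show default contain the interface name after the word dev.

```shell
#!/bin/sh
# Hypothetical helper: read default-route lines on standard input and print
# the interface names that follow "dev", de-duplicated and comma-separated.
default_route_devs() {
    awk '$1 == "default" { for (i = 2; i < NF; i++) if ($i == "dev") print $(i + 1) }' |
        sort -u | paste -sd, -
}

# Hypothetical helper: compare the IPv4 and IPv6 default routes.
#   $1 = output of: ip -4 route show default
#   $2 = output of: ip -6 route show default
check_dual_stack_gateway() {
    v4=$(printf '%s\n' "$1" | default_route_devs)
    v6=$(printf '%s\n' "$2" | default_route_devs)
    if [ -z "$v4" ] || [ -z "$v6" ]; then
        # One routing table has no default gateway.
        echo "missing default gateway (IPv4: ${v4:-none}, IPv6: ${v6:-none})"
        return 1
    fi
    if [ "$v4" != "$v6" ]; then
        # The two IP families use different gateway interfaces.
        echo "different gateway interfaces (IPv4: $v4, IPv6: $v6)"
        return 1
    fi
    echo "ok: $v4"
}

# Usage on a node (not executed here):
#   check_dual_stack_gateway "$(ip -4 route show default)" "$(ip -6 route show default)"
```

A non-zero return status corresponds to one of the two failure cases described above. The check only reads the routing tables and does not change the host configuration.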

23.1.4. Session affinity

Session affinity is a feature that applies to Kubernetes Service objects. You can use session affinity if you want to ensure that each time you connect to a <service_VIP>:<Port>, the traffic is always load balanced to the same back end. For more information, including how to set session affinity based on a client’s IP address, see Session affinity.

Stickiness timeout for session affinity

The OVN-Kubernetes network plugin for OpenShift Container Platform calculates the stickiness timeout for a session from a client based on the last packet. For example, if you run a curl command 10 times, the sticky session timer starts from the tenth packet, not the first. As a result, if the client continuously contacts the service, the session never times out. The session times out only after the service has not received a packet for the amount of time set by the timeoutSeconds parameter.
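As an illustration of where the timeoutSeconds parameter lives, the following sketch prints a Service manifest that enables client-IP session affinity. The function name session_affinity_manifest, the service name example-service, the selector, and the ports are all hypothetical. The default of 10800 seconds matches the Kubernetes default for this field.

```shell
#!/bin/sh
# Hypothetical helper: print a Service manifest with client-IP session affinity.
#   $1 = stickiness timeout in seconds (optional, defaults to 10800)
session_affinity_manifest() {
    timeout="${1:-10800}"
    cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: example-service
spec:
  selector:
    app: example
  ports:
  - port: 80
    targetPort: 8080
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: ${timeout}
EOF
}

# Usage against a cluster (not executed here):
#   session_affinity_manifest 600 | oc apply -f -
```

With a timeout of 600, a client that sends a packet every few minutes stays pinned to the same back end indefinitely, because each packet restarts the timer.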

23.2. OVN-Kubernetes architecture

23.2.1. Introduction to OVN-Kubernetes architecture

The following diagram shows the OVN-Kubernetes architecture.

Figure 23.1. OVN-Kubernetes architecture

The key components are:

- Cloud Management System (CMS) - A platform specific client for OVN that provides a CMS specific plugin for OVN integration. The plugin translates the cloud management system’s concept of the logical network configuration, stored in the CMS configuration database in a CMS-specific format, into an intermediate representation understood by OVN.

- OVN Northbound database (nbdb) - Stores the logical network configuration passed by the CMS plugin.

- OVN Southbound database (sbdb) - Stores the physical and logical network configuration state for the Open vSwitch (OVS) system on each node, including tables that bind them.

- ovn-northd - This is the intermediary client between nbdb and sbdb. It translates the logical network configuration in terms of conventional network concepts, taken from the nbdb, into logical data path flows in the sbdb below it. The container name is northd and it runs in the ovnkube-master pods.

- ovn-controller - This is the OVN agent that interacts with OVS and hypervisors for any information or update that is needed for sbdb. The ovn-controller reads logical flows from the sbdb, translates them into OpenFlow flows, and sends them to the node’s OVS daemon. The container name is ovn-controller and it runs in the ovnkube-node pods.

The OVN northbound database has the logical network configuration passed down to it by the cloud management system (CMS). The OVN northbound database contains the current desired state of the network, presented as a collection of logical ports, logical switches, logical routers, and more. The ovn-northd daemon (northd container) connects to the OVN northbound database and the OVN southbound database. It translates the logical network configuration in terms of conventional network concepts, taken from the OVN northbound database, into logical data path flows in the OVN southbound database.

The OVN southbound database has physical and logical representations of the network and binding tables that link them together. Every node in the cluster is represented in the southbound database, and you can see the ports that are connected to it. It also contains all the logical flows. The logical flows are shared with the ovn-controller process that runs on each node, and the ovn-controller turns them into OpenFlow rules to program Open vSwitch.

The Kubernetes control plane nodes each contain an ovnkube-master pod which hosts containers for the OVN northbound and southbound databases. All OVN northbound databases form a Raft cluster and all southbound databases form a separate Raft cluster. At any given time a single ovnkube-master is the leader and the other ovnkube-master pods are followers.

23.2.2. Listing all resources in the OVN-Kubernetes project

Finding the resources and containers that run in the OVN-Kubernetes project is important to help you understand the OVN-Kubernetes networking implementation.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

Procedure

Run the following command to get all resources, endpoints, and ConfigMaps in the OVN-Kubernetes project:

$ oc get all,ep,cm -n openshift-ovn-kubernetes

There are three ovnkube-master pods that run on the control plane nodes, and two daemon sets used to deploy the ovnkube-master and ovnkube-node pods. There is one ovnkube-node pod for each node in the cluster. In this example, there are five ovnkube-node pods, so the cluster has five nodes. The ovnkube-config ConfigMap has the OpenShift Container Platform OVN-Kubernetes configurations started by ovnkube-master and ovnkube-node. The ovn-kubernetes-master ConfigMap has the information of the current online master leader.

List all the containers in the ovnkube-master pods by running the following command:

$ oc get pods ovnkube-master-9g7zt -o jsonpath='{.spec.containers[*].name}' -n openshift-ovn-kubernetes

Expected output

northd nbdb kube-rbac-proxy sbdb ovnkube-master ovn-dbchecker

The ovnkube-master pod is made up of several containers. It is responsible for hosting the northbound database (nbdb container) and the southbound database (sbdb container), for watching cluster events for pods, egressIP, namespaces, services, endpoints, egress firewall, and network policy and writing them to the northbound database (ovnkube-master container), and for managing pod subnet allocation to nodes.

List all the containers in the ovnkube-node pods by running the following command:

$ oc get pods ovnkube-node-jg52r -o jsonpath='{.spec.containers[*].name}' -n openshift-ovn-kubernetes

Expected output

ovn-controller ovn-acl-logging kube-rbac-proxy kube-rbac-proxy-ovn-metrics ovnkube-node

The ovnkube-node pod has a container (ovn-controller) that resides on each OpenShift Container Platform node. Each node’s ovn-controller connects northbound to the OVN southbound database to learn about the OVN configuration. The ovn-controller connects southbound to ovs-vswitchd as an OpenFlow controller, for control over network traffic, and to the local ovsdb-server to allow it to monitor and control Open vSwitch configuration.

23.2.3. Listing the OVN-Kubernetes northbound database contents

To understand logical flow rules, you need to examine the northbound database and understand which objects it contains and how they are translated into logical flow rules. The most up-to-date information is present on the OVN Raft leader. This procedure describes how to find the Raft leader and then query it to list the OVN northbound database contents.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

Procedure

Find the OVN Raft leader for the northbound database.

Note: The Raft leader stores the most up-to-date information.

List the pods by running the following command:

$ oc get po -n openshift-ovn-kubernetes

Choose one of the master pods at random and run the following command:

$ oc exec -n openshift-ovn-kubernetes ovnkube-master-7j97q -- /usr/bin/ovn-appctl -t /var/run/ovn/ovnnb_db.ctl --timeout=3 cluster/status OVN_Northbound

Find the ovnkube-master pod running on the leader's IP address, 10.0.242.240 in this example, by using the following command:

$ oc get po -o wide -n openshift-ovn-kubernetes | grep 10.0.242.240 | grep -v ovnkube-node

Example output

ovnkube-master-gt4ms 6/6 Running 1 (143m ago) 150m 10.0.242.240 ip-10-0-242-240.ec2.internal <none> <none>

The ovnkube-master-gt4ms pod runs on IP Address 10.0.242.240.

Run the following command to show all the objects in the northbound database:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-gt4ms -c northd -- ovn-nbctl show

The output is too long to list here. The list includes the NAT rules, logical switches, load balancers, and so on.

Run the following command to display the options available with the command ovn-nbctl:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-gt4ms -c northd -- ovn-nbctl --help

You can narrow down and focus on specific components by using some of the following commands:

Run the following command to show the list of logical routers:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-gt4ms -c northd -- ovn-nbctl lr-list

Note: In the output, you can see that there is a router on each node plus an ovn_cluster_router.

Run the following command to show the list of logical switches:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-gt4ms -c northd -- ovn-nbctl ls-list

Note: In the output, you can see that there is an ext switch for each node, plus switches with the node name itself and a join switch.

Run the following command to show the list of load balancers:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-gt4ms -c northd -- ovn-nbctl lb-list

Note: In the output, you can see that there are many OVN-Kubernetes load balancers. Load balancers in OVN-Kubernetes are representations of services.
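The leader lookup in this procedure can be scripted instead of picking pods by hand. The following is a minimal sketch under stated assumptions: the helper names is_raft_leader and find_raft_leader are hypothetical, and the sketch assumes that the cluster/status output contains a line of the form Role: leader on the leading member, which is not shown in this document.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the cluster/status text on standard input
# reports the leader role (assumes a "Role: leader" line in that output).
is_raft_leader() {
    grep -Eq '^Role:[[:space:]]*leader[[:space:]]*$'
}

# Hypothetical helper: print the ovnkube-master pod that leads a database.
#   $1 = nb (northbound) or sb (southbound)
find_raft_leader() {
    case "$1" in
        nb) ctl=/var/run/ovn/ovnnb_db.ctl; db=OVN_Northbound ;;
        sb) ctl=/var/run/ovn/ovnsb_db.ctl; db=OVN_Southbound ;;
        *)  echo "usage: find_raft_leader nb|sb" >&2; return 2 ;;
    esac
    # Ask every ovnkube-master pod for its Raft status and stop at the leader.
    for pod in $(oc get pods -n openshift-ovn-kubernetes -l app=ovnkube-master -o name); do
        if oc exec -n openshift-ovn-kubernetes "$pod" -- \
            /usr/bin/ovn-appctl -t "$ctl" --timeout=3 cluster/status "$db" 2>/dev/null |
            is_raft_leader; then
            echo "$pod"
            return 0
        fi
    done
    return 1
}

# Usage against a cluster (not executed here):
#   find_raft_leader nb
```

Because the northbound and southbound databases form separate Raft clusters, the two lookups can return different pods.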

23.2.4. Command-line arguments for ovn-nbctl to examine northbound database contents

The following table describes the command-line arguments that can be used with ovn-nbctl to examine the contents of the northbound database.

| Argument | Description |
|---|---|
| | An overview of the northbound database contents. |
| | Show the details associated with the specified switch or router. |
| | Show the logical routers. |
| | Using the router information from |
| | Show network address translation details for the specified router. |
| | Show the logical switches. |
| | Using the switch information from |
| | Get the type for the logical port. |
| | Show the load balancers. |

23.2.5. Listing the OVN-Kubernetes southbound database contents

Logical flow rules are stored in the southbound database, which is a representation of your infrastructure. The most up-to-date information is present on the OVN Raft leader. This procedure describes how to find the Raft leader and query it to list the OVN southbound database contents.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

Procedure

Find the OVN Raft leader for the southbound database.

Note: The Raft leader stores the most up-to-date information.

List the pods by running the following command:

$ oc get po -n openshift-ovn-kubernetes

Choose one of the master pods at random and run the following command to find the OVN southbound Raft leader:

$ oc exec -n openshift-ovn-kubernetes ovnkube-master-7j97q -- /usr/bin/ovn-appctl -t /var/run/ovn/ovnsb_db.ctl --timeout=3 cluster/status OVN_Southbound

Find the ovnkube-master pod running on the leader's IP address, 10.0.163.212 in this example, by using the following command:

$ oc get po -o wide -n openshift-ovn-kubernetes | grep 10.0.163.212 | grep -v ovnkube-node

Example output

ovnkube-master-mk6p6 6/6 Running 0 136m 10.0.163.212 ip-10-0-163-212.ec2.internal <none> <none>

The ovnkube-master-mk6p6 pod runs on IP Address 10.0.163.212.

Run the following command to show all the information stored in the southbound database:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-mk6p6 -c northd -- ovn-sbctl show

The detailed output shows the chassis and the ports that are attached to each chassis, which in this case are all of the router ports and anything that runs with host networking. Pods communicate out to the wider network by using source network address translation (SNAT). Their IP address is translated into the IP address of the node that the pod is running on and then sent out into the network.

In addition to the chassis information, the southbound database has all the logical flows, which are sent to the ovn-controller running on each of the nodes. The ovn-controller translates the logical flows into OpenFlow rules and ultimately programs Open vSwitch so that traffic from your pods can follow the OpenFlow rules and make it out to the network.

Run the following command to display the options available with the command ovn-sbctl:

$ oc exec -n openshift-ovn-kubernetes -it ovnkube-master-mk6p6 -c northd -- ovn-sbctl --help

23.2.6. Command-line arguments for ovn-sbctl to examine southbound database contents

The following table describes the command-line arguments that can be used with ovn-sbctl to examine the contents of the southbound database.

| Argument | Description |
|---|---|
| | Overview of the southbound database contents. |
| | List the contents of the southbound database for the specified port. |
| | List the logical flows. |

23.2.7. OVN-Kubernetes logical architecture

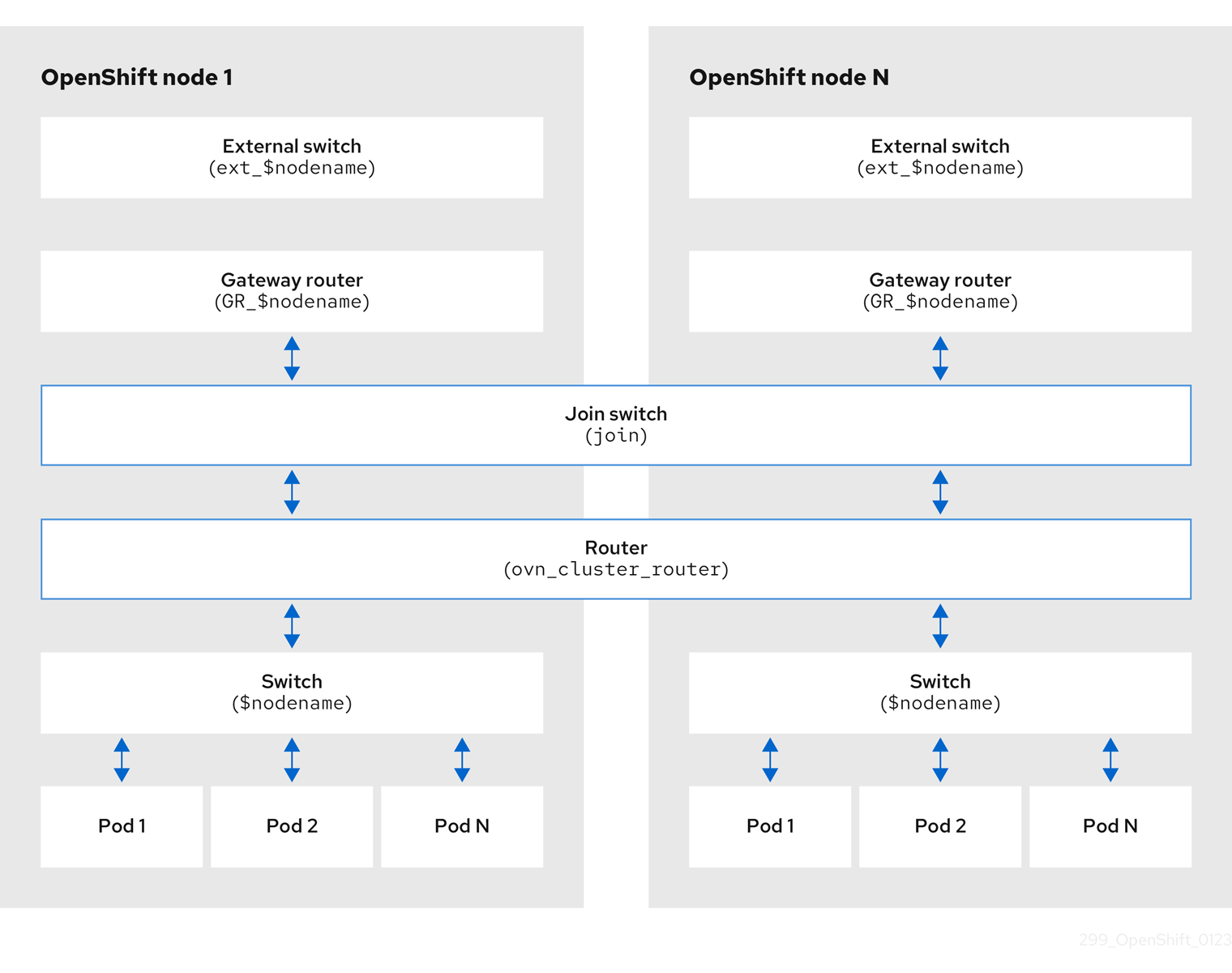

OVN is a network virtualization solution. It creates logical switches and routers, which are interconnected to create any network topology. When you run ovnkube-trace with the log level set to 2 or 5, the OVN-Kubernetes logical components are exposed. The following diagram shows how the routers and switches are connected in OpenShift Container Platform.

Figure 23.2. OVN-Kubernetes router and switch components

The key components involved in packet processing are:

- Gateway routers
  - Gateway routers, sometimes called L3 gateway routers, are typically used between the distributed routers and the physical network. Gateway routers, including their logical patch ports, are bound to a physical location (not distributed), or chassis. The patch ports on this router are known as l3gateway ports in the ovn-southbound database (ovn-sbdb).

- Distributed logical routers
  - Distributed logical routers and the logical switches behind them, to which virtual machines and containers attach, effectively reside on each hypervisor.

- Join local switch
  - Join local switches are used to connect the distributed router and gateway routers. They reduce the number of IP addresses needed on the distributed router.

- Logical switches with patch ports
  - Logical switches with patch ports are used to virtualize the network stack. They connect remote logical ports through tunnels.

- Logical switches with localnet ports
  - Logical switches with localnet ports are used to connect OVN to the physical network. They connect remote logical ports by bridging the packets to directly connected physical L2 segments using localnet ports.

- Patch ports
  - Patch ports represent connectivity between logical switches and logical routers and between peer logical routers. A single connection has a pair of patch ports at each such point of connectivity, one on each side.

- l3gateway ports
  - l3gateway ports are the port binding entries in the ovn-sbdb for logical patch ports used in the gateway routers. They are called l3gateway ports rather than patch ports to portray the fact that these ports are bound to a chassis, just like the gateway router itself.

- localnet ports
  - localnet ports are present on the bridged logical switches that allow a connection to a locally accessible network from each ovn-controller instance. This helps model the direct connectivity to the physical network from the logical switches. A logical switch can only have a single localnet port attached to it.

23.2.7.1. Installing network-tools on local host

Install network-tools on your local host to make a collection of tools available for debugging OpenShift Container Platform cluster network issues.

Procedure

Clone the network-tools repository onto your workstation with the following command:

$ git clone git@github.com:openshift/network-tools.git

Change into the directory for the repository you just cloned:

$ cd network-tools

Optional: List all available commands:

$ ./debug-scripts/network-tools -h

23.2.7.2. Running network-tools

Get information about the logical switches and routers by running network-tools.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster as a user with cluster-admin privileges.

- You have installed network-tools on local host.

Procedure

List the routers by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command ovn-nbctl lr-list

List the localnet ports by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command ovn-sbctl find Port_Binding type=localnet

List the l3gateway ports by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command ovn-sbctl find Port_Binding type=l3gateway

List the patch ports by running the following command:

$ ./debug-scripts/network-tools ovn-db-run-command ovn-sbctl find Port_Binding type=patch

23.3. Troubleshooting OVN-Kubernetes

OVN-Kubernetes has many sources of built-in health checks and logs.

23.3.1. Monitoring OVN-Kubernetes health by using readiness probes

The ovnkube-master and ovnkube-node pods have containers configured with readiness probes.

Prerequisites

- Access to the OpenShift CLI (oc).

- You have access to the cluster with cluster-admin privileges.

- You have installed jq.

Procedure

Review the details of the ovnkube-master readiness probe by running the following command:

$ oc get pods -n openshift-ovn-kubernetes -l app=ovnkube-master -o json | jq '.items[0].spec.containers[] | .name,.readinessProbe'

The readiness probe for the northbound and southbound database containers in the ovnkube-master pod checks for the health of the Raft cluster hosting the databases.

Review the details of the ovnkube-node readiness probe by running the following command:

$ oc get pods -n openshift-ovn-kubernetes -l app=ovnkube-node -o json | jq '.items[0].spec.containers[] | .name,.readinessProbe'

The ovnkube-node container in the ovnkube-node pod has a readiness probe to verify the presence of the ovn-kubernetes CNI configuration file, the absence of which would indicate that the pod is not running or is not ready to accept requests to configure pods.

Show all events, including the probe failures, for the namespace by using the following command:

$ oc get events -n openshift-ovn-kubernetes

Show the events for a single pod:

$ oc describe pod ovnkube-master-tp2z8 -n openshift-ovn-kubernetes

Show the messages and statuses from the cluster network operator:

$ oc get co/network -o json | jq '.status.conditions[]'

Show the ready status of each container in ovnkube-master pods by running the following script:

$ for p in $(oc get pods --selector app=ovnkube-master -n openshift-ovn-kubernetes -o jsonpath='{range.items[*]}{" "}{.metadata.name}'); do echo === $p ===; oc get pods -n openshift-ovn-kubernetes $p -o json | jq '.status.containerStatuses[] | .name, .ready'; done

Note: The expectation is that all container statuses report true. Failure of a readiness probe sets the status to false.
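On a busy cluster it can be easier to print only the containers that are not ready. The following is a minimal sketch that filters the alternating name and ready lines produced by the jq filter .status.containerStatuses[] | .name, .ready. The helper name not_ready_containers is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read alternating name/ready lines on standard input and
# print the names of containers whose ready value is not "true".
not_ready_containers() {
    awk 'NR % 2 == 1 { name = $0; gsub(/"/, "", name); next }  # odd lines: container name
         $0 != "true" { print name }                           # even lines: ready flag
    '
}

# Usage against a cluster, with a pod name taken from this document (not executed here):
#   oc get pods -n openshift-ovn-kubernetes ovnkube-master-tp2z8 -o json |
#       jq '.status.containerStatuses[] | .name, .ready' | not_ready_containers
```

Empty output means that every container in the pod reported true.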

23.3.2. Viewing OVN-Kubernetes alerts in the console

The Alerting UI provides detailed information about alerts and their governing alerting rules and silences.

Prerequisites

- You have access to the cluster as a developer or as a user with view permissions for the project that you are viewing metrics for.

Procedure (UI)

- In the Administrator perspective, select Observe → Alerting. The three main pages in the Alerting UI in this perspective are the Alerts, Silences, and Alerting Rules pages.

- View the rules for OVN-Kubernetes alerts by selecting Observe → Alerting → Alerting Rules.

23.3.3. Viewing OVN-Kubernetes alerts in the CLI

You can get information about alerts and their governing alerting rules and silences from the command line.

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- The OpenShift CLI (oc) installed.

- You have installed jq.

Procedure

View active or firing alerts by running the following commands.

Set the alert manager route environment variable by running the following command:

$ ALERT_MANAGER=$(oc get route alertmanager-main -n openshift-monitoring \
-o jsonpath='{@.spec.host}')

Issue a curl request to the alert manager route API with the correct authorization details requesting specific fields by running the following command:

$ curl -s -k -H "Authorization: Bearer \
$(oc create token prometheus-k8s -n openshift-monitoring)" \
https://$ALERT_MANAGER/api/v1/alerts \
| jq '.data[] | "\(.labels.severity) \(.labels.alertname) \(.labels.pod) \(.labels.container) \(.labels.endpoint) \(.labels.instance)"'

View alerting rules by running the following command:

$ oc -n openshift-monitoring exec -c prometheus prometheus-k8s-0 -- curl -s 'http://localhost:9090/api/v1/rules' | jq '.data.groups[].rules[] | select(((.name|contains("ovn")) or (.name|contains("OVN")) or (.name|contains("Ovn")) or (.name|contains("North")) or (.name|contains("South"))) and .type=="alerting")'

23.3.4. Viewing the OVN-Kubernetes logs using the CLI

You can view the logs for each of the containers in the ovnkube-master and ovnkube-node pods by using the OpenShift CLI (oc).

Prerequisites

- Access to the cluster as a user with the cluster-admin role.

- Access to the OpenShift CLI (oc).

- You have installed jq.

Procedure

View the log for a specific pod:

$ oc logs -f <pod_name> -c <container_name> -n <namespace>

where:

-f: Optional. Specifies that the output follows what is being written into the logs.

<pod_name>: Specifies the name of the pod.

<container_name>: Optional. Specifies the name of a container. When a pod has more than one container, you must specify the container name.

<namespace>: Specifies the namespace the pod is running in.

For example:

$ oc logs ovnkube-master-7h4q7 -n openshift-ovn-kubernetes

$ oc logs -f ovnkube-master-7h4q7 -n openshift-ovn-kubernetes -c ovn-dbchecker

The contents of log files are printed out.

Examine the most recent entries in all the containers in the ovnkube-master pods:

$ for p in $(oc get pods --selector app=ovnkube-master -n openshift-ovn-kubernetes \
-o jsonpath='{range.items[*]}{" "}{.metadata.name}'); \
do echo === $p ===; for container in $(oc get pods -n openshift-ovn-kubernetes $p \
-o json | jq -r '.status.containerStatuses[] | .name');do echo ---$container---; \
oc logs -c $container $p -n openshift-ovn-kubernetes --tail=5; done; done

View the last 5 lines of every log in every container in an ovnkube-master pod by using the following command:

$ oc logs -l app=ovnkube-master -n openshift-ovn-kubernetes --all-containers --tail 5
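When scanning long logs, filtering for warning and error lines narrows the output. The following sketch assumes the container writes klog-style lines, where the first character is the severity (I, W, E, or F) followed by a four-digit date, like the I0116 line shown in the ovnkube-trace output later in this chapter. Containers that log in another format are not matched. The function name filter_klog_problems is hypothetical.

```shell
# Keep only klog-style warning (W), error (E), and fatal (F) lines,
# for example "E0116 14:20:48.000000 ...". Informational (I) lines are dropped.
# "|| true" keeps the exit status at 0 when nothing matches.
filter_klog_problems() {
  grep -E '^[WEF][0-9]{4} ' || true
}

# Example use against one container (the pod and container names are placeholders):
#   oc logs <pod_name> -c <container_name> -n openshift-ovn-kubernetes --tail=500 \
#     | filter_klog_problems
```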

23.3.5. Viewing the OVN-Kubernetes logs using the web console

You can view the logs for each of the containers in the ovnkube-master and ovnkube-node pods in the web console.

Prerequisites

- Access to the OpenShift CLI (oc).

Procedure

- In the OpenShift Container Platform console, navigate to Workloads → Pods or navigate to the pod through the resource you want to investigate.

- Select the openshift-ovn-kubernetes project from the drop-down menu.

- Click the name of the pod you want to investigate.

- Click Logs. By default, for the ovnkube-master pod, the logs associated with the northd container are displayed.

- Use the drop-down menu to select logs for each container in turn.

23.3.5.1. Changing the OVN-Kubernetes log levels

The default log level for OVN-Kubernetes is 2. To debug OVN-Kubernetes, set the log level to 5. Follow this procedure to increase the OVN-Kubernetes log level to help you debug an issue.

Prerequisites

- You have access to the cluster with cluster-admin privileges.

- You have access to the OpenShift Container Platform web console.

Procedure

Run the following command to get detailed information for all pods in the OVN-Kubernetes project:

$ oc get po -o wide -n openshift-ovn-kubernetes

Example output

Create a ConfigMap file similar to the following example and use a filename such as env-overrides.yaml:

Example ConfigMap file

Apply the ConfigMap file by using the following command:

$ oc apply -n openshift-ovn-kubernetes -f env-overrides.yaml

Example output

configmap/env-overrides.yaml created

Restart the ovnkube pods to apply the new log level by using the following commands:

$ oc delete pod -n openshift-ovn-kubernetes \
--field-selector spec.nodeName=ip-10-0-217-114.ec2.internal -l app=ovnkube-node

$ oc delete pod -n openshift-ovn-kubernetes \
--field-selector spec.nodeName=ip-10-0-209-180.ec2.internal -l app=ovnkube-node

$ oc delete pod -n openshift-ovn-kubernetes -l app=ovnkube-master
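If several nodes need the new log level, the per-node delete command can be wrapped in a loop. This is a sketch only: the function name restart_ovnkube_node_pods is hypothetical, and the node names passed to it are whatever nodes you listed in your own ConfigMap.

```shell
# Delete the ovnkube-node pod on each node named on the command line,
# using the same selector and field selector as the single-node command.
restart_ovnkube_node_pods() {
  for node in "$@"; do
    oc delete pod -n openshift-ovn-kubernetes \
      --field-selector "spec.nodeName=${node}" -l app=ovnkube-node
  done
}

# Example use:
#   restart_ovnkube_node_pods ip-10-0-217-114.ec2.internal ip-10-0-209-180.ec2.internal
```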

23.3.6. Checking the OVN-Kubernetes pod network connectivity

The connectivity check controller, in OpenShift Container Platform 4.10 and later, orchestrates connection verification checks in your cluster. The checks cover the Kubernetes API, the OpenShift API, and individual nodes. The results for the connection tests are stored in PodNetworkConnectivityCheck objects in the openshift-network-diagnostics namespace. Connection tests are performed every minute in parallel.

Prerequisites

- Access to the OpenShift CLI (oc).

- Access to the cluster as a user with the cluster-admin role.

- You have installed jq.

Procedure

To list the current PodNetworkConnectivityCheck objects, enter the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics

View the most recent success for each connection object by using the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
-o json | jq '.items[]| .spec.targetEndpoint,.status.successes[0]'

View the most recent failures for each connection object by using the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
-o json | jq '.items[]| .spec.targetEndpoint,.status.failures[0]'

View the most recent outages for each connection object by using the following command:

$ oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
-o json | jq '.items[]| .spec.targetEndpoint,.status.outages[0]'

The connectivity check controller also logs metrics from these checks into Prometheus.

View all the metrics by running the following command:

$ oc exec prometheus-k8s-0 -n openshift-monitoring -- \
promtool query instant http://localhost:9090 \
'{component="openshift-network-diagnostics"}'

View the latency between the source pod and the openshift api service for the last 5 minutes:

$ oc exec prometheus-k8s-0 -n openshift-monitoring -- \
promtool query instant http://localhost:9090 \
'{component="openshift-network-diagnostics"}'
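To get a quick list of checks that are currently failing, the objects can be reduced to a name and a status column. The sketch below assumes each PodNetworkConnectivityCheck object carries a status condition of type Reachable whose status is "True" when the endpoint responds. That field layout is an assumption here, so confirm it with oc get ... -o yaml on your cluster. The function names are hypothetical.

```shell
# Reads "<check-name><TAB><reachable-status>" lines on stdin and prints the
# names whose status is anything other than "True" (including empty).
filter_unreachable() {
  awk -F '\t' '$2 != "True" { print $1 }'
}

# Hypothetical wrapper. The Reachable condition type is an assumption about
# the PodNetworkConnectivityCheck status layout.
list_unreachable_checks() {
  oc get podnetworkconnectivitychecks -n openshift-network-diagnostics \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.conditions[?(@.type=="Reachable")].status}{"\n"}{end}' \
    | filter_unreachable
}
```

An empty result from list_unreachable_checks means every check reported Reachable as True at the time of the query.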

23.4. Tracing Openflow with ovnkube-trace

OVN and OVS traffic flows can be simulated in a single utility called ovnkube-trace. The ovnkube-trace utility runs ovn-trace, ovs-appctl ofproto/trace and ovn-detrace and correlates that information in a single output.

You can execute the ovnkube-trace binary from a dedicated container. For releases after OpenShift Container Platform 4.7, you can also copy the binary to a local host and execute it from that host.

The binaries in the Quay images do not currently work for Dual IP stack or IPv6 only environments. For those environments, you must build from source.

23.4.1. Installing the ovnkube-trace on local host

The ovnkube-trace tool traces packet simulations for arbitrary UDP or TCP traffic between points in an OVN-Kubernetes driven OpenShift Container Platform cluster. Copy the ovnkube-trace binary to your local host making it available to run against the cluster.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

Procedure

Create a pod variable by using the following command:

$ POD=$(oc get pods -n openshift-ovn-kubernetes -l app=ovnkube-master -o name | head -1 | awk -F '/' '{print $NF}')

Run the following command on your local host to copy the binary from the ovnkube-master pods:

$ oc cp -n openshift-ovn-kubernetes $POD:/usr/bin/ovnkube-trace ovnkube-trace

Make ovnkube-trace executable by running the following command:

$ chmod +x ovnkube-trace

Display the options available with ovnkube-trace by running the following command:

$ ./ovnkube-trace -help

Expected output

The command-line arguments supported are familiar Kubernetes constructs, such as namespaces, pods, and services, so you do not need to find the MAC address, the IP address of the destination nodes, or the ICMP type.

The log levels are:

- 0 (minimal output)

- 2 (more verbose output showing results of trace commands)

- 5 (debug output)

23.4.2. Running ovnkube-trace

Run ovn-trace to simulate packet forwarding within an OVN logical network.

Prerequisites

- You installed the OpenShift CLI (oc).

- You are logged in to the cluster with a user with cluster-admin privileges.

- You have installed ovnkube-trace on the local host.

Example: Testing that DNS resolution works from a deployed pod

This example illustrates how to test the DNS resolution from a deployed pod to the core DNS pod that runs in the cluster.

Procedure

Start a web service in the default namespace by entering the following command:

$ oc run web --namespace=default --image=nginx --labels="app=web" --expose --port=80

List the pods running in the openshift-dns namespace:

$ oc get pods -n openshift-dns

Example output

Run the following ovnkube-trace command to verify DNS resolution is working:

Expected output

The output indicates success from the deployed pod to the DNS port and also indicates that it is successful going back in the other direction. So you know that bi-directional traffic is supported on UDP port 53 if the web pod needs to perform DNS resolution through CoreDNS.

If, for example, that did not work and you wanted the ovn-trace, ovs-appctl ofproto/trace, and ovn-detrace output along with more debug information, increase the log level to 2 and run the command again as follows:

The output from this increased log level is too much to list here. In a failure situation, the output of this command shows which flow is dropping that traffic. For example, an egress or ingress network policy might be configured on the cluster that does not allow that traffic.
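The UDP trace described in this example can be sketched as a small wrapper. The flag names used here (-src-namespace, -src, -dst-namespace, -dst, -udp, -dst-port, -loglevel) are assumptions to confirm against the ./ovnkube-trace -help output. The function name trace_udp and the OVNKUBE_TRACE variable are hypothetical, and the DNS pod name is a placeholder for one of the pods listed in the openshift-dns namespace.

```shell
# Path to the binary copied to the local host; override if it lives elsewhere.
OVNKUBE_TRACE="${OVNKUBE_TRACE:-./ovnkube-trace}"

# trace_udp <src-namespace> <src-pod> <dst-namespace> <dst-pod> <port> [loglevel]
# Flag names are assumptions; check them with "./ovnkube-trace -help".
# The log level defaults to 0 (minimal output) when the sixth argument is omitted.
trace_udp() {
  "$OVNKUBE_TRACE" \
    -src-namespace "$1" -src "$2" \
    -dst-namespace "$3" -dst "$4" \
    -udp -dst-port "$5" \
    -loglevel "${6:-0}"
}

# Example use (the DNS pod name is a placeholder):
#   trace_udp default web openshift-dns <dns_pod_name> 53
#   trace_udp default web openshift-dns <dns_pod_name> 53 2   # more verbose
```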

Example: Verifying by using debug output a configured default deny

This example illustrates how to identify by using the debug output that an ingress default deny policy blocks traffic.

Procedure

Create the following YAML that defines a deny-by-default policy to deny ingress from all pods in all namespaces. Save the YAML in the deny-by-default.yaml file:

Apply the policy by entering the following command:

$ oc apply -f deny-by-default.yaml

Example output

networkpolicy.networking.k8s.io/deny-by-default created

Start a web service in the default namespace by entering the following command:

$ oc run web --namespace=default --image=nginx --labels="app=web" --expose --port=80

Run the following command to create the prod namespace:

$ oc create namespace prod

Run the following command to label the prod namespace:

$ oc label namespace/prod purpose=production

Run the following command to deploy an alpine image in the prod namespace and start a shell:

$ oc run test-6459 --namespace=prod --rm -i -t --image=alpine -- sh

Open another terminal session.

In this new terminal session, run ovn-trace to verify the failure in communication between the source pod test-6459 running in namespace prod and the destination pod running in the default namespace:

Expected output

I0116 14:20:47.380775 50822 ovs.go:90] Maximum command line arguments set to: 191102
ovn-trace source pod to destination pod indicates failure from test-6459 to web

Increase the log level to 2 to expose the reason for the failure by running the following command:

Expected output

(1) Ingress traffic is blocked due to the default deny policy being in place.

Create a policy that allows traffic from all pods in namespaces that have the label purpose=production. Save the YAML in the web-allow-prod.yaml file:

Apply the policy by entering the following command:

$ oc apply -f web-allow-prod.yaml

Run ovnkube-trace to verify that traffic is now allowed by entering the following command:

Expected output

In the open shell, run the following command:

wget -qO- --timeout=2 http://web.default

Expected output

23.5. Migrating from the OpenShift SDN network plugin

As a cluster administrator, you can migrate to the OVN-Kubernetes network plugin from the OpenShift SDN network plugin.

You can use the offline migration method for migrating from the OpenShift SDN network plugin to the OVN-Kubernetes plugin. The offline migration method is a manual process that includes some downtime.

23.5.1. Migration to the OVN-Kubernetes network plugin

Migrating to the OVN-Kubernetes network plugin is a manual process that includes some downtime during which your cluster is unreachable.

Before you migrate your OpenShift Container Platform cluster to use the OVN-Kubernetes network plugin, update your cluster to the latest z-stream release so that all the latest bug fixes apply to your cluster.

Although a rollback procedure is provided, the migration is intended to be a one-way process.

A migration to the OVN-Kubernetes network plugin is supported on the following platforms:

- Bare metal hardware

- Amazon Web Services (AWS)

- Google Cloud

- IBM Cloud®

- Microsoft Azure

- Red Hat OpenStack Platform (RHOSP)

- Red Hat Virtualization (RHV)

- VMware vSphere

Migrating to or from the OVN-Kubernetes network plugin is not supported for managed OpenShift cloud services such as Red Hat OpenShift Dedicated, Azure Red Hat OpenShift (ARO), and Red Hat OpenShift Service on AWS (ROSA).

Migrating from the OpenShift SDN network plugin to the OVN-Kubernetes network plugin is not supported on Nutanix.

23.5.1.1. Considerations for migrating to the OVN-Kubernetes network plugin

If you have more than 150 nodes in your OpenShift Container Platform cluster, then open a support case for consultation on your migration to the OVN-Kubernetes network plugin.

The subnets assigned to nodes and the IP addresses assigned to individual pods are not preserved during the migration.

While the OVN-Kubernetes network plugin implements many of the capabilities present in the OpenShift SDN network plugin, the configuration is not the same.

If your cluster uses any of the following OpenShift SDN network plugin capabilities, you must manually configure the same capability in the OVN-Kubernetes network plugin:

- Namespace isolation

- Egress router pods

- If your cluster or surrounding network uses any part of the 100.64.0.0/16 address range, you must choose another unused IP range by specifying the v4InternalSubnet spec under the spec.defaultNetwork.ovnKubernetesConfig object definition. OVN-Kubernetes uses the IP range 100.64.0.0/16 internally by default.

- If your openshift-sdn cluster with Precision Time Protocol (PTP) uses the User Datagram Protocol (UDP) for hardware time stamping and you migrate to the OVN-Kubernetes plugin, the hardware time stamping cannot be applied to primary interface devices, such as an Open vSwitch (OVS) bridge. As a result, UDP version 4 configurations cannot work with a br-ex interface.

The following sections highlight the differences in configuration between the aforementioned capabilities in OVN-Kubernetes and OpenShift SDN network plugins.

Primary network interface

The OpenShift SDN plugin allows application of the NodeNetworkConfigurationPolicy (NNCP) custom resource (CR) to the primary interface on a node. The OVN-Kubernetes network plugin does not have this capability.

If you have an NNCP applied to the primary interface, you must delete the NNCP before migrating to the OVN-Kubernetes network plugin. Deleting the NNCP does not remove the configuration from the primary interface, but with OVN-Kubernetes, the Kubernetes NMState cannot manage this configuration. Instead, the configure-ovs.sh shell script manages the primary interface and the configuration attached to this interface.

Namespace isolation

OVN-Kubernetes supports only the network policy isolation mode.

For a cluster using OpenShift SDN that is configured in either the multitenant or subnet isolation mode, you can still migrate to the OVN-Kubernetes network plugin. Note that after the migration operation, multitenant isolation mode is dropped, so you must manually configure network policies to achieve the same level of project-level isolation for pods and services.

Egress IP addresses

OpenShift SDN supports two different Egress IP modes:

- In the automatically assigned approach, an egress IP address range is assigned to a node.

- In the manually assigned approach, a list of one or more egress IP addresses is assigned to a node.

The migration process supports migrating Egress IP configurations that use the automatically assigned mode.

The differences in configuring an egress IP address between OVN-Kubernetes and OpenShift SDN are described in the following table:

| OVN-Kubernetes | OpenShift SDN |

|---|---|

|

|

For more information on using egress IP addresses in OVN-Kubernetes, see "Configuring an egress IP address".

Egress network policies

The difference in configuring an egress network policy, also known as an egress firewall, between OVN-Kubernetes and OpenShift SDN is described in the following table:

| OVN-Kubernetes | OpenShift SDN |

|---|---|

|

|

Because the name of an EgressFirewall object can only be set to default, after the migration all migrated EgressNetworkPolicy objects are named default, regardless of what the name was under OpenShift SDN.

If you subsequently roll back to OpenShift SDN, all EgressNetworkPolicy objects are named default because the prior name is lost.

For more information on using an egress firewall in OVN-Kubernetes, see "Configuring an egress firewall for a project".

Egress router pods

OVN-Kubernetes supports egress router pods in redirect mode. OVN-Kubernetes does not support egress router pods in HTTP proxy mode or DNS proxy mode.

When you deploy an egress router with the Cluster Network Operator, you cannot specify a node selector to control which node is used to host the egress router pod.

Multicast

The difference between enabling multicast traffic on OVN-Kubernetes and OpenShift SDN is described in the following table:

| OVN-Kubernetes | OpenShift SDN |

|---|---|

|

|

For more information on using multicast in OVN-Kubernetes, see "Enabling multicast for a project".

Network policies

OVN-Kubernetes fully supports the Kubernetes NetworkPolicy API in the networking.k8s.io/v1 API group. No changes are necessary in your network policies when migrating from OpenShift SDN.

23.5.1.2. How the migration process works

The following table summarizes the migration process by segmenting between the user-initiated steps in the process and the actions that the migration performs in response.

| User-initiated steps | Migration activity |

|---|---|

|

Set the |

|

|

Update the |

|

| Reboot each node in the cluster. |

|

If a rollback to OpenShift SDN is required, the following table describes the process.

You must wait until the migration process from OpenShift SDN to OVN-Kubernetes network plugin is successful before initiating a rollback.

| User-initiated steps | Migration activity |

|---|---|

| Suspend the MCO to ensure that it does not interrupt the migration. | The MCO stops. |

|

Set the |

|

|

Update the |

|

| Reboot each node in the cluster. |

|

| Enable the MCO after all nodes in the cluster reboot. |

|

23.5.1.3. Using an Ansible playbook to migrate to the OVN-Kubernetes network plugin

As a cluster administrator, you can use an Ansible collection, network.offline_migration_sdn_to_ovnk, to migrate from the OpenShift SDN Container Network Interface (CNI) network plugin to the OVN-Kubernetes plugin for your cluster. The Ansible collection includes the following playbooks:

-

playbooks/playbook-migration.yml: Includes playbooks that execute in a sequence where each playbook represents a step in the migration process. -

playbooks/playbook-rollback.yml: Includes playbooks that execute in a sequence where each playbook represents a step in the rollback process.

Prerequisites

- You installed the python3 package, minimum version 3.10.

- You installed the jmespath and jq packages.

- You logged in to the Red Hat Hybrid Cloud Console and opened the Ansible Automation Platform web console.

- You created a security group rule that allows User Datagram Protocol (UDP) packets on port 6081 for all nodes on all cloud platforms. If you do not do this task, your cluster might fail to schedule pods.

- Check if your cluster uses static routes or routing policies in the host network.

  - If true, a later procedure step requires that you set the routingViaHost parameter to true and the ipForwarding parameter to Global in the gatewayConfig section of the playbooks/playbook-migration.yml file.

- If the OpenShift-SDN plugin uses the 100.64.0.0/16 and 100.88.0.0/16 address ranges, you patched the address ranges. For more information, see "Patching OVN-Kubernetes address ranges" in the Additional resources section.
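Minimum-version prerequisites such as python3 3.10 are easy to misjudge by eye, because a plain string comparison ranks 3.9 above 3.10. The following sketch compares the major and minor fields numerically. The function name version_ge is hypothetical, and it assumes both arguments contain at least a major.minor pair.

```shell
# version_ge <have> <want>: succeeds when <have> is at least <want>.
# Only the major and minor fields are compared, and they are compared as numbers.
# Both arguments must contain at least "major.minor".
version_ge() {
  have_major=${1%%.*}; have_rest=${1#*.}; have_minor=${have_rest%%.*}
  want_major=${2%%.*}; want_rest=${2#*.}; want_minor=${want_rest%%.*}
  [ "$have_major" -gt "$want_major" ] && return 0
  [ "$have_major" -lt "$want_major" ] && return 1
  [ "$have_minor" -ge "$want_minor" ]
}

# Example use:
#   py=$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')
#   version_ge "$py" 3.10 || echo "python3 $py is older than 3.10"
```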

Procedure

Install the ansible-core package, minimum version 2.15. The following example command shows how to install the ansible-core package on Red Hat Enterprise Linux (RHEL):

$ sudo dnf install -y ansible-core

Create an ansible.cfg file and add information similar to the following example to the file. Ensure that the file exists in the same directory where the ansible-galaxy commands and the playbooks run.

From the Ansible Automation Platform web console, go to the Connect to Hub page and complete the following steps:

- In the Offline token section of the page, click the Load token button.

- After the token loads, click the Copy to clipboard icon.

- Open the ansible.cfg file and paste the API token in the token= parameter. The API token is required for authenticating against the server URL specified in the ansible.cfg file.

Install the

network.offline_migration_sdn_to_ovnkAnsible collection by entering the followingansible-galaxycommand:ansible-galaxy collection install network.offline_migration_sdn_to_ovnk

$ ansible-galaxy collection install network.offline_migration_sdn_to_ovnkCopy to Clipboard Copied! Toggle word wrap Toggle overflow Verify that the

network.offline_migration_sdn_to_ovnkAnsible collection is installed on your system:ansible-galaxy collection list | grep network.offline_migration_sdn_to_ovnk

$ ansible-galaxy collection list | grep network.offline_migration_sdn_to_ovnkCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

network.offline_migration_sdn_to_ovnk 1.0.2

network.offline_migration_sdn_to_ovnk 1.0.2

The network.offline_migration_sdn_to_ovnk Ansible collection is saved in the default path of ~/.ansible/collections/ansible_collections/network/offline_migration_sdn_to_ovnk/.

Configure migration features in the playbooks/playbook-migration.yml file:

migration_interface_name: If you use a NodeNetworkConfigurationPolicy (NNCP) resource on a primary interface, specify the interface name in the migration-playbook.yml file so that the NNCP resource gets deleted on the primary interface during the migration process.

migration_disable_auto_migration: Disables the auto-migration of OpenShift SDN CNI plug-in features to the OVN-Kubernetes plugin. If you disable auto-migration of features, you must also set the migration_egress_ip, migration_egress_firewall, and migration_multicast parameters to false. To enable auto-migration of features, set the migration_disable_auto_migration parameter to false.

migration_routing_via_host: Set to true to configure local gateway mode or false to configure shared gateway mode for nodes in your cluster. The default value is false. In local gateway mode, traffic is routed through the host network stack. In shared gateway mode, traffic is not routed through the host network stack.

migration_ip_forwarding: If you configured local gateway mode, set IP forwarding to Global if you need the host network of the node to act as a router for traffic not related to OVN-Kubernetes.

migration_cidr: Specifies a Classless Inter-Domain Routing (CIDR) IP address block for your cluster. You cannot use any CIDR block that overlaps the 100.64.0.0/16 CIDR block, because the OVN-Kubernetes network provider uses this block internally.

migration_prefix: Ensure that you specify a prefix value, which is the slice of the CIDR block apportioned to each node in your cluster.

migration_mtu: Optional parameter that sets a specific maximum transmission unit (MTU) for your cluster network after the migration process.

migration_geneve_port: Optional parameter that sets a Geneve port for OVN-Kubernetes. The default port is 6081.

migration_ipv4_subnet: Optional parameter that sets an IPv4 address range for internal use by OVN-Kubernetes. The default value for the parameter is 100.64.0.0/16.
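Before you set the migration_cidr parameter, you might want to confirm that a candidate block does not overlap the 100.64.0.0/16 range. The following Python sketch is one way to do that check with the standard ipaddress module. The function name and the candidate values are hypothetical and are not part of the Ansible collection.

```python
import ipaddress

# Range that OVN-Kubernetes uses internally by default, as stated above.
OVN_INTERNAL_SUBNET = ipaddress.ip_network("100.64.0.0/16")


def overlaps_ovn_internal(cidr: str) -> bool:
    """Return True if the candidate CIDR block overlaps 100.64.0.0/16."""
    return ipaddress.ip_network(cidr).overlaps(OVN_INTERNAL_SUBNET)


# Hypothetical candidate blocks, used only to illustrate the check.
for candidate in ("10.240.0.0/14", "100.64.128.0/20", "100.0.0.0/8"):
    verdict = "overlaps" if overlaps_ovn_internal(candidate) else "ok"
    print(f"{candidate}: {verdict}")
```

A block that contains the internal range, such as 100.0.0.0/8, counts as overlapping in the same way as a block that lies inside it.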

To run the playbooks/playbook-migration.yml file, enter the following command:

$ ansible-playbook -v playbooks/playbook-migration.yml

23.5.2. Migrating to the OVN-Kubernetes network plugin

As a cluster administrator, you can change the network plugin for your cluster to OVN-Kubernetes. During the migration, you must reboot every node in your cluster.

While performing the migration, your cluster is unavailable and workloads might be interrupted. Perform the migration only when an interruption in service is acceptable.

Prerequisites

- You have a cluster configured with the OpenShift SDN CNI network plugin in the network policy isolation mode.

- You installed the OpenShift CLI (oc).

- You have access to the cluster as a user with the cluster-admin role.

- You have a recent backup of the etcd database.

- You can manually reboot each node.

- You checked that your cluster is in a known good state without any errors.

- You created a security group rule that allows User Datagram Protocol (UDP) packets on port 6081 for all nodes on all cloud platforms.

Procedure

To back up the configuration for the cluster network, enter the following command:

$ oc get Network.config.openshift.io cluster -o yaml > cluster-openshift-sdn.yaml

Verify that the OVN_SDN_MIGRATION_TIMEOUT environment variable is set and is equal to 0s by running the following command:

Remove the configuration from the Cluster Network Operator (CNO) configuration object by running the following command:

$ oc patch Network.operator.openshift.io cluster --type='merge' \
    --patch '{"spec":{"migration":null}}'

Delete the NodeNetworkConfigurationPolicy (NNCP) custom resource (CR) that defines the primary network interface for the OpenShift SDN network plugin by completing the following steps:

Check that the existing NNCP CR bonded the primary interface to your cluster by entering the following command:

$ oc get nncp

Example output

NAME          STATUS      REASON
bondmaster0   Available   SuccessfullyConfigured

Network Manager stores the connection profile for the bonded primary interface in the /etc/NetworkManager/system-connections system path.

Remove the NNCP from your cluster:

$ oc delete nncp <nncp_manifest_filename>

To prepare all the nodes for the migration, set the migration field on the CNO configuration object by running the following command:

$ oc patch Network.operator.openshift.io cluster --type='merge' \
    --patch '{ "spec": { "migration": { "networkType": "OVNKubernetes" } } }'

Note: This step does not deploy OVN-Kubernetes immediately. Instead, specifying the migration field triggers the Machine Config Operator (MCO) to apply new machine configs to all the nodes in the cluster in preparation for the OVN-Kubernetes deployment.

Check that the reboot is finished by running the following command:

$ oc get mcp

Check that all cluster Operators are available by running the following command:

$ oc get co

Optional: You can disable automatic migration of several OpenShift SDN capabilities to the OVN-Kubernetes equivalents:

- Egress IPs

- Egress firewall

- Multicast

To disable automatic migration of the configuration for any of the previously noted OpenShift SDN features, specify the following keys:

where:

bool: Specifies whether to enable migration of the feature. The default is true.

Optional: You can customize the following settings for OVN-Kubernetes to meet your network infrastructure requirements:

- Maximum transmission unit (MTU). Consider the following before customizing the MTU for this optional step:

- If you use the default MTU, and you want to keep the default MTU during migration, this step can be ignored.

- If you used a custom MTU, and you want to keep the custom MTU during migration, you must declare the custom MTU value in this step.

This step does not work if you want to change the MTU value during migration. Instead, you must first follow the instructions for "Changing the cluster MTU". You can then keep the custom MTU value by performing this procedure and declaring the custom MTU value in this step.

Note: OpenShift SDN and OVN-Kubernetes have different overlay overhead. Select MTU values by following the guidelines on the "MTU value selection" page.

- Geneve (Generic Network Virtualization Encapsulation) overlay network port

- OVN-Kubernetes IPv4 internal subnet

To customize any of the previously noted settings, enter and customize the following command. If you do not need to change the default value, omit the key from the patch.

where:

mtu: The MTU for the Geneve overlay network. This value is normally configured automatically, but if the nodes in your cluster do not all use the same MTU, then you must set this explicitly to 100 less than the smallest node MTU value.

port: The UDP port for the Geneve overlay network. If a value is not specified, the default is 6081. The port cannot be the same as the VXLAN port that is used by OpenShift SDN. The default value for the VXLAN port is 4789.

ipv4_subnet: An IPv4 address range for internal use by OVN-Kubernetes. You must ensure that the IP address range does not overlap with any other subnet used by your OpenShift Container Platform installation. The IP address range must be larger than the maximum number of nodes that can be added to the cluster. The default value is 100.64.0.0/16.
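The constraints on these three values can be checked before you build the patch. The following Python sketch encodes the rules described above: the overlay MTU is 100 less than the smallest node MTU, the Geneve port must differ from the VXLAN port, and the internal subnet must hold more addresses than the maximum node count. All function names and sample values are hypothetical and serve only as an illustration.

```python
import ipaddress

GENEVE_OVERHEAD = 100       # bytes to subtract from the smallest node MTU
VXLAN_DEFAULT_PORT = 4789   # default VXLAN port used by OpenShift SDN


def overlay_mtu(node_mtus):
    """Return the overlay MTU: 100 less than the smallest node MTU."""
    return min(node_mtus) - GENEVE_OVERHEAD


def geneve_port_ok(port, vxlan_port=VXLAN_DEFAULT_PORT):
    """Return True if the port is a valid UDP port and is not the VXLAN port."""
    return 1 <= port <= 65535 and port != vxlan_port


def subnet_fits(ipv4_subnet, max_nodes):
    """Return True if the subnet has more addresses than the node limit."""
    return ipaddress.ip_network(ipv4_subnet).num_addresses > max_nodes


# Hypothetical inputs for a cluster with mixed node MTUs.
print(overlay_mtu([1500, 9000, 1450]))
print(geneve_port_ok(6081), geneve_port_ok(4789))
print(subnet_fits("100.64.0.0/16", max_nodes=2000))
```

The sketch does not check for overlap with other subnets in your installation. You must still compare the internal range against every network that your cluster uses.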

Example patch command to update the mtu field

As the MCO updates machines in each machine config pool, it reboots each node one by one. You must wait until all the nodes are updated. Check the machine config pool status by entering the following command:

$ oc get mcp

A successfully updated node has the following status: UPDATED=true, UPDATING=false, DEGRADED=false.

Note: By default, the MCO updates one machine per pool at a time, causing the total time the migration takes to increase with the size of the cluster.
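If you capture the output of the oc get mcp command as text, you can check the three status columns with a short script. The following Python sketch assumes that the output has a header row that includes the NAME, UPDATED, UPDATING, and DEGRADED columns. The sample table is hypothetical and abbreviated, and it is not output from a real cluster.

```python
# Hypothetical, abbreviated sample of `oc get mcp` output for illustration.
SAMPLE = """\
NAME     CONFIG                 UPDATED   UPDATING   DEGRADED
master   rendered-master-aaaa   True      False      False
worker   rendered-worker-bbbb   False     True       False
"""


def pending_pools(table: str):
    """Return the names of pools that are not yet fully updated."""
    lines = [line for line in table.splitlines() if line.strip()]
    header = lines[0].split()
    pending = []
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        done = (
            row["UPDATED"].lower() == "true"
            and row["UPDATING"].lower() == "false"
            and row["DEGRADED"].lower() == "false"
        )
        if not done:
            pending.append(row["NAME"])
    return pending


print(pending_pools(SAMPLE))  # prints ['worker']
```

An empty list means that every pool in the captured output reports the successfully updated status.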

Confirm the status of the new machine configuration on the hosts:

To list the machine configuration state and the name of the applied machine configuration, enter the following command:

$ oc describe node | egrep "hostname|machineconfig"

Example output

kubernetes.io/hostname=master-0
machineconfiguration.openshift.io/currentConfig: rendered-master-c53e221d9d24e1c8bb6ee89dd3d8ad7b
machineconfiguration.openshift.io/desiredConfig: rendered-master-c53e221d9d24e1c8bb6ee89dd3d8ad7b
machineconfiguration.openshift.io/reason:
machineconfiguration.openshift.io/state: Done

Verify that the following statements are true:

- The value of the machineconfiguration.openshift.io/state field is Done.

- The value of the machineconfiguration.openshift.io/currentConfig field is equal to the value of the machineconfiguration.openshift.io/desiredConfig field.

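The two conditions in the preceding list can be checked for every node at once. The following Python sketch parses text in the shape of the example output and reports whether each node meets both conditions. The function names are hypothetical, and the sketch assumes that each hostname line is followed by the machineconfiguration.openshift.io lines for that node.

```python
# Sample text copied from the example output in this procedure.
SAMPLE = """\
kubernetes.io/hostname=master-0
machineconfiguration.openshift.io/currentConfig: rendered-master-c53e221d9d24e1c8bb6ee89dd3d8ad7b
machineconfiguration.openshift.io/desiredConfig: rendered-master-c53e221d9d24e1c8bb6ee89dd3d8ad7b
machineconfiguration.openshift.io/reason:
machineconfiguration.openshift.io/state: Done
"""

PREFIX = "machineconfiguration.openshift.io/"


def parse_nodes(text: str):
    """Group the machineconfiguration fields under each hostname."""
    nodes = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("kubernetes.io/hostname="):
            current = line.split("=", 1)[1]
            nodes[current] = {}
        elif line.startswith(PREFIX) and current is not None:
            key, _, value = line[len(PREFIX):].partition(":")
            nodes[current][key] = value.strip()
    return nodes


def node_is_updated(fields) -> bool:
    """Return True if state is Done and currentConfig equals desiredConfig."""
    current = fields.get("currentConfig")
    return (
        fields.get("state") == "Done"
        and current is not None
        and current == fields.get("desiredConfig")
    )


for name, fields in parse_nodes(SAMPLE).items():
    print(name, node_is_updated(fields))
```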

To confirm that the machine config is correct, enter the following command:

$ oc get machineconfig <config_name> -o yaml | grep ExecStart

where <config_name> is the name of the machine config from the machineconfiguration.openshift.io/currentConfig field.

The machine config must include the following update to the systemd configuration:

ExecStart=/usr/local/bin/configure-ovs.sh OVNKubernetes

If a node is stuck in the NotReady state, investigate the machine config daemon pod logs and resolve any errors.

To list the pods, enter the following command:

$ oc get pod -n openshift-machine-config-operator

Example output

The names for the config daemon pods are in the following format: machine-config-daemon-<seq>. The <seq> value is a random five character alphanumeric sequence.

Display the pod log for the first machine config daemon pod shown in the previous output by entering the following command:

$ oc logs <pod> -n openshift-machine-config-operator

where <pod> is the name of a machine config daemon pod.

Resolve any errors in the logs shown by the output from the previous command.

To start the migration, configure the OVN-Kubernetes network plugin by using one of the following commands:

To specify the network provider without changing the cluster network IP address block, enter the following command:

$ oc patch Network.config.openshift.io cluster \
    --type='merge' --patch '{ "spec": { "networkType": "OVNKubernetes" } }'

To specify a different cluster network IP address block, enter the following command:

where cidr is a CIDR block and prefix is the slice of the CIDR block apportioned to each node in your cluster. You cannot use any CIDR block that overlaps with the 100.64.0.0/16 CIDR block because the OVN-Kubernetes network provider uses this block internally.

Important: You cannot change the service network address block during the migration.
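The relationship between the cidr and prefix values determines how many nodes the cluster network can serve. The following Python sketch computes the number of per-node slices and the number of addresses in each slice, assuming that prefix is the prefix length of the subnet assigned to each node. The function name and the sample values are hypothetical.

```python
import ipaddress


def node_capacity(cidr: str, prefix: int):
    """Return (number of per-node subnets, addresses in each subnet)."""
    network = ipaddress.ip_network(cidr)
    if prefix < network.prefixlen:
        raise ValueError("prefix must not be shorter than the CIDR prefix length")
    node_subnets = 2 ** (prefix - network.prefixlen)
    addresses_per_node = 2 ** (network.max_prefixlen - prefix)
    return node_subnets, addresses_per_node


# Hypothetical values: a /14 cluster network divided into /23 node slices.
subnets, addresses = node_capacity("10.128.0.0/14", 23)
print(f"{subnets} node subnets, {addresses} addresses in each")
```

Choose a prefix value that leaves enough node subnets for the largest cluster size that you expect, because each node consumes one slice.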

Verify that the Multus daemon set rollout is complete before continuing with subsequent steps:

$ oc -n openshift-multus rollout status daemonset/multus

The name of the Multus pods is in the form of multus-<xxxxx> where <xxxxx> is a random sequence of letters. It might take several moments for the pods to restart.

Example output

Waiting for daemon set "multus" rollout to finish: 1 out of 6 new pods have been updated...
...
Waiting for daemon set "multus" rollout to finish: 5 of 6 updated pods are available...
daemon set "multus" successfully rolled out