14.7. Managing SR-IOV devices

An emulated virtual device often uses more CPU and memory than a hardware network device. This can limit the performance of a virtual machine (VM). However, if any device on your virtualization host supports Single Root I/O Virtualization (SR-IOV), you can use this feature to improve the performance of the device, and possibly also the overall performance of your VMs.

14.7.1. What is SR-IOV?

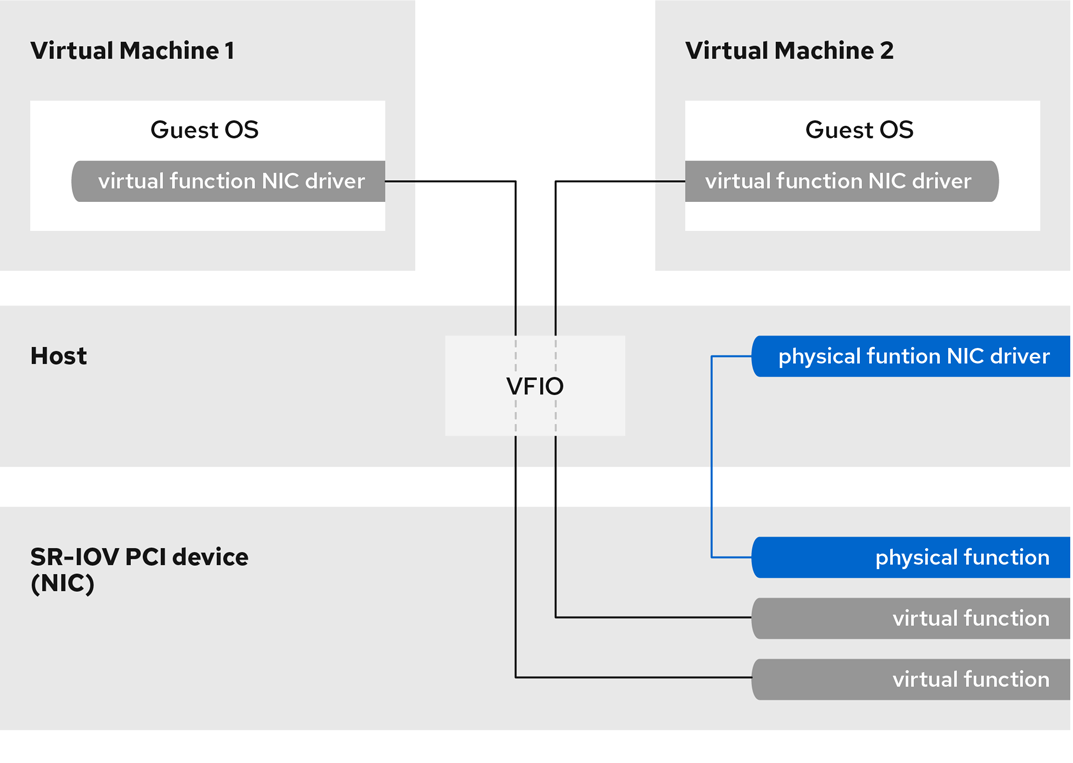

Single Root I/O Virtualization (SR-IOV) is a specification that enables a single PCI Express (PCIe) device to present multiple separate PCI devices, called virtual functions (VFs), to the host system. Each of these devices:

- Is able to provide the same or a similar service as the original PCIe device.

- Appears at a different address on the host PCI bus.

- Can be assigned to a different VM by using VFIO assignment.

For example, a single SR-IOV capable network device can present VFs to multiple VMs. Although all of the VFs use the same physical card, the same network connection, and the same network cable, each VM directly controls its own hardware network device and uses no extra resources from the host.

How SR-IOV works

The SR-IOV functionality is possible thanks to the introduction of the following PCIe functions:

- Physical functions (PFs) - A PCIe function that provides the functionality of its device (for example, networking) to the host, but can also create and manage a set of VFs. Each SR-IOV capable device has one or more PFs.

- Virtual functions (VFs) - Lightweight PCIe functions that behave as independent devices. Each VF is derived from a PF. The maximum number of VFs a device can have depends on the device hardware. Each VF can be assigned only to a single VM at a time, but a VM can have multiple VFs assigned to it.

VMs recognize VFs as virtual devices. For example, a VF created by an SR-IOV network device appears as a network card to the VM to which it is assigned, in the same way as a physical network card appears to the host system.
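The PF-to-VF relationship can be inspected on the host through sysfs. The following is a minimal sketch, assuming the PF is a PCI device whose sysfs directory contains one `virtfnN` symbolic link per enabled VF; the function name `list_vfs` and the interface name `eth1` are illustrative only.

```shell
# List the PCI addresses of the VFs that a PF currently exposes.
# $1: the PF's sysfs device directory, for example /sys/class/net/eth1/device
#     (eth1 is only an example interface name).
list_vfs() (
    dev_dir=$1
    for link in "$dev_dir"/virtfn*; do
        # With no VFs the glob stays unexpanded, so skip anything
        # that is not a symbolic link.
        [ -L "$link" ] || continue
        # Each virtfnN link points at the VF's own PCI device directory,
        # whose name is the VF's PCI address.
        basename "$(readlink "$link")"
    done
)
```

For example, `list_vfs /sys/class/net/eth1/device` prints one PCI address per line, or nothing if no VFs are enabled on that PF.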

Figure 14.1. SR-IOV architecture

Benefits

The primary advantages of using SR-IOV VFs rather than emulated devices are:

- Improved performance

- Reduced use of host CPU and memory resources

For example, a VF attached to a VM as a vNIC performs at almost the same level as a physical NIC, and much better than paravirtualized or emulated NICs. In particular, when multiple VFs are used simultaneously on a single host, the performance benefits can be significant.

Disadvantages

- To modify the configuration of a PF, you must first change the number of VFs exposed by the PF to zero. Therefore, you also need to remove the devices provided by these VFs from the VM to which they are assigned.

- A VM with VFIO-assigned devices attached, including SR-IOV VFs, cannot be migrated to another host. In some cases, you can work around this limitation by pairing the assigned device with an emulated device. For example, you can bond an assigned networking VF to an emulated vNIC, and remove the VF before the migration.

- In addition, VFIO-assigned devices require pinning of VM memory, which increases the memory consumption of the VM and prevents the use of memory ballooning on the VM.
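The first disadvantage has a practical consequence when you change the VF count: the kernel rejects a write of a new non-zero value to `sriov_numvfs` while VFs are already enabled, so the count has to pass through zero. The following is a minimal sketch of that pattern, assuming the standard `sriov_totalvfs` and `sriov_numvfs` sysfs attributes; the helper name `set_numvfs` is hypothetical, and the sketch does not detach VFs from VMs for you.

```shell
# Set the number of VFs on a PF, passing through zero first.
# $1: the PF's sysfs device directory, for example /sys/class/net/eth1/device
# $2: the desired number of VFs
set_numvfs() (
    dev_dir=$1
    want=$2
    # Reject empty or non-numeric requests before touching the device.
    case $want in
        ''|*[!0-9]*) echo "VF count must be a non-negative integer" >&2; exit 1 ;;
    esac
    max=$(cat "$dev_dir/sriov_totalvfs") || exit 1
    if [ "$want" -gt "$max" ]; then
        echo "requested $want VFs, but the device supports at most $max" >&2
        exit 1
    fi
    # Drop to zero first; remove the VFs from their VMs before doing this.
    echo 0 > "$dev_dir/sriov_numvfs" || exit 1
    echo "$want" > "$dev_dir/sriov_numvfs"
)
```

Run as root, `set_numvfs /sys/class/net/eth1/device 4` would replace whatever VF count eth1 currently has with 4, provided the hardware supports at least four VFs.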

14.7.2. Attaching SR-IOV networking devices to virtual machines

To attach an SR-IOV networking device to a virtual machine (VM) on an Intel or AMD host, you must create a virtual function (VF) from an SR-IOV capable network interface on the host and assign the VF as a device to a specified VM. For details, see the following instructions.

Prerequisites

The CPU and the firmware of your host support the I/O Memory Management Unit (IOMMU).

- If you use an Intel CPU, it must support the Intel Virtualization Technology for Directed I/O (VT-d).

- If you use an AMD CPU, it must support the AMD-Vi feature.

The host system uses Access Control Service (ACS) to provide direct memory access (DMA) isolation for the PCIe topology. Verify this with the system vendor.

For additional information, see Hardware Considerations for Implementing SR-IOV.

The physical network device supports SR-IOV. To verify whether any network devices on your system support SR-IOV, use the lspci -v command and look for Single Root I/O Virtualization (SR-IOV) in the output.

The host network interface that you want to use for creating VFs is running. For example, activate the eth1 interface and verify that it is running.

For SR-IOV device assignment to work, the IOMMU feature must be enabled in the host BIOS and kernel. To do so:

On an Intel host, enable VT-d:

- Regenerate the GRUB configuration with the intel_iommu=on and iommu=pt parameters:

# grubby --args="intel_iommu=on iommu=pt" --update-kernel=ALL

- Reboot the host.

On an AMD host, enable AMD-Vi:

- Regenerate the GRUB configuration with the iommu=pt parameter:

# grubby --args="iommu=pt" --update-kernel=ALL

- Reboot the host.
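Several of these prerequisites can be checked from a shell before you start the procedure. The following is a minimal sketch under stated assumptions: a PF exposes a `sriov_totalvfs` attribute in sysfs, the `operstate` attribute of an interface reads `up` when the link is running, and the kernel parameters of the running system are visible in `/proc/cmdline`. The function names are hypothetical. An interface that is down can typically be activated with `ip link set eth1 up`.

```shell
# Print the interfaces whose PCI device advertises SR-IOV.
# $1: the sysfs network class directory, normally /sys/class/net
sriov_capable_ifaces() (
    for f in "$1"/*/device/sriov_totalvfs; do
        [ -e "$f" ] || continue
        # Strip the trailing path to recover the interface name.
        iface_dir=${f%/device/sriov_totalvfs}
        basename "$iface_dir"
    done
)

# Succeed if the operational state of an interface is "up".
# $1: the sysfs network class directory, $2: the interface name
iface_is_up() {
    [ "$(cat "$1/$2/operstate" 2>/dev/null)" = "up" ]
}

# Succeed if a kernel command line contains an exact parameter.
# $1: the command-line file, normally /proc/cmdline
# $2: the parameter, for example iommu=pt or intel_iommu=on
cmdline_has_arg() {
    tr -s ' ' '\n' < "$1" | grep -qxF -- "$2"
}
```

After the reboot, `cmdline_has_arg /proc/cmdline iommu=pt` should succeed on both Intel and AMD hosts, and `cmdline_has_arg /proc/cmdline intel_iommu=on` additionally on Intel hosts. These checks do not cover the firmware settings or ACS support, which still have to be confirmed separately.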

Procedure

Optional: Confirm the maximum number of VFs your network device can use. To do so, use the following command and replace eth1 with your SR-IOV compatible network device.

# cat /sys/class/net/eth1/device/sriov_totalvfs
7

Use the following command to create a virtual function (VF):

# echo VF-number > /sys/class/net/network-interface/device/sriov_numvfs

In the command, replace:

- VF-number with the number of VFs you want to create on the PF.

- network-interface with the name of the network interface for which the VFs will be created.

The following example creates 2 VFs from the eth1 network interface:

# echo 2 > /sys/class/net/eth1/device/sriov_numvfs

Verify that the VFs have been added:

# lspci | grep Ethernet
82:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)
82:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)
82:10.0 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)
82:10.2 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

Make the created VFs persistent by creating a udev rule for the network interface you used to create the VFs. For example, for the eth1 interface, create the /etc/udev/rules.d/eth1.rules file, and add the following line:

ACTION=="add", SUBSYSTEM=="net", ENV{ID_NET_DRIVER}=="ixgbe", ATTR{device/sriov_numvfs}="2"

This ensures that the two VFs that use the ixgbe driver are automatically available for the eth1 interface when the host starts. If you do not require persistent SR-IOV devices, skip this step.

Warning

Currently, the setting described above does not work correctly when attempting to make VFs persistent on Broadcom NetXtreme II BCM57810 adapters. In addition, attaching VFs based on these adapters to Windows VMs is currently not reliable.

Hot-plug one of the newly added VF interface devices to a running VM.

# virsh attach-interface testguest1 hostdev 0000:82:10.0 --managed --live --config
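The last step identifies the VF by its full PCI address (domain:bus:slot.function). As an illustration of how that address maps onto a libvirt device definition, the following sketch prints an `<interface type='hostdev'>` element for a given VF. It is an alternative way to describe the same device, not the method this procedure uses; the function name `vf_interface_xml` and the file name `vf.xml` below are hypothetical.

```shell
# Print a libvirt <interface type='hostdev'> element for one VF.
# $1: the full PCI address of the VF, for example 0000:82:10.0
vf_interface_xml() (
    addr=$1
    # Expect the domain:bus:slot.function form.
    case $addr in
        *:*:*.*) ;;
        *) echo "expected a PCI address such as 0000:82:10.0" >&2; exit 1 ;;
    esac
    domain=${addr%%:*}      # 0000
    rest=${addr#*:}         # 82:10.0
    bus=${rest%%:*}         # 82
    devfn=${rest#*:}        # 10.0
    slot=${devfn%.*}        # 10
    func=${devfn#*.}        # 0
    cat <<EOF
<interface type='hostdev' managed='yes'>
  <source>
    <address type='pci' domain='0x$domain' bus='0x$bus' slot='0x$slot' function='0x$func'/>
  </source>
</interface>
EOF
)
```

One possible use is to save the output with `vf_interface_xml 0000:82:10.0 > vf.xml` and attach it with `virsh attach-device testguest1 vf.xml --live --config`.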

Verification

- If the procedure is successful, the guest operating system detects a new network interface card.

14.7.3. Supported devices for SR-IOV assignment

Not all devices can be used for SR-IOV. The following devices have been tested and verified as compatible with SR-IOV in RHEL 9.

Networking devices

- Intel 82599ES 10 Gigabit Ethernet Controller - uses the ixgbe driver

- Intel Ethernet Controller XL710 Series - uses the i40e driver

- Intel Ethernet Network Adapter XXV710 - uses the i40e driver

- Intel 82576 Gigabit Ethernet Controller - uses the igb driver

- Broadcom NetXtreme II BCM57810 - uses the bnx2x driver

- Ethernet Controller E810-C for QSFP - uses the ice driver

- SFC9220 10/40G Ethernet Controller - uses the sfc driver

- FastLinQ QL41000 Series 10/25/40/50GbE Controller - uses the qede driver

- Mellanox ConnectX-5 Ethernet Adapter Cards

- Mellanox MT2892 Family [ConnectX-6 Dx]
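To see which kernel driver backs a given interface on your host, and compare it with the drivers named in this list, you can read the `driver` symbolic link of the device in sysfs. This is a minimal sketch with a hypothetical function name, `iface_driver`. A matching driver name is only a hint, because one driver can serve many device models, including ones that were not tested.

```shell
# Print the name of the kernel driver bound to a network interface.
# $1: the sysfs network class directory, normally /sys/class/net
# $2: the interface name, for example eth1
iface_driver() (
    link=$1/$2/device/driver
    # Virtual interfaces and unbound devices have no driver link.
    [ -L "$link" ] || exit 1
    # The link target is the driver's directory, named after the driver.
    basename "$(readlink "$link")"
)
```

For example, `iface_driver /sys/class/net eth1` would print `ixgbe` for an interface on an Intel 82599ES adapter.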