Chapter 34. Load balancing with MetalLB

34.1. About MetalLB and the MetalLB Operator

As a cluster administrator, you can add the MetalLB Operator to your cluster so that when a service of type LoadBalancer is added to the cluster, MetalLB can add an external IP address for the service. The external IP address is added to the host network for your cluster.

34.1.1. When to use MetalLB

Using MetalLB is valuable when you have a bare-metal cluster, or an infrastructure that is like bare metal, and you want fault-tolerant access to an application through an external IP address.

You must configure your networking infrastructure to ensure that network traffic for the external IP address is routed from clients to the host network for the cluster.

After deploying MetalLB with the MetalLB Operator, when you add a service of type LoadBalancer, MetalLB provides a platform-native load balancer.

MetalLB operating in layer 2 mode provides support for failover by using a mechanism similar to IP failover. However, instead of relying on the Virtual Router Redundancy Protocol (VRRP) and keepalived, MetalLB uses a gossip-based protocol to identify instances of node failure. When a node failure is detected, another node assumes the role of the leader node, and a gratuitous ARP message is sent to broadcast this change.

MetalLB operating in layer 3, or Border Gateway Protocol (BGP), mode delegates failure detection to the network. The BGP router or routers that the OpenShift Container Platform nodes have established a connection with identify any node failure and terminate the routes to that node.

Using MetalLB instead of IP failover is preferable for ensuring high availability of pods and services.

34.1.2. MetalLB Operator custom resources

The MetalLB Operator monitors its own namespace for the following custom resources:

- MetalLB: When you add a MetalLB custom resource to the cluster, the MetalLB Operator deploys MetalLB on the cluster. The Operator only supports a single instance of the custom resource. If the instance is deleted, the Operator removes MetalLB from the cluster.

- IPAddressPool: MetalLB requires one or more pools of IP addresses that it can assign to a service when you add a service of type LoadBalancer. An IPAddressPool includes a list of IP addresses. The list can be a single IP address that is set using a range, such as 1.1.1.1-1.1.1.1, a range specified in CIDR notation, a range specified as a starting and ending address separated by a hyphen, or a combination of the three. An IPAddressPool requires a name. The documentation uses names like doc-example, doc-example-reserved, and doc-example-ipv6. The MetalLB controller assigns IP addresses from a pool of addresses in an IPAddressPool. L2Advertisement and BGPAdvertisement custom resources enable the advertisement of a given IP from a given pool. You can assign IP addresses from an IPAddressPool to services and namespaces by using the spec.serviceAllocation specification in the IPAddressPool custom resource.

  Note: A single IPAddressPool can be referenced by an L2 advertisement and a BGP advertisement.

- BGPPeer: The BGP peer custom resource identifies the BGP router for MetalLB to communicate with, the AS number of the router, the AS number for MetalLB, and customizations for route advertisement. MetalLB advertises the routes for service load-balancer IP addresses to one or more BGP peers.

- BFDProfile: The BFD profile custom resource configures Bidirectional Forwarding Detection (BFD) for a BGP peer. BFD provides faster path failure detection than BGP alone provides.

- L2Advertisement: The L2Advertisement custom resource advertises an IP coming from an IPAddressPool using the L2 protocol.

- BGPAdvertisement: The BGPAdvertisement custom resource advertises an IP coming from an IPAddressPool using the BGP protocol.
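As an illustration of how these custom resources fit together, the following sketch defines an address pool and advertises it in layer 2 mode. The metallb-system namespace, the doc-example-l2adv name, the example-namespace namespace, the priority value, and the address ranges (taken from the 203.0.113.0/24 documentation block) are assumptions for illustration, not values from a real cluster.

```yaml
# Hypothetical pool; replace the addresses with ranges that are routed to your cluster.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: doc-example
  namespace: metallb-system
spec:
  addresses:
    - 203.0.113.1-203.0.113.10   # starting and ending address separated by a hyphen
    - 203.0.113.64/28            # range in CIDR notation
  serviceAllocation:
    priority: 50                 # assumed value; a lower number means a higher priority
    namespaces:
      - example-namespace        # hypothetical namespace allowed to use this pool
---
# Advertises addresses from the doc-example pool by using layer 2 (ARP and NDP).
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: doc-example-l2adv        # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - doc-example
```

Because an advertisement references a pool by name, the same pool can also be listed in a BGPAdvertisement if you want it announced in both modes.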

After you add the MetalLB custom resource to the cluster and the Operator deploys MetalLB, the controller and speaker MetalLB software components begin running.

MetalLB validates all relevant custom resources.

34.1.3. MetalLB software components

When you install the MetalLB Operator, the metallb-operator-controller-manager deployment starts a pod. The pod is the implementation of the Operator. The pod monitors for changes to all the relevant resources.

When the Operator starts an instance of MetalLB, it starts a controller deployment and a speaker daemon set.

You can configure deployment specifications in the MetalLB custom resource to manage how controller and speaker pods deploy and run in your cluster. For more information about these deployment specifications, see the Additional resources section.

- controller: The Operator starts the deployment and a single pod. When you add a service of type LoadBalancer, Kubernetes uses the controller to allocate an IP address from an address pool. In case of a service failure, verify you have the following entry in your controller pod logs:

  Example output

  "event":"ipAllocated","ip":"172.22.0.201","msg":"IP address assigned by controller

- speaker: The Operator starts a daemon set for speaker pods. By default, a pod is started on each node in your cluster. You can limit the pods to specific nodes by specifying a node selector in the MetalLB custom resource when you start MetalLB. If the controller allocated the IP address to the service and the service is still unavailable, read the speaker pod logs. If the speaker pod is unavailable, run the oc describe pod -n command.

  For layer 2 mode, after the controller allocates an IP address for the service, the speaker pods use an algorithm to determine which speaker pod on which node will announce the load balancer IP address. The algorithm involves hashing the node name and the load balancer IP address. For more information, see "MetalLB and external traffic policy". The speaker uses Address Resolution Protocol (ARP) to announce IPv4 addresses and Neighbor Discovery Protocol (NDP) to announce IPv6 addresses.

For Border Gateway Protocol (BGP) mode, after the controller allocates an IP address for the service, each speaker pod advertises the load balancer IP address with its BGP peers. You can configure which nodes start BGP sessions with BGP peers.

Requests for the load balancer IP address are routed to the node with the speaker that announces the IP address. After the node receives the packets, the service proxy routes the packets to an endpoint for the service. The endpoint can be on the same node in the optimal case, or it can be on another node. The service proxy chooses an endpoint each time a connection is established.

34.1.4. MetalLB and external traffic policy

With layer 2 mode, one node in your cluster receives all the traffic for the service IP address. With BGP mode, a router on the host network opens a connection to one of the nodes in the cluster for a new client connection. How your cluster handles the traffic after it enters the node is affected by the external traffic policy.

- cluster: This is the default value for spec.externalTrafficPolicy.

  With the cluster traffic policy, after the node receives the traffic, the service proxy distributes the traffic to all the pods in your service. This policy provides uniform traffic distribution across the pods, but it obscures the client IP address and it can appear to the application in your pods that the traffic originates from the node rather than the client.

- local: With the local traffic policy, after the node receives the traffic, the service proxy only sends traffic to the pods on the same node. For example, if the speaker pod on node A announces the external service IP, then all traffic is sent to node A. After the traffic enters node A, the service proxy only sends traffic to pods for the service that are also on node A. Pods for the service that are on additional nodes do not receive any traffic from node A. Pods for the service on additional nodes act as replicas in case failover is needed.

  This policy does not affect the client IP address. Application pods can determine the client IP address from the incoming connections.

The following information is important when configuring the external traffic policy in BGP mode.

Although MetalLB advertises the load balancer IP address from all the eligible nodes, the number of nodes load balancing the service can be limited by the capacity of the router to establish equal-cost multipath (ECMP) routes. If the number of nodes advertising the IP is greater than the ECMP group limit of the router, the router uses fewer nodes than the ones advertising the IP.

For example, if the external traffic policy is set to local and the router has an ECMP group limit set to 16 and the pods implementing a LoadBalancer service are deployed on 30 nodes, this would result in pods deployed on 14 nodes not receiving any traffic. In this situation, it would be preferable to set the external traffic policy for the service to cluster.
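To make the policy setting concrete, the following sketch shows a service of type LoadBalancer that opts in to the local policy. The service name, selector label, and port numbers are hypothetical. Note that the Kubernetes API spells the field values with an initial capital, Cluster and Local.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: example-service          # hypothetical name
spec:
  type: LoadBalancer             # MetalLB assigns the external IP address
  externalTrafficPolicy: Local   # omit the field, or set Cluster, for the default
  selector:
    app: example-app             # hypothetical label on the application pods
  ports:
    - port: 80                   # port exposed on the load balancer IP address
      targetPort: 8080           # assumed container port
      protocol: TCP
```

In a situation like the ECMP example above, changing the value to Cluster lets any node that the router selects forward traffic to a pod.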

34.1.5. MetalLB concepts for layer 2 mode

In layer 2 mode, the speaker pod on one node announces the external IP address for a service to the host network. From a network perspective, the node appears to have multiple IP addresses assigned to a network interface.

In layer 2 mode, MetalLB relies on ARP and NDP. These protocols implement local address resolution within a specific subnet. For MetalLB to work in this mode, the client must be able to reach the virtual IP (VIP) that MetalLB assigns, and the VIP must exist on the same subnet as the nodes that announce the service.

The speaker pod responds to ARP requests for IPv4 services and NDP requests for IPv6.

In layer 2 mode, all traffic for a service IP address is routed through one node. After traffic enters the node, the service proxy for the CNI network provider distributes the traffic to all the pods for the service.

Because all traffic for a service enters through a single node in layer 2 mode, in a strict sense, MetalLB does not implement a load balancer for layer 2. Rather, MetalLB implements a failover mechanism for layer 2 so that when a speaker pod becomes unavailable, a speaker pod on a different node can announce the service IP address.

When a node becomes unavailable, failover is automatic. The speaker pods on the other nodes detect that a node is unavailable and a new speaker pod and node take ownership of the service IP address from the failed node.

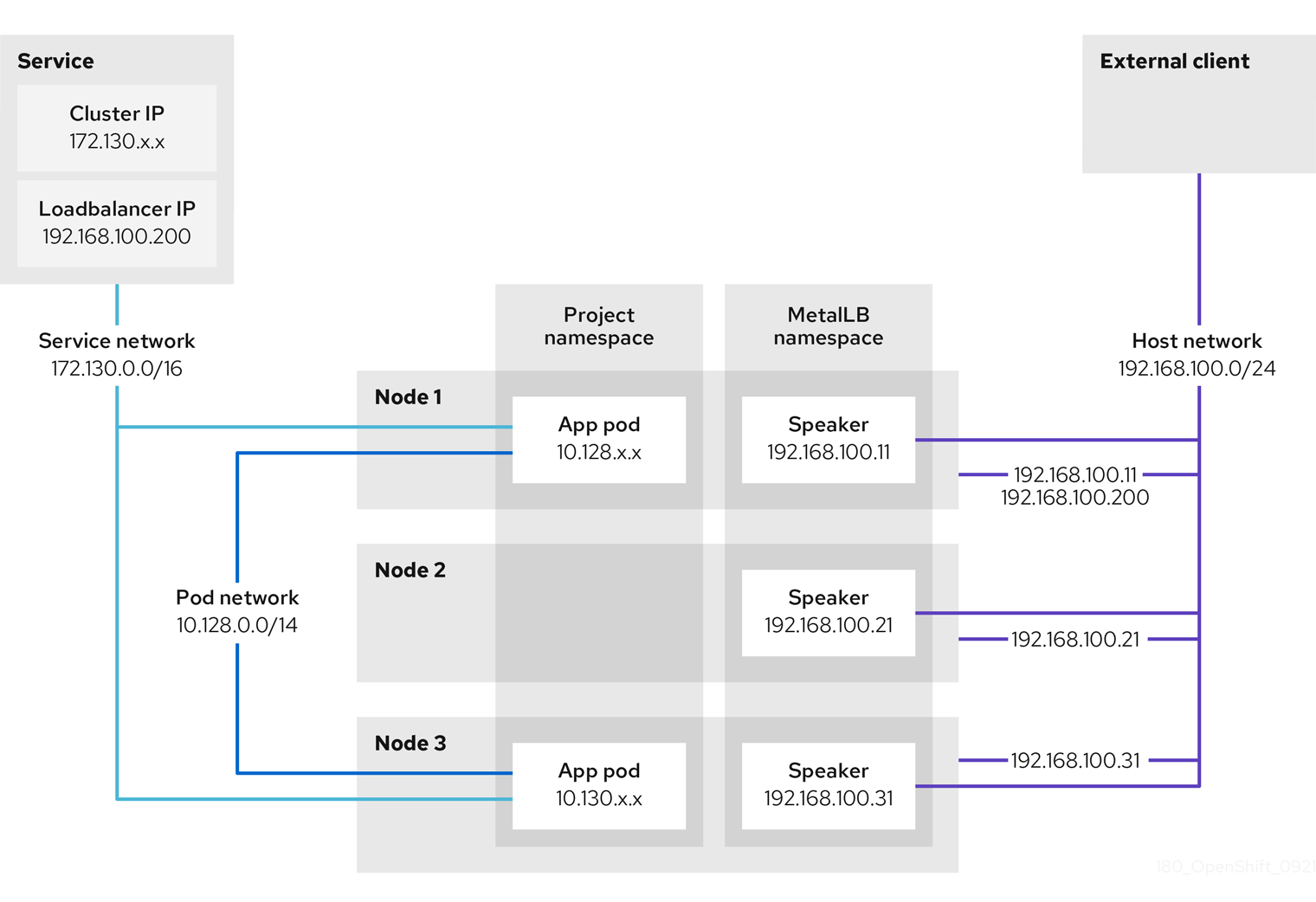

The preceding graphic shows the following concepts related to MetalLB:

- An application is available through a service that has a cluster IP on the 172.130.0.0/16 subnet. That IP address is accessible from inside the cluster. The service also has an external IP address that MetalLB assigned to the service, 192.168.100.200.

- Nodes 1 and 3 have a pod for the application.

- The speaker daemon set runs a pod on each node. The MetalLB Operator starts these pods.

- Each speaker pod is a host-networked pod. The IP address for the pod is identical to the IP address for the node on the host network.

- The speaker pod on node 1 uses ARP to announce the external IP address for the service, 192.168.100.200. The speaker pod that announces the external IP address must be on the same node as an endpoint for the service and the endpoint must be in the Ready condition.

- Client traffic is routed to the host network and connects to the 192.168.100.200 IP address. After traffic enters the node, the service proxy sends the traffic to the application pod on the same node or another node according to the external traffic policy that you set for the service.

  - If the external traffic policy for the service is set to cluster, the node that advertises the 192.168.100.200 load balancer IP address is selected from the nodes where a speaker pod is running. Only that node can receive traffic for the service.

  - If the external traffic policy for the service is set to local, the node that advertises the 192.168.100.200 load balancer IP address is selected from the nodes where a speaker pod is running and at least an endpoint of the service. Only that node can receive traffic for the service. In the preceding graphic, either node 1 or 3 would advertise 192.168.100.200.

- If node 1 becomes unavailable, the external IP address fails over to another node. On another node that has an instance of the application pod and service endpoint, the speaker pod begins to announce the external IP address, 192.168.100.200, and the new node receives the client traffic. In the diagram, the only candidate is node 3.

34.1.6. MetalLB concepts for BGP mode

In BGP mode, by default each speaker pod advertises the load balancer IP address for a service to each BGP peer. It is also possible to advertise the IPs coming from a given pool to a specific set of peers by adding an optional list of BGP peers. BGP peers are commonly network routers that are configured to use the BGP protocol. When a router receives traffic for the load balancer IP address, the router picks one of the nodes with a speaker pod that advertised the IP address. The router sends the traffic to that node. After traffic enters the node, the service proxy for the CNI network plugin distributes the traffic to all the pods for the service.

The directly-connected router on the same layer 2 network segment as the cluster nodes can be configured as a BGP peer. If the directly-connected router is not configured as a BGP peer, you need to configure your network so that packets for load balancer IP addresses are routed between the BGP peers and the cluster nodes that run the speaker pods.

Each time a router receives new traffic for the load balancer IP address, it creates a new connection to a node. Each router manufacturer has an implementation-specific algorithm for choosing which node to initiate the connection with. However, the algorithms commonly are designed to distribute traffic across the available nodes for the purpose of balancing the network load.

If a node becomes unavailable, the router initiates a new connection with another node that has a speaker pod that advertises the load balancer IP address.

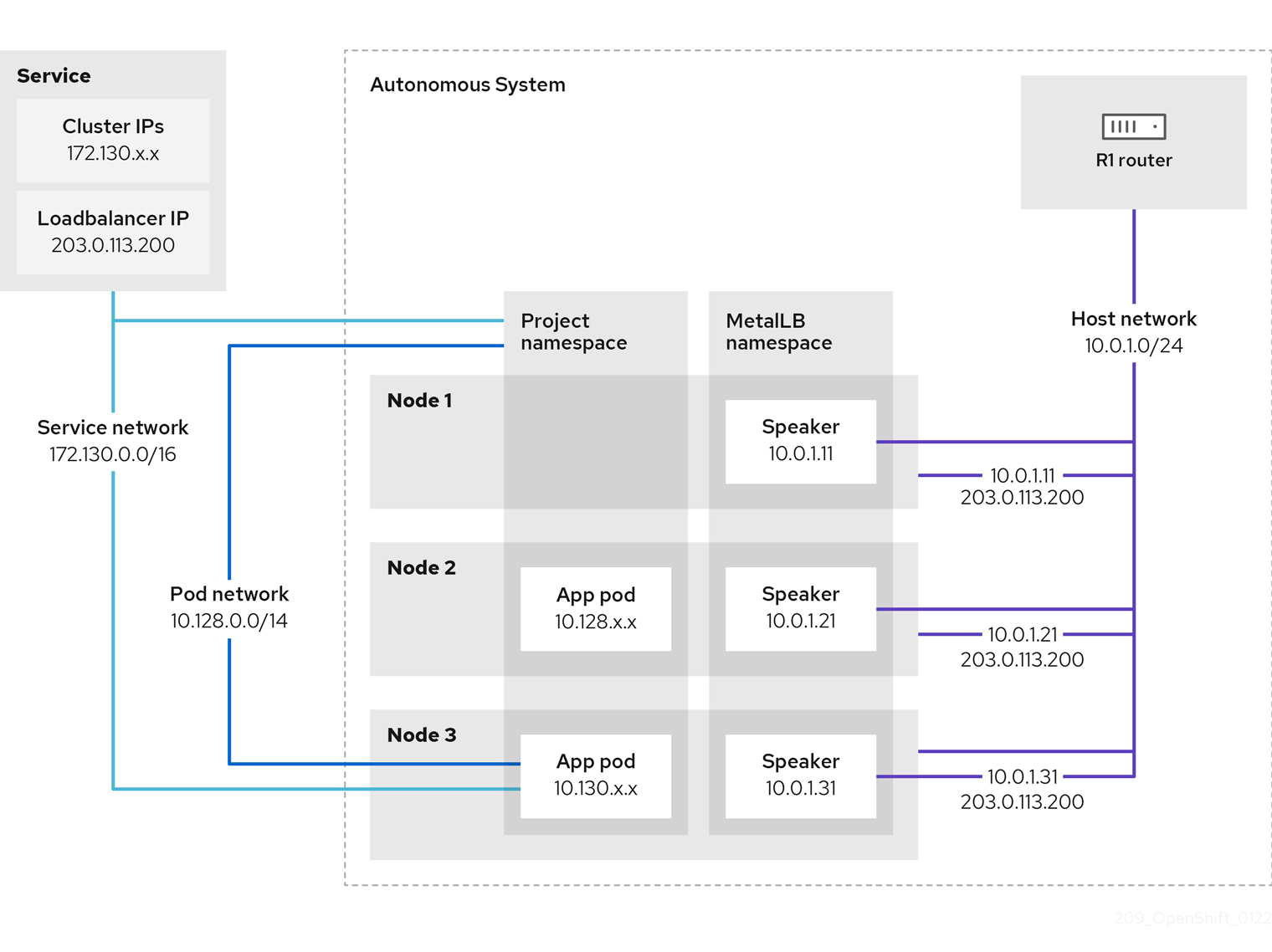

Figure 34.1. MetalLB topology diagram for BGP mode

The preceding graphic shows the following concepts related to MetalLB:

- An application is available through a service that has an IPv4 cluster IP on the 172.130.0.0/16 subnet. That IP address is accessible from inside the cluster. The service also has an external IP address that MetalLB assigned to the service, 203.0.113.200.

- Nodes 2 and 3 have a pod for the application.

- The speaker daemon set runs a pod on each node. The MetalLB Operator starts these pods. You can configure MetalLB to specify which nodes run the speaker pods.

- Each speaker pod is a host-networked pod. The IP address for the pod is identical to the IP address for the node on the host network.

- Each speaker pod starts a BGP session with all BGP peers and advertises the load balancer IP addresses or aggregated routes to the BGP peers. The speaker pods advertise that they are part of Autonomous System 65010. The diagram shows a router, R1, as a BGP peer within the same Autonomous System. However, you can configure MetalLB to start BGP sessions with peers that belong to other Autonomous Systems.

- All the nodes with a speaker pod that advertises the load balancer IP address can receive traffic for the service.

  - If the external traffic policy for the service is set to cluster, all the nodes where a speaker pod is running advertise the 203.0.113.200 load balancer IP address and all the nodes with a speaker pod can receive traffic for the service. The host prefix is advertised to the router peer only if the external traffic policy is set to cluster.

  - If the external traffic policy for the service is set to local, then all the nodes where a speaker pod is running and at least an endpoint of the service is running can advertise the 203.0.113.200 load balancer IP address. Only those nodes can receive traffic for the service. In the preceding graphic, nodes 2 and 3 would advertise 203.0.113.200.

- You can configure MetalLB to control which speaker pods start BGP sessions with specific BGP peers by specifying a node selector when you add a BGP peer custom resource.

- Any routers, such as R1, that are configured to use BGP can be set as BGP peers.

- Client traffic is routed to one of the nodes on the host network. After traffic enters the node, the service proxy sends the traffic to the application pod on the same node or another node according to the external traffic policy that you set for the service.

- If a node becomes unavailable, the router detects the failure and initiates a new connection with another node. You can configure MetalLB to use a Bidirectional Forwarding Detection (BFD) profile for BGP peers. BFD provides faster link failure detection so that routers can initiate new connections earlier than without BFD.
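The BGP concepts above can be sketched as three custom resources: a BFD profile, a BGP peer that uses it, and a BGP advertisement for an address pool. The resource names, the 192.0.2.1 peer address, the BFD intervals, the worker node label, and the existence of a pool named doc-example are assumptions for illustration. The AS number 65010 comes from the diagram description.

```yaml
# BFD profile referenced by the peer below; intervals are in milliseconds.
apiVersion: metallb.io/v1beta1
kind: BFDProfile
metadata:
  name: doc-example-bfd          # hypothetical name
  namespace: metallb-system
spec:
  receiveInterval: 300           # assumed value
  transmitInterval: 300          # assumed value
---
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-peer         # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 192.0.2.1         # assumed address for router R1
  peerASN: 65010                 # R1 is in the same Autonomous System in the diagram
  myASN: 65010                   # the Autonomous System that the speaker pods advertise
  bfdProfile: doc-example-bfd
  nodeSelectors:                 # only matching nodes start a session with this peer
    - matchLabels:
        node-role.kubernetes.io/worker: ""
---
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: doc-example-bgpadv       # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - doc-example                # assumes a pool with this name exists
  peers:
    - doc-example-peer           # optional: advertise only to these peers
```

Omitting the peers list returns to the default behavior, in which the addresses are advertised to every configured BGP peer.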

34.1.7. Limitations and restrictions

34.1.7.1. Infrastructure considerations for MetalLB

MetalLB is primarily useful for on-premises, bare metal installations because these installations do not include a native load-balancer capability. In addition to bare metal installations, installations of OpenShift Container Platform on some infrastructures might not include a native load-balancer capability. For example, the following infrastructures can benefit from adding the MetalLB Operator:

- Bare metal

- VMware vSphere

- IBM Z® and IBM® LinuxONE

- IBM Z® and IBM® LinuxONE for Red Hat Enterprise Linux (RHEL) KVM

- IBM Power®

MetalLB Operator and MetalLB are supported with the OpenShift SDN and OVN-Kubernetes network providers.

34.1.7.2. Limitations for layer 2 mode

34.1.7.2.1. Single-node bottleneck

Because MetalLB routes all traffic for a service through a single node, the node can become a bottleneck and limit performance.

Layer 2 mode limits the ingress bandwidth for your service to the bandwidth of a single node. This is a fundamental limitation of using ARP and NDP to direct traffic.

34.1.7.2.2. Slow failover performance

Failover between nodes depends on cooperation from the clients. When a failover occurs, MetalLB sends gratuitous ARP packets to notify clients that the MAC address associated with the service IP has changed.

Most client operating systems handle gratuitous ARP packets correctly and update their neighbor caches promptly. When clients update their caches quickly, failover completes within a few seconds. Clients typically fail over to a new node within 10 seconds. However, some client operating systems either do not handle gratuitous ARP packets at all or have outdated implementations that delay the cache update.

Recent versions of common operating systems such as Windows, macOS, and Linux implement layer 2 failover correctly. Issues with slow failover are not expected except for older and less common client operating systems.

To minimize the impact from a planned failover on outdated clients, keep the old node running for a few minutes after flipping leadership. The old node can continue to forward traffic for outdated clients until their caches refresh.

During an unplanned failover, the service IPs are unreachable until the outdated clients refresh their cache entries.

34.1.7.2.3. Additional Network and MetalLB cannot use same network

Using the same VLAN for both MetalLB and an additional network interface set up on a source pod might result in a connection failure. This occurs when both the MetalLB IP and the source pod reside on the same node.

To avoid connection failures, place the MetalLB IP in a different subnet from the one where the source pod resides. This configuration ensures that traffic from the source pod will take the default gateway. Consequently, the traffic can effectively reach its destination by using the OVN overlay network, ensuring that the connection functions as intended.

34.1.7.3. Limitations for BGP mode

34.1.7.3.1. Node failure can break all active connections

MetalLB shares a limitation that is common to BGP-based load balancing. When a BGP session terminates, such as when a node fails or when a speaker pod restarts, the session termination might result in resetting all active connections. End users can experience a Connection reset by peer message.

The consequence of a terminated BGP session is implementation-specific for each router manufacturer. However, you can anticipate that a change in the number of speaker pods affects the number of BGP sessions and that active connections with BGP peers will break.

To avoid or reduce the likelihood of a service interruption, you can specify a node selector when you add a BGP peer. When you limit the number of nodes that start BGP sessions, a fault on a node that does not have a BGP session has no effect on connections to the service.

34.1.7.3.2. Support for a single ASN and a single router ID only

When you add a BGP peer custom resource, you specify the spec.myASN field to identify the Autonomous System Number (ASN) that MetalLB belongs to. OpenShift Container Platform uses an implementation of BGP with MetalLB that requires MetalLB to belong to a single ASN. If you attempt to add a BGP peer and specify a different value for spec.myASN than an existing BGP peer custom resource, you receive an error.

Similarly, when you add a BGP peer custom resource, the spec.routerID field is optional. If you specify a value for this field, you must specify the same value for all other BGP peer custom resources that you add.

The limitation to support a single ASN and a single router ID differs from the community-supported implementation of MetalLB.
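As a sketch of this restriction, the following two BGP peer custom resources point at different routers but share the same spec.myASN and spec.routerID values. The resource names, peer addresses, AS numbers (from the range reserved for documentation), and router ID are hypothetical.

```yaml
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-peer-a   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 192.0.2.1     # assumed router address
  peerASN: 64501             # the peers can belong to different Autonomous Systems
  myASN: 64500               # must be identical in every BGPPeer resource
  routerID: 192.0.2.10       # optional, but if set it must be identical everywhere
---
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-peer-b   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 192.0.2.2     # assumed router address
  peerASN: 64502
  myASN: 64500               # same value as doc-example-peer-a
  routerID: 192.0.2.10       # same value as doc-example-peer-a
```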

34.2. Installing the MetalLB Operator

As a cluster administrator, you can add the MetalLB Operator so that the Operator can manage the lifecycle for an instance of MetalLB on your cluster.

MetalLB and IP failover are incompatible. If you configured IP failover for your cluster, perform the steps to remove IP failover before you install the Operator.

34.2.1. Installing the MetalLB Operator from the OperatorHub using the web console

As a cluster administrator, you can install the MetalLB Operator by using the OpenShift Container Platform web console.

Prerequisites

- Log in as a user with cluster-admin privileges.

Procedure

- In the OpenShift Container Platform web console, navigate to Operators → OperatorHub.

- Type a keyword into the Filter by keyword box or scroll to find the Operator you want. For example, type metallb to find the MetalLB Operator.

  You can also filter options by Infrastructure Features. For example, select Disconnected if you want to see Operators that work in disconnected environments, also known as restricted network environments.

- On the Install Operator page, accept the defaults and click Install.

Verification

To confirm that the installation is successful:

- Navigate to the Operators → Installed Operators page.

- Check that the Operator is installed in the openshift-operators namespace and that its status is Succeeded.

If the Operator is not installed successfully, check the status of the Operator and review the logs:

- Navigate to the Operators → Installed Operators page and inspect the Status column for any errors or failures.

- Navigate to the Workloads → Pods page and check the logs in any pods in the openshift-operators project that are reporting issues.

34.2.2. Installing from OperatorHub using the CLI

Instead of using the OpenShift Container Platform web console, you can install an Operator from OperatorHub using the CLI. You can use the OpenShift CLI (oc) to install the MetalLB Operator.

It is recommended that when using the CLI you install the Operator in the metallb-system namespace.

Prerequisites

- A cluster installed on bare-metal hardware.

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

Procedure

Create a namespace for the MetalLB Operator by entering the following command:
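A minimal sketch of a manifest for this step, assuming the recommended metallb-system namespace. You could save it to a file with a name of your choice and apply it with oc apply -f.

```yaml
# Namespace that holds the MetalLB Operator and, later, the MetalLB instance.
apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
```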

Create an Operator group custom resource (CR) in the namespace:
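A minimal sketch of an Operator group manifest, assuming the metallb-system namespace. The metallb-operator name is taken from the example output of the oc get operatorgroup command in this procedure. As with the namespace, you could apply the manifest with oc apply -f.

```yaml
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: metallb-operator      # name shown in the oc get operatorgroup example output
  namespace: metallb-system
```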

Confirm the Operator group is installed in the namespace:

    $ oc get operatorgroup -n metallb-system

Example output

    NAME               AGE
    metallb-operator   14m

Create a Subscription CR:

Define the Subscription CR and save the YAML file, for example, metallb-sub.yaml:
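A sketch of what the metallb-sub.yaml file might contain, assuming the metallb-system namespace. The subscription name and the stable channel are assumptions; check the channels that your catalog offers. The redhat-operators value is the one that this procedure requires.

```yaml
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: metallb-operator-sub             # hypothetical name
  namespace: metallb-system
spec:
  channel: stable                        # assumed channel
  name: metallb-operator                 # package name of the Operator
  source: redhat-operators               # required value
  sourceNamespace: openshift-marketplace
```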

In the Subscription CR, you must specify the redhat-operators value as the catalog source.

To create the Subscription CR, run the following command:

    $ oc create -f metallb-sub.yaml

Optional: To ensure BGP and BFD metrics appear in Prometheus, you can label the namespace as in the following command:

    $ oc label ns metallb-system "openshift.io/cluster-monitoring=true"

Verification

The verification steps assume the MetalLB Operator is installed in the metallb-system namespace.

Confirm the install plan is in the namespace:

    $ oc get installplan -n metallb-system

Example output

    NAME            CSV                                    APPROVAL    APPROVED
    install-wzg94   metallb-operator.4.15.0-nnnnnnnnnnnn   Automatic   true

Note: Installation of the Operator might take a few seconds.

To verify that the Operator is installed, enter the following command:

    $ oc get clusterserviceversion -n metallb-system \
      -o custom-columns=Name:.metadata.name,Phase:.status.phase

Example output

    Name                                   Phase
    metallb-operator.4.15.0-nnnnnnnnnnnn   Succeeded

34.2.3. Starting MetalLB on your cluster

After you install the Operator, you need to configure a single instance of a MetalLB custom resource. After you configure the custom resource, the Operator starts MetalLB on your cluster.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

- Install the MetalLB Operator.

Procedure

This procedure assumes the MetalLB Operator is installed in the metallb-system namespace. If you installed using the web console, substitute openshift-operators for the namespace.

Create a single instance of a MetalLB custom resource:
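A minimal sketch of the custom resource, assuming the metallb-system namespace. The metallb name follows the upstream examples and is an assumption here. You could apply the manifest with oc apply -f.

```yaml
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb
  namespace: metallb-system   # use openshift-operators if you installed from the web console
```

An empty spec accepts the defaults, which start the controller deployment and a speaker pod on each node.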


Verification

Confirm that the deployment for the MetalLB controller and the daemon set for the MetalLB speaker are running.

Verify that the deployment for the controller is running:

    $ oc get deployment -n metallb-system controller

Example output

    NAME         READY   UP-TO-DATE   AVAILABLE   AGE
    controller   1/1     1            1           11m

Verify that the daemon set for the speaker is running:

    $ oc get daemonset -n metallb-system speaker

Example output

    NAME      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
    speaker   6         6         6       6            6           kubernetes.io/os=linux   18m

The example output indicates 6 speaker pods. The number of speaker pods in your cluster might differ from the example output. Make sure the output indicates one pod for each node in your cluster.

34.2.4. Deployment specifications for MetalLB

When you start an instance of MetalLB using the MetalLB custom resource, you can configure deployment specifications in the MetalLB custom resource to manage how the controller or speaker pods deploy and run in your cluster. Use these deployment specifications to manage the following tasks:

- Select nodes for MetalLB pod deployment.

- Manage scheduling by using pod priority and pod affinity.

- Assign CPU limits for MetalLB pods.

- Assign a container RuntimeClass for MetalLB pods.

- Assign metadata for MetalLB pods.

34.2.4.1. Limit speaker pods to specific nodes

By default, when you start MetalLB with the MetalLB Operator, the Operator starts an instance of a speaker pod on each node in the cluster. Only the nodes with a speaker pod can advertise a load balancer IP address. You can configure the MetalLB custom resource with a node selector to specify which nodes run the speaker pods.

The most common reason to limit the speaker pods to specific nodes is to ensure that only nodes with network interfaces on specific networks advertise load balancer IP addresses. Only the nodes with a running speaker pod are advertised as destinations of the load balancer IP address.

If you limit the speaker pods to specific nodes and specify local for the external traffic policy of a service, then you must ensure that the application pods for the service are deployed to the same nodes.

Example configuration to limit speaker pods to worker nodes
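A sketch of such a configuration, assuming the metallb-system namespace and a MetalLB custom resource named metallb. The taint key Example and the NoExecute effect are hypothetical values. The comments marked (1) and (2) correspond to the numbered descriptions for this example.

```yaml
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb
  namespace: metallb-system
spec:
  nodeSelector:                          # (1) run speaker pods only on worker nodes
    node-role.kubernetes.io/worker: ""
  speakerTolerations:                    # (2) tolerate taints that match key and effect
    - key: "Example"                     # hypothetical taint key
      operator: "Exists"
      effect: "NoExecute"                # hypothetical effect
```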

- 1: The example configuration assigns the speaker pods to worker nodes, but you can specify labels that you assigned to nodes or any valid node selector.

- 2: In this example configuration, the pod that this toleration is attached to tolerates any taint that matches the key value and effect value using the operator.

After you apply a manifest with the spec.nodeSelector field, you can check the number of pods that the Operator deployed with the oc get daemonset -n metallb-system speaker command. Similarly, you can display the nodes that match your labels with a command like oc get nodes -l node-role.kubernetes.io/worker=.

You can optionally use affinity rules to control which nodes the speaker pods should, or should not, be scheduled on. You can also limit these pods by applying a list of tolerations. For more information about affinity rules, taints, and tolerations, see the additional resources.

34.2.4.2. Configuring pod priority and pod affinity in a MetalLB deployment

You can optionally assign pod priority and pod affinity rules to controller and speaker pods by configuring the MetalLB custom resource. The pod priority indicates the relative importance of a pod on a node and schedules the pod based on this priority. Set a high priority on your controller or speaker pod to ensure scheduling priority over other pods on the node.

Pod affinity manages relationships among pods. Assign pod affinity to the controller or speaker pods to control on what node the scheduler places the pod in the context of pod relationships. For example, you can use pod affinity rules to ensure that certain pods are located on the same node or nodes, which can help improve network communication and reduce latency between those components.

Prerequisites

- You are logged in as a user with cluster-admin privileges.

- You have installed the MetalLB Operator.

- You have started the MetalLB Operator on your cluster.

Procedure

Create a PriorityClass custom resource, such as myPriorityClass.yaml, to configure the priority level. This example defines a PriorityClass named high-priority with a value of 1000000. Pods that are assigned this priority class are considered higher priority during scheduling compared to pods with lower priority classes:

    apiVersion: scheduling.k8s.io/v1
    kind: PriorityClass
    metadata:
      name: high-priority
    value: 1000000

Apply the PriorityClass custom resource configuration:

    $ oc apply -f myPriorityClass.yaml

Create a MetalLB custom resource, such as MetalLBPodConfig.yaml, to specify the priorityClassName and podAffinity values:

- Specifies the priority class for the MetalLB controller pods. In this case, it is set to

high-priority. - 2

- Specifies that you are configuring pod affinity rules. These rules dictate how pods are scheduled in relation to other pods or nodes. This configuration instructs the scheduler to schedule pods that have the label

app: metallbonto nodes that share the same hostname. This helps to co-locate MetalLB-related pods on the same nodes, potentially optimizing network communication, latency, and resource usage between these pods.
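A MetalLB custom resource that matches the two callouts above might look like the following sketch. The resource name metallb and the use of the same settings for both controllerConfig and speakerConfig are assumptions for illustration; the priority class name, the app: metallb label, and the hostname topology come from the callout text.

```yaml
# Hypothetical MetalLBPodConfig.yaml; adjust names and labels to your cluster.
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb                 # assumed resource name
  namespace: metallb-system
spec:
  controllerConfig:
    priorityClassName: high-priority          # callout 1
    affinity:
      podAffinity:                            # callout 2
        requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: metallb
          topologyKey: kubernetes.io/hostname # co-locate on nodes with the same hostname
  speakerConfig:
    priorityClassName: high-priority
    affinity:
      podAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: metallb
          topologyKey: kubernetes.io/hostname
```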

Apply the MetalLB custom resource configuration:

$ oc apply -f MetalLBPodConfig.yaml

Verification

To view the priority class that you assigned to pods in the metallb-system namespace, run the following command:

$ oc get pods -n metallb-system -o custom-columns=NAME:.metadata.name,PRIORITY:.spec.priorityClassName

Example output

NAME                                                 PRIORITY
controller-584f5c8cd8-5zbvg                          high-priority
metallb-operator-controller-manager-9c8d9985-szkqg   <none>
metallb-operator-webhook-server-c895594d4-shjgx      <none>
speaker-dddf7                                        high-priority

To verify that the scheduler placed pods according to pod affinity rules, view the metadata for the pod's node or nodes by running the following command:

$ oc get pod -o=custom-columns=NODE:.spec.nodeName,NAME:.metadata.name -n metallb-system

34.2.4.3. Configuring pod CPU limits in a MetalLB deployment

You can optionally assign pod CPU limits to controller and speaker pods by configuring the MetalLB custom resource. Defining CPU limits for the controller or speaker pods helps you to manage compute resources on the node. This ensures all pods on the node have the necessary compute resources to manage workloads and cluster housekeeping.

Prerequisites

- You are logged in as a user with cluster-admin privileges.
- You have installed the MetalLB Operator.

Procedure

Create a MetalLB custom resource file, such as CPULimits.yaml, to specify the cpu value for the controller and speaker pods.

Apply the MetalLB custom resource configuration:

$ oc apply -f CPULimits.yaml
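As a sketch of the CPULimits.yaml file from the first step, the following manifest sets a CPU limit on each pod type. The resource name metallb and the 200m and 300m values are assumptions chosen for illustration, not recommended settings.

```yaml
# Hypothetical CPULimits.yaml; the limit values are placeholders.
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb                 # assumed resource name
  namespace: metallb-system
spec:
  controllerConfig:
    resources:
      limits:
        cpu: "200m"             # CPU limit for the controller pod
  speakerConfig:
    resources:
      limits:
        cpu: "300m"             # CPU limit for each speaker pod
```

The limits use the standard Kubernetes resource syntax, so the Limits section in the oc describe pod output is the place to confirm that they took effect.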

Verification

To view compute resources for a pod, run the following command, replacing <pod_name> with your target pod:

$ oc describe pod <pod_name>

34.2.6. Next steps

34.3. Upgrading the MetalLB Operator

By default, the Subscription custom resource (CR) that subscribes the metallb-system namespace to the MetalLB Operator sets the installPlanApproval parameter to Automatic. This means that when Red Hat-provided Operator catalogs include a newer version of the MetalLB Operator, the MetalLB Operator is automatically upgraded.

If you need to manually control upgrading the MetalLB Operator, set the installPlanApproval parameter to Manual.

34.3.1. Manually upgrading the MetalLB Operator

To manually control upgrading the MetalLB Operator, you must edit the Subscription custom resource (CR) that subscribes the metallb-system namespace to the Operator. A Subscription CR is created as part of the Operator installation, and the CR has the installPlanApproval parameter set to Automatic by default.

Prerequisites

- You updated your cluster to the latest z-stream release.

- You used OperatorHub to install the MetalLB Operator.

- Access the cluster as a user with the cluster-admin role.

Procedure

Get the YAML definition of the metallb-operator subscription in the metallb-system namespace by entering the following command:

$ oc -n metallb-system get subscription metallb-operator -o yaml

Edit the Subscription CR by setting the installPlanApproval parameter to Manual.

Find the latest OpenShift Container Platform 4.15 version of the MetalLB Operator by entering the following command:

$ oc -n metallb-system get csv

Example output

NAME                       DISPLAY            VERSION   REPLACES   PHASE
metallb-operator.v4.15.0   MetalLB Operator   4.15.0               Succeeded

Check the install plan that exists in the namespace by entering the following command:

$ oc -n metallb-system get installplan

Example output that shows install-tsz2g as a manual install plan

NAME            CSV                                     APPROVAL    APPROVED
install-shpmd   metallb-operator.v4.15.0-202502261233   Automatic   true
install-tsz2g   metallb-operator.v4.15.0-202503102139   Manual      false

Edit the install plan that exists in the namespace by entering the following command. Ensure that you replace <name_of_installplan> with the name of the install plan, such as install-tsz2g:

$ oc edit installplan <name_of_installplan> -n metallb-system

With the install plan open in your editor, set the spec.approval parameter to Manual and set the spec.approved parameter to true.

Note

After you edit the install plan, the upgrade operation starts. If you enter the oc -n metallb-system get csv command during the upgrade operation, the output might show the Replacing or the Pending status.
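For the Subscription edit earlier in this procedure, the result might resemble the following sketch. Only the installPlanApproval value is taken from the procedure; the channel, source, and sourceNamespace values are assumptions that depend on how the Operator was installed, so keep whatever your existing Subscription already contains.

```yaml
# Sketch of the edited Subscription; only installPlanApproval is changed.
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: metallb-operator
  namespace: metallb-system
spec:
  channel: stable                        # assumed channel
  installPlanApproval: Manual            # switches upgrades to manual approval
  name: metallb-operator
  source: redhat-operators               # assumed catalog source
  sourceNamespace: openshift-marketplace # assumed catalog namespace
```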

Verification

Verify the upgrade was successful by entering the following command:

$ oc -n metallb-system get csv

Example output

NAME                                    DISPLAY            VERSION               REPLACES                                PHASE
metallb-operator.v4.15.0-202503102139   MetalLB Operator   4.15.0-202503102139   metallb-operator.v4.15.0-202502261233   Succeeded

34.3.2. Additional resources

34.4. Configuring MetalLB address pools

As a cluster administrator, you can add, modify, and delete address pools. The MetalLB Operator uses the address pool custom resources to set the IP addresses that MetalLB can assign to services. The examples assume that the namespace is metallb-system.

34.4.1. About the IPAddressPool custom resource

The address pool custom resource definition (CRD) and API documented in "Load balancing with MetalLB" in OpenShift Container Platform 4.10 can still be used in 4.15. However, the enhanced functionality associated with advertising an IP address from an IPAddressPool with layer 2 protocols, or the BGP protocol, is not supported when using the AddressPool CRD.

The fields for the IPAddressPool custom resource are described in the following tables.

| Field | Type | Description |
|---|---|---|
| | | Specifies the name for the address pool. When you add a service, you can specify this pool name in the |
| | | Specifies the namespace for the address pool. Specify the same namespace that the MetalLB Operator uses. |
| | | Optional: Specifies the key value pair assigned to the |
| | | Specifies a list of IP addresses for MetalLB Operator to assign to services. You can specify multiple ranges in a single pool; they will all share the same settings. Specify each range in CIDR notation or as starting and ending IP addresses separated with a hyphen. |
| | | Optional: Specifies whether MetalLB automatically assigns IP addresses from this pool. Specify Note: For IP address pool configurations, ensure the addresses field specifies only IPs that are available and not in use by other network devices, especially gateway addresses, to prevent conflicts when |
| | | Optional: This ensures when enabled that IP addresses ending |

You can assign IP addresses from an IPAddressPool to services and namespaces by configuring the spec.serviceAllocation specification.

| Field | Type | Description |
|---|---|---|
| | | Optional: Defines the priority between IP address pools when more than one IP address pool matches a service or namespace. A lower number indicates a higher priority. |
| | | Optional: Specifies a list of namespaces that you can assign to IP addresses in an IP address pool. |
| | | Optional: Specifies namespace labels that you can assign to IP addresses from an IP address pool by using label selectors in a list format. |
| | | Optional: Specifies service labels that you can assign to IP addresses from an address pool by using label selectors in a list format. |

34.4.2. Configuring an address pool

As a cluster administrator, you can add address pools to your cluster to control the IP addresses that MetalLB can assign to load-balancer services.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

Create a file, such as ipaddresspool.yaml, with content like the following example:

- 1: This label assigned to the IPAddressPool can be referenced by the ipAddressPoolSelectors in the BGPAdvertisement CRD to associate the IPAddressPool with the advertisement.
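A file that fits this step and the callout above might look like the following sketch. The pool name doc-example matches the verification command in this procedure; the zone: east label and the address ranges are assumptions chosen for illustration.

```yaml
# Hypothetical ipaddresspool.yaml; label and ranges are placeholders.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example
  labels:
    zone: east                  # callout 1: label that an advertisement can select on
spec:
  addresses:
  - 203.0.113.1-203.0.113.10    # start and end addresses separated by a hyphen
  - 203.0.113.64/28             # CIDR notation
```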

Apply the configuration for the IP address pool:

$ oc apply -f ipaddresspool.yaml

Verification

View the address pool by entering the following command:

$ oc describe -n metallb-system IPAddressPool doc-example

Confirm that the address pool name, such as doc-example, and the IP address ranges exist in the output.

34.4.3. Configure MetalLB address pool for VLAN

As a cluster administrator, you can add address pools to your cluster to control the IP addresses on a created VLAN that MetalLB can assign to load-balancer services.

Prerequisites

- Install the OpenShift CLI (oc).
- Configure a separate VLAN.
- Log in as a user with cluster-admin privileges.

Procedure

Create a file, such as ipaddresspool-vlan.yaml, that is similar to the following example:

- 1: This label assigned to the IPAddressPool can be referenced by the ipAddressPoolSelectors in the BGPAdvertisement CRD to associate the IPAddressPool with the advertisement.
- 2: This IP range must match the subnet assigned to the VLAN on your network. To support layer 2 (L2) mode, the IP address range must be within the same subnet as the cluster nodes.
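A VLAN address pool consistent with the two callouts above could be sketched as follows. The pool name, the zone: east label, and the 192.168.100.0/24 range are assumptions; substitute the subnet that is actually assigned to your VLAN.

```yaml
# Hypothetical ipaddresspool-vlan.yaml; name, label, and range are placeholders.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example-vlan
  labels:
    zone: east                       # callout 1: label for ipAddressPoolSelectors
spec:
  addresses:
  - 192.168.100.1-192.168.100.254    # callout 2: must match the VLAN subnet
```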

Apply the configuration for the IP address pool:

$ oc apply -f ipaddresspool-vlan.yaml

To ensure that this configuration applies to the VLAN, set the gatewayConfig.ipForwarding field in the network configuration spec to Global.

- Run the following command to edit the network configuration custom resource (CR):

  $ oc edit network.operator.openshift/cluster

- Update the spec.defaultNetwork.ovnKubernetesConfig section so that gatewayConfig.ipForwarding is set to Global.
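After the edit, the relevant part of the network configuration CR might resemble this sketch. Only the fields named in the step above are shown; the type value assumes an OVN-Kubernetes cluster, and any other fields already present in your CR stay as they are.

```yaml
# Fragment of the cluster Network CR after the edit; other fields omitted.
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  defaultNetwork:
    type: OVNKubernetes         # assumed network plugin
    ovnKubernetesConfig:
      gatewayConfig:
        ipForwarding: Global    # allow IP forwarding on all interfaces
```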

34.4.4. Example address pool configurations

The following examples show address pool configurations for specific scenarios.

34.4.4.1. Example: IPv4 and CIDR ranges

You can specify a range of IP addresses in classless inter-domain routing (CIDR) notation. You can combine CIDR notation with the notation that uses a hyphen to separate lower and upper bounds.
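As a sketch of that combination, the following pool mixes two CIDR blocks with one hyphenated range. The pool name and all addresses are assumptions chosen for illustration.

```yaml
# Hypothetical pool that combines CIDR notation with a hyphenated range.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: doc-example-cidr
  namespace: metallb-system
spec:
  addresses:
  - 192.168.100.0/24                # CIDR notation
  - 192.168.200.0/24                # CIDR notation
  - 192.168.255.1-192.168.255.5     # lower and upper bounds separated by a hyphen
```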

34.4.4.2. Example: Assign IP addresses

You can set the autoAssign field to false to prevent MetalLB from automatically assigning IP addresses from the address pool. You can then assign a single IP address or multiple IP addresses from an IP address pool. To assign an IP address, append the /32 CIDR notation to the target IP address in the spec.addresses parameter. This setting ensures that only the specific IP address is available for assignment, leaving non-reserved IP addresses for application use.

Example IPAddressPool CR that assigns multiple IP addresses
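A minimal sketch of such a CR follows. The pool name and both addresses are placeholders chosen for illustration; the /32 suffix and the autoAssign: false setting are the parts that the paragraph above describes.

```yaml
# Hypothetical pool that reserves two individual addresses.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: doc-example-reserved
  namespace: metallb-system
spec:
  addresses:
  - 192.168.100.1/32            # a single address, written with a /32 prefix
  - 192.168.200.1/32
  autoAssign: false             # MetalLB does not hand these out automatically
```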

When you add a service, you can request a specific IP address from the address pool or you can specify the pool name in an annotation to request any IP address from the pool.

34.4.4.3. Example: IPv4 and IPv6 addresses

You can add address pools that use IPv4 and IPv6. You can specify multiple ranges in the addresses list, as in the IPv4 examples.

Whether the service is assigned a single IPv4 address, a single IPv6 address, or both is determined by how you add the service. The spec.ipFamilies and spec.ipFamilyPolicy fields control how IP addresses are assigned to the service.

- 1: Where 10.0.100.0/28 is the local network IP address followed by the /28 network prefix.
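A dual-stack pool that uses the 10.0.100.0/28 block from the callout above might be sketched as follows. The pool name and the IPv6 range, which is taken from the 2001:db8::/32 documentation prefix, are assumptions.

```yaml
# Hypothetical dual-stack pool; the IPv6 range is a documentation placeholder.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: doc-example-dual-stack
  namespace: metallb-system
spec:
  addresses:
  - 10.0.100.0/28                      # callout 1: local network address and /28 prefix
  - 2001:db8:2::1-2001:db8:2::100      # IPv6 range, start and end separated by a hyphen
```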

34.4.4.4. Example: Assign IP address pools to services or namespaces

You can assign IP addresses from an IPAddressPool to services and namespaces that you specify.

If you assign a service or namespace to more than one IP address pool, MetalLB uses an available IP address from the higher-priority IP address pool. If no IP addresses are available from the assigned IP address pools with a high priority, MetalLB uses available IP addresses from an IP address pool with lower priority or no priority.

You can use the matchLabels label selector, the matchExpressions label selector, or both, for the namespaceSelectors and serviceSelectors specifications. This example demonstrates one label selector for each specification.

- 1: Assign a priority to the address pool. A lower number indicates a higher priority.
- 2: Assign one or more namespaces to the IP address pool in a list format.
- 3: Assign one or more namespace labels to the IP address pool by using label selectors in a list format.
- 4: Assign one or more service labels to the IP address pool by using label selectors in a list format.
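The four callouts above map onto the spec.serviceAllocation fields roughly as in the following sketch. The pool name, address block, namespace names, and label keys and values are all assumptions chosen for illustration.

```yaml
# Hypothetical pool restricted to selected namespaces and services.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: doc-example-service-allocation
  namespace: metallb-system
spec:
  addresses:
  - 192.168.20.0/24
  serviceAllocation:
    priority: 50                # callout 1: lower number means higher priority
    namespaces:                 # callout 2
    - namespace-a
    - namespace-b
    namespaceSelectors:         # callout 3: uses matchLabels
    - matchLabels:
        zone: east
    serviceSelectors:           # callout 4: uses matchExpressions
    - matchExpressions:
      - key: security
        operator: In
        values:
        - S1
```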

34.4.5. Next steps

34.5. About advertising for the IP address pools

You can configure MetalLB so that the IP address is advertised with layer 2 protocols, the BGP protocol, or both. With layer 2, MetalLB provides a fault-tolerant external IP address. With BGP, MetalLB provides fault-tolerance for the external IP address and load balancing.

MetalLB supports advertising using L2 and BGP for the same set of IP addresses.

MetalLB provides the flexibility to assign address pools to specific BGP peers, which effectively limits them to a subset of nodes on the network. This allows for more complex configurations, for example facilitating the isolation of nodes or the segmentation of the network.

34.5.1. About the BGPAdvertisement custom resource

The fields for the BGPAdvertisements object are defined in the following table:

| Field | Type | Description |
|---|---|---|
| | | Specifies the name for the BGP advertisement. |
| | | Specifies the namespace for the BGP advertisement. Specify the same namespace that the MetalLB Operator uses. |
| | | Optional: Specifies the number of bits to include in a 32-bit CIDR mask. To aggregate the routes that the speaker advertises to BGP peers, the mask is applied to the routes for several service IP addresses and the speaker advertises the aggregated route. For example, with an aggregation length of |
| | | Optional: Specifies the number of bits to include in a 128-bit CIDR mask. For example, with an aggregation length of |
| | | Optional: Specifies one or more BGP communities. Each community is specified as two 16-bit values separated by the colon character. Well-known communities must be specified as 16-bit values: |
| | | Optional: Specifies the local preference for this advertisement. This BGP attribute applies to BGP sessions within the Autonomous System. |
| | | Optional: The list of |
| | | Optional: A selector for the |
| | | Optional: |
| | | Optional: Use a list to specify the |

34.5.2. Configuring MetalLB with a BGP advertisement and a basic use case

Configure MetalLB as follows so that the peer BGP routers receive one 203.0.113.200/32 route and one fc00:f853:ccd:e799::1/128 route for each load-balancer IP address that MetalLB assigns to a service. Because the localPref and communities fields are not specified, the routes are advertised with localPref set to zero and no BGP communities.

34.5.2.1. Example: Advertise a basic address pool configuration with BGP

Configure MetalLB as follows so that the IPAddressPool is advertised with the BGP protocol.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

Create an IP address pool.

- Create a file, such as ipaddresspool.yaml, that defines the IP address pool.
- Apply the configuration for the IP address pool:

  $ oc apply -f ipaddresspool.yaml

Create a BGP advertisement.

- Create a file, such as bgpadvertisement.yaml, that defines the BGP advertisement.
- Apply the configuration:

  $ oc apply -f bgpadvertisement.yaml
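The two files from this procedure might be sketched as follows, shown here as two YAML documents in one listing. The resource names are assumptions; the address blocks are chosen so that they contain the 203.0.113.200 and fc00:f853:ccd:e799::1 addresses mentioned in the basic use case.

```yaml
# Hypothetical ipaddresspool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example-bgp-basic
spec:
  addresses:
  - 203.0.113.200/30
  - fc00:f853:ccd:e799::/124
---
# Hypothetical bgpadvertisement.yaml
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgpadvertisement-basic
  namespace: metallb-system
spec:
  ipAddressPools:
  - doc-example-bgp-basic       # advertise addresses from the pool above
```

Because the advertisement sets neither localPref nor communities, it corresponds to the default behavior that the basic use case describes.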

34.5.3. Configuring MetalLB with a BGP advertisement and an advanced use case

Configure MetalLB as follows so that MetalLB assigns IP addresses to load-balancer services in the ranges between 203.0.113.200 and 203.0.113.203 and between fc00:f853:ccd:e799::0 and fc00:f853:ccd:e799::f.

To explain the two BGP advertisements, consider an instance when MetalLB assigns the IP address of 203.0.113.200 to a service. With that IP address as an example, the speaker advertises two routes to BGP peers:

- 203.0.113.200/32, with localPref set to 100 and the community set to the numeric value of the NO_ADVERTISE community. This specification indicates to the peer routers that they can use this route but they should not propagate information about this route to BGP peers.
- 203.0.113.200/30, which aggregates the load-balancer IP addresses assigned by MetalLB into a single route. MetalLB advertises the aggregated route to BGP peers with the community attribute set to 8000:800. BGP peers propagate the 203.0.113.200/30 route to other BGP peers. When traffic is routed to a node with a speaker, the 203.0.113.200/32 route is used to forward the traffic into the cluster and to a pod that is associated with the service.

As you add more services and MetalLB assigns more load-balancer IP addresses from the pool, peer routers receive one local route, 203.0.113.20x/32, for each service, as well as the 203.0.113.200/30 aggregate route. Each service that you add generates the /30 route, but MetalLB deduplicates the routes to one BGP advertisement before communicating with peer routers.

34.5.3.1. Example: Advertise an advanced address pool configuration with BGP

Configure MetalLB as follows so that the IPAddressPool is advertised with the BGP protocol.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

Create an IP address pool.

- Create a file, such as ipaddresspool.yaml, that defines the IP address pool.
- Apply the configuration for the IP address pool:

  $ oc apply -f ipaddresspool.yaml

Create a BGP advertisement.

- Create a file, such as bgpadvertisement1.yaml, that defines the first BGP advertisement.
- Apply the configuration:

  $ oc apply -f bgpadvertisement1.yaml

- Create a file, such as bgpadvertisement2.yaml, that defines the second BGP advertisement.
- Apply the configuration:

  $ oc apply -f bgpadvertisement2.yaml
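The three files from this procedure could be sketched as follows, shown as three YAML documents in one listing. The resource names are assumptions. The address blocks, localPref, community values, and /32 and /30 lengths follow the advanced use case description; 65535:65282 is the numeric form of NO_ADVERTISE, and the aggregationLengthV6 value of 124 is an assumption that mirrors the IPv6 block size.

```yaml
# Hypothetical ipaddresspool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example-bgp-adv
spec:
  addresses:
  - 203.0.113.200/30            # 203.0.113.200 through 203.0.113.203
  - fc00:f853:ccd:e799::/124    # fc00:f853:ccd:e799::0 through fc00:f853:ccd:e799::f
---
# Hypothetical bgpadvertisement1.yaml: one /32 route per service address
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgpadvertisement-adv-1
  namespace: metallb-system
spec:
  ipAddressPools:
  - doc-example-bgp-adv
  communities:
  - "65535:65282"               # numeric value of NO_ADVERTISE
  aggregationLength: 32
  localPref: 100
---
# Hypothetical bgpadvertisement2.yaml: the aggregated route
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgpadvertisement-adv-2
  namespace: metallb-system
spec:
  ipAddressPools:
  - doc-example-bgp-adv
  communities:
  - "8000:800"
  aggregationLength: 30
  aggregationLengthV6: 124      # assumed IPv6 aggregation length
```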

34.5.4. Advertising an IP address pool from a subset of nodes

To advertise an IP address from an IP address pool from a specific set of nodes only, use the .spec.nodeSelectors specification in the BGPAdvertisement custom resource. This specification associates a pool of IP addresses with a set of nodes in the cluster. This is useful when you have nodes on different subnets in a cluster and you want to advertise IP addresses from an address pool from a specific subnet, for example a public-facing subnet only.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

Create an IP address pool, pool1, by using a custom resource.

Control which nodes in the cluster the IP address from pool1 advertises from by defining the .spec.nodeSelectors value in the BGPAdvertisement custom resource.

In this example, the IP address from pool1 advertises from NodeA and NodeB only.
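The two resources in this procedure might be sketched as follows. The pool name pool1 and the NodeA and NodeB hostnames come from the text; the advertisement name, the address range, and the use of the kubernetes.io/hostname label to identify the nodes are assumptions.

```yaml
# Hypothetical address pool
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: pool1
spec:
  addresses:
  - 203.0.113.100-203.0.113.120   # placeholder range
---
# Hypothetical advertisement limited to two nodes
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: example
  namespace: metallb-system
spec:
  ipAddressPools:
  - pool1
  nodeSelectors:                  # only matching nodes advertise pool1
  - matchLabels:
      kubernetes.io/hostname: NodeA
  - matchLabels:
      kubernetes.io/hostname: NodeB
```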

34.5.5. About the L2Advertisement custom resource

The fields for the l2Advertisements object are defined in the following table:

| Field | Type | Description |
|---|---|---|
| | | Specifies the name for the L2 advertisement. |
| | | Specifies the namespace for the L2 advertisement. Specify the same namespace that the MetalLB Operator uses. |
| | | Optional: The list of |
| | | Optional: A selector for the |
| | | Optional: Important: Limiting the nodes to announce as next hops is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope. |
| | | Optional: The list of |

34.5.6. Configuring MetalLB with an L2 advertisement

Configure MetalLB as follows so that the IPAddressPool is advertised with the L2 protocol.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

Create an IP address pool.

- Create a file, such as ipaddresspool.yaml, that defines the IP address pool.
- Apply the configuration for the IP address pool:

  $ oc apply -f ipaddresspool.yaml

Create an L2 advertisement.

- Create a file, such as l2advertisement.yaml, that defines the L2 advertisement.
- Apply the configuration:

  $ oc apply -f l2advertisement.yaml
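As a sketch of the two files in this procedure, the following listing pairs a pool with an L2 advertisement that references it by name. The resource names and the address block are assumptions; for layer 2 mode, pick addresses from the subnet that your cluster nodes share.

```yaml
# Hypothetical ipaddresspool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example-l2
spec:
  addresses:
  - 192.168.10.240/28           # placeholder block in the node subnet
---
# Hypothetical l2advertisement.yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2advertisement
  namespace: metallb-system
spec:
  ipAddressPools:
  - doc-example-l2              # advertise the pool above with layer 2
```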

34.5.7. Configuring MetalLB with an L2 advertisement and label

The ipAddressPoolSelectors field in the BGPAdvertisement and L2Advertisement custom resource definitions is used to associate the IPAddressPool to the advertisement based on the label assigned to the IPAddressPool instead of the name itself.

This example shows how to configure MetalLB so that the IPAddressPool is advertised with the L2 protocol by configuring the ipAddressPoolSelectors field.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

Create an IP address pool.

- Create a file, such as ipaddresspool.yaml, that defines a labeled IP address pool.
- Apply the configuration for the IP address pool:

  $ oc apply -f ipaddresspool.yaml

Create an L2 advertisement that advertises the IP address pool by using ipAddressPoolSelectors.

- Create a file, such as l2advertisement.yaml, that defines the L2 advertisement.
- Apply the configuration:

  $ oc apply -f l2advertisement.yaml
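The label-based association in this procedure could be sketched as follows. The resource names, the address block, and the zone: east label are assumptions; the point of the example is that the advertisement selects the pool by its label instead of by its name.

```yaml
# Hypothetical ipaddresspool.yaml with a label
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example-l2-label
  labels:
    zone: east                  # label that the advertisement selects on
spec:
  addresses:
  - 172.31.249.87/32            # placeholder address
---
# Hypothetical l2advertisement.yaml that selects pools by label
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2advertisement-label
  namespace: metallb-system
spec:
  ipAddressPoolSelectors:
  - matchExpressions:
    - key: zone
      operator: In
      values:
      - east
```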

34.5.8. Configuring MetalLB with an L2 advertisement for selected interfaces

By default, the IP addresses from the IP address pool that has been assigned to the service are advertised from all the network interfaces. The interfaces field in the L2Advertisement custom resource definition is used to restrict the network interfaces that advertise the IP address pool.

This example shows how to configure MetalLB so that the IP address pool is advertised only from the network interfaces listed in the interfaces field of all nodes.

Prerequisites

- You have installed the OpenShift CLI (oc).
- You are logged in as a user with cluster-admin privileges.

Procedure

Create an IP address pool.

- Create a file, such as ipaddresspool.yaml, and enter the configuration details for the IP address pool.
- Apply the configuration for the IP address pool:

  $ oc apply -f ipaddresspool.yaml

Create an L2 advertisement that advertises the IP address pool with the interfaces selector.

- Create a YAML file, such as l2advertisement.yaml, and enter the configuration details for the advertisement.
- Apply the configuration for the advertisement:

  $ oc apply -f l2advertisement.yaml
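An advertisement restricted to named interfaces might look like the following sketch. The resource names, the address block, and the interfaceA and interfaceB interface names are hypothetical; replace them with interface names that exist on your nodes.

```yaml
# Hypothetical ipaddresspool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  namespace: metallb-system
  name: doc-example-l2
spec:
  addresses:
  - 192.168.10.240/28           # placeholder block
---
# Hypothetical l2advertisement.yaml limited to selected interfaces
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2advertisement
  namespace: metallb-system
spec:
  ipAddressPools:
  - doc-example-l2
  interfaces:                   # advertise only from these interfaces
  - interfaceA
  - interfaceB
```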

The interface selector does not affect how MetalLB chooses the node to announce a given IP by using L2. The chosen node does not announce the service if the node does not have the selected interface.

34.5.9. Configuring MetalLB with secondary networks

From OpenShift Container Platform 4.14 the default network behavior is to not allow forwarding of IP packets between network interfaces. Therefore, when MetalLB is configured on a secondary interface, you need to add a machine configuration to enable IP forwarding for only the required interfaces.

OpenShift Container Platform clusters upgraded from 4.13 are not affected because a global parameter is set during upgrade to enable global IP forwarding.

To enable IP forwarding for the secondary interface, you have two options:

- Enable IP forwarding for all interfaces.
- Enable IP forwarding for a specific interface.

Note

Enabling IP forwarding for a specific interface provides more granular control, while enabling it for all interfaces applies a global setting.

Procedure

Enable forwarding for a specific secondary interface, such as bridge-net, by creating and applying a MachineConfig CR.

- Create the MachineConfig CR to enable IP forwarding for the specified secondary interface named bridge-net.
- Save the YAML for the CR in the enable-ip-forward.yaml file:
  - 1: Node role where you want to enable IP forwarding, for example, worker.
- Apply the configuration by running the following command:

  $ oc apply -f enable-ip-forward.yaml
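One way to express the enable-ip-forward.yaml file is a MachineConfig that drops a sysctl file onto the nodes, as in the following sketch. Everything except the bridge-net interface name and the worker role is an assumption: the object name, the file path, the Ignition version, and the choice to embed the sysctl lines as a URL-encoded data URL are illustrative and should be checked against your cluster version.

```yaml
# Hypothetical enable-ip-forward.yaml; names and paths are placeholders.
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  labels:
    machineconfiguration.openshift.io/role: worker   # callout 1: node role
  name: 81-enable-bridge-net-forwarding
spec:
  config:
    ignition:
      version: 3.2.0
    storage:
      files:
      - path: /etc/sysctl.d/81-bridge-net-forwarding.conf
        mode: 420               # decimal form of octal 0644
        overwrite: true
        contents:
          # URL-encoded form of these two sysctl lines:
          #   net.ipv4.conf.bridge-net.forwarding = 1
          #   net.ipv6.conf.bridge-net.forwarding = 1
          source: data:,net.ipv4.conf.bridge-net.forwarding%20%3D%201%0Anet.ipv6.conf.bridge-net.forwarding%20%3D%201%0A
```

Scoping the sysctl keys to bridge-net keeps forwarding disabled on every other interface, which is the more granular of the two options described above.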

Alternatively, you can enable IP forwarding globally by running the following command:

$ oc patch network.operator cluster -p '{"spec":{"defaultNetwork":{"ovnKubernetesConfig":{"gatewayConfig":{"ipForwarding": "Global"}}}}}' --type=merge

34.6. Configuring MetalLB BGP peers

As a cluster administrator, you can add, modify, and delete Border Gateway Protocol (BGP) peers. The MetalLB Operator uses the BGP peer custom resources to identify which peers the MetalLB speaker pods contact to start BGP sessions. The peers receive the route advertisements for the load-balancer IP addresses that MetalLB assigns to services.

34.6.1. About the BGP peer custom resource

The fields for the BGP peer custom resource are described in the following table.

| Field | Type | Description |
|---|---|---|
| | | Specifies the name for the BGP peer custom resource. |
| | | Specifies the namespace for the BGP peer custom resource. |
| | | Specifies the Autonomous System number for the local end of the BGP session. Specify the same value in all BGP peer custom resources that you add. The range is |
| | | Specifies the Autonomous System number for the remote end of the BGP session. The range is |
| | | Specifies the IP address of the peer to contact for establishing the BGP session. |
| | | Optional: Specifies the IP address to use when establishing the BGP session. The value must be an IPv4 address. |
| | | Optional: Specifies the network port of the peer to contact for establishing the BGP session. The range is |
| | | Optional: Specifies the duration for the hold time to propose to the BGP peer. The minimum value is 3 seconds ( |
| | | Optional: Specifies the maximum interval between sending keep-alive messages to the BGP peer. If you specify this field, you must also specify a value for the |
| | | Optional: Specifies the router ID to advertise to the BGP peer. If you specify this field, you must specify the same value in every BGP peer custom resource that you add. |
| | | Optional: Specifies the MD5 password to send to the peer for routers that enforce TCP MD5 authenticated BGP sessions. |
| | | Optional: Specifies name of the authentication secret for the BGP Peer. The secret must live in the |
| | | Optional: Specifies the name of a BFD profile. |
| | | Optional: Specifies a selector, using match expressions and match labels, to control which nodes can connect to the BGP peer. |
| | | Optional: Specifies that the BGP peer is multiple network hops away. If the BGP peer is not directly connected to the same network, the speaker cannot establish a BGP session unless this field is set to |

The passwordSecret field is mutually exclusive with the password field. It contains a reference to a secret that holds the password to use. Setting both fields causes parsing to fail.
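As a rough illustration of how the fields described above fit together, the following sketch shows a BGP peer custom resource that sets several optional fields. The field names (myASN, peerASN, peerAddress, peerPort, holdTime, keepaliveTime, ebgpMultiHop) and the API version come from the upstream MetalLB BGPPeer API and are an assumption here, because this section does not spell them out. The resource name, addresses, and AS numbers are hypothetical. Check everything against the CRD installed on your cluster.

```yaml
# Hypothetical BGP peer that exercises several optional fields.
# Field names are assumed from the upstream MetalLB BGPPeer API.
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: example-peer-optional   # hypothetical name
  namespace: metallb-system
spec:
  myASN: 64500            # local AS number; use the same value in every BGP peer resource
  peerASN: 64501          # remote AS number
  peerAddress: 10.0.0.1   # hypothetical address of the router to contact
  peerPort: 179           # standard BGP port
  holdTime: "90s"         # hold time proposed to the peer
  keepaliveTime: "30s"    # keep-alive interval; set together with the hold time
  ebgpMultiHop: true      # the peer is more than one network hop away
```

The sketch sets the keep-alive interval together with a hold time and leaves out both password fields, so the mutual-exclusivity rule for password and passwordSecret does not come into play.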

34.6.2. Configuring a BGP peer

As a cluster administrator, you can add a BGP peer custom resource to exchange routing information with network routers and advertise the IP addresses for services.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.
- Configure MetalLB with a BGP advertisement.

Procedure

1. Create a file, such as bgppeer.yaml, that defines the BGP peer custom resource.

2. Apply the configuration for the BGP peer:

   $ oc apply -f bgppeer.yaml
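A minimal sketch of what a file such as bgppeer.yaml might contain is shown here. The API version and field names are assumed from the upstream MetalLB BGPPeer API. The resource name, peer address, AS numbers, and router ID are hypothetical placeholders that you replace with values from your own network.

```yaml
# bgppeer.yaml: hypothetical minimal BGP peer definition
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-peer   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 10.0.0.1    # router that receives the route advertisements
  peerASN: 64501           # AS number of that router
  myASN: 64500             # AS number that MetalLB uses locally
  routerID: 10.10.10.10    # optional; must match across all BGP peer resources
```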

34.6.3. Configure a specific set of BGP peers for a given address pool

This procedure illustrates how to:

- Configure a set of address pools (pool1 and pool2).
- Configure a set of BGP peers (peer1 and peer2).
- Configure BGP advertisement to assign pool1 to peer1 and pool2 to peer2.

Prerequisites

- Install the OpenShift CLI (oc).
- Log in as a user with cluster-admin privileges.

Procedure

1. Create address pool pool1.

   a. Create a file, such as ipaddresspool1.yaml, that defines the IP address pool pool1.

   b. Apply the configuration for the IP address pool pool1:

      $ oc apply -f ipaddresspool1.yaml

2. Create address pool pool2.

   a. Create a file, such as ipaddresspool2.yaml, that defines the IP address pool pool2.

   b. Apply the configuration for the IP address pool pool2:

      $ oc apply -f ipaddresspool2.yaml

3. Create BGP peer1.

   a. Create a file, such as bgppeer1.yaml, that defines the BGP peer peer1.

   b. Apply the configuration for the BGP peer peer1:

      $ oc apply -f bgppeer1.yaml

4. Create BGP peer2.

   a. Create a file, such as bgppeer2.yaml, that defines the BGP peer peer2.

   b. Apply the configuration for the BGP peer peer2:

      $ oc apply -f bgppeer2.yaml

5. Create BGP advertisement 1.

   a. Create a file, such as bgpadvertisement1.yaml, that defines the advertisement of pool1 to peer1.

   b. Apply the configuration:

      $ oc apply -f bgpadvertisement1.yaml

6. Create BGP advertisement 2.

   a. Create a file, such as bgpadvertisement2.yaml, that defines the advertisement of pool2 to peer2.

   b. Apply the configuration:

      $ oc apply -f bgpadvertisement2.yaml
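The six resources in this procedure can be sketched as follows. For brevity the sketch combines them into one multi-document YAML stream, whereas the procedure places each in its own file. The API versions and field names are assumed from the upstream MetalLB API. The address ranges, peer addresses, AS numbers, and advertisement names are hypothetical. Only the pool and peer names (pool1, pool2, peer1, peer2) come from the procedure.

```yaml
# ipaddresspool1.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: pool1
  namespace: metallb-system
spec:
  addresses:
    - 203.0.113.100-203.0.113.200     # hypothetical range
---
# ipaddresspool2.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: pool2
  namespace: metallb-system
spec:
  addresses:
    - 198.51.100.100-198.51.100.200   # hypothetical range
---
# bgppeer1.yaml
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: peer1
  namespace: metallb-system
spec:
  peerAddress: 10.0.0.1   # hypothetical router address
  peerASN: 64501
  myASN: 64500
---
# bgppeer2.yaml
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: peer2
  namespace: metallb-system
spec:
  peerAddress: 10.0.0.2   # hypothetical router address
  peerASN: 64501
  myASN: 64500            # same local AS number as peer1
---
# bgpadvertisement1.yaml: advertise pool1 only to peer1
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgpadvertisement-1   # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - pool1
  peers:
    - peer1
---
# bgpadvertisement2.yaml: advertise pool2 only to peer2
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgpadvertisement-2   # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - pool2
  peers:
    - peer2
```

The pairing between pools and peers lives entirely in the two BGPAdvertisement resources. The pools and peers themselves do not reference each other.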

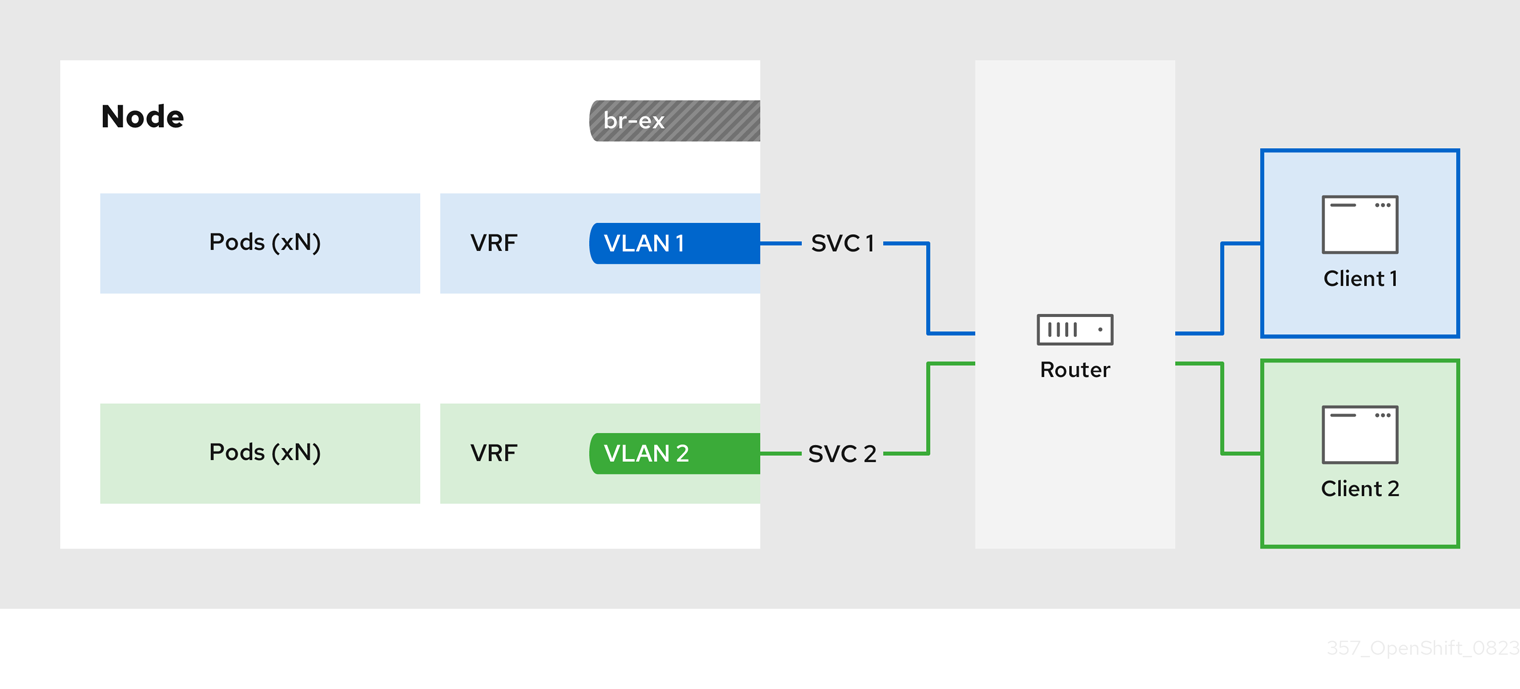

34.6.4. Exposing a service through a network VRF

You can expose a service through a virtual routing and forwarding (VRF) instance by associating a VRF on a network interface with a BGP peer.

Exposing a service through a VRF on a BGP peer is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

By using a VRF on a network interface to expose a service through a BGP peer, you can segregate traffic to the service, configure independent routing decisions, and enable multi-tenancy support on a network interface.

By establishing a BGP session through an interface belonging to a network VRF, MetalLB can advertise services through that interface and enable external traffic to reach the service through this interface. However, the network VRF routing table is different from the default VRF routing table used by OVN-Kubernetes. Therefore, the traffic cannot reach the OVN-Kubernetes network infrastructure.

To enable the traffic directed to the service to reach the OVN-Kubernetes network infrastructure, you must configure routing rules to define the next hops for network traffic. See the NodeNetworkConfigurationPolicy resource in "Managing symmetric routing with MetalLB" in the Additional resources section for more information.

These are the high-level steps to expose a service through a network VRF with a BGP peer:

- Define a BGP peer and add a network VRF instance.

- Specify an IP address pool for MetalLB.

- Configure a BGP route advertisement for MetalLB to advertise a route using the specified IP address pool and the BGP peer associated with the VRF instance.

- Deploy a service to test the configuration.

Prerequisites

- You installed the OpenShift CLI (oc).
- You logged in as a user with cluster-admin privileges.
- You defined a NodeNetworkConfigurationPolicy to associate a Virtual Routing and Forwarding (VRF) instance with a network interface. For more information about completing this prerequisite, see the Additional resources section.
- You installed MetalLB on your cluster.

Procedure

1. Create a BGPPeer custom resource (CR):

   a. Create a file, such as frrviavrf.yaml, that defines the BGP peer. The CR specifies the network VRF instance to associate with the BGP peer. MetalLB can advertise services and make routing decisions based on the routing information in the VRF.

      Note: You must configure this network VRF instance in a NodeNetworkConfigurationPolicy CR. See the Additional resources section for more information.

   b. Apply the configuration for the BGP peer by running the following command:

      $ oc apply -f frrviavrf.yaml

2. Create an IPAddressPool CR:

   a. Create a file, such as first-pool.yaml, that defines the IP address pool.

   b. Apply the configuration for the IP address pool by running the following command:

      $ oc apply -f first-pool.yaml

3. Create a BGPAdvertisement CR:

   a. Create a file, such as first-adv.yaml, that defines the BGP advertisement. In this example, MetalLB advertises a range of IP addresses from the first-pool IP address pool to the frrviavrf BGP peer.

   b. Apply the configuration for the BGP advertisement by running the following command:

      $ oc apply -f first-adv.yaml

4. Create a Namespace, Deployment, and Service CR:

   a. Create a file, such as deploy-service.yaml, that defines the namespace, deployment, and service.

   b. Apply the configuration for the namespace, deployment, and service by running the following command:

      $ oc apply -f deploy-service.yaml
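The resources created in this procedure can be sketched as follows, combined here into a single YAML stream for brevity. The API versions and field names, including the vrf field on the BGP peer, are assumed from the upstream MetalLB and Kubernetes APIs. The names frrviavrf and first-pool come from the procedure, and the AS numbers match the example output in the verification steps. The VRF name, addresses, test namespace, workload names, ports, and container image are hypothetical placeholders.

```yaml
# frrviavrf.yaml: BGP peer reached through a network VRF
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: frrviavrf
  namespace: metallb-system
spec:
  myASN: 100
  peerASN: 200
  peerAddress: 192.168.30.1   # hypothetical; use your router's address
  vrf: ens4vrf                # hypothetical VRF name; must exist through a NodeNetworkConfigurationPolicy
---
# first-pool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
    - 203.0.113.10/32         # hypothetical address
---
# first-adv.yaml: advertise first-pool only to the VRF-bound peer
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: first-adv
  namespace: metallb-system
spec:
  ipAddressPools:
    - first-pool
  peers:
    - frrviavrf
---
# deploy-service.yaml: test workload exposed as a LoadBalancer service
apiVersion: v1
kind: Namespace
metadata:
  name: test                  # hypothetical namespace
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: server
  namespace: test
spec:
  selector:
    matchLabels:
      app: server
  template:
    metadata:
      labels:
        app: server
    spec:
      containers:
        - name: server
          image: "<http_server_image>"   # placeholder; supply an image that listens on port 8080
          ports:
            - name: http
              containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: server1
  namespace: test
spec:
  type: LoadBalancer          # MetalLB assigns the external IP from first-pool
  selector:
    app: server
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
```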

Verification

1. Identify a MetalLB speaker pod by running the following command:

   $ oc get -n metallb-system pods -l component=speaker

   Example output

   NAME            READY   STATUS    RESTARTS   AGE
   speaker-c6c5f   6/6     Running   0          69m

2. Verify that the state of the BGP session is Established in the speaker pod by running the following command, replacing the variables to match your configuration:

   $ oc exec -n metallb-system <speaker_pod> -c frr -- vtysh -c "show bgp vrf <vrf_name> neigh"

   Example output

   BGP neighbor is 192.168.30.1, remote AS 200, local AS 100, external link
   BGP version 4, remote router ID 192.168.30.1, local router ID 192.168.30.71
   BGP state = Established, up for 04:20:09
   ...

3. Verify that the service is advertised correctly by running the following command:

   $ oc exec -n metallb-system <speaker_pod> -c frr -- vtysh -c "show bgp vrf <vrf_name> ipv4"

34.6.5. Example BGP peer configurations

34.6.5.1. Example: Limit which nodes connect to a BGP peer

You can specify the node selectors field to control which nodes can connect to a BGP peer.
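A node selector restriction might look like the following sketch. The nodeSelectors field name and its match-expression layout are assumed from the upstream MetalLB BGPPeer API. The resource name, peer address, AS numbers, and host names are hypothetical.

```yaml
# Hypothetical BGP peer that only the two listed nodes connect to.
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-nodesel   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 10.0.20.1
  peerASN: 64501
  myASN: 64500
  nodeSelectors:
    - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
            - compute-1.example.com   # hypothetical node host names
            - compute-2.example.com
```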

34.6.5.2. Example: Specify a BFD profile for a BGP peer

You can specify a BFD profile to associate with BGP peers. BFD complements BGP by detecting communication failures between peers more rapidly than BGP alone.

Deleting the bidirectional forwarding detection (BFD) profile and removing the bfdProfile added to the border gateway protocol (BGP) peer resource does not disable the BFD. Instead, the BGP peer starts using the default BFD profile. To disable BFD from a BGP peer resource, delete the BGP peer configuration and recreate it without a BFD profile. For more information, see BZ#2050824.
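A BGP peer that references a BFD profile might look like the following sketch. The bfdProfile field is named in this section. The remaining field names are assumed from the upstream MetalLB BGPPeer API. The resource name, profile name, address, and AS numbers are hypothetical, and the sketch assumes that a BFD profile with the referenced name already exists in the same namespace.

```yaml
# Hypothetical BGP peer that uses an existing BFD profile.
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-peer-bfd   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 10.0.20.1
  peerASN: 64501
  myASN: 64500
  holdTime: "10s"
  bfdProfile: doc-example-bfd-profile   # hypothetical; must name an existing BFD profile
```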

34.6.5.3. Example: Specify BGP peers for dual-stack networking

To support dual-stack networking, add one BGP peer custom resource for IPv4 and one BGP peer custom resource for IPv6.
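A dual-stack setup might be sketched as two BGP peer custom resources, one for each address family. The field names are assumed from the upstream MetalLB BGPPeer API. The resource names, both peer addresses, and the AS numbers are hypothetical.

```yaml
# Hypothetical IPv4 peer
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-dual-stack-ipv4   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: 10.0.20.1
  peerASN: 64500
  myASN: 64500
---
# Hypothetical IPv6 peer
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: doc-example-dual-stack-ipv6   # hypothetical name
  namespace: metallb-system
spec:
  peerAddress: "2001:db8::1"          # quoted so the colons are read as part of a string
  peerASN: 64500
  myASN: 64500                        # same local AS number in both resources
```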

34.6.6. Next steps

34.7. Configuring community alias

As a cluster administrator, you can configure a community alias and use it across different advertisements.

34.7.1. About the community custom resource

The community custom resource is a collection of aliases for communities. Users can define named aliases to be used when advertising ipAddressPools using the BGPAdvertisement. The fields for the community custom resource are described in the following table.

The community CRD applies only to BGPAdvertisement.

| Field | Type | Description |
|---|---|---|
| | | Specifies the name for the |
| | | Specifies the namespace for the |

|

|

|