Chapter 10. Admission plugins

Admission plugins are used to help regulate how OpenShift Container Platform functions.

10.1. About admission plugins

Admission plugins intercept requests to the master API to validate resource requests. After a request is authenticated and authorized, the admission plugins ensure that any associated policies are followed. For example, they are commonly used to enforce security policy, resource limitations or configuration requirements.

Admission plugins run in sequence as an admission chain. If any admission plugin in the sequence rejects a request, the whole chain is aborted and an error is returned.

OpenShift Container Platform has a default set of admission plugins enabled for each resource type. These are required for proper functioning of the cluster. Admission plugins ignore resources that they are not responsible for.

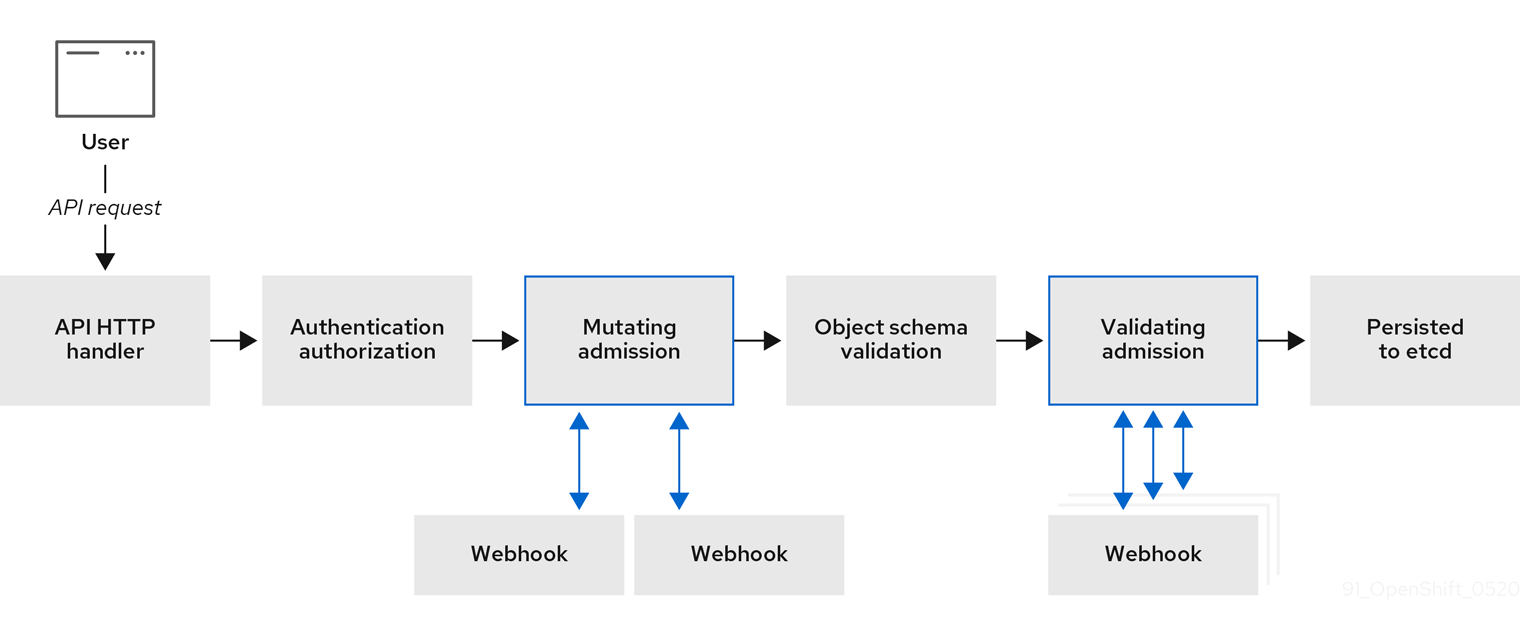

In addition to the defaults, the admission chain can be extended dynamically through webhook admission plugins that call out to custom webhook servers. There are two types of webhook admission plugins: a mutating admission plugin and a validating admission plugin. The mutating admission plugin runs first and can both modify resources and validate requests. The validating admission plugin validates requests and runs after the mutating admission plugin so that modifications triggered by the mutating admission plugin can also be validated.

Calling webhook servers through a mutating admission plugin can produce side effects on resources related to the target object. In such situations, you must take steps to validate that the end result is as expected.

Dynamic admission should be used cautiously because it impacts cluster control plane operations. When calling webhook servers through webhook admission plugins in OpenShift Container Platform 4.14, ensure that you have read the documentation fully and tested for side effects of mutations. Include steps to restore resources back to their original state prior to mutation, in the event that a request does not pass through the entire admission chain.

10.2. Default admission plugins

Default validating and mutating admission plugins are enabled in OpenShift Container Platform 4.14. These default plugins contribute to fundamental control plane functionality, such as ingress policy, cluster resource limit override, and quota policy.

Do not run workloads in or share access to default projects. Default projects are reserved for running core cluster components.

The following default projects are considered highly privileged: default, kube-public, kube-system, openshift, openshift-infra, openshift-node, and other system-created projects that have the openshift.io/run-level label set to 0 or 1. Functionality that relies on admission plugins, such as pod security admission, security context constraints, cluster resource quotas, and image reference resolution, does not work in highly privileged projects.

The following lists contain the default admission plugins:

Example 10.1. Validating admission plugins

- LimitRanger

- ServiceAccount

- PodNodeSelector

- Priority

- PodTolerationRestriction

- OwnerReferencesPermissionEnforcement

- PersistentVolumeClaimResize

- RuntimeClass

- CertificateApproval

- CertificateSigning

- CertificateSubjectRestriction

- autoscaling.openshift.io/ManagementCPUsOverride

- authorization.openshift.io/RestrictSubjectBindings

- scheduling.openshift.io/OriginPodNodeEnvironment

- network.openshift.io/ExternalIPRanger

- network.openshift.io/RestrictedEndpointsAdmission

- image.openshift.io/ImagePolicy

- security.openshift.io/SecurityContextConstraint

- security.openshift.io/SCCExecRestrictions

- route.openshift.io/IngressAdmission

- config.openshift.io/ValidateAPIServer

- config.openshift.io/ValidateAuthentication

- config.openshift.io/ValidateFeatureGate

- config.openshift.io/ValidateConsole

- operator.openshift.io/ValidateDNS

- config.openshift.io/ValidateImage

- config.openshift.io/ValidateOAuth

- config.openshift.io/ValidateProject

- config.openshift.io/DenyDeleteClusterConfiguration

- config.openshift.io/ValidateScheduler

- quota.openshift.io/ValidateClusterResourceQuota

- security.openshift.io/ValidateSecurityContextConstraints

- authorization.openshift.io/ValidateRoleBindingRestriction

- config.openshift.io/ValidateNetwork

- operator.openshift.io/ValidateKubeControllerManager

- ValidatingAdmissionWebhook

- ResourceQuota

- quota.openshift.io/ClusterResourceQuota

Example 10.2. Mutating admission plugins

- NamespaceLifecycle

- LimitRanger

- ServiceAccount

- NodeRestriction

- TaintNodesByCondition

- PodNodeSelector

- Priority

- DefaultTolerationSeconds

- PodTolerationRestriction

- DefaultStorageClass

- StorageObjectInUseProtection

- RuntimeClass

- DefaultIngressClass

- autoscaling.openshift.io/ManagementCPUsOverride

- scheduling.openshift.io/OriginPodNodeEnvironment

- image.openshift.io/ImagePolicy

- security.openshift.io/SecurityContextConstraint

- security.openshift.io/DefaultSecurityContextConstraints

- MutatingAdmissionWebhook

10.3. Webhook admission plugins

In addition to OpenShift Container Platform default admission plugins, dynamic admission can be implemented through webhook admission plugins that call webhook servers, to extend the functionality of the admission chain. Webhook servers are called over HTTPS at defined endpoints.

There are two types of webhook admission plugins in OpenShift Container Platform:

- During the admission process, the mutating admission plugin can perform tasks, such as injecting affinity labels.

- At the end of the admission process, the validating admission plugin can be used to make sure an object is configured properly, for example ensuring affinity labels are as expected. If the validation passes, OpenShift Container Platform schedules the object as configured.

When an API request comes in, mutating or validating admission plugins use the list of external webhooks in the configuration and call them in parallel:

- If all of the webhooks approve the request, the admission chain continues.

- If any of the webhooks deny the request, the admission request is denied and the reason for doing so is based on the first denial.

- If more than one webhook denies the admission request, only the first denial reason is returned to the user.

- If an error is encountered when calling a webhook, the request is either denied or the webhook is ignored, depending on the error policy set. If the error policy is set to Ignore, the request is unconditionally accepted in the event of a failure. If the policy is set to Fail, failed requests are denied. Using Ignore can result in unpredictable behavior for all clients.

Communication between the webhook admission plugin and the webhook server must use TLS. Generate a CA certificate and use the certificate to sign the server certificate that is used by your webhook admission server. The PEM-encoded CA certificate is supplied to the webhook admission plugin using a mechanism, such as service serving certificate secrets.
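The service serving certificate mechanism mentioned above can be sketched as follows. This is a minimal example, not a definitive configuration: the service name server, the selector label, and the secret name server-serving-cert are illustrative assumptions, while my-webhook-namespace and port 8443 match the values used later in this chapter. The annotation asks the cluster's service CA to generate a signed certificate and key into the named secret, which the webhook server pod can then mount.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: server                      # assumed service name
  namespace: my-webhook-namespace
  annotations:
    # Requests a serving certificate and key, stored in the named secret.
    # The secret name is an illustrative assumption.
    service.beta.openshift.io/serving-cert-secret-name: server-serving-cert
spec:
  selector:
    server: "true"                  # assumed pod label
  ports:
  - port: 443                       # port that the API server connects to
    targetPort: 8443                # port that the webhook container listens on
```

A related mechanism is the service.beta.openshift.io/inject-cabundle: "true" annotation, which can be set on a webhook configuration object so that the service CA bundle is injected into its caBundle field.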

The following diagram illustrates the sequential admission chain process within which multiple webhook servers are called.

Figure 10.1. API admission chain with mutating and validating admission plugins

An example webhook admission plugin use case is where all pods must have a common set of labels. In this example, the mutating admission plugin can inject labels and the validating admission plugin can check that labels are as expected. OpenShift Container Platform would subsequently schedule pods that include required labels and reject those that do not.

Some common webhook admission plugin use cases include:

- Namespace reservation.

- Limiting custom network resources managed by the SR-IOV network device plugin.

- Defining tolerations that enable taints to qualify which pods should be scheduled on a node.

- Pod priority class validation.

The maximum default webhook timeout value in OpenShift Container Platform is 13 seconds, and it cannot be changed.

10.4. Types of webhook admission plugins

Cluster administrators can call out to webhook servers through the mutating admission plugin or the validating admission plugin in the API server admission chain.

10.4.1. Mutating admission plugin

The mutating admission plugin is invoked during the mutation phase of the admission process, which allows modification of resource content before it is persisted. One example webhook that can be called through the mutating admission plugin is the Pod Node Selector feature, which uses an annotation on a namespace to find a label selector and add it to the pod specification.

Sample mutating admission plugin configuration
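A configuration of this kind might look like the following minimal sketch. It assumes the admissionregistration.k8s.io/v1 API, which also requires the sideEffects and admissionReviewVersions fields, and it assumes that the webhook is reached through the front-end service of the aggregated API (namespace default, service kubernetes). The numbered comments correspond to the callouts that follow.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: MutatingWebhookConfiguration       # (1)
metadata:
  name: <webhook_name>                   # (2)
webhooks:
- name: <webhook_name>                   # (3)
  clientConfig:                          # (4)
    service:
      namespace: default                 # (5) assumed front-end service namespace
      name: kubernetes                   # (6) assumed front-end service name
      path: <webhook_url>                # (7)
    caBundle: <ca_signing_certificate>   # (8)
  rules:                                 # (9)
  - operations:                          # (10)
    - <operation>
    apiGroups:
    - ""
    apiVersions:
    - "*"
    resources:
    - <resource>
  failurePolicy: <policy>                # (11)
  sideEffects: None                      # required in v1; assumes no side effects
  admissionReviewVersions: ["v1"]        # required in v1; assumed review version
```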

- 1: Specifies a mutating admission plugin configuration.

- 2: The name for the MutatingWebhookConfiguration object. Replace <webhook_name> with the appropriate value.

- 3: The name of the webhook to call. Replace <webhook_name> with the appropriate value.

- 4: Information about how to connect to, trust, and send data to the webhook server.

- 5: The namespace where the front-end service is created.

- 6: The name of the front-end service.

- 7: The webhook URL used for admission requests. Replace <webhook_url> with the appropriate value.

- 8: A PEM-encoded CA certificate that signs the server certificate that is used by the webhook server. Replace <ca_signing_certificate> with the appropriate certificate in base64 format.

- 9: Rules that define when the API server should use this webhook admission plugin.

- 10: One or more operations that trigger the API server to call this webhook admission plugin. Possible values are CREATE, UPDATE, DELETE, or CONNECT. Replace <operation> and <resource> with the appropriate values.

- 11: Specifies how the policy should proceed if the webhook server is unavailable. Replace <policy> with either Ignore (to unconditionally accept the request in the event of a failure) or Fail (to deny the failed request). Using Ignore can result in unpredictable behavior for all clients.

In OpenShift Container Platform 4.14, objects created by users or control loops through a mutating admission plugin might return unexpected results, especially if values set in an initial request are overwritten, which is not recommended.

10.4.2. Validating admission plugin

A validating admission plugin is invoked during the validation phase of the admission process. This phase allows the enforcement of invariants on particular API resources to ensure that the resource does not change again. The Pod Node Selector is also an example of a webhook which is called by the validating admission plugin, to ensure that all nodeSelector fields are constrained by the node selector restrictions on the namespace.

Sample validating admission plugin configuration
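As a minimal sketch, a validating configuration has the same shape as a mutating one and differs only in its kind. The example assumes the admissionregistration.k8s.io/v1 API and the front-end service of the aggregated API (namespace default, service kubernetes); neither assumption comes from this section. The numbered comments correspond to the callouts that follow.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration     # (1)
metadata:
  name: <webhook_name>                   # (2)
webhooks:
- name: <webhook_name>                   # (3)
  clientConfig:                          # (4)
    service:
      namespace: default                 # (5) assumed front-end service namespace
      name: kubernetes                   # (6) assumed front-end service name
      path: <webhook_url>                # (7)
    caBundle: <ca_signing_certificate>   # (8)
  rules:                                 # (9)
  - operations:                          # (10)
    - <operation>
    apiGroups:
    - ""
    apiVersions:
    - "*"
    resources:
    - <resource>
  failurePolicy: <policy>                # (11)
  sideEffects: None                      # required in v1; validation should not mutate state
  admissionReviewVersions: ["v1"]        # required in v1; assumed review version
```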

- 1: Specifies a validating admission plugin configuration.

- 2: The name for the ValidatingWebhookConfiguration object. Replace <webhook_name> with the appropriate value.

- 3: The name of the webhook to call. Replace <webhook_name> with the appropriate value.

- 4: Information about how to connect to, trust, and send data to the webhook server.

- 5: The namespace where the front-end service is created.

- 6: The name of the front-end service.

- 7: The webhook URL used for admission requests. Replace <webhook_url> with the appropriate value.

- 8: A PEM-encoded CA certificate that signs the server certificate that is used by the webhook server. Replace <ca_signing_certificate> with the appropriate certificate in base64 format.

- 9: Rules that define when the API server should use this webhook admission plugin.

- 10: One or more operations that trigger the API server to call this webhook admission plugin. Possible values are CREATE, UPDATE, DELETE, or CONNECT. Replace <operation> and <resource> with the appropriate values.

- 11: Specifies how the policy should proceed if the webhook server is unavailable. Replace <policy> with either Ignore (to unconditionally accept the request in the event of a failure) or Fail (to deny the failed request). Using Ignore can result in unpredictable behavior for all clients.

10.5. Configuring dynamic admission

This procedure outlines high-level steps to configure dynamic admission. The functionality of the admission chain is extended by configuring a webhook admission plugin to call out to a webhook server.

The webhook server is also configured as an aggregated API server. This allows other OpenShift Container Platform components to communicate with the webhook using internal credentials and facilitates testing using the oc command. Additionally, this enables role-based access control (RBAC) for the webhook and prevents token information from other API servers from being disclosed to the webhook.
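Registering a webhook server as an aggregated API server is done with an APIService object. The following is a minimal sketch under stated assumptions: the API group admission.online.openshift.io, the version v1beta1, the service name server, and the two priority values are illustrative and are not defined in this section. The object name must follow the <version>.<group> pattern.

```yaml
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.admission.online.openshift.io   # <version>.<group>, assumed values
spec:
  caBundle: <ca_signing_certificate>            # base64-encoded CA that signed the server certificate
  group: admission.online.openshift.io          # assumed API group served by the webhook
  version: v1beta1                              # assumed API version
  groupPriorityMinimum: 1000                    # assumed priority values
  versionPriority: 15
  service:
    name: server                                # assumed webhook service name
    namespace: my-webhook-namespace
```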

Prerequisites

- An OpenShift Container Platform account with cluster administrator access.

- The OpenShift Container Platform CLI (oc) installed.

- A published webhook server container image.

Procedure

- Build a webhook server container image and make it available to the cluster using an image registry.

- Create a local CA key and certificate and use them to sign the webhook server's certificate signing request (CSR).

- Create a new project for webhook resources:

  $ oc new-project my-webhook-namespace

  Note that the webhook server might expect a specific name.

- Define RBAC rules for the aggregated API service in a file called rbac.yaml:

  - 1: Delegates authentication and authorization to the webhook server API.

  - 2: Allows the webhook server to access cluster resources.

  - 3: Points to resources. This example points to the namespacereservations resource.

  - 4: Enables the aggregated API server to create admission reviews.

  - 5: Points to resources. This example points to the namespacereservations resource.

  - 6: Enables the webhook server to access cluster resources.

  - 7: Role binding to read the configuration for terminating authentication.

  - 8: Default cluster role and cluster role bindings for an aggregated API server.

- Apply those RBAC rules to the cluster:

  $ oc auth reconcile -f rbac.yaml

- Create a YAML file called webhook-daemonset.yaml that is used to deploy a webhook as a daemon set server in a namespace:

  - 1: Note that the webhook server might expect a specific container name.

  - 2: Points to a webhook server container image. Replace <image_registry_username>/<image_path>:<tag> with the appropriate value.

  - 3: Specifies webhook container run commands. Replace <container_commands> with the appropriate value.

  - 4: Defines the target port within pods. This example uses port 8443.

  - 5: Specifies the port used by the readiness probe. This example uses port 8443.

- Deploy the daemon set:

  $ oc apply -f webhook-daemonset.yaml

- Define a secret for the service serving certificate signer, within a YAML file called webhook-secret.yaml.

- Create the secret:

  $ oc apply -f webhook-secret.yaml

- Define a service account and service, within a YAML file called webhook-service.yaml.

- Expose the webhook server within the cluster:

  $ oc apply -f webhook-service.yaml

- Define a custom resource definition for the webhook server, in a file called webhook-crd.yaml:

  - 1: Reflects CustomResourceDefinition spec values and is in the format <plural>.<group>. This example uses the namespacereservations resource.

  - 2: REST API group name.

  - 3: REST API version name.

  - 4: Accepted values are Namespaced or Cluster.

  - 5: Plural name to be included in URL.

  - 6: Alias seen in oc output.

  - 7: The reference for resource manifests.

- Apply the custom resource definition:

  $ oc apply -f webhook-crd.yaml

- Configure the webhook server also as an aggregated API server, within a file called webhook-api-service.yaml:

  - 1: A PEM-encoded CA certificate that signs the server certificate that is used by the webhook server. Replace <ca_signing_certificate> with the appropriate certificate in base64 format.

- Deploy the aggregated API service:

  $ oc apply -f webhook-api-service.yaml

- Define the webhook admission plugin configuration within a file called webhook-config.yaml. This example uses the validating admission plugin:

  - 1: Name for the ValidatingWebhookConfiguration object. This example uses the namespacereservations resource.

  - 2: Name of the webhook to call. This example uses the namespacereservations resource.

  - 3: Enables access to the webhook server through the aggregated API.

  - 4: The webhook URL used for admission requests. This example uses the namespacereservation resource.

  - 5: A PEM-encoded CA certificate that signs the server certificate that is used by the webhook server. Replace <ca_signing_certificate> with the appropriate certificate in base64 format.

- Deploy the webhook:

  $ oc apply -f webhook-config.yaml

- Verify that the webhook is functioning as expected. For example, if you have configured dynamic admission to reserve specific namespaces, confirm that requests to create those namespaces are rejected and that requests to create non-reserved namespaces succeed.
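For orientation, the webhook-config.yaml file from the procedure might resemble the following sketch for a namespace reservation webhook. Several values are assumptions rather than content from this chapter: the API group admission.online.openshift.io, the v1beta1 path segment, the two rules, and the v1 admission review version. The numbered comments follow the callouts listed for that file.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: namespacereservations.admission.online.openshift.io    # (1) assumed group suffix
webhooks:
- name: namespacereservations.admission.online.openshift.io    # (2)
  clientConfig:
    service:                                                   # (3) front-end service of the aggregated API
      namespace: default
      name: kubernetes
      # (4) assumed URL path served by the aggregated API server
      path: /apis/admission.online.openshift.io/v1beta1/namespacereservations
    caBundle: <ca_signing_certificate>                         # (5)
  rules:
  # Assumed rules: review project requests and direct namespace creation.
  - operations: ["CREATE"]
    apiGroups: ["project.openshift.io"]
    apiVersions: ["*"]
    resources: ["projectrequests"]
  - operations: ["CREATE"]
    apiGroups: [""]
    apiVersions: ["*"]
    resources: ["namespaces"]
  failurePolicy: Fail                                          # deny requests if the webhook cannot be reached
  sideEffects: None
  admissionReviewVersions: ["v1"]                              # assumed; must match what the server supports
```

With failurePolicy set to Fail, an unavailable webhook server blocks creation of every matching resource, so the verification step is a good moment to also confirm that the webhook pods are ready before relying on the configuration.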