
Chapter 17. Network-Bound Disk Encryption (NBDE)

17.1. About disk encryption technology

Network-Bound Disk Encryption (NBDE) allows you to encrypt root volumes of hard drives on physical and virtual machines without having to manually enter a password when restarting machines.

17.1.1. Disk encryption technology comparison

To understand the merits of Network-Bound Disk Encryption (NBDE) for securing data at rest on edge servers, compare key escrow and TPM disk encryption without Clevis to NBDE on systems running Red Hat Enterprise Linux (RHEL).

The following table presents some tradeoffs to consider around the threat model and the complexity of each encryption solution.

| Scenario | Key escrow | TPM disk encryption (without Clevis) | NBDE |
|---|---|---|---|
| Protects against single-disk theft | X | X | X |
| Protects against entire-server theft | X | | X |
| Systems can reboot independently from the network | | X | |
| No periodic rekeying | | X | |
| Key is never transmitted over a network | | X | X |
| Supported by OpenShift | | X | X |

17.1.1.1. Key escrow

Key escrow is the traditional system for storing cryptographic keys. The key server on the network stores the encryption key for a node with an encrypted boot disk and returns it when queried. The complexities around key management, transport encryption, and authentication do not make this a reasonable choice for boot disk encryption.

Although available in Red Hat Enterprise Linux (RHEL), key escrow-based disk encryption setup and management is a manual process that is not suited to OpenShift Container Platform automation operations, including automated addition of nodes, and is currently not supported by OpenShift Container Platform.

17.1.1.2. TPM encryption

Trusted Platform Module (TPM) disk encryption is best suited for data centers or installations in remote protected locations. Full disk encryption utilities such as dm-crypt and BitLocker encrypt disks with a TPM bind key, and then store the TPM bind key in the TPM, which is attached to the motherboard of the node. The main benefit of this method is that there is no external dependency, and the node is able to decrypt its own disks at boot time without any external interaction.

TPM disk encryption protects against decryption of data if the disk is stolen from the node and analyzed externally. However, for insecure locations this may not be sufficient. For example, if an attacker steals the entire node, the attacker can intercept the data when powering on the node, because the node decrypts its own disks. This applies to nodes with physical TPM2 chips as well as virtual machines with Virtual Trusted Platform Module (VTPM) access.

17.1.1.3. Network-Bound Disk Encryption (NBDE)

Network-Bound Disk Encryption (NBDE) effectively ties the encryption key to an external server or set of servers in a secure and anonymous way across the network. This is not a key escrow, in that the nodes do not store the encryption key or transfer it over the network, but otherwise behaves in a similar fashion.

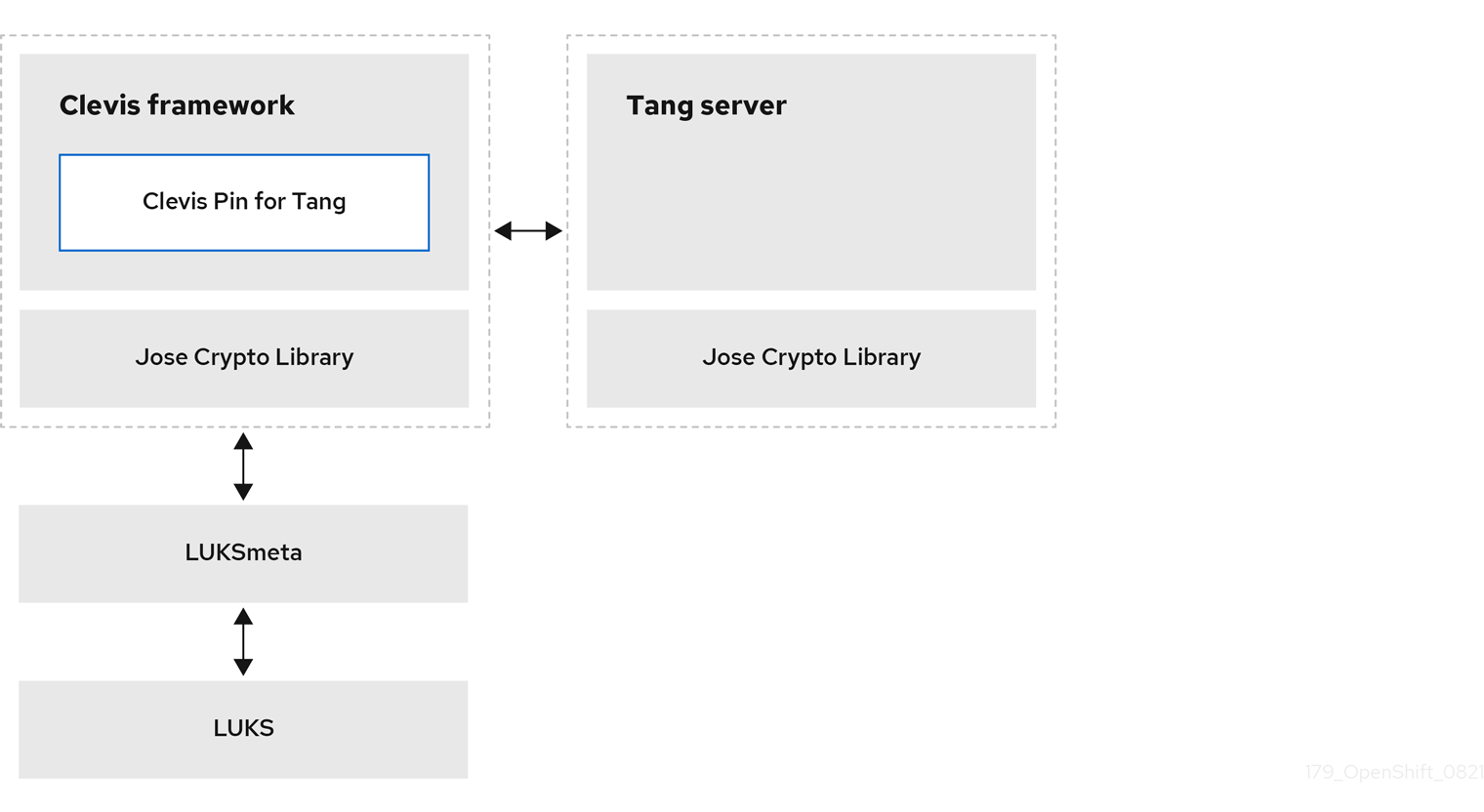

Clevis and Tang are generic client and server components that provide network-bound encryption. Red Hat Enterprise Linux CoreOS (RHCOS) uses these components in conjunction with Linux Unified Key Setup-on-disk-format (LUKS) to encrypt and decrypt root and non-root storage volumes to accomplish Network-Bound Disk Encryption.

When a node starts, it attempts to contact a predefined set of Tang servers by performing a cryptographic handshake. If it can reach the required number of Tang servers, the node can construct its disk decryption key and unlock the disks to continue booting. If the node cannot access a Tang server due to a network outage or server unavailability, the node cannot boot and continues retrying indefinitely until the Tang servers become available again. Because the key is effectively tied to the node’s presence in a network, an attacker attempting to gain access to the data at rest would need to obtain both the disks on the node, and network access to the Tang server as well.

The following figure illustrates the deployment model for NBDE.

The following figure illustrates NBDE behavior during a reboot.

17.1.1.4. Secret sharing encryption

Shamir’s secret sharing (sss) is a cryptographic algorithm to securely divide up, distribute, and re-assemble keys. Using this algorithm, OpenShift Container Platform can support more complicated mixtures of key protection.

When you configure a cluster node to use multiple Tang servers, OpenShift Container Platform uses sss to set up a decryption policy that will succeed if at least one of the specified servers is available. You can create layers for additional security. For example, you can define a policy where OpenShift Container Platform requires both the TPM and one of the given list of Tang servers to decrypt the disk.

17.1.2. Tang server disk encryption

The following components and technologies implement Network-Bound Disk Encryption (NBDE).

Figure 17.1. NBDE scheme when using a LUKS1-encrypted volume. The luksmeta package is not used for LUKS2 volumes.

Tang is a server for binding data to network presence. It makes a node containing the data available when the node is bound to a certain secure network. Tang is stateless and does not require Transport Layer Security (TLS) or authentication. Unlike escrow-based solutions, where the key server stores all encryption keys and has knowledge of every encryption key, Tang never interacts with any node keys, so it never gains any identifying information from the node.

Clevis is a pluggable framework for automated decryption that provides automated unlocking of Linux Unified Key Setup-on-disk-format (LUKS) volumes. The Clevis package runs on the node and provides the client side of the feature.

A Clevis pin is a plugin into the Clevis framework. There are three pin types:

- TPM2: Binds the disk encryption to the TPM2.

- Tang: Binds the disk encryption to a Tang server to enable NBDE.

- Shamir’s secret sharing (sss): Allows more complex combinations of other pins. It allows more nuanced policies such as the following:

  - Must be able to reach one of these three Tang servers

  - Must be able to reach three of these five Tang servers

  - Must be able to reach the TPM2 AND at least one of these three Tang servers
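The policies above can be expressed as Clevis sss pin configurations, which are JSON documents with a threshold `t` and a set of nested `pins`. The following is a minimal sketch that only builds the JSON strings; the function names and the Tang host names are hypothetical, and the `thp` thumbprint that a production configuration would normally carry for each server is omitted for brevity.

```shell
#!/bin/sh
# Build the "tang" array of an sss pin configuration from a list of URLs.
tang_array() {
    out=""
    for url in "$@"; do
        # Append a comma only when an entry is already present.
        out="${out:+$out,}{\"url\":\"$url\"}"
    done
    printf '[%s]' "$out"
}

# Print an sss configuration that requires $1 of the remaining Tang URLs.
sss_tang_policy() {
    threshold=$1
    shift
    printf '{"t":%s,"pins":{"tang":%s}}' "$threshold" "$(tang_array "$@")"
}

# Print a configuration that requires the TPM2 AND $1 of the Tang URLs.
# The outer threshold of 2 means both the tpm2 pin and the nested sss pin must succeed.
tpm2_and_tang_policy() {
    threshold=$1
    shift
    printf '{"t":2,"pins":{"tpm2":{},"sss":%s}}' "$(sss_tang_policy "$threshold" "$@")"
}

# One of three Tang servers (hypothetical host names):
sss_tang_policy 1 http://tang1:7500 http://tang2:7500 http://tang3:7500; echo
# The TPM2 AND at least one of three Tang servers:
tpm2_and_tang_policy 1 http://tang1:7500 http://tang2:7500 http://tang3:7500; echo
```

Nesting an sss pin inside another sss pin is what allows an AND of a TPM2 requirement with an any-of-N Tang requirement.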

17.1.3. Tang server location planning

When planning your Tang server environment, consider the physical and network locations of the Tang servers.

- Physical location

The geographic location of the Tang servers is relatively unimportant, as long as they are suitably secured from unauthorized access or theft and offer the required availability and accessibility to run a critical service.

Nodes with Clevis clients do not require local Tang servers as long as the Tang servers are available at all times. Disaster recovery requires both redundant power and redundant network connectivity to Tang servers regardless of their location.

- Network location

Any node with network access to the Tang servers can decrypt its own disk partitions, or any other disks encrypted by the same Tang servers.

Select network locations for the Tang servers so that the presence or absence of network connectivity from a given host determines whether that host is permitted to decrypt. For example, firewall protections might be in place to prohibit access from any type of guest or public network, or any network jack located in an unsecured area of the building.

Additionally, maintain network segregation between production and development networks. This assists in defining appropriate network locations and adds an additional layer of security.

Do not deploy Tang servers on the same resource, for example, the same rolebindings.rbac.authorization.k8s.io cluster, that they are responsible for unlocking. However, a cluster of Tang servers and other security resources can be a useful configuration to enable support of multiple additional clusters and cluster resources.

17.1.4. Tang server sizing requirements

The requirements around availability, network, and physical location drive the decision of how many Tang servers to use, rather than any concern over server capacity.

Tang servers do not maintain the state of data encrypted using Tang resources. Tang servers are either fully independent or share only their key material, which enables them to scale well.

There are two ways Tang servers handle key material:

Multiple Tang servers share key material:

- You must load balance Tang servers sharing keys behind the same URL. The configuration can be as simple as round-robin DNS, or you can use physical load balancers.

- You can scale from a single Tang server to multiple Tang servers. Scaling Tang servers does not require rekeying or client reconfiguration on the node when the Tang servers share key material and the same URL.

- Client node setup and key rotation only requires one Tang server.

Multiple Tang servers generate their own key material:

- You can configure multiple Tang servers at installation time.

- You can scale an individual Tang server behind a load balancer.

- All Tang servers must be available during client node setup or key rotation.

- When a client node boots using the default configuration, the Clevis client contacts all Tang servers. Only n Tang servers must be online to proceed with decryption. The default value for n is 1.

- Red Hat does not support postinstallation configuration that changes the behavior of the Tang servers.

17.1.5. Logging considerations

Centralized logging of Tang traffic is advantageous because it might allow you to detect such things as unexpected decryption requests. For example:

- A node requesting decryption of a passphrase that does not correspond to its boot sequence

- A node requesting decryption outside of a known maintenance activity, such as cycling keys

17.2. Tang server installation considerations

Network-Bound Disk Encryption (NBDE) must be enabled when a cluster node is installed. However, you can change the disk encryption policy at any time after it was initialized at installation.

17.2.1. Installation scenarios

Consider the following recommendations when planning Tang server installations:

Small environments can use a single set of key material, even when using multiple Tang servers:

- Key rotations are easier.

- Tang servers can scale easily to permit high availability.

Large environments can benefit from multiple sets of key material:

- Physically diverse installations do not require the copying and synchronizing of key material between geographic regions.

- Key rotations are more complex in large environments.

- Node installation and rekeying require network connectivity to all Tang servers.

- A small increase in network traffic can occur due to a booting node querying all Tang servers during decryption. Note that while only one Clevis client query must succeed, Clevis queries all Tang servers.

Further complexity:

- Additional manual reconfiguration can permit the Shamir’s secret sharing (sss) of any N of M servers online in order to decrypt the disk partition. Decrypting disks in this scenario requires multiple sets of key material, and manual management of Tang servers and nodes with Clevis clients after the initial installation.

High level recommendations:

- For a single RAN deployment, a limited set of Tang servers can run in the corresponding domain controller (DC).

- For multiple RAN deployments, you must decide whether to run Tang servers in each corresponding DC or whether a global Tang environment better suits the other needs and requirements of the system.

17.2.2. Installing a Tang server

To deploy one or more Tang servers, you can choose from the following options depending on your scenario:

17.2.2.1. Compute requirements

The computational requirements for the Tang server are very low. Any typical server grade configuration that you would use to deploy a server into production can provision sufficient compute capacity.

High availability considerations are solely for availability and not additional compute power to satisfy client demands.

17.2.2.2. Automatic start at boot

Due to the sensitive nature of the key material the Tang server uses, you should keep in mind that the overhead of manual intervention during the Tang server’s boot sequence can be beneficial.

By default, if a Tang server starts and does not have key material present in the expected local volume, it will create fresh material and serve it. You can avoid this default behavior by either starting with pre-existing key material or aborting the startup and waiting for manual intervention.

17.2.2.3. HTTP versus HTTPS

Traffic to the Tang server can be encrypted (HTTPS) or plain text (HTTP). There are no significant security advantages of encrypting this traffic, and leaving it unencrypted removes any complexity or failure conditions related to Transport Layer Security (TLS) certificate checking in the node running a Clevis client.

While it is possible to perform passive monitoring of unencrypted traffic between the node’s Clevis client and the Tang server, the ability to use this traffic to determine the key material is at best a future theoretical concern. Any such traffic analysis would require large quantities of captured data. Key rotation would immediately invalidate it. Finally, any threat actor able to perform passive monitoring has already obtained the necessary network access to perform manual connections to the Tang server and can perform the simpler manual decryption of captured Clevis headers.

However, because other network policies in place at the installation site might require traffic encryption regardless of application, consider leaving this decision to the cluster administrator.

17.3. Tang server encryption key management

The cryptographic mechanism to recreate the encryption key is based on the blinded key stored on the node and the private key of the involved Tang servers.

To protect against the possibility of an attacker who has obtained both the Tang server private key and the node’s encrypted disk, periodic rekeying is advisable.

You must perform the rekeying operation for every node before you can delete the old key from the Tang server.

The following sections provide procedures for rekeying and deleting old keys.

17.3.1. Backing up keys for a Tang server

The Tang server uses /usr/libexec/tangd-keygen to generate new keys and stores them in the /var/db/tang directory by default. To recover the Tang server in the event of a failure, back up this directory. The keys are sensitive: because they can perform the boot disk decryption of all hosts that have used them, you must protect them accordingly.

Procedure

- Copy the backup key from the /var/db/tang directory to the temp directory from which you can restore the key.
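As a minimal sketch of the copy step, the following function copies all key files, including hidden (unadvertised) ones, into a backup directory with restrictive permissions. The function name and the destination path in the usage line are hypothetical, and the sketch assumes a `cp` that supports the `-a` option, as on RHEL.

```shell
#!/bin/sh
# Copy every key file, including hidden (unadvertised) ones, from $1 to $2.
# The body runs in a subshell, so the umask change does not leak to the caller.
backup_tang_keys() (
    umask 077                 # a newly created backup directory is owner-only
    mkdir -p "$2" || exit 1
    cp -a "$1"/. "$2"/        # "/." copies the directory contents, dotfiles included
)

# Usage (destination path is an assumption):
#   backup_tang_keys /var/db/tang /root/tang-backup
```

Copying the directory contents rather than `*.jwk` matters because a shell glob skips the dot-prefixed key files that Tang still uses for existing clients.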

17.3.2. Recovering keys for a Tang server

You can recover the keys for a Tang server by accessing the keys from a backup.

Procedure

- Restore the key from your backup folder to the /var/db/tang/ directory.

When the Tang server starts up, it advertises and uses these restored keys.

17.3.3. Rekeying Tang servers

This procedure uses a set of three Tang servers, each with unique keys, as an example.

Using redundant Tang servers reduces the chances of nodes failing to boot automatically.

Rekeying a Tang server, and all associated NBDE-encrypted nodes, is a three-step procedure.

Prerequisites

- A working Network-Bound Disk Encryption (NBDE) installation on one or more nodes.

Procedure

- Generate a new Tang server key.

- Rekey all NBDE-encrypted nodes so they use the new key.

- Delete the old Tang server key.

Note: Deleting the old key before all NBDE-encrypted nodes have completed their rekeying causes those nodes to become overly dependent on any other configured Tang servers.

Figure 17.2. Example workflow for rekeying a Tang server

17.3.3.1. Generating a new Tang server key

Prerequisites

- A root shell on the Linux machine running the Tang server.

To facilitate verification of the Tang server key rotation, encrypt a small test file with the old key:

    # echo plaintext | clevis encrypt tang '{"url":"http://localhost:7500"}' -y >/tmp/encrypted.oldkey

Verify that the encryption succeeded and the file can be decrypted to produce the same string plaintext:

    # clevis decrypt </tmp/encrypted.oldkey

Procedure

Locate and access the directory that stores the Tang server key. This is usually the /var/db/tang directory. Check the currently advertised key thumbprint:

    # tang-show-keys 7500

Example output

    36AHjNH3NZDSnlONLz1-V4ie6t8

Enter the Tang server key directory:

    # cd /var/db/tang/

List the current Tang server keys:

    # ls -A1

Example output

    36AHjNH3NZDSnlONLz1-V4ie6t8.jwk
    gJZiNPMLRBnyo_ZKfK4_5SrnHYo.jwk

During normal Tang server operations, there are two .jwk files in this directory: one for signing and verification, and another for key derivation.

Disable advertisement of the old keys:

    # for key in *.jwk; do \
        mv -- "$key" ".$key"; \
      done

New clients setting up Network-Bound Disk Encryption (NBDE) or requesting keys will no longer see the old keys. Existing clients can still access and use the old keys until they are deleted. The Tang server reads but does not advertise keys stored in UNIX hidden files, which start with the . character.

Generate a new key:

    # /usr/libexec/tangd-keygen /var/db/tang

List the current Tang server keys to verify the old keys are no longer advertised, as they are now hidden files, and new keys are present:

    # ls -A1

Example output

    .36AHjNH3NZDSnlONLz1-V4ie6t8.jwk
    .gJZiNPMLRBnyo_ZKfK4_5SrnHYo.jwk
    Bp8XjITceWSN_7XFfW7WfJDTomE.jwk
    WOjQYkyK7DxY_T5pMncMO5w0f6E.jwk

Tang automatically advertises the new keys.

Note: More recent Tang server installations include a helper, /usr/libexec/tangd-rotate-keys, that takes care of disabling advertisement and generating the new keys simultaneously.

If you are running multiple Tang servers behind a load balancer that share the same key material, ensure the changes made here are properly synchronized across the entire set of servers before proceeding.

Verification

Verify that the Tang server is advertising the new key, and not advertising the old key:

    # tang-show-keys 7500

Example output

    WOjQYkyK7DxY_T5pMncMO5w0f6E

Verify that the old key, while not advertised, is still available to decryption requests:

    # clevis decrypt </tmp/encrypted.oldkey
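The advertisement check can also be scripted. The following is a minimal sketch, assuming that tang-show-keys prints one thumbprint per line as in the example output; the function name `is_advertised` is illustrative and is not part of Tang.

```shell
#!/bin/sh
# Succeeds if the thumbprint given as $1 appears as a whole line on stdin.
# -F: treat the thumbprint as a fixed string, -x: match the entire line, -q: print nothing.
is_advertised() {
    grep -Fxq -- "$1"
}

# Usage with the thumbprints from this procedure (requires a running Tang server):
#   tang-show-keys 7500 | is_advertised WOjQYkyK7DxY_T5pMncMO5w0f6E && echo "new key is advertised"
#   tang-show-keys 7500 | is_advertised 36AHjNH3NZDSnlONLz1-V4ie6t8 || echo "old key is not advertised"
```

The `--` separator keeps a thumbprint that begins with a hyphen from being parsed as a grep option.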

17.3.3.2. Rekeying all NBDE nodes

You can rekey all of the nodes on a remote cluster by using a DaemonSet object without incurring any downtime to the remote cluster.

If a node loses power during the rekeying, it is possible that it might become unbootable, and must be redeployed via Red Hat Advanced Cluster Management (RHACM) or a GitOps pipeline.

Prerequisites

- cluster-admin access to all clusters with Network-Bound Disk Encryption (NBDE) nodes.

- All Tang servers must be accessible to every NBDE node undergoing rekeying, even if the keys of a Tang server have not changed.

- Obtain the Tang server URL and key thumbprint for every Tang server.

Procedure

Create a DaemonSet object based on the following template. This template sets up three redundant Tang servers, but can be easily adapted to other situations. Change the Tang server URLs and thumbprints in the NEW_TANG_PIN environment variable to suit your environment:

In this case, even though you are rekeying tangserver01, you must specify not only the new thumbprint for tangserver01, but also the current thumbprints for all other Tang servers. Failure to specify all thumbprints for a rekeying operation opens up the opportunity for a man-in-the-middle attack.

To distribute the daemon set to every cluster that must be rekeyed, run the following command:

    $ oc apply -f tang-rekey.yaml

However, to run at scale, wrap the daemon set in an ACM policy. This ACM configuration must contain one policy to deploy the daemon set, a second policy to check that all the daemon set pods are READY, and a placement rule to apply it to the appropriate set of clusters.

After validating that the daemon set has successfully rekeyed all servers, delete the daemon set. If you do not delete the daemon set, it must be deleted before the next rekeying operation.

Verification

After you distribute the daemon set, monitor the daemon sets to ensure that the rekeying has completed successfully. The script in the example daemon set terminates with an error if the rekeying failed, and remains in the CURRENT state if successful. There is also a readiness probe that marks the pod as READY when the rekeying has completed successfully.

This is an example of the output listing for the daemon set before the rekeying has completed:

    $ oc get -n openshift-machine-config-operator ds tang-rekey

Example output

    NAME         DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
    tang-rekey   1         1         0       1            0           kubernetes.io/os=linux   11s

This is an example of the output listing for the daemon set after the rekeying has completed successfully:

    $ oc get -n openshift-machine-config-operator ds tang-rekey

Example output

    NAME         DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
    tang-rekey   1         1         1       1            1           kubernetes.io/os=linux   13h

Rekeying usually takes a few minutes to complete.

If you use ACM policies to distribute the daemon sets to multiple clusters, you must include a compliance policy that checks every daemon set’s READY count is equal to the DESIRED count. In this way, compliance to such a policy demonstrates that all daemon set pods are READY and the rekeying has completed successfully. You could also use an ACM search to query all of the daemon sets' states.
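For a single cluster, the READY-equals-DESIRED check can be sketched as a small filter over the daemon set listing. This sketch assumes the column layout shown in the example output (DESIRED in the second column, READY in the fourth); the function name `rekey_complete` is illustrative.

```shell
#!/bin/sh
# Reads `oc get ds` output on stdin and succeeds only if the first data row
# reports READY (column 4) equal to DESIRED (column 2).
# Empty input or a header-only listing counts as "not complete".
rekey_complete() {
    awk 'NR == 2 { found = 1; ok = ($2 == $4) }
         END     { exit (found && ok) ? 0 : 1 }'
}

# Usage:
#   oc get -n openshift-machine-config-operator ds tang-rekey | rekey_complete \
#       && echo "rekeying has completed on all nodes"
```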

17.3.3.3. Troubleshooting temporary rekeying errors for Tang servers

To determine if the error condition from rekeying the Tang servers is temporary, perform the following procedure. Temporary error conditions might include:

- Temporary network outages

- Tang server maintenance

Generally, when these types of temporary error conditions occur, you can wait until the daemon set succeeds in resolving the error or you can delete the daemon set and not try again until the temporary error condition has been resolved.

Procedure

- Restart the pod that performs the rekeying operation using the normal Kubernetes pod restart policy.

- If any of the associated Tang servers are unavailable, try rekeying until all the servers are back online.

17.3.3.4. Troubleshooting permanent rekeying errors for Tang servers

If, after rekeying the Tang servers, the READY count does not equal the DESIRED count after an extended period of time, it might indicate a permanent failure condition. In this case, the following conditions might apply:

- A typographical error in the Tang server URL or thumbprint in the NEW_TANG_PIN definition.

- The Tang server is decommissioned or the keys are permanently lost.

Prerequisites

- The commands shown in this procedure can be run on the Tang server or on any Linux system that has network access to the Tang server.

Procedure

Validate the Tang server configuration by performing a simple encrypt and decrypt operation on each Tang server’s configuration as defined in the daemon set.

This is an example of an encryption and decryption attempt with a bad thumbprint:

    $ echo "okay" | clevis encrypt tang \
        '{"url":"http://tangserver02:7500","thp":"badthumbprint"}' | \
        clevis decrypt

Example output

    Unable to fetch advertisement: 'http://tangserver02:7500/adv/badthumbprint'!

This is an example of an encryption and decryption attempt with a good thumbprint:

    $ echo "okay" | clevis encrypt tang \
        '{"url":"http://tangserver03:7500","thp":"goodthumbprint"}' | \
        clevis decrypt

Example output

    okay

After you identify the root cause, remedy the underlying situation:

- Delete the non-working daemon set.

- Edit the daemon set definition to fix the underlying issue. This might include any of the following actions:

  - Edit a Tang server entry to correct the URL and thumbprint.

  - Remove a Tang server that is no longer in service.

  - Add a new Tang server that is a replacement for a decommissioned server.

- Distribute the updated daemon set again.

When replacing, removing, or adding a Tang server from a configuration, the rekeying operation will succeed as long as at least one original server is still functional, including the server currently being rekeyed. If none of the original Tang servers are functional or can be recovered, recovery of the system is impossible and you must redeploy the affected nodes.

Verification

Check the logs from each pod in the daemon set to determine whether the rekeying completed successfully. If the rekeying is not successful, the logs might indicate the failure condition.

Locate the name of the container that was created by the daemon set:

    $ oc get pods -A | grep tang-rekey

Example output

    openshift-machine-config-operator  tang-rekey-7ks6h  1/1  Running  20 (8m39s ago)  89m

Print the logs from the container. The following log is from a completed successful rekeying operation:

    $ oc logs -n openshift-machine-config-operator tang-rekey-7ks6h

17.3.4. Deleting old Tang server keys

Prerequisites

- A root shell on the Linux machine running the Tang server.

Procedure

Locate and access the directory where the Tang server key is stored. This is usually the /var/db/tang directory:

    # cd /var/db/tang/

List the current Tang server keys, showing the advertised and unadvertised keys:

    # ls -A1

Example output

    .36AHjNH3NZDSnlONLz1-V4ie6t8.jwk
    .gJZiNPMLRBnyo_ZKfK4_5SrnHYo.jwk
    Bp8XjITceWSN_7XFfW7WfJDTomE.jwk
    WOjQYkyK7DxY_T5pMncMO5w0f6E.jwk

Delete the old keys:

    # rm .*.jwk

List the current Tang server keys to verify the unadvertised keys are no longer present:

    # ls -A1

Example output

    Bp8XjITceWSN_7XFfW7WfJDTomE.jwk
    WOjQYkyK7DxY_T5pMncMO5w0f6E.jwk

Verification

At this point, the server still advertises the new keys, but an attempt to decrypt based on the old key will fail.

Query the Tang server for the current advertised key thumbprints:

    # tang-show-keys 7500

Example output

    WOjQYkyK7DxY_T5pMncMO5w0f6E

Decrypt the test file created earlier to verify decryption against the old keys fails:

    # clevis decrypt </tmp/encrypted.oldkey

Example output

    Error communicating with the server!

If you are running multiple Tang servers behind a load balancer that share the same key material, ensure the changes made are properly synchronized across the entire set of servers before proceeding.

17.4. Disaster recovery considerations

This section describes several potential disaster situations and the procedures to respond to each of them. Additional situations will be added here as they are discovered or presumed likely to be possible.

17.4.1. Loss of a client machine

The loss of a cluster node that uses the Tang server to decrypt its disk partition is not a disaster. Whether the machine was stolen, suffered hardware failure, or another loss scenario is not important: the disks are encrypted and considered unrecoverable.

However, in the event of theft, a precautionary rotation of the Tang server’s keys and rekeying of all remaining nodes would be prudent to ensure the disks remain unrecoverable even in the event the thieves subsequently gain access to the Tang servers.

To recover from this situation, either reinstall or replace the node.

17.4.2. Planning for a loss of client network connectivity

The loss of network connectivity to an individual node will cause it to become unable to boot in an unattended fashion.

If you are planning work that might cause a loss of network connectivity, you can reveal the passphrase for an onsite technician to use manually, and then rotate the keys afterwards to invalidate it:

Procedure

Before the network becomes unavailable, show the password used in the first slot (-s 1) of device /dev/vda2 with this command:

    $ sudo clevis luks pass -d /dev/vda2 -s 1

Invalidate that value and regenerate a new random boot-time passphrase with this command:

    $ sudo clevis luks regen -d /dev/vda2 -s 1

17.4.3. Unexpected loss of network connectivity

If the network disruption is unexpected and a node reboots, consider the following scenarios:

- If any nodes are still online, ensure that they do not reboot until network connectivity is restored. This is not applicable for single-node clusters.

- The node remains offline until either network connectivity is restored or a pre-established passphrase is entered manually at the console. In exceptional circumstances, network administrators might be able to reconfigure network segments to reestablish access, but this runs counter to the intent of NBDE, which is that a lack of network access means a lack of ability to boot.

- The lack of network access at the node can reasonably be expected to impact that node’s ability to function as well as its ability to boot. Even if the node were to boot via manual intervention, the lack of network access would make it effectively useless.
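
Before allowing a node that is still online to reboot, it can help to confirm that at least one of its Tang servers is reachable. The following is a minimal sketch, not part of the documented procedure: it probes each server's advertisement endpoint (`/adv`) with `curl`. The function names and any URLs you pass in are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch: report which Tang servers answer on their advertisement endpoint.
# The URLs are supplied by the caller; nothing here is specific to a cluster.

# Returns 0 if the Tang server at $1 serves its advertisement within 5 seconds.
tang_reachable() {
    curl --silent --fail --max-time 5 --output /dev/null "${1%/}/adv"
}

# Prints one "<url> reachable" or "<url> unreachable" line per argument.
# Returns 0 if at least one server responded, 1 otherwise.
check_tang_servers() {
    local url
    local status=1
    for url in "$@"; do
        if tang_reachable "$url"; then
            echo "$url reachable"
            status=0
        else
            echo "$url unreachable"
        fi
    done
    return "$status"
}
```

A call such as `check_tang_servers http://tang1.example.com http://tang2.example.com` (hypothetical host names) returns a non-zero status when no server answers, which a maintenance script could use as a signal to postpone a reboot.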

17.4.4. Recovering network connectivity manually

A somewhat complex and manually intensive process is also available to the onsite technician for network recovery.

Procedure

- The onsite technician extracts the Clevis header from the hard disks. Depending on BIOS lockdown, this might involve removing the disks and installing them in a lab machine.

- The onsite technician transmits the Clevis headers to a colleague with legitimate access to the Tang network who then performs the decryption.

- Because access to the Tang network must remain restricted, the technician should not be able to reach that network through a VPN or other remote connectivity. Similarly, the technician cannot patch the remote server through to this network to decrypt the disks automatically.

- The technician reinstalls the disk and manually enters the plain text passphrase provided by their colleague.

- The machine successfully starts even without direct access to the Tang servers. Note that the transmission of the key material from the install site to another site with network access must be done carefully.

- When network connectivity is restored, the technician rotates the encryption keys.
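
The header extraction in the first step might be sketched as follows. This assumes a LUKS2 device, where Clevis stores its binding metadata as a token inside the LUKS header, so that a header backup made with `cryptsetup luksHeaderBackup` carries it along. The function name and the output path are hypothetical, and how the colleague then recovers the passphrase from the header is not shown here.

```shell
#!/usr/bin/env bash
# Sketch: copy the LUKS header of a device into a file for transfer.
# Assumption: LUKS2, where the Clevis binding lives in a header token.

# Copies the header of device $1 into file $2.
# Refuses to overwrite an existing file.
extract_luks_header() {
    local device=$1
    local out=$2
    if [ -e "$out" ]; then
        echo "refusing to overwrite $out" >&2
        return 1
    fi
    cryptsetup luksHeaderBackup "$device" --header-backup-file "$out"
}
```

For example, `extract_luks_header /dev/vda2 /root/vda2-header.img` would be run as root on the machine holding the disk. The resulting file contains the key slots of the volume, so treat it as sensitive key material when transmitting it.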

17.4.5. Emergency recovery of network connectivity

If you are unable to recover network connectivity manually, consider the following steps. Be aware that these steps are discouraged if other methods to recover network connectivity are available.

- This method must only be performed by a highly trusted technician.

- Taking the Tang server’s key material to the remote site is considered to be a breach of the key material and all servers must be rekeyed and re-encrypted.

- This method must be used in extreme cases only, or as a proof of concept recovery method to demonstrate its viability.

- Equally extreme, but theoretically possible, is to power the server in question with an Uninterruptible Power Supply (UPS), transport the server to a location with network connectivity to boot and decrypt the disks, and then restore the server at the original location on battery power to continue operation.

- If you want to use a backup manual passphrase, you must create it before the failure situation occurs.

- Just as combining TPM and Tang makes attack scenarios more complex than they are with a stand-alone Tang installation, it also makes emergency disaster recovery processes more complex.

17.4.6. Loss of a network segment

The loss of a network segment, making a Tang server temporarily unavailable, has the following consequences:

- OpenShift Container Platform nodes continue to boot as normal, provided other servers are available.

- New nodes cannot establish their encryption keys until the network segment is restored. In this case, ensure connectivity to remote geographic locations for the purposes of high availability and redundancy. This is because when you are installing a new node or rekeying an existing node, all of the Tang servers you are referencing in that operation must be available.

A hybrid model for a vastly diverse network, such as five geographic regions in which each client is connected to the closest three Tang servers, is worth investigating.

In this scenario, new clients are able to establish their encryption keys with the subset of servers that are reachable. For example, in the set of tang1, tang2, and tang3 servers, if tang2 becomes unreachable, clients can still establish their encryption keys with tang1 and tang3, and at a later time re-establish them with the full set. This can require either manual intervention or more complex automation.
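
As one possible building block for such automation, the sketch below assembles a Clevis `sss` pin configuration from whichever Tang URLs are currently reachable. The function name is hypothetical, the URLs are placeholders, and deciding which servers count as reachable is left to the caller.

```shell
#!/usr/bin/env bash
# Sketch: build a Clevis "sss" pin configuration for a set of Tang servers.

# $1 is the threshold (how many servers must answer to unlock);
# the remaining arguments are Tang server URLs.
build_sss_config() {
    local threshold=$1
    shift
    local url
    local pins=""
    for url in "$@"; do
        # Append a comma only when a previous entry already exists.
        pins="${pins:+$pins,}{\"url\":\"$url\"}"
    done
    printf '{"t":%d,"pins":{"tang":[%s]}}\n' "$threshold" "$pins"
}

# Example with placeholder host names:
#   build_sss_config 1 http://tang1.example.com http://tang3.example.com
# prints:
#   {"t":1,"pins":{"tang":[{"url":"http://tang1.example.com"},{"url":"http://tang3.example.com"}]}}
```

The printed JSON could be passed as the configuration argument of `clevis luks bind -d /dev/vda2 sss`. When tang2 is reachable again, binding again with the full set and removing the interim binding would restore the intended redundancy.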

17.4.7. Loss of a Tang server

The loss of an individual Tang server within a load balanced set of servers with identical key material is completely transparent to the clients.

The temporary failure of all Tang servers associated with the same URL, that is, the entire load balanced set, can be considered the same as the loss of a network segment. Existing clients have the ability to decrypt their disk partitions so long as another preconfigured Tang server is available. New clients cannot enroll until at least one of these servers comes back online.

You can mitigate the physical loss of a Tang server by either reinstalling the server or restoring the server from backups. Ensure that the backup and restore processes for the key material are adequately protected from unauthorized access.

17.4.8. Rekeying compromised key material

If key material is potentially exposed to unauthorized third parties, such as through the physical theft of a Tang server or associated data, immediately rotate the keys.

Procedure

- Rekey any Tang server holding the affected material.

- Rekey all clients using the Tang server.

- Destroy the original key material.

- Scrutinize any incidents that result in unintended exposure of the master encryption key. If possible, take compromised nodes offline and re-encrypt their disks.

Reformatting and reinstalling on the same physical hardware, although slow, is easy to automate and test.
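
On the Tang server side, the rekey and destroy steps above might look like the following sketch. It assumes a Tang installation that keeps its keys as `.jwk` files in `/var/db/tang` and ships the `/usr/libexec/tangd-keygen` helper; both paths are assumptions that vary by deployment, and the function names are hypothetical. It relies on Tang's convention that key files whose names start with a dot are no longer advertised but can still be used by existing clients.

```shell
#!/usr/bin/env bash
# Sketch: rotate Tang keys, then destroy the old ones once clients are rekeyed.
# Both defaults are assumptions; override them for your deployment.
TANG_DB=${TANG_DB:-/var/db/tang}
TANGD_KEYGEN=${TANGD_KEYGEN:-/usr/libexec/tangd-keygen}

# Hide every currently advertised key by renaming it with a leading dot,
# then generate a fresh set of keys in the same directory.
rotate_tang_keys() {
    local key
    for key in "$TANG_DB"/*.jwk; do
        [ -e "$key" ] || continue
        mv -- "$key" "$TANG_DB/.${key##*/}"
    done
    "$TANGD_KEYGEN" "$TANG_DB"
}

# Delete the hidden (old) keys. Run this only after every client has been
# rekeyed, because clients still bound to the old keys can no longer unlock.
destroy_old_tang_keys() {
    rm -f -- "$TANG_DB"/.*.jwk
}
```

Between the two calls, each client is rekeyed, for example with the `clevis luks regen -d /dev/vda2 -s 1` command shown earlier in this section.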