Chapter 1. Understanding OpenShift updates

1.1. Introduction to OpenShift updates

With OpenShift Container Platform 4, you can update an OpenShift Container Platform cluster with a single operation by using the web console or the OpenShift CLI (oc). Platform administrators can view new update options either by going to Administration → Cluster Settings in the web console or by looking at the output of the oc adm upgrade command.

Red Hat hosts a public OpenShift Update Service (OSUS), which serves a graph of update possibilities based on the OpenShift Container Platform release images in the official registry. The graph contains update information for any public OCP release. OpenShift Container Platform clusters are configured to connect to the OSUS by default, and the OSUS responds to clusters with information about known update targets.

An update begins when either a cluster administrator or an automatic update controller edits the custom resource (CR) of the Cluster Version Operator (CVO) with a new version. To reconcile the cluster with the newly specified version, the CVO retrieves the target release image from an image registry and begins to apply changes to the cluster.

Operators previously installed through Operator Lifecycle Manager (OLM) follow a different process for updates. See Updating installed Operators for more information.

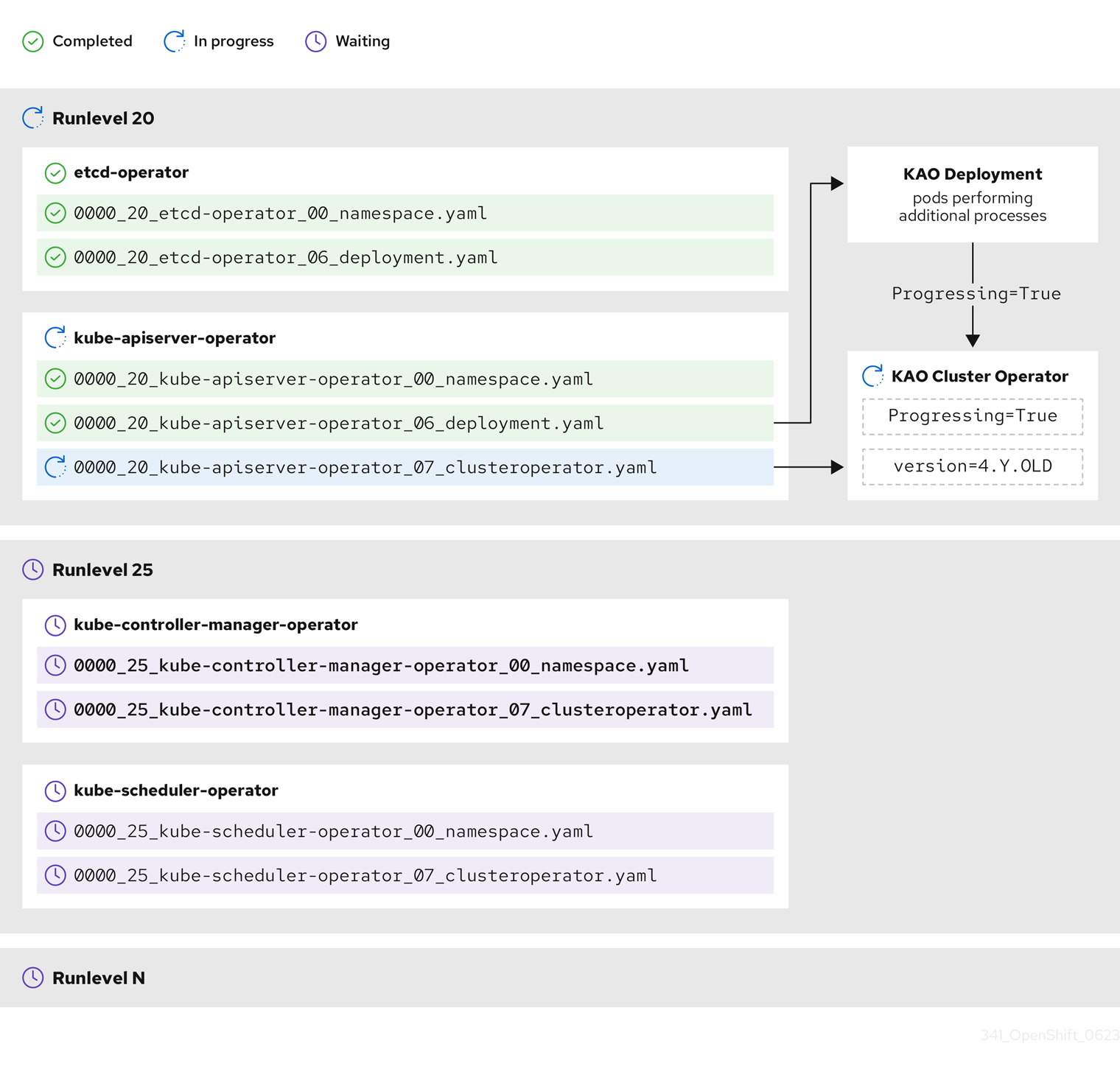

The target release image contains manifest files for all cluster components that form a specific OCP version. When updating the cluster to a new version, the CVO applies manifests in separate stages called Runlevels. Most, but not all, manifests support one of the cluster Operators. As the CVO applies a manifest to a cluster Operator, the Operator might perform update tasks to reconcile itself with its new specified version.

The CVO monitors the state of each applied resource and the states reported by all cluster Operators. The CVO only proceeds with the update when all manifests and cluster Operators in the active Runlevel reach a stable condition. After the CVO updates the entire control plane through this process, the Machine Config Operator (MCO) updates the operating system and configuration of every node in the cluster.

1.1.1. Common questions about update availability

There are several factors that affect if and when an update is made available to an OpenShift Container Platform cluster. The following list provides common questions regarding the availability of an update:

What are the differences between each of the update channels?

- A new release is initially added to the candidate channel.

- After successful final testing, a release on the candidate channel is promoted to the fast channel, an errata is published, and the release is now fully supported.

- After a delay, a release on the fast channel is finally promoted to the stable channel. This delay represents the only difference between the fast and stable channels.

  Note: For the latest z-stream releases, this delay may generally be a week or two. However, the delay for initial updates to the latest minor version may take much longer, generally 45-90 days.

- Releases promoted to the stable channel are simultaneously promoted to the eus channel. The primary purpose of the eus channel is to serve as a convenience for clusters performing a Control Plane Only update.

Is a release on the stable channel safer or more supported than a release on the fast channel?

- If a regression is identified for a release on a fast channel, it will be resolved and managed to the same extent as if that regression was identified for a release on the stable channel.

- The only difference between releases on the fast and stable channels is that a release only appears on the stable channel after it has been on the fast channel for some time, which provides more time for new update risks to be discovered.

- A release that is available on the fast channel always becomes available on the stable channel after this delay.

What does it mean if an update is supported but not recommended?

- Red Hat continuously evaluates data from multiple sources to determine whether updates from one version to another lead to issues. If an issue is identified, an update path may no longer be recommended to users. However, even if the update path is not recommended, customers are still supported if they perform the update.

Red Hat does not block users from updating to a certain version. Red Hat may declare conditional update risks, which may or may not apply to a particular cluster.

- Declared risks provide cluster administrators more context about a supported update. Cluster administrators can still accept the risk and update to that particular target version. This update is always supported despite not being recommended in the context of the conditional risk.

What if I see that an update to a particular release is no longer recommended?

- If Red Hat removes update recommendations from any supported release due to a regression, a superseding update recommendation will be provided to a future version that corrects the regression. There may be a delay while the defect is corrected, tested, and promoted to your selected channel.

How long until the next z-stream release is made available on the fast and stable channels?

While the specific cadence can vary based on a number of factors, new z-stream releases for the latest minor version are typically made available about every week. Older minor versions, which have become more stable over time, may take much longer for new z-stream releases to be made available.

Important: These are only estimates based on past data about z-stream releases. Red Hat reserves the right to change the release frequency as needed. Any number of issues could cause irregularities and delays in this release cadence.

- Once a z-stream release is published, it also appears in the fast channel for that minor version. After a delay, the z-stream release may then appear in that minor version's stable channel.

1.1.2. About the OpenShift Update Service

The OpenShift Update Service (OSUS) provides update recommendations to OpenShift Container Platform, including Red Hat Enterprise Linux CoreOS (RHCOS). It provides a graph, or diagram, that contains the vertices of component Operators and the edges that connect them. The edges in the graph show which versions you can safely update to. The vertices are update payloads that specify the intended state of the managed cluster components.

The Cluster Version Operator (CVO) in your cluster checks with the OpenShift Update Service to see the valid updates and update paths based on current component versions and information in the graph. When you request an update, the CVO uses the corresponding release image to update your cluster. The release artifacts are hosted in Quay as container images.

To allow the OpenShift Update Service to provide only compatible updates, a release verification pipeline drives automation. Each release artifact is verified for compatibility with supported cloud platforms and system architectures, as well as other component packages. After the pipeline confirms the suitability of a release, the OpenShift Update Service notifies you that it is available.

The OpenShift Update Service (OSUS) supports a single-stream release model, where only one release version is active and supported at any given time. When a new release is deployed, it fully replaces the previous release.

The updated release provides support for upgrades from all OpenShift Container Platform versions starting after 4.8 up to the new release version.

The OpenShift Update Service displays all recommended updates for your current cluster. If an update path is not recommended by the OpenShift Update Service, it might be because of a known issue with the update or the target release.

Two controllers run during continuous update mode. The first controller continuously updates the payload manifests, applies the manifests to the cluster, and outputs the controlled rollout status of the Operators to indicate whether they are available, upgrading, or failed. The second controller polls the OpenShift Update Service to determine if updates are available.

Only updating to a newer version is supported. Reverting or rolling back your cluster to a previous version is not supported. If your update fails, contact Red Hat support.

During the update process, the Machine Config Operator (MCO) applies the new configuration to your cluster machines. The MCO cordons the number of nodes specified by the maxUnavailable field on the machine configuration pool and marks them unavailable. By default, this value is set to 1. The MCO updates the affected nodes alphabetically by zone, based on the topology.kubernetes.io/zone label. If a zone has more than one node, the oldest nodes are updated first. For nodes that do not use zones, such as in bare metal deployments, the nodes are updated by age, with the oldest nodes updated first. The MCO updates the number of nodes as specified by the maxUnavailable field on the machine configuration pool at a time. The MCO then applies the new configuration and reboots the machine.
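The node ordering described above can be sketched as a sort key. This is a minimal illustration, not the MCO's implementation: the Node class and the update_order function are hypothetical names, and placing nodes without a zone label ahead of zoned nodes in a mixed cluster is an assumption made only so the sort is well defined.

```python
from dataclasses import dataclass, field
from datetime import datetime

ZONE_LABEL = "topology.kubernetes.io/zone"


@dataclass
class Node:
    """Hypothetical stand-in for the node fields that matter for ordering."""
    name: str
    created: datetime
    labels: dict = field(default_factory=dict)


def update_order(nodes):
    """Sort alphabetically by zone, then oldest first within each zone.

    Nodes without the zone label all share the empty zone "", so they
    are ordered purely by age (oldest first).
    """
    return sorted(nodes, key=lambda n: (n.labels.get(ZONE_LABEL, ""), n.created))


nodes = [
    Node("worker-c", datetime(2023, 3, 1), {ZONE_LABEL: "us-east-1b"}),
    Node("worker-a", datetime(2023, 2, 1), {ZONE_LABEL: "us-east-1b"}),
    Node("worker-b", datetime(2023, 4, 1), {ZONE_LABEL: "us-east-1a"}),
]
print([n.name for n in update_order(nodes)])
```

The zone name and node names are invented sample data. With maxUnavailable set to 1, the first element of the returned list would be the first node to be cordoned.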

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

If you use Red Hat Enterprise Linux (RHEL) machines as workers, the MCO does not update the kubelet because you must update the OpenShift API on the machines first.

With the specification for the new version applied to the old kubelet, the RHEL machine cannot return to the Ready state. You cannot complete the update until the machines are available. However, the maximum number of unavailable nodes is set to ensure that normal cluster operations can continue with that number of machines out of service.

The OpenShift Update Service is composed of an Operator and one or more application instances.

1.1.3. Understanding cluster Operator condition types

The status of cluster Operators includes their condition type, which informs you of the current state of your Operator’s health. The following definitions cover a list of some common ClusterOperator condition types. Operators that have additional condition types and use Operator-specific language have been omitted.

The Cluster Version Operator (CVO) is responsible for collecting the status conditions from cluster Operators so that cluster administrators can better understand the state of the OpenShift Container Platform cluster.

- Available: The condition type Available indicates that an Operator is functional and available in the cluster. If the status is False, at least one part of the operand is non-functional and the condition requires an administrator to intervene.

- Progressing: The condition type Progressing indicates that an Operator is actively rolling out new code, propagating configuration changes, or otherwise moving from one steady state to another.

  Operators do not report the condition type Progressing as True when they are reconciling a previous known state. If the observed cluster state has changed and the Operator is reacting to it, then the status reports back as True, since it is moving from one steady state to another.

- Degraded: The condition type Degraded indicates that an Operator has a current state that does not match its required state over a period of time. The period of time can vary by component, but a Degraded status represents persistent observation of an Operator's condition. As a result, an Operator does not fluctuate in and out of the Degraded state.

  There might be a different condition type if the transition from one state to another does not persist over a long enough period to report Degraded. An Operator does not report Degraded during the course of a normal update. An Operator may report Degraded in response to a persistent infrastructure failure that requires eventual administrator intervention.

  Note: This condition type is only an indication that something may need investigation and adjustment. As long as the Operator is available, the Degraded condition does not cause user workload failure or application downtime.

- Upgradeable: The condition type Upgradeable indicates whether the Operator is safe to update based on the current cluster state. The message field contains a human-readable description of what the administrator needs to do for the cluster to successfully update. The CVO allows updates when this condition is True, Unknown, or missing.

  When the Upgradeable status is False, only minor updates are impacted, and the CVO prevents the cluster from performing impacted updates unless forced.
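The Upgradeable rule above can be expressed as a small check over a condition list. The following is a sketch under stated assumptions: the helper names find_condition and allows_minor_update are hypothetical, and the input is assumed to be a list of dictionaries with type, status, and message keys, shaped like the conditions in a ClusterOperator status.

```python
def find_condition(conditions, cond_type):
    """Return the first condition of the given type, or None if it is missing."""
    for cond in conditions:
        if cond.get("type") == cond_type:
            return cond
    return None


def allows_minor_update(conditions):
    """Mirror the rule in the text: updates are allowed when Upgradeable
    is True, Unknown, or missing. Only an explicit False blocks them."""
    cond = find_condition(conditions, "Upgradeable")
    return cond is None or cond.get("status") in ("True", "Unknown")


# Invented sample data for illustration.
blocked = [
    {"type": "Available", "status": "True"},
    {"type": "Upgradeable", "status": "False", "message": "example reason"},
]
print(allows_minor_update(blocked))   # explicit False blocks minor updates
print(allows_minor_update([]))        # a missing condition does not block
```

The message of a False condition is what an administrator would read to find out what needs to change before the update can proceed.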

1.1.4. Understanding cluster version condition types

The Cluster Version Operator (CVO) monitors cluster Operators and other components, and is responsible for collecting the status of both the cluster version and its Operators. This status includes the condition type, which informs you of the health and current state of the OpenShift Container Platform cluster.

In addition to Available, Progressing, and Upgradeable, there are condition types that affect cluster versions and Operators.

- Failing: The cluster version condition type Failing indicates that a cluster cannot reach its desired state, is unhealthy, and requires an administrator to intervene.

- Invalid: The cluster version condition type Invalid indicates that the cluster version has an error that prevents the server from taking action. The CVO only reconciles the current state as long as this condition is set.

- RetrievedUpdates: The cluster version condition type RetrievedUpdates indicates whether or not available updates have been retrieved from the upstream update server. The condition is Unknown before retrieval, False if the updates either recently failed or could not be retrieved, or True if the availableUpdates field is both recent and accurate.

- ReleaseAccepted: The cluster version condition type ReleaseAccepted with a True status indicates that the requested release payload was successfully loaded without failure during image verification and precondition checking.

- ImplicitlyEnabledCapabilities: The cluster version condition type ImplicitlyEnabledCapabilities with a True status indicates that there are enabled capabilities that the user is not currently requesting through spec.capabilities. The CVO does not support disabling capabilities if any associated resources were previously managed by the CVO.

1.1.5. Common terms

- Control plane

- The control plane, which is composed of control plane machines, manages the OpenShift Container Platform cluster. The control plane machines manage workloads on the compute machines, which are also known as worker machines.

- Cluster Version Operator

- The Cluster Version Operator (CVO) starts the update process for the cluster. It checks with OSUS based on the current cluster version and retrieves the graph which contains available or possible update paths.

- Machine Config Operator

- The Machine Config Operator (MCO) is a cluster-level Operator that manages the operating system and machine configurations. Through the MCO, platform administrators can configure and update systemd, CRI-O and Kubelet, the kernel, NetworkManager, and other system features on the worker nodes.

- OpenShift Update Service

- The OpenShift Update Service (OSUS) provides over-the-air updates to OpenShift Container Platform, including to Red Hat Enterprise Linux CoreOS (RHCOS). It provides a graph, or diagram, that contains the vertices of component Operators and the edges that connect them.

- Channels

- Channels declare an update strategy tied to minor versions of OpenShift Container Platform. The OSUS uses this configured strategy to recommend update edges consistent with that strategy.

- Recommended update edge

- A recommended update edge is a recommended update between OpenShift Container Platform releases. Whether a given update is recommended can depend on the cluster’s configured channel, current version, known bugs, and other information. OSUS communicates the recommended edges to the CVO, which runs in every cluster.

1.2. How cluster updates work

The following sections describe each major aspect of the OpenShift Container Platform (OCP) update process in detail. For a general overview of how updates work, see the Introduction to OpenShift updates.

1.2.1. The Cluster Version Operator

The Cluster Version Operator (CVO) is the primary component that orchestrates and facilitates the OpenShift Container Platform update process. During installation and standard cluster operation, the CVO constantly compares the manifests of managed cluster Operators to in-cluster resources and reconciles discrepancies to ensure that the actual state of these resources matches their desired state.

1.2.1.1. The ClusterVersion object

One of the resources that the Cluster Version Operator (CVO) monitors is the ClusterVersion resource.

Administrators and OpenShift components can communicate or interact with the CVO through the ClusterVersion object. The desired CVO state is declared through the ClusterVersion object and the current CVO state is reflected in the object’s status.

Do not directly modify the ClusterVersion object. Instead, use interfaces such as the oc CLI or the web console to declare your update target.

The CVO continually reconciles the cluster with the target state declared in the spec property of the ClusterVersion resource. When the desired release differs from the actual release, that reconciliation updates the cluster.

1.2.1.1.1. Update availability data

The ClusterVersion resource also contains information about updates that are available to the cluster. This includes updates that are available, but not recommended due to a known risk that applies to the cluster. These updates are known as conditional updates. To learn how the CVO maintains this information about available updates in the ClusterVersion resource, see the "Evaluation of update availability" section.

You can inspect all available updates with the following command:

$ oc adm upgrade --include-not-recommended

Note: The additional --include-not-recommended parameter includes updates that are available but not recommended due to a known risk that applies to the cluster.

The oc adm upgrade command queries the ClusterVersion resource for information about available updates and presents it in a human-readable format.

One way to directly inspect the underlying availability data created by the CVO is by querying the ClusterVersion resource with the following command:

$ oc get clusterversion version -o json | jq '.status.availableUpdates'

A similar command can be used to check conditional updates:

$ oc get clusterversion version -o json | jq '.status.conditionalUpdates'
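As an alternative to jq, the same two fields can be read from the JSON document in a script. This is a hedged sketch: the function name summarize_updates is hypothetical, and the field layout (a version key on each availableUpdates entry, and release.version plus risks[].name on each conditionalUpdates entry) is an assumption about the ClusterVersion status that you should confirm against your own cluster's output. The sample document is invented.

```python
import json


def summarize_updates(cluster_version):
    """Split a ClusterVersion document into recommended versions and
    (version, risk names) pairs for conditional updates."""
    status = cluster_version.get("status", {})
    # Either field may be absent or null when there is nothing to report.
    recommended = [u["version"] for u in status.get("availableUpdates") or []]
    conditional = [
        (u["release"]["version"], [r["name"] for r in u.get("risks", [])])
        for u in status.get("conditionalUpdates") or []
    ]
    return recommended, conditional


# Invented sample; in practice this text would be the output of
# `oc get clusterversion version -o json`.
sample = json.loads("""
{
  "status": {
    "availableUpdates": [{"version": "4.14.2"}],
    "conditionalUpdates": [
      {"release": {"version": "4.14.3"}, "risks": [{"name": "ExampleRisk"}]}
    ]
  }
}
""")
print(summarize_updates(sample))
```

Treating a null field as an empty list keeps the function usable on clusters that currently have no recommended or conditional updates.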

1.2.1.2. Evaluation of update availability

The Cluster Version Operator (CVO) periodically queries the OpenShift Update Service (OSUS) for the most recent data about update possibilities. This data is based on the cluster’s subscribed channel. The CVO then saves information about update recommendations into either the availableUpdates or conditionalUpdates field of its ClusterVersion resource.

The CVO periodically checks the conditional updates for update risks. These risks are conveyed through the data served by the OSUS, which contains information for each version about known issues that might affect a cluster updated to that version. Most risks are limited to clusters with specific characteristics, such as clusters with a certain size or clusters that are deployed in a particular cloud platform.

The CVO continuously evaluates its cluster characteristics against the conditional risk information for each conditional update. If the CVO finds that the cluster matches the criteria, the CVO stores this information in the conditionalUpdates field of its ClusterVersion resource. If the CVO finds that the cluster does not match the risks of an update, or that there are no risks associated with the update, it stores the target version in the availableUpdates field of its ClusterVersion resource.
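The sorting of targets into the two fields can be illustrated with a short sketch. Everything here is hypothetical: the classify_updates function, the dictionary shapes, and in particular the applies callables, which stand in for whatever matching criteria the OSUS data carries for a risk. The sketch shows only the decision described above, not how the CVO evaluates risks internally.

```python
def classify_updates(cluster, targets):
    """Return (available, conditional) following the rule in the text:
    a target with at least one risk that matches the cluster is
    conditional; a target with no matching risk is available."""
    available, conditional = [], []
    for target in targets:
        matching = [
            risk["name"]
            for risk in target.get("risks", [])
            if risk["applies"](cluster)  # hypothetical predicate
        ]
        if matching:
            conditional.append({"version": target["version"], "risks": matching})
        else:
            available.append(target["version"])
    return available, conditional


# Invented cluster characteristics and risks for illustration.
cluster = {"platform": "aws", "nodes": 10}
targets = [
    {"version": "4.14.2", "risks": []},
    {"version": "4.14.3", "risks": [
        {"name": "ExampleAWSRisk", "applies": lambda c: c["platform"] == "aws"},
    ]},
    {"version": "4.14.4", "risks": [
        {"name": "ExampleGCPRisk", "applies": lambda c: c["platform"] == "gcp"},
    ]},
]
print(classify_updates(cluster, targets))
```

Note that 4.14.4 lands in the available list even though a risk is declared for it, because that risk does not match this cluster.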

The user interface, either the web console or the OpenShift CLI (oc), presents this information in sectioned headings to the administrator. Each supported but not recommended update recommendation contains a link to further resources about the risk so that the administrator can make an informed decision about the update.

1.2.2. Release images

A release image is the delivery mechanism for a specific OpenShift Container Platform (OCP) version. It contains the release metadata, a Cluster Version Operator (CVO) binary matching the release version, every manifest needed to deploy individual OpenShift cluster Operators, and a list of SHA digest-versioned references to all container images that make up this OpenShift version.

You can inspect the content of a specific release image by running the following command:

$ oc adm release extract <release image>

For example:

$ oc adm release extract quay.io/openshift-release-dev/ocp-release:4.12.6-x86_64
Extracted release payload from digest sha256:800d1e39d145664975a3bb7cbc6e674fbf78e3c45b5dde9ff2c5a11a8690c87b created at 2023-03-01T12:46:29Z

$ ls

1.2.3. Update process workflow

The following steps represent a detailed workflow of the OpenShift Container Platform (OCP) update process:

- The target version is stored in the spec.desiredUpdate.version field of the ClusterVersion resource, which may be managed through the web console or the CLI.

- The Cluster Version Operator (CVO) detects that the desiredUpdate in the ClusterVersion resource differs from the current cluster version. Using graph data from the OpenShift Update Service, the CVO resolves the desired cluster version to a pull spec for the release image.

- The CVO validates the integrity and authenticity of the release image. Red Hat publishes cryptographically-signed statements about published release images at predefined locations by using image SHA digests as unique and immutable release image identifiers. The CVO utilizes a list of built-in public keys to validate the presence and signatures of the statement matching the checked release image.

- The CVO creates a job named version-$version-$hash in the openshift-cluster-version namespace. This job uses containers that are executing the release image, so the cluster downloads the image through the container runtime. The job then extracts the manifests and metadata from the release image to a shared volume that is accessible to the CVO.

- The CVO validates the extracted manifests and metadata.

- The CVO checks some preconditions to ensure that no problematic condition is detected in the cluster. Certain conditions can prevent updates from proceeding. These conditions are either determined by the CVO itself, or reported by individual cluster Operators that detect some details about the cluster that the Operator considers problematic for the update.

- The CVO records the accepted release in status.desired and creates a status.history entry about the new update.

- The CVO begins reconciling the manifests from the release image. Cluster Operators are updated in separate stages called Runlevels, and the CVO ensures that all Operators in a Runlevel finish updating before it proceeds to the next level.

- Manifests for the CVO itself are applied early in the process. When the CVO deployment is applied, the current CVO pod stops, and a CVO pod that uses the new version starts. The new CVO proceeds to reconcile the remaining manifests.

- The update proceeds until the entire control plane is updated to the new version. Individual cluster Operators might perform update tasks on their domain of the cluster, and while they do so, they report their state through the Progressing=True condition.

- The Machine Config Operator (MCO) manifests are applied towards the end of the process. The updated MCO then begins updating the system configuration and operating system of every node. Each node might be drained, updated, and rebooted before it starts to accept workloads again.

The cluster reports as updated after the control plane update is finished, usually before all nodes are updated. After the update, the CVO maintains all cluster resources to match the state delivered in the release image.

1.2.4. Understanding how manifests are applied during an update

Some manifests supplied in a release image must be applied in a certain order because of the dependencies between them. For example, the CustomResourceDefinition resource must be created before the matching custom resources. Additionally, there is a logical order in which the individual cluster Operators must be updated to minimize disruption in the cluster. The Cluster Version Operator (CVO) implements this logical order through the concept of Runlevels.

These dependencies are encoded in the filenames of the manifests in the release image:

0000_<runlevel>_<component>_<manifest-name>.yaml

For example:

0000_03_config-operator_01_proxy.crd.yaml

The CVO internally builds a dependency graph for the manifests, where the CVO obeys the following rules:

- During an update, manifests at a lower Runlevel are applied before those at a higher Runlevel.

- Within one Runlevel, manifests for different components can be applied in parallel.

- Within one Runlevel, manifests for a single component are applied in lexicographic order.

The CVO then applies manifests following the generated dependency graph.
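The three ordering rules can be sketched by parsing the filename convention and grouping the results. This is an illustration only, not the CVO's code: the apply_plan function and the regular expression are hypothetical, the sketch assumes that component names contain no underscore, and every filename in the sample other than 0000_03_config-operator_01_proxy.crd.yaml is invented.

```python
import re
from collections import defaultdict

# 0000_<runlevel>_<component>_<manifest-name>.yaml
MANIFEST_RE = re.compile(
    r"^0000_(?P<runlevel>\d+)_(?P<component>[^_]+)_(?P<name>.+)\.yaml$"
)


def apply_plan(filenames):
    """Return [(runlevel, {component: [files...]}), ...].

    Runlevels are in ascending order, and files within one component are
    in lexicographic order. Components inside the same Runlevel have no
    ordering between them, which models "can be applied in parallel".
    """
    levels = defaultdict(lambda: defaultdict(list))
    for filename in filenames:
        match = MANIFEST_RE.match(filename)
        if match is None:
            continue  # skip files that do not follow the convention
        levels[int(match["runlevel"])][match["component"]].append(filename)
    return [
        (runlevel, {comp: sorted(files) for comp, files in sorted(comps.items())})
        for runlevel, comps in sorted(levels.items())
    ]


files = [
    "0000_10_config-operator_01_example.yaml",
    "0000_03_config-operator_02_example.yaml",
    "0000_03_config-operator_01_proxy.crd.yaml",
    "0000_03_other-operator_01_example.yaml",
]
for runlevel, components in apply_plan(files):
    print(runlevel, components)
```

Sorting components by name in the output is only for stable printing; it does not imply an apply order between components.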

For some resource types, the CVO monitors the resource after its manifest is applied, and considers it to be successfully updated only after the resource reaches a stable state. Achieving this state can take some time. This is especially true for ClusterOperator resources, while the CVO waits for a cluster Operator to update itself and then update its ClusterOperator status.

The CVO waits until all cluster Operators in the Runlevel meet the following conditions before it proceeds to the next Runlevel:

- The cluster Operators have an Available=True condition.

- The cluster Operators have a Degraded=False condition.

- The cluster Operators declare they have achieved the desired version in their ClusterOperator resource.
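The three conditions above can be combined into a single readiness check. The sketch below is hedged: operator_ready and runlevel_complete are hypothetical names, and reading the achieved version from the status.versions entry named "operator" is an assumption about how a ClusterOperator reports its version. The sample objects are invented.

```python
def operator_ready(cluster_operator, target_version):
    """True when the Operator is Available=True, Degraded=False, and
    reports the target version in its status."""
    status = cluster_operator.get("status", {})
    conditions = {c["type"]: c["status"] for c in status.get("conditions", [])}
    versions = {v["name"]: v["version"] for v in status.get("versions", [])}
    return (
        conditions.get("Available") == "True"
        and conditions.get("Degraded") == "False"
        # Assumption: the "operator" entry carries the achieved version.
        and versions.get("operator") == target_version
    )


def runlevel_complete(cluster_operators, target_version):
    """The next Runlevel may start only when every Operator is ready."""
    return all(operator_ready(co, target_version) for co in cluster_operators)


def make_operator(version, degraded="False"):
    """Build an invented ClusterOperator-like dictionary for the example."""
    return {"status": {
        "conditions": [
            {"type": "Available", "status": "True"},
            {"type": "Degraded", "status": degraded},
        ],
        "versions": [{"name": "operator", "version": version}],
    }}


updated = make_operator("4.14.1")
still_updating = make_operator("4.14.0")  # new version not yet declared
print(runlevel_complete([updated, still_updating], "4.14.1"))
```

An Operator that is healthy but has not yet declared the new version holds the Runlevel open, which matches the waiting behavior described for ClusterOperator resources.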

Some actions can take significant time to finish. The CVO waits for the actions to complete in order to ensure the subsequent Runlevels can proceed safely. Initially reconciling the new release’s manifests is expected to take 60 to 120 minutes in total; see Understanding OpenShift Container Platform update duration for more information about factors that influence update duration.

In the previous example diagram, the CVO is waiting until all work is completed at Runlevel 20. The CVO has applied all manifests to the Operators in the Runlevel, but the kube-apiserver-operator ClusterOperator performs some actions after its new version was deployed. The kube-apiserver-operator ClusterOperator declares this progress through the Progressing=True condition and by not declaring the new version as reconciled in its status.versions. The CVO waits until the ClusterOperator reports an acceptable status, and then it will start reconciling manifests at Runlevel 25.

1.2.5. Understanding how the Machine Config Operator updates nodes

The Machine Config Operator (MCO) applies a new machine configuration to each control plane node and compute node. During the machine configuration update, control plane nodes and compute nodes are organized into their own machine config pools, where the pools of machines are updated in parallel. The .spec.maxUnavailable parameter, which has a default value of 1, determines how many nodes in a machine config pool can simultaneously undergo the update process.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

When the machine configuration update process begins, the MCO checks the number of currently unavailable nodes in a pool. If there are fewer unavailable nodes than the value of .spec.maxUnavailable, the MCO initiates the following sequence of actions on available nodes in the pool:

- Cordon and drain the node

  Note: When a node is cordoned, workloads cannot be scheduled to it.

- Update the system configuration and operating system (OS) of the node

- Reboot the node

- Uncordon the node

A node undergoing this process is unavailable until it is uncordoned and workloads can be scheduled to it again. The MCO begins updating nodes until the number of unavailable nodes is equal to the value of .spec.maxUnavailable.

As a node completes its update and becomes available, the number of unavailable nodes in the machine config pool is once again fewer than .spec.maxUnavailable. If there are remaining nodes that need to be updated, the MCO initiates the update process on a node until the .spec.maxUnavailable limit is once again reached. This process repeats until each control plane node and compute node has been updated.

The following example workflow describes how this process might occur in a machine config pool with 5 nodes, where .spec.maxUnavailable is 3 and all nodes are initially available:

- The MCO cordons nodes 1, 2, and 3, and begins to drain them.

- Node 2 finishes draining, reboots, and becomes available again. The MCO cordons node 4 and begins draining it.

- Node 1 finishes draining, reboots, and becomes available again. The MCO cordons node 5 and begins draining it.

- Node 3 finishes draining, reboots, and becomes available again.

- Node 5 finishes draining, reboots, and becomes available again.

- Node 4 finishes draining, reboots, and becomes available again.

Because the update process for each node is independent of other nodes, some nodes in the example above finish their update out of the order in which they were cordoned by the MCO.
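The example workflow can be reproduced with a small event simulation. This is a sketch and not MCO code: simulate_pool_update is a hypothetical function, the per-node durations are invented so that the completion order matches the example, and the simulation assumes that all nodes start out available.

```python
import heapq


def simulate_pool_update(durations, max_unavailable=1):
    """Simulate a rolling update of one machine config pool.

    durations maps node -> time units needed to drain, update, and
    reboot. Returns a list of (event, node, time) tuples.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    pending = list(durations)   # nodes in the order they will be picked
    in_progress = []            # min-heap of (finish_time, node)
    events = []
    now = 0
    while pending or in_progress:
        # Cordon more nodes until the maxUnavailable limit is reached.
        while pending and len(in_progress) < max_unavailable:
            node = pending.pop(0)
            heapq.heappush(in_progress, (now + durations[node], node))
            events.append(("cordon", node, now))
        # Jump to the moment the next node finishes, then uncordon it.
        now, node = heapq.heappop(in_progress)
        events.append(("uncordon", node, now))
    return events


# Five nodes, maxUnavailable of 3; durations are invented.
events = simulate_pool_update({1: 3, 2: 2, 3: 4, 4: 10, 5: 3}, max_unavailable=3)
for event in events:
    print(event)
```

With these durations, nodes 1, 2, and 3 are cordoned first, node 4 is cordoned when node 2 returns, node 5 when node 1 returns, and the nodes finish in the order 2, 1, 3, 5, 4, as in the workflow above.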

You can check the status of the machine configuration update by running the following command:

$ oc get mcp

Example output

NAME     CONFIG                                             UPDATED   UPDATING   DEGRADED   MACHINECOUNT   READYMACHINECOUNT   UPDATEDMACHINECOUNT   DEGRADEDMACHINECOUNT   AGE
master   rendered-master-acd1358917e9f98cbdb599aea622d78b   True      False      False      3              3                   3                     0                      22h
worker   rendered-worker-1d871ac76e1951d32b2fe92369879826   False     True       False      2              1                   1                     0                      22h

1.3. Understanding update channels and releases

Update channels are the mechanism by which users declare the OpenShift Container Platform minor version they intend to update their clusters to. They also allow users to choose the timing and level of support their updates will have through the fast, stable, candidate, and eus channel options. The Cluster Version Operator uses an update graph based on the channel declaration, along with other conditional information, to provide a list of recommended and conditional updates available to the cluster.

Update channels correspond to a minor version of OpenShift Container Platform. The version number in the channel represents the target minor version that the cluster will eventually be updated to, even if it is higher than the cluster’s current minor version.

For instance, OpenShift Container Platform 4.10 update channels provide the following recommendations:

- Updates within 4.10.

- Updates within 4.9.

- Updates from 4.9 to 4.10, allowing all 4.9 clusters to eventually update to 4.10, even if they do not immediately meet the minimum z-stream version requirements.

- eus-4.10 only: updates within 4.8.

- eus-4.10 only: updates from 4.8 to 4.9 to 4.10, allowing all 4.8 clusters to eventually update to 4.10.

4.10 update channels do not recommend updates to 4.11 or later releases. This strategy ensures that administrators must explicitly decide to update to the next minor version of OpenShift Container Platform.

Update channels control only release selection and do not impact the version of the cluster that you install. The openshift-install binary file for a specific version of OpenShift Container Platform always installs that version.

OpenShift Container Platform 4.14 offers the following update channels:

- stable-4.14

- eus-4.y (only offered for EUS versions and meant to facilitate updates between EUS versions)

- fast-4.14

- candidate-4.14

If you do not want the Cluster Version Operator to fetch available updates from the update recommendation service, you can use the oc adm upgrade channel command in the OpenShift CLI to configure an empty channel. This configuration can be helpful if, for example, a cluster has restricted network access and there is no local, reachable update recommendation service.

Red Hat recommends updating to versions suggested by OpenShift Update Service only. For a minor version update, versions must be contiguous. Red Hat does not test updates to noncontiguous versions and cannot guarantee compatibility with earlier versions.

1.3.1. Update channels

1.3.1.1. fast-4.14 channel

The fast-4.14 channel is updated with new versions of OpenShift Container Platform 4.14 as soon as Red Hat declares the version as a general availability (GA) release. As such, these releases are fully supported and intended for use in production environments.

1.3.1.2. stable-4.14 channel

While the fast-4.14 channel contains releases as soon as their errata are published, releases are added to the stable-4.14 channel after a delay. During this delay, data is collected from multiple sources and analyzed for indications of product regressions. Once a significant number of data points have been collected, these releases are added to the stable channel.

Since the time required to obtain a significant number of data points varies based on many factors, a Service Level Objective (SLO) is not offered for the delay duration between fast and stable channels. For more information, see "Choosing the correct channel for your cluster".

Newly installed clusters default to using stable channels.

1.3.1.3. eus-4.y channel

In addition to the stable channel, all even-numbered minor versions of OpenShift Container Platform offer Extended Update Support (EUS). Releases promoted to the stable channel are also simultaneously promoted to the EUS channels. The primary purpose of the EUS channels is to serve as a convenience for clusters performing a Control Plane Only update.

Both standard and non-EUS subscribers can access all EUS repositories and necessary RPMs (rhel-*-eus-rpms) to be able to support critical purposes such as debugging and building drivers.

1.3.1.4. candidate-4.14 channel

The candidate-4.14 channel offers unsupported early access to releases as soon as they are built. Releases present only in candidate channels may not contain the full feature set of eventual GA releases or features may be removed prior to GA. Additionally, these releases have not been subject to full Red Hat Quality Assurance and may not offer update paths to later GA releases. Given these caveats, the candidate channel is only suitable for testing purposes where destroying and recreating a cluster is acceptable.

1.3.1.5. Update recommendations in the channel

OpenShift Container Platform maintains an update recommendation service that knows your installed OpenShift Container Platform version and the path to take within the channel to get you to the next release. Update paths are also limited to versions relevant to your currently selected channel and its promotion characteristics.

You can imagine seeing the following releases in your channel:

- 4.14.0

- 4.14.1

- 4.14.3

- 4.14.4

The service recommends only updates that have been tested and have no known serious regressions. For example, if your cluster is on 4.14.1 and OpenShift Container Platform suggests 4.14.4, then it is recommended to update from 4.14.1 to 4.14.4.

Do not rely on consecutive patch numbers. In this example, 4.14.2 is not and never was available in the channel, therefore updates to 4.14.2 are not recommended or supported.

1.3.1.6. Update recommendations and Conditional Updates

Red Hat monitors newly released versions and update paths associated with those versions before and after they are added to supported channels.

If Red Hat removes update recommendations from any supported release, a superseding update recommendation will be provided to a future version that corrects the regression. There may however be a delay while the defect is corrected, tested, and promoted to your selected channel.

Beginning in OpenShift Container Platform 4.10, when update risks are confirmed, they are declared as Conditional Update risks for the relevant updates. Each known risk may apply to all clusters or only clusters matching certain conditions. Some examples include having the Platform set to None or the CNI provider set to OpenShiftSDN. The Cluster Version Operator (CVO) continually evaluates known risks against the current cluster state. If no risks match, the update is recommended. If the risk matches, those updates are supported but not recommended, and a reference link is provided. The reference link helps the cluster admin decide if they would like to accept the risk and update anyway.

When Red Hat chooses to declare Conditional Update risks, that action is taken in all relevant channels simultaneously. Declaration of a Conditional Update risk may happen either before or after the update has been promoted to supported channels.
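The risk evaluation described above can be illustrated with a simplified sketch. It assumes a hypothetical data model in which each risk lists the cluster properties that trigger it; the keys `name`, `url`, and `matches`, the function name, and the example values are assumptions for illustration and do not reflect how the CVO actually stores or evaluates risks.

```python
def evaluate_update(risks, cluster_state):
    """Classify one update target against its declared risks.

    risks: list of dicts with hypothetical keys "name", "url", and
           "matches" (cluster properties that trigger the risk; an
           empty dict means the risk applies to all clusters).
    cluster_state: dict of current cluster properties.
    Returns (status, reference_links).
    """
    matched = [
        risk for risk in risks
        if all(cluster_state.get(key) == value
               for key, value in risk["matches"].items())
    ]
    if not matched:
        return "recommended", []
    return "supported but not recommended", [risk["url"] for risk in matched]


# Hypothetical risk that only applies when the platform is None.
risks = [{"name": "ExampleRisk",
          "url": "https://example.com/risk",
          "matches": {"platform": "None"}}]

print(evaluate_update(risks, {"platform": "AWS"}))
# ('recommended', [])
print(evaluate_update(risks, {"platform": "None"}))
# ('supported but not recommended', ['https://example.com/risk'])
```

The returned links stand in for the reference link that helps the cluster administrator decide whether to accept the risk and update anyway.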

1.3.1.7. Choosing the correct channel for your cluster

Choosing the appropriate channel involves two decisions.

First, select the minor version you want for your cluster update. Selecting a channel which matches your current version ensures that you only apply z-stream updates and do not receive feature updates. Selecting an available channel which has a version greater than your current version will ensure that after one or more updates your cluster will have updated to that version. Your cluster will only be offered channels which match its current version, the next version, or the next EUS version.

Due to the complexity involved in planning updates between versions many minors apart, channels that assist in planning updates beyond a single Control Plane Only update are not offered.
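As a rough sketch of the first decision, the following function lists the minor versions whose channels a cluster might be offered: the current version, the next version, and the next EUS version. It assumes that minor versions are represented as integers (14 for 4.14) and that EUS versions are the even-numbered minors, as described in the eus-4.y section; the function name is hypothetical.

```python
def offered_minor_versions(current_minor):
    """Return the minor versions whose channels could be offered.

    current_minor: the y in 4.y, as an integer.
    Assumes EUS versions are the even-numbered minor versions.
    """
    next_minor = current_minor + 1
    # From an even (EUS) minor, the next EUS is two minors ahead;
    # from an odd minor, it is the very next minor.
    if current_minor % 2 == 0:
        next_eus = current_minor + 2
    else:
        next_eus = current_minor + 1
    return sorted({current_minor, next_minor, next_eus})


print(offered_minor_versions(12))  # [12, 13, 14]
print(offered_minor_versions(13))  # [13, 14]
```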

Second, choose your desired rollout strategy. You can update as soon as Red Hat declares a release GA by selecting a fast channel, or you can wait for Red Hat to promote releases to the stable channel. Update recommendations offered in the fast-4.14 and stable-4.14 channels are both fully supported and benefit equally from ongoing data analysis. The promotion delay before a release is added to the stable channel is the only difference between the two channels. Updates to the latest z-streams are generally promoted to the stable channel within a week or two; however, the delay when initially rolling out updates to the latest minor version is much longer, generally 45-90 days. Consider the promotion delay when choosing your channel, because waiting for promotion to the stable channel might affect your scheduling plans.

Additionally, there are several factors which may lead an organization to move clusters to the fast channel either permanently or temporarily including:

- The desire to apply a specific fix known to affect your environment without delay.

- Application of CVE fixes without delay. CVE fixes may introduce regressions, so promotion delays still apply to z-streams with CVE fixes.

- Internal testing processes. If it takes your organization several weeks to qualify releases, it is best to test concurrently with our promotion process rather than waiting. This also ensures that any telemetry signal provided to Red Hat is factored into our rollout, so issues relevant to you can be fixed faster.

1.3.1.8. Restricted network clusters

If you manage the container images for your OpenShift Container Platform clusters yourself, you must consult the Red Hat errata that is associated with product releases and note any comments that impact updates. During an update, the user interface might warn you about switching between these versions, so you must ensure that you selected an appropriate version before you bypass those warnings.

1.3.1.9. Switching between channels

A channel can be switched from the web console or through the oc adm upgrade channel command:

oc adm upgrade channel <channel>

The web console will display an alert if you switch to a channel that does not include the current release. The web console does not recommend any updates while on a channel without the current release. You can return to the original channel at any point, however.

Changing your channel might impact the supportability of your cluster. The following conditions might apply:

- Your cluster is still supported if you change from the stable-4.14 channel to the fast-4.14 channel.

- You can switch to the candidate-4.14 channel at any time, but some releases for this channel might be unsupported.

- You can switch from the candidate-4.14 channel to the fast-4.14 channel if your current release is a general availability release.

- You can always switch from the fast-4.14 channel to the stable-4.14 channel. There is a possible delay of up to a day for the release to be promoted to stable-4.14 if the current release was recently promoted.

1.4. Understanding OpenShift Container Platform update duration

OpenShift Container Platform update duration varies based on the deployment topology. This page helps you understand the factors that affect update duration and estimate how long the cluster update takes in your environment.

1.4.1. Factors affecting update duration

The following factors can affect your cluster update duration:

- The reboot of compute nodes to the new machine configuration by Machine Config Operator (MCO)

- The value of maxUnavailable in the machine config pool

  Warning: The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

- The minimum number or percentages of replicas set in pod disruption budget (PDB)

- The number of nodes in the cluster

- The health of the cluster nodes

1.4.2. Cluster update phases

In OpenShift Container Platform, the cluster update happens in two phases:

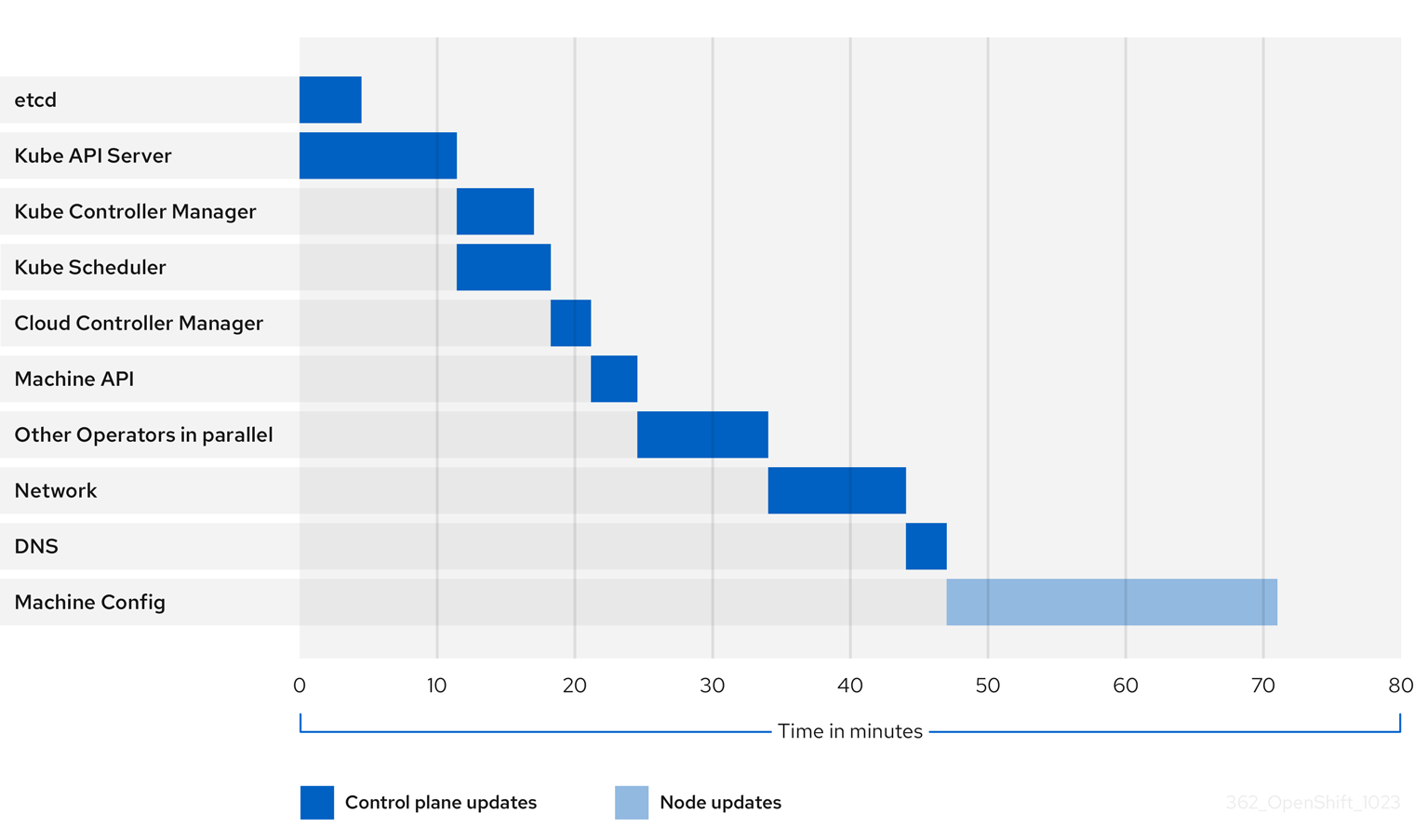

- Cluster Version Operator (CVO) target update payload deployment

- Machine Config Operator (MCO) node updates

1.4.2.1. Cluster Version Operator target update payload deployment

The Cluster Version Operator (CVO) retrieves the target update release image and applies it to the cluster. All components that run as pods are updated during this phase, whereas the host components are updated by the Machine Config Operator (MCO). This process might take 60 to 120 minutes.

The CVO phase of the update does not restart the nodes.

1.4.2.2. Machine Config Operator node updates

The Machine Config Operator (MCO) applies a new machine configuration to each control plane and compute node. During this process, the MCO performs the following sequential actions on each node of the cluster:

- Cordon and drain the node

- Update the operating system (OS)

- Reboot the node

- Uncordon the node and schedule workloads on it

When a node is cordoned, workloads cannot be scheduled to it.

The time to complete this process depends on several factors including the node and infrastructure configuration. This process might take 5 or more minutes to complete per node.

In addition to MCO, you should consider the impact of the following parameters:

- The control plane node update duration is predictable and oftentimes shorter than compute nodes, because the control plane workloads are tuned for graceful updates and quick drains.

- You can update the compute nodes in parallel by setting the maxUnavailable field to greater than 1 in the Machine Config Pool (MCP). The MCO cordons the number of nodes specified in maxUnavailable and marks them unavailable for update.

- When you increase maxUnavailable on the MCP, it can help the pool to update more quickly. However, if maxUnavailable is set too high, and several nodes are cordoned simultaneously, the pod disruption budget (PDB) guarded workloads could fail to drain because a schedulable node cannot be found to run the replicas. If you increase maxUnavailable for the MCP, ensure that you still have sufficient schedulable nodes to allow PDB guarded workloads to drain.

Before you begin the update, you must ensure that all the nodes are available. Any unavailable nodes can significantly impact the update duration because the node unavailability affects the maxUnavailable and pod disruption budgets.

To check the status of nodes from the terminal, run the following command:

oc get node

If the status of the node is NotReady or SchedulingDisabled, then the node is not available and this impacts the update duration.

You can check the status of nodes from the Administrator perspective in the web console by expanding Compute → Nodes.
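The availability check described above can also be scripted. The following is a minimal sketch that scans the text printed by oc get node for nodes whose status includes NotReady or SchedulingDisabled. It assumes the default column layout (NAME, STATUS, ROLES, AGE, VERSION); the function name, node names, and values in the sample text are hypothetical and are not output from a real cluster.

```python
def unavailable_nodes(oc_get_node_output):
    """Return names of nodes reported as NotReady or SchedulingDisabled.

    oc_get_node_output: text as printed by `oc get node`, assuming the
    default columns NAME, STATUS, ROLES, AGE, VERSION.
    """
    unavailable = []
    lines = oc_get_node_output.strip().splitlines()
    for line in lines[1:]:                  # skip the header row
        fields = line.split()
        if len(fields) < 2:
            continue
        name, status = fields[0], fields[1]
        conditions = status.split(",")      # e.g. "Ready,SchedulingDisabled"
        if "NotReady" in conditions or "SchedulingDisabled" in conditions:
            unavailable.append(name)
    return unavailable


# Hypothetical sample text, for illustration only.
sample = """\
NAME       STATUS                     ROLES    AGE   VERSION
worker-0   Ready                      worker   22h   v1.27.0
worker-1   Ready,SchedulingDisabled   worker   22h   v1.27.0
worker-2   NotReady                   worker   22h   v1.27.0
"""
print(unavailable_nodes(sample))  # ['worker-1', 'worker-2']
```

An empty result suggests that all nodes are available before the update begins.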

1.4.2.3. Example update duration of cluster Operators

The previous diagram shows an example of the time that cluster Operators might take to update to their new versions. The example is based on a three-node AWS OVN cluster, which has a healthy compute MachineConfigPool and no workloads that take long to drain, updating from 4.13 to 4.14.

- The specific update duration of a cluster and its Operators can vary based on several cluster characteristics, such as the target version, the number of nodes, and the types of workloads scheduled to the nodes.

- Some Operators, such as the Cluster Version Operator, update themselves in a short amount of time. These Operators have either been omitted from the diagram or are included in the broader group of Operators labeled "Other Operators in parallel".

Each cluster Operator has characteristics that affect the time it takes to update itself. For instance, the Kube API Server Operator in this example took more than eleven minutes to update because kube-apiserver provides graceful termination support, meaning that existing, in-flight requests are allowed to complete gracefully. This might result in a longer shutdown of the kube-apiserver. In the case of this Operator, update speed is sacrificed to help prevent and limit disruptions to cluster functionality during an update.

Another characteristic that affects the update duration of an Operator is whether the Operator utilizes DaemonSets. The Network and DNS Operators utilize full-cluster DaemonSets, which can take time to roll out their version changes, and this is one of several reasons why these Operators might take longer to update themselves.

The update duration for some Operators is heavily dependent on characteristics of the cluster itself. For instance, the Machine Config Operator update applies machine configuration changes to each node in the cluster. A cluster with many nodes has a longer update duration for the Machine Config Operator compared to a cluster with fewer nodes.

Each cluster Operator is assigned a stage during which it can be updated. Operators within the same stage can update simultaneously, and Operators in a given stage cannot begin updating until all previous stages have been completed. For more information, see "Understanding how manifests are applied during an update" in the "Additional resources" section.

1.4.3. Estimating cluster update time

The historical update duration of similar clusters provides the best estimate for future cluster updates. However, if historical data is not available, you can use the following convention to estimate your cluster update time:

Cluster update time = CVO target update payload deployment time + (# node update iterations x MCO node update time)

A node update iteration consists of one or more nodes updated in parallel. The control plane nodes are always updated in parallel with the compute nodes. In addition, one or more compute nodes can be updated in parallel based on the maxUnavailable value.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Container Platform. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

For example, to estimate the update time, consider an OpenShift Container Platform cluster with three control plane nodes and six compute nodes, where each host takes about 5 minutes to reboot.

The time it takes to reboot a particular node varies significantly. In cloud instances, the reboot might take about 1 to 2 minutes, whereas in physical bare metal hosts the reboot might take more than 15 minutes.

Scenario-1

When you set maxUnavailable to 1 for both the control plane and compute node Machine Config Pools (MCPs), all six compute nodes update one after another, one node per iteration:

Cluster update time = 60 + (6 x 5) = 90 minutes

Scenario-2

When you set maxUnavailable to 2 for the compute node MCP, two compute nodes update in parallel in each iteration. Therefore, it takes a total of three iterations to update all the nodes.

Cluster update time = 60 + (3 x 5) = 75 minutes

The default setting for maxUnavailable is 1 for all the MCPs in OpenShift Container Platform. It is recommended that you do not change the maxUnavailable in the control plane MCP.
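The estimation convention and the two scenarios above can be reproduced with a short calculation. This is a minimal sketch: the function and parameter names are hypothetical, and it assumes, as in the scenarios, that only compute node iterations are counted because the control plane nodes update in parallel with them.

```python
import math


def estimate_update_minutes(cvo_minutes, compute_nodes, max_unavailable,
                            node_minutes):
    """Estimate total cluster update time in minutes.

    cvo_minutes:     CVO target update payload deployment time
    compute_nodes:   number of compute nodes in the pool
    max_unavailable: maxUnavailable value of the compute node MCP
    node_minutes:    MCO update time for one node update iteration
    """
    # Each iteration updates up to max_unavailable nodes in parallel.
    iterations = math.ceil(compute_nodes / max_unavailable)
    return cvo_minutes + iterations * node_minutes


print(estimate_update_minutes(60, 6, 1, 5))  # 90 (Scenario-1)
print(estimate_update_minutes(60, 6, 2, 5))  # 75 (Scenario-2)
```

Rounding up with `math.ceil` accounts for a final, partially filled iteration when the number of compute nodes is not a multiple of maxUnavailable.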

1.4.4. Red Hat Enterprise Linux (RHEL) compute nodes

Red Hat Enterprise Linux (RHEL) compute nodes require an additional usage of openshift-ansible to update node binary components. The actual time spent updating RHEL compute nodes should not be significantly different from Red Hat Enterprise Linux CoreOS (RHCOS) compute nodes.