3.2. Telco RAN DU reference specifications

3.2.1. Telco RAN DU 4.17 reference design overview

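The Telco RAN distributed unit (DU) 4.17 reference design configures an OpenShift Container Platform 4.17 cluster running on commodity hardware to host Telco RAN DU workloads. It captures the recommended, tested, and supported configurations that give reliable and repeatable performance for a cluster running the telco RAN DU profile.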

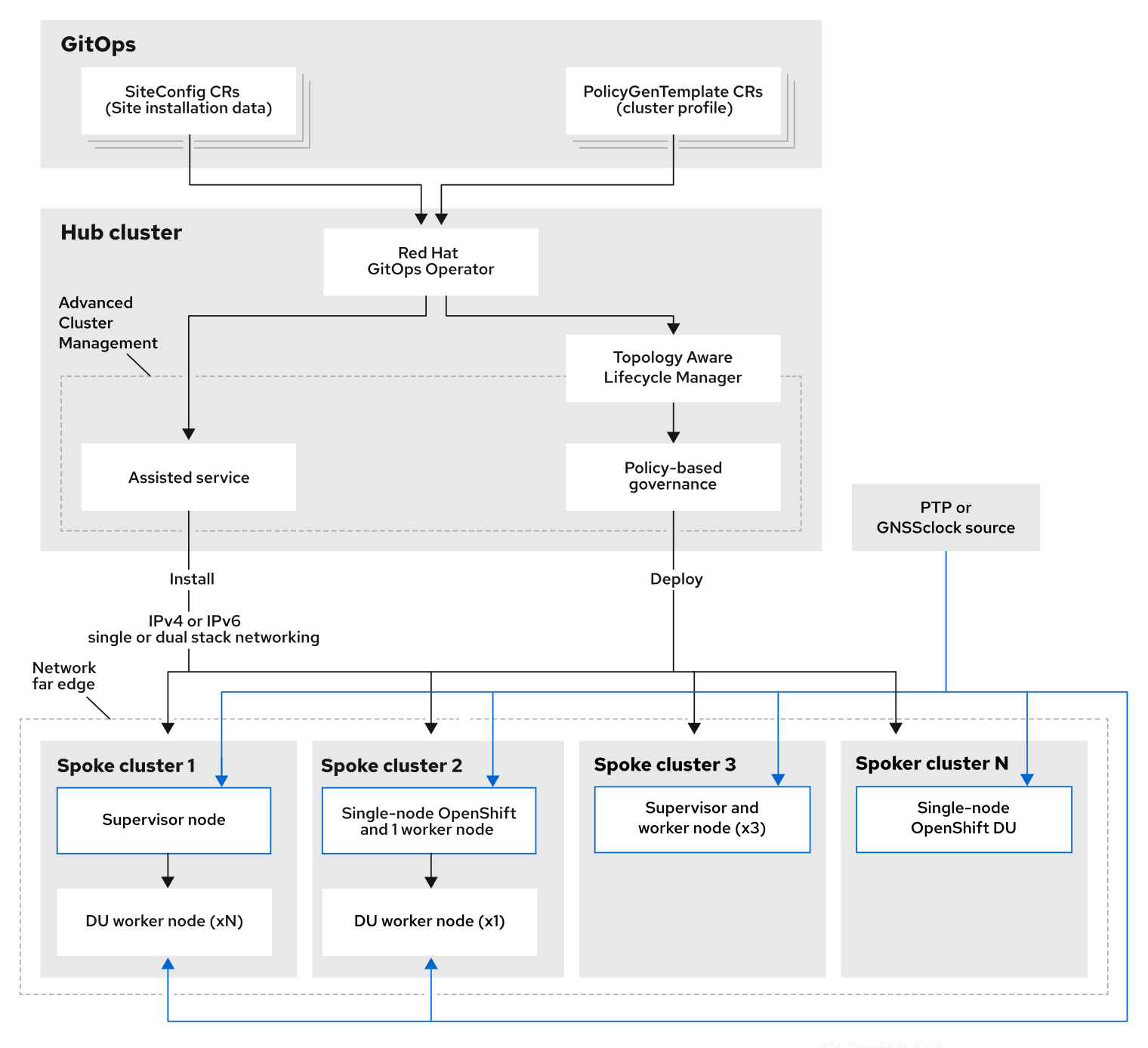

3.2.1.1. Deployment architecture overview

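You deploy the Telco RAN DU 4.17 reference configuration to managed clusters from a centrally managed RHACM hub cluster. The reference design specifications (RDS) include the configuration of the managed clusters and of the hub cluster components.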

Figure 3.1. Telco RAN DU deployment architecture overview

3.2.2. Telco RAN DU use model overview

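Use the following information to plan telco RAN DU workloads, cluster resources, and hardware specifications for the hub cluster and the managed single-node OpenShift clusters.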

3.2.2.1. Telco RAN DU application workloads

DU worker nodes must have 3rd Generation Xeon (Ice Lake) 2.20 GHz or better CPUs, with firmware tuned for maximum performance.

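5G RAN DU user applications and workloads should conform to the following best practices and application limits:

- Develop cloud-native network functions (CNFs) that conform to the latest version of the Red Hat best practices for Kubernetes.

- Use SR-IOV for high performance networking.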

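- Use exec probes sparingly and only when no other suitable options are available:

  - Do not use exec probes if a CNF uses CPU pinning. Use other probe implementations, for example, `httpGet` or `tcpSocket`.

  - When you need to use exec probes, limit their frequency and quantity. Keep the number of exec probes below 10, and do not set the probe period to less than 10 seconds.

  - Avoid exec probes unless there is absolutely no viable alternative.

Note: Startup probes require minimal resources during steady-state operation. The limits on exec probes apply primarily to liveness and readiness probes.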
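The following minimal Pod sketch illustrates the probe guidance above. It prefers `httpGet` and `tcpSocket` probes and uses an exec probe only as a last resort, with `periodSeconds` kept at 10 seconds. The pod name, images, port 8080, the `/healthz` path, and the exec command are hypothetical placeholders and are not part of the reference configuration.

```yaml
# Hypothetical CNF pod illustrating the probe guidance (not a reference CR)
apiVersion: v1
kind: Pod
metadata:
  name: example-du-cnf                                    # hypothetical name
spec:
  containers:
    - name: app
      image: registry.example.com/example/du-app:latest   # hypothetical image
      ports:
        - containerPort: 8080
      livenessProbe:
        httpGet:                    # preferred over exec for CPU-pinned CNFs
          path: /healthz            # hypothetical health endpoint
          port: 8080
        periodSeconds: 10
      readinessProbe:
        tcpSocket:
          port: 8080
        periodSeconds: 10
    - name: helper
      image: registry.example.com/example/du-helper:latest  # hypothetical image
      # Exec probe only when no httpGet/tcpSocket alternative exists,
      # and only in a container that does not use pinned CPUs
      readinessProbe:
        exec:
          command: ["/bin/sh", "-c", "test -f /tmp/ready"]
        periodSeconds: 10           # must not be less than 10 seconds
```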

Test workloads that match the dimensions of the reference DU application workload are available in openshift-kni/du-test-workloads.

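3.2.2.2. Telco RAN DU representative reference application workload characteristics

The representative reference application workload has the following characteristics: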

- Has up to 15 pods and 30 containers for the vRAN application, including its management and control functions

- Uses a maximum of 2 `ConfigMap` CRs and 4 `Secret` CRs per pod

- Uses a maximum of 10 exec probes, with a period of not less than 10 seconds

- Incremental application load on the `kube-apiserver` is less than 10% of the cluster platform usage

  Note: You can extract the CPU load from the platform metrics. For example:

  `query=avg_over_time(pod:container_cpu_usage:sum{namespace="openshift-kube-apiserver"}[30m])`

- Application logs are not collected by the platform log collector

- Aggregate traffic on the primary CNI is less than 1 MBps

3.2.2.3. Telco RAN DU worker node cluster resource utilization

The maximum number of pods running on the system, including application workloads and OpenShift Container Platform pods, is 120.

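- Resource utilization

OpenShift Container Platform resource utilization varies depending on many factors, including application workload characteristics such as:

- Pod count

- Type and frequency of probes

- Messaging rates on the primary CNI or secondary CNI with kernel networking

- API access rate

- Logging rates

- Storage IOPS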

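Cluster resource requirements apply under the following conditions:

- The cluster is running the described representative application workload.

- The cluster is managed with the constraints described in "Hub cluster management characteristics".

- Components noted as optional in the RAN DU use model configuration are not applied.

For configurations outside the scope of the Telco RAN DU reference design, you need additional analysis to determine the impact on resource utilization and on the ability to meet KPI targets. Depending on your requirements, you might have to allocate additional resources in the cluster.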

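3.2.2.4. Hub cluster management characteristics

Red Hat Advanced Cluster Management (RHACM) is the recommended cluster management solution. Configure it on the hub cluster within the following limits:

- Configure a maximum of 5 RHACM policies with a compliant evaluation interval of at least 10 minutes.

- Use a maximum of 10 managed cluster templates in policies. Where possible, use hub-side templating.

- Disable all RHACM add-ons except the `policy-controller` and `observability-controller` add-ons. Set `Observability` to the default configuration.

Important: Configuring optional components or enabling additional features results in additional resource usage and can reduce overall system performance.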

For more information, see "Reference design deployment components".
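The sketch below shows one way to express the 10-minute compliant evaluation interval in an RHACM `Policy` that wraps a `ConfigurationPolicy`. It is a minimal example under stated assumptions. The policy name and namespace, the `noncompliant: 10s` value, and the wrapped `ConfigMap` are hypothetical. The `Placement` and `PlacementBinding` resources that bind the policy to clusters are omitted.

```yaml
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: example-du-common           # hypothetical
  namespace: ztp-common             # hypothetical policy namespace
spec:
  disabled: false
  remediationAction: inform
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: example-du-common-config
        spec:
          remediationAction: inform
          severity: low
          evaluationInterval:
            compliant: 10m          # re-check compliant clusters no more than every 10 minutes
            noncompliant: 10s       # assumed value for illustration
          object-templates:
            - complianceType: musthave
              objectDefinition:     # hypothetical object enforced by the policy
                apiVersion: v1
                kind: ConfigMap
                metadata:
                  name: example-du-settings
                  namespace: default
                data:
                  example-key: example-value
```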

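| Metric | Limit | Notes |
|---|---|---|
| CPU usage | Less than 4000 mc (2 cores, 4 hyperthreads) | Platform CPU is pinned to reserved cores, including both hyperthreads in each reserved core. The system is engineered to use 3 CPUs (3000mc) at steady state to allow for periodic system tasks and spikes. |
| Memory used | Less than 16G | |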

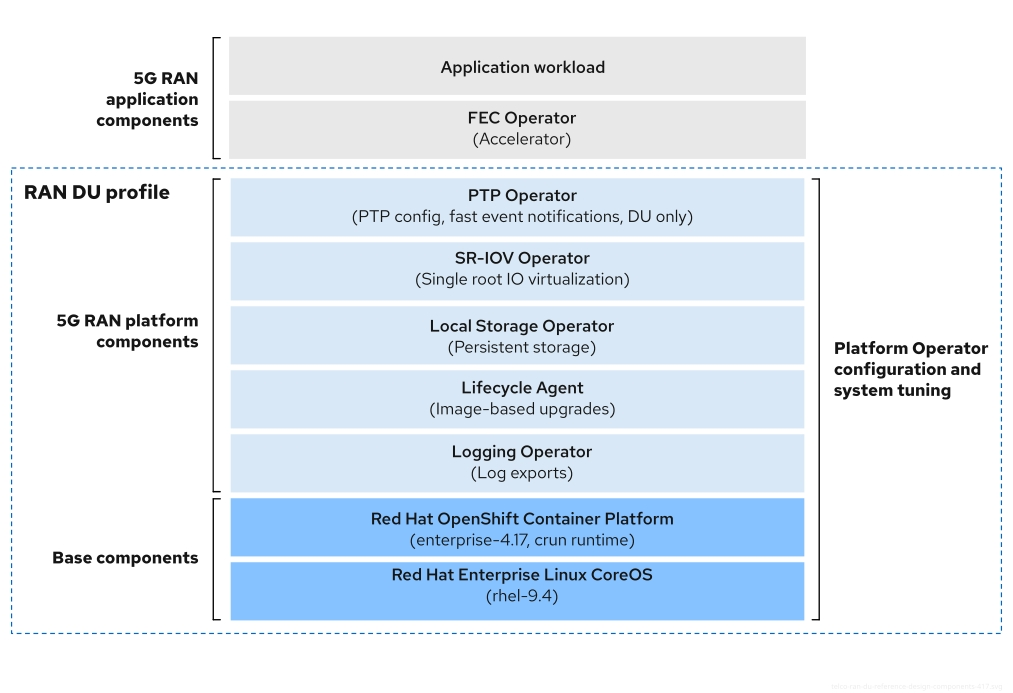

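3.2.2.5. Telco RAN DU RDS components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure and deploy clusters to run telco RAN DU workloads.

Figure 3.2. Telco RAN DU reference components

Ensure that components that are not included in the telco RAN DU profile do not affect the CPU resources allocated to workload applications.

Out-of-tree drivers are not supported.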

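3.2.3. Telco RAN DU 4.17 reference components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure and deploy clusters to run RAN DU workloads.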

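3.2.3.1. Host firmware tuning

- New in this release

- You can now configure host firmware settings for managed clusters that you deploy with GitOps ZTP.

- Description

- Tune host firmware settings for optimal performance during initial cluster deployment. The managed cluster host firmware settings are available on the hub cluster as `BareMetalHost` custom resources (CRs), which are created when you deploy the managed cluster with the `SiteConfig` CR and GitOps ZTP.

- Limits and requirements

- Hyperthreading must be enabled

- Engineering considerations

- Tune all settings for maximum performance.

- All settings are expected to target maximum performance unless tuned for power savings.

- If required, you can tune the host firmware for power savings at the expense of performance.

- Enable secure boot. With secure boot enabled, the kernel loads only signed kernel modules. Out-of-tree drivers are not supported.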

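3.2.3.2. Node Tuning Operator

- New in this release

- No reference design updates in this release

- Description

You tune cluster performance by creating a performance profile.

Important: The RAN DU use case requires the cluster to be tuned for low-latency performance.

- Limits and requirements

The Node Tuning Operator uses the `PerformanceProfile` CR to configure the cluster. You need to configure the following settings in the RAN DU profile `PerformanceProfile` CR:

- Select reserved and isolated cores, and ensure that you allocate at least 4 hyperthreads (equivalent to 2 cores) on Intel 3rd Generation Xeon (Ice Lake) 2.20 GHz CPUs.

- Set the reserved `cpuset` to include the hyperthread siblings of each included core. Unreserved cores are available as allocatable CPU for scheduling workloads. Ensure that hyperthread siblings are not split across reserved and isolated cores.

- Configure the reserved and isolated CPUs so that, between them, they include all threads in all cores.

- Include core 0 of each NUMA node in the reserved CPU set.

- Set the huge page size to 1G.

Do not add additional workloads to the management partition. Only pods that are part of the OpenShift management platform should be annotated into the management partition.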

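- Engineering considerations

- Use the RT kernel to meet performance requirements. If required, you can use the non-RT kernel instead, with a corresponding impact on cluster performance.

- The number of huge pages that you configure depends on the application workload requirements. Variation in this parameter is expected and allowed.

- Variation is expected in the configuration of the reserved and isolated CPU sets, depending on the selected hardware and the additional components in use on the system. The variation must still meet the specified limits.

- Hardware without IRQ affinity support affects isolated CPUs. All hardware in the server must support IRQ affinity so that pods with guaranteed whole-CPU QoS get full use of their allocated CPUs. For more information, see "Finding the effective IRQ affinity setting for a node".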

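When you enable workload partitioning during cluster deployment with the `cpuPartitioningMode: AllNodes` setting, the reserved CPU set in the `PerformanceProfile` CR must include enough CPUs for the operating system, interrupts, and OpenShift platform pods.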
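A minimal `PerformanceProfile` that follows the limits above might look like the sketch below. It assumes a hypothetical single-socket, 32-core server on which the hyperthread sibling of CPU N is CPU N+32. Under that assumption, reserving cores 0 and 1 together with their siblings gives the required 4 hyperthreads. Check the actual sibling layout on your hardware, for example with `lscpu -e`, before you reuse these CPU ranges. The huge page count is also a placeholder. The `PerformanceProfile.yaml` reference CR remains the authoritative starting point.

```yaml
apiVersion: performance.openshift.io/v2
kind: PerformanceProfile
metadata:
  name: openshift-node-performance-profile
spec:
  cpu:
    # Assumed topology: 1 socket, 32 cores, sibling of CPU N is CPU N+32.
    # Cores 0 and 1 (core 0 of NUMA node 0 included) plus siblings = 4 hyperthreads.
    reserved: "0-1,32-33"
    isolated: "2-31,34-63"     # all remaining threads; no sibling split across sets
  hugepages:
    defaultHugepagesSize: 1G
    pages:
      - size: 1G
        count: 32              # placeholder; depends on the application workload
        node: 0
  machineConfigPoolSelector:
    pools.operator.machineconfiguration.openshift.io/master: ""
  nodeSelector:
    node-role.kubernetes.io/master: ""
  numa:
    topologyPolicy: restricted
  realTimeKernel:
    enabled: true              # RT kernel recommended for the RAN DU profile
  workloadHints:
    realTime: true
    highPowerConsumption: false
    perPodPowerManagement: false
```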

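cgroup v1 is a deprecated feature. Deprecated functionality is still included in OpenShift Container Platform and continues to be supported. However, it will be removed in a future release and is not recommended for new deployments.

For the most recent list of major functionality that has been deprecated or removed in OpenShift Container Platform, see the Deprecated and removed features section of the OpenShift Container Platform release notes.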

3.2.3.3. PTP Operator

- New in this release

- A new version of the Precision Time Protocol (PTP) fast event REST API is available. Consumer applications can now subscribe directly to the events REST API in the PTP events producer sidecar. The PTP fast event REST API v2 is compliant with the O-RAN O-Cloud Notification API Specification for Event Consumers 3.0. You can change the API version by setting the `ptpEventConfig.apiVersion` field in the `PtpOperatorConfig` resource.

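- Description

For details of support and configuration of PTP in cluster nodes, see "Single-node OpenShift cluster configuration for vDU application workloads". The DU node can run in the following modes:

- As an ordinary clock (OC) synced to a grandmaster clock or boundary clock (T-BC)

- As a grandmaster clock (T-GM) synced from GPS, with support for single or dual card E810 NICs

- As dual boundary clocks (one per NIC), with support for E810 NICs

- As a T-BC with a highly available (HA) system clock when there are multiple time sources on different NICs

- Optional: as a boundary clock for radio units (RUs)

- Limits and requirements

- Limited to two boundary clocks for dual NIC and HA.

- Limited to two-card E810 configurations for T-GM.

- Engineering considerations

- Configurations are provided for ordinary clock, boundary clock, boundary clock with highly available system clock, and grandmaster clock.

- PTP fast event notifications use `ConfigMap` CRs to store PTP event subscriptions.

- The PTP events REST API v2 does not have a global subscription for all lower hierarchy resources contained in the resource path. You subscribe consumer applications to the various available event types separately.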
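The `PtpOperatorConfig` sketch below shows how to switch the event API version. It enables the event publisher and sets `ptpEventConfig.apiVersion`. The `"2.0"` value and the node selector are assumptions for illustration. Check the `PtpOperatorConfigForEvent.yaml` reference CR for the exact values used in this release.

```yaml
apiVersion: ptp.openshift.io/v1
kind: PtpOperatorConfig
metadata:
  name: default
  namespace: openshift-ptp
spec:
  daemonNodeSelector:
    node-role.kubernetes.io/master: ""   # single-node OpenShift: the node has the master role
  ptpEventConfig:
    enableEventPublisher: true
    apiVersion: "2.0"                    # assumed value selecting the REST API v2
```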

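3.2.3.4. SR-IOV Operator

- New in this release

- No reference design updates in this release

- Description

- The SR-IOV Operator provisions and configures the SR-IOV CNI and device plugins. Both `netdevice` (kernel VFs) and `vfio` (DPDK) devices are supported and applicable to the RAN use models.

- Limits and requirements

- Use devices that OpenShift Container Platform supports

- SR-IOV and IOMMU enablement in BIOS: the SR-IOV Network Operator automatically enables IOMMU on the kernel command line.

- SR-IOV VFs do not receive link state updates from the PF. If you need link down detection, you must configure it at the protocol level.

NICs that do not support firmware updates under secure boot or kernel lockdown must be preconfigured with enough virtual functions (VFs) to support the number of VFs that the application workload requires.

Note: You might need to disable the SR-IOV Operator plugin for unsupported NICs by using the undocumented `disablePlugins` option.

- Engineering considerations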

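- SR-IOV interfaces with the `vfio` driver type are typically used to enable additional secondary networks for applications that require high throughput or low latency.

- Customer variation in the configuration and number of `SriovNetwork` and `SriovNetworkNodePolicy` custom resources (CRs) is expected.

- IOMMU kernel command-line settings are applied with a `MachineConfig` CR at install time. This ensures that the `SriovOperator` CR does not reboot the node when nodes are added.

- SR-IOV support for draining nodes in parallel does not apply to single-node OpenShift clusters.

- If you exclude the `SriovOperatorConfig` CR from your deployment, the CR is not created automatically.

- If you pin workloads to specific nodes, the SR-IOV parallel node drain feature does not reschedule the pods. In these scenarios, the SR-IOV Operator disables the parallel node drain functionality.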
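The sketch below shows how a `vfio-pci` (DPDK) node policy, the matching `SriovNetwork`, and the `disablePlugins` option described above fit together. The policy and network names, the resource name, the PF name `ens1f0`, the VF count, the VLAN, and the target namespace are hypothetical. Include `disablePlugins` only if your NIC actually needs the plugin disabled.

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: example-dpdk-policy                # hypothetical
  namespace: openshift-sriov-network-operator
spec:
  resourceName: example_dpdk               # hypothetical resource name
  deviceType: vfio-pci                     # DPDK; use netdevice for kernel VFs
  nicSelector:
    pfNames: ["ens1f0"]                    # hypothetical PF
  numVfs: 8                                # hypothetical; size to the workload
  priority: 10
  nodeSelector:
    node-role.kubernetes.io/master: ""
---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: example-dpdk-net                   # hypothetical
  namespace: openshift-sriov-network-operator
spec:
  resourceName: example_dpdk               # must match the policy above
  networkNamespace: example-du-app         # hypothetical workload namespace
  vlan: 100                                # hypothetical VLAN
  ipam: '{}'
---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovOperatorConfig
metadata:
  name: default
  namespace: openshift-sriov-network-operator
spec:
  configDaemonNodeSelector:
    node-role.kubernetes.io/master: ""
  disablePlugins:
    - mellanox                             # only for NICs the plugin does not support
```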

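3.2.3.5. Logging

- New in this release

- Update your existing implementation to the new API of Cluster Logging Operator 6.0. You must remove the old Operator artifacts by using policies. For more information, see "Additional resources".

- Description

- Use logging to collect logs from the far edge node for remote analysis. The recommended log collector is Vector.

- Engineering considerations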

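- Handling logs beyond the infrastructure and audit logs, for example logs from the application workload, requires additional CPU and network bandwidth in proportion to the additional logging rate.

As of OpenShift Container Platform 4.14, Vector is the reference log collector.

Note: Use of fluentd in the RAN use models is deprecated.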
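The sketch below shows a minimal Cluster Logging 6.0 `ClusterLogForwarder` that forwards only infrastructure and audit logs, since application logs add CPU and bandwidth cost. It assumes a `collector` service account that is bound to the audit and infrastructure collection roles (compare `ClusterLogServiceAccount*.yaml`) and a hypothetical Kafka endpoint. The output type and URL are placeholders, not the reference values.

```yaml
apiVersion: observability.openshift.io/v1
kind: ClusterLogForwarder
metadata:
  name: instance
  namespace: openshift-logging
spec:
  serviceAccount:
    name: collector                  # assumed service account with collect roles bound
  outputs:
    - name: example-kafka            # hypothetical output
      type: kafka
      kafka:
        url: tcp://kafka.example.com:9092/example-topic   # hypothetical endpoint
  pipelines:
    - name: infra-and-audit
      inputRefs:                     # application logs deliberately not collected
        - infrastructure
        - audit
      outputRefs:
        - example-kafka
```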

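3.2.3.6. SRIOV-FEC Operator

- New in this release

- No reference design updates in this release

- Description

- The SRIOV-FEC Operator is an optional third-party Certified Operator that supports FEC accelerator hardware.

- Limits and requirements

Starting with FEC Operator v2.7.0:

- `SecureBoot` is supported

- The `vfio` driver for the `PF` requires a `vfio-token` that is injected into pods. Applications in the pod can pass the `VF` token to DPDK by using the EAL parameter `--vfio-vf-token`.

- Engineering considerations

- The SRIOV-FEC Operator uses CPU cores from the `isolated` CPU set.

- You can validate FEC readiness as part of the pre-checks for application deployment, for example, by extending the validation policy.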

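3.2.3.7. Lifecycle Agent

- New in this release

- No reference design updates in this release

- Description

- The Lifecycle Agent provides local lifecycle management services for single-node OpenShift clusters.

- Limits and requirements

- The Lifecycle Agent does not apply to multi-node clusters or to single-node OpenShift clusters with an additional worker.

- Requires a persistent volume that you create when you install the cluster. For partition requirements, see "Configuring a shared container directory between ostree stateroots when using GitOps ZTP".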
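For orientation, you drive the Lifecycle Agent through an `ImageBasedUpgrade` CR. The sketch below shows the CR in its `Idle` stage. The seed image reference and target version are hypothetical placeholders. The `ImageBasedUpgrade.yaml` reference CR remains authoritative for the full set of fields.

```yaml
apiVersion: lca.openshift.io/v1
kind: ImageBasedUpgrade
metadata:
  name: upgrade                  # the Lifecycle Agent works with a single CR named "upgrade"
spec:
  stage: Idle                    # later stages (Prep, Upgrade, Rollback) drive the upgrade
  seedImageRef:
    version: "4.17.1"            # hypothetical target version
    image: registry.example.com/example/seed-image:4.17.1   # hypothetical seed image
```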

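3.2.3.8. Local Storage Operator

- New in this release

- No reference design updates in this release

- Description

- With the Local Storage Operator, you can create persistent volumes that applications can use as `PVC` resources. The number and type of `PV` resources that you create depends on your requirements.

- Engineering considerations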

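- Create backing storage for `PV` CRs before you create the `PV`. The backing storage can be a partition, a local volume, an LVM volume, or a full disk.

- In `LocalVolume` CRs, list devices by the hardware path used to access each device, so that disks and partitions are allocated correctly. Logical names (for example, `/dev/sda`) are not guaranteed to stay consistent across node reboots. For more information, see the RHEL 9 documentation about device identifiers.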
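The sketch below shows a `LocalVolume` CR that refers to its backing device by a stable `/dev/disk/by-path/` hardware path instead of a logical name such as `/dev/sda`. The PCI path and the storage class name are hypothetical. List the stable paths on your node, for example with `ls -l /dev/disk/by-path/`, before you fill them in.

```yaml
apiVersion: local.storage.openshift.io/v1
kind: LocalVolume
metadata:
  name: local-disks
  namespace: openshift-local-storage
spec:
  logLevel: Normal
  managementState: Managed
  storageClassDevices:
    - storageClassName: example-storage-class-1   # hypothetical
      volumeMode: Filesystem
      fsType: xfs
      devicePaths:
        # Stable hardware path (hypothetical PCI address), not /dev/sdX
        - /dev/disk/by-path/pci-0000:88:00.0-nvme-1
```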

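3.2.3.9. LVM Storage

- New in this release

- No reference design updates in this release

Logical Volume Manager (LVM) Storage is an optional component.

When you use LVM Storage as the storage solution, it replaces the Local Storage Operator, and its CPU resources are assigned to the management partition as platform overhead. The reference configuration must include one of these storage solutions, but not both.

- Description

- LVM Storage provides dynamic provisioning of block and file storage. LVM Storage creates logical volumes from local devices, and applications can use these volumes as `PVC` resources. Volume expansion and snapshots are also possible.

- Limits and requirements

- In single-node OpenShift clusters, persistent storage must be provided by either LVM Storage or local storage, not both.

- Volume snapshots are excluded from the reference configuration.

- Engineering considerations

- LVM Storage can be used as the local storage implementation for the RAN DU use case. When LVM Storage is the storage solution, it replaces the Local Storage Operator, and the CPU it requires is assigned to the management partition as platform overhead. The reference configuration must include one of these storage solutions, but not both.

- Ensure that enough disks or partitions are available to meet the storage requirements.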
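The sketch below is a minimal `LVMCluster` with a single device class. The device path, volume group name, and thin pool sizing are assumptions chosen for illustration. They are not taken from `StorageLVMCluster.yaml`.

```yaml
apiVersion: lvm.topolvm.io/v1alpha1
kind: LVMCluster
metadata:
  name: lvmcluster
  namespace: openshift-storage
spec:
  storage:
    deviceClasses:
      - name: vg1                      # hypothetical volume group name
        default: true
        deviceSelector:
          paths:
            - /dev/disk/by-path/pci-0000:88:00.0-nvme-1   # hypothetical stable path
        thinPoolConfig:
          name: thin-pool-1
          sizePercent: 90              # assumed sizing
          overprovisionRatio: 10       # assumed sizing
```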

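3.2.3.10. Workload partitioning

- New in this release

- No reference design updates in this release

- Description

- Workload partitioning pins the OpenShift platform pods and Day 2 Operator pods that are part of the DU profile to the reserved CPU set, and removes the reserved CPUs from node accounting. This leaves all unreserved CPU cores available for user workloads.

- Limits and requirements

- `Namespace` and `Pod` CRs must be annotated to allow the pod to be applied to the management partition.

- Pods with CPU limits cannot be allocated to the partition, because the mutation can change the pod QoS.

- For more information about the minimum number of CPUs that can be allocated to the management partition, see "Node Tuning Operator".

- Engineering considerations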

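- Workload partitioning pins all management pods to reserved cores. Allocate enough cores to the reserved set to cover the operating system, the management pods, and the expected spikes in CPU use that occur when the workload starts, the node reboots, or other system events happen.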
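The sketch below illustrates the annotation requirement. It shows a `Namespace` that allows management workloads and a `Pod` that requests placement in the management partition. The namespace, pod name, and image are hypothetical, and the cluster is assumed to have been installed with `cpuPartitioningMode: AllNodes`. The pod sets CPU requests but no CPU limit, because pods with CPU limits cannot be placed in the partition.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: example-mgmt-ns                  # hypothetical
  annotations:
    workload.openshift.io/allowed: management
---
apiVersion: v1
kind: Pod
metadata:
  name: example-mgmt-pod                 # hypothetical
  namespace: example-mgmt-ns
  annotations:
    target.workload.openshift.io/management: '{"effect": "PreferredDuringScheduling"}'
spec:
  containers:
    - name: agent
      image: registry.example.com/example/agent:latest   # hypothetical
      resources:
        requests:
          cpu: 20m
          memory: 64Mi
        # No CPU limit: pods with CPU limits cannot be placed in the management partition
```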

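3.2.3.11. Cluster tuning

- New in this release

- No reference design updates in this release

- Description

- For the full list of optional components that you can enable or disable before installation, see "Cluster capabilities".

- Limits and requirements

- Cluster capabilities are not available for installer-provisioned installation methods.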
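The `install-config.yaml` fragment below sketches how to disable optional cluster capabilities while keeping the Marketplace and Node Tuning Operators. The capability list is an assumption. Capabilities can depend on each other, and here `OperatorLifecycleManager` is assumed to be required by `marketplace`. Confirm the exact set against the "Cluster capabilities" documentation and the `example-sno.yaml` reference CR for your release.

```yaml
# install-config.yaml fragment (not a complete file)
capabilities:
  baselineCapabilitySet: None          # start from no optional capabilities
  additionalEnabledCapabilities:
    - marketplace
    - NodeTuning
    - OperatorLifecycleManager         # assumed dependency of marketplace
```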

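You must apply all platform tuning configurations. The following table lists the required platform tuning configurations:

Table 3.2. Cluster capabilities configurations

| Feature | Description |
|---|---|
| Remove optional cluster capabilities | Reduce the OpenShift Container Platform footprint by disabling optional cluster Operators on single-node OpenShift clusters. Remove all optional Operators except the Marketplace and Node Tuning Operators. |
| Configure cluster monitoring | Configure the monitoring stack for a reduced footprint as follows: disable the local `alertmanager` and `telemeter` components; if you use RHACM observability, augment the CR with the appropriate `additionalAlertManagerConfigs` CRs to forward alerts to the hub cluster; reduce the `Prometheus` retention period to 24h. Note: The RHACM hub cluster aggregates managed cluster metrics. |
| Disable networking diagnostics | Disable networking diagnostics for single-node OpenShift, because they are not required. |
| Configure a single OperatorHub catalog source | Configure the cluster to use a single catalog source that contains only the Operators required for a RAN DU deployment. Each catalog source increases CPU use on the cluster, so a single `CatalogSource` keeps usage within the platform CPU budget. |
| Disable the Console Operator | If the cluster was deployed with the console disabled, the `Console` CR (`ConsoleOperatorDisable.yaml`) is not needed. If the cluster was deployed with the console enabled, you must apply the `Console` CR. |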
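The sketch below expresses the platform tuning items in the table as CRs: a reduced monitoring footprint, disabled network diagnostics, a single catalog source with the default sources disabled, and a removed console. The catalog image reference is a hypothetical mirror. The remaining values follow the table, but the reference CRs (`ReduceMonitoringFootprint.yaml`, `DisableSnoNetworkDiag.yaml`, `OperatorHub.yaml`, `DefaultCatsrc.yaml`, `ConsoleOperatorDisable.yaml`) are authoritative.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    alertmanagerMain:
      enabled: false          # disable the local alertmanager
    telemeterClient:
      enabled: false          # disable telemeter
    prometheusK8s:
      retention: 24h          # reduced retention
---
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  disableNetworkDiagnostics: true
---
apiVersion: config.openshift.io/v1
kind: OperatorHub
metadata:
  name: cluster
spec:
  disableAllDefaultSources: true   # keep only the single custom catalog below
---
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: default-cat-source
  namespace: openshift-marketplace
spec:
  displayName: default-cat-source
  image: registry.example.com:5000/example/du-operators-index:v4.17   # hypothetical mirror
  publisher: Red Hat
  sourceType: grpc
---
apiVersion: operator.openshift.io/v1
kind: Console
metadata:
  name: cluster
spec:
  managementState: Removed         # only needed if the console was enabled at install
```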

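- Engineering considerations

In OpenShift Container Platform 4.16 and later, clusters do not automatically revert to cgroup v1 when a `PerformanceProfile` CR is applied. If workloads running on the cluster require cgroup v1, you need to configure the cluster to use cgroup v1.

Note: If you need to configure cgroup v1, make the configuration part of the initial cluster deployment.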
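If a workload does require cgroup v1, one way to express it is through the cluster-scoped `nodes.config.openshift.io` resource, as sketched below. Treat this as a minimal example only, and apply it as part of the initial deployment, as noted above.

```yaml
apiVersion: config.openshift.io/v1
kind: Node
metadata:
  name: cluster
spec:
  cgroupMode: "v1"     # deprecated; use only if the workload requires cgroup v1
```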

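3.2.3.12. Machine configuration

- New in this release

- No reference design updates in this release

- Limits and requirements

The CRI-O wipe disable `MachineConfig` assumes that images on disk are static, except during scheduled maintenance in defined maintenance windows. To ensure that the images are static, do not set the pod `imagePullPolicy` field to `Always`.

Table 3.3. Machine configuration options

| Feature | Description |
|---|---|
| Container runtime | Sets the container runtime to `crun` for all node roles. |
| kubelet config and container mount hiding | Reduces the frequency of kubelet housekeeping and eviction monitoring to reduce CPU usage. Creates a container mount namespace, visible to kubelet and CRI-O, to reduce the resource usage of system mount scanning. |
| SCTP | Optional configuration (enabled by default). Enables SCTP. RAN applications require SCTP, but it is disabled by default in RHCOS. |
| kdump | Optional configuration (enabled by default). Enables kdump to capture debug information when a kernel panic occurs. Note: The reference CRs that enable kdump increase the memory reservation based on the set of drivers and kernel modules included in the reference configuration. |
| CRI-O wipe disable | Disables automatic wiping of the CRI-O image cache after an unclean shutdown. |
| SR-IOV-related kernel arguments | Includes additional SR-IOV-related arguments in the kernel command line. |
| RCU Normal systemd service | Sets `rcu_normal` after the system is fully started. |
| One-shot time sync | Runs a one-time NTP system time synchronization job for control plane or worker nodes. |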
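Two of the machine configuration options in the table are sketched below: a `ContainerRuntimeConfig` that selects `crun` for the master pool, and a `MachineConfig` that loads the SCTP module. The resource names and the Ignition spec version `3.2.0` are assumptions for illustration. Use `enable-crun-master.yaml` and `03-sctp-machine-config-master.yaml` as the authoritative versions, and create matching worker variants where needed.

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: ContainerRuntimeConfig
metadata:
  name: enable-crun-master
spec:
  machineConfigPoolSelector:
    matchLabels:
      pools.operator.machineconfiguration.openshift.io/master: ""
  containerRuntimeConfig:
    defaultRuntime: crun
---
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfig
metadata:
  name: load-sctp-module-master          # hypothetical name
  labels:
    machineconfiguration.openshift.io/role: master
spec:
  config:
    ignition:
      version: 3.2.0                     # assumed Ignition spec version
    storage:
      files:
        # Overwrite the RHCOS sctp blacklist file with empty content
        - path: /etc/modprobe.d/sctp-blacklist.conf
          mode: 420
          overwrite: true
          contents:
            source: data:,
        # Load the sctp module at boot
        - path: /etc/modules-load.d/sctp-load.conf
          mode: 420
          overwrite: true
          contents:
            source: data:,sctp
```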

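3.2.3.13. Telco RAN DU deployment components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure the hub cluster with Red Hat Advanced Cluster Management (RHACM).

3.2.3.13.1. Red Hat Advanced Cluster Management

- New in this release

- No reference design updates in this release

- Description

Red Hat Advanced Cluster Management (RHACM) provides Multi Cluster Engine (MCE) installation and ongoing lifecycle management functionality for deployed clusters. You manage cluster configuration and upgrades declaratively by applying `Policy` custom resources (CRs) to clusters during maintenance windows. You apply policies with the RHACM policy controller, as managed by Topology Aware Lifecycle Manager (TALM). The policy controller handles configuration, upgrades, and cluster status.

When you install managed clusters, RHACM applies labels and an initial ignition configuration to individual nodes to support custom disk partitioning, role allocation, and allocation to machine config pools. You define these configurations with `SiteConfig` or `ClusterInstance` CRs.

- Limits and requirements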

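- 300 `SiteConfig` CRs per ArgoCD application. You can use multiple applications to reach the maximum number of clusters that a single hub cluster supports.

- A single hub cluster supports up to 3500 deployed single-node OpenShift clusters, with 5 `Policy` CRs bound to each cluster.

- Engineering considerations

- Use RHACM policy hub-side templating to scale cluster configuration better. You can significantly reduce the number of policies by using a single group policy, or a small number of general group policies, in which the group and per-cluster values are substituted into templates.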

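- Cluster-specific configuration: managed clusters typically have some configuration values that are specific to the individual cluster. Manage these configurations with RHACM policy hub-side templating, with values pulled from `ConfigMap` CRs based on the cluster name.

- To save CPU resources on managed clusters, unbind policies that apply static configurations from the managed clusters after GitOps ZTP installation of the cluster.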
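The sketch below illustrates hub-side templating for per-cluster values. A `ConfigMap` on the hub cluster holds values keyed by cluster name, and an object inside a group policy pulls them with a `{{hub ... hub}}` template. The ConfigMap name, namespace, keys, and CPU ranges are hypothetical. The second document is shown as it would appear inside a policy's object templates, not as a CR to apply directly.

```yaml
# Hub cluster: per-cluster values, in the same namespace as the group policy
apiVersion: v1
kind: ConfigMap
metadata:
  name: site-data                 # hypothetical
  namespace: ztp-group            # hypothetical policy namespace
data:
  example-sno-1-cpu-isolated: "2-31,34-63"
  example-sno-1-cpu-reserved: "0-1,32-33"
---
# Object as embedded in the group policy; the hub resolves the templates
# for each managed cluster ("" = the policy's own namespace)
apiVersion: performance.openshift.io/v2
kind: PerformanceProfile
metadata:
  name: openshift-node-performance-profile
spec:
  cpu:
    isolated: '{{hub fromConfigMap "" "site-data" (printf "%s-cpu-isolated" .ManagedClusterName) hub}}'
    reserved: '{{hub fromConfigMap "" "site-data" (printf "%s-cpu-reserved" .ManagedClusterName) hub}}'
```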

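3.2.3.13.2. Topology Aware Lifecycle Manager

- New in this release

- No reference design updates in this release

- Description

- Topology Aware Lifecycle Manager (TALM) is an Operator that runs only on the hub cluster. It manages how changes, including cluster and Operator upgrades and configuration, are rolled out to the network.

- Limits and requirements

- TALM supports concurrent cluster deployment in batches of 400.

- Precaching and backup features are for single-node OpenShift clusters only.

- Engineering considerations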

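- During initial cluster installation, TALM automatically applies only the policies that have the `ran.openshift.io/ztp-deploy-wave` annotation.

- You can create further `ClusterGroupUpgrade` CRs to control which policies TALM remediates.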
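The sketch below is a minimal `ClusterGroupUpgrade` that remediates an additional policy after installation, for example one without the `ran.openshift.io/ztp-deploy-wave` annotation. The CGU name, namespace, cluster name, policy name, and the concurrency and timeout values are hypothetical.

```yaml
apiVersion: ran.openshift.io/v1alpha1
kind: ClusterGroupUpgrade
metadata:
  name: example-day2-cgu              # hypothetical
  namespace: default
spec:
  clusters:
    - example-sno-1                   # hypothetical managed cluster
  managedPolicies:
    - example-du-extra-config         # hypothetical policy to remediate
  enable: true                        # start remediation immediately
  remediationStrategy:
    maxConcurrency: 1                 # clusters remediated in parallel
    timeout: 240                      # minutes
```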

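3.2.3.13.3. GitOps and GitOps ZTP plugins

- New in this release

- No reference design updates in this release

- Description

GitOps and the GitOps ZTP plugins provide a GitOps-based infrastructure for managing cluster deployment and configuration. Cluster definitions and configurations are maintained in Git as declarative state. You can apply `ClusterInstance` CRs to the hub cluster, where the `SiteConfig` Operator renders them as installation CRs. Alternatively, you can use the GitOps ZTP plugin to generate installation CRs directly from `SiteConfig` CRs. The GitOps ZTP plugin supports automatic wrapping of configuration CRs in policies based on `PolicyGenTemplate` CRs.

Note: You can deploy and manage multiple versions of OpenShift Container Platform on managed clusters by using the baseline reference configuration CRs. You can use custom CRs alongside the baseline CRs.

To maintain multiple per-version policies simultaneously, use Git to manage the versions of the source CRs and the policy CRs (`PolicyGenTemplate` or `PolicyGenerator`). Keep reference CRs and custom CRs in different directories. This lets you patch and update the reference CRs by replacing the whole directory contents, without touching the custom CRs.

- Limits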

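- 300 `SiteConfig` CRs per ArgoCD application. You can use multiple applications to reach the maximum number of clusters that a single hub cluster supports.

- Content in the `/source-crs` folder in Git overrides content provided in the GitOps ZTP plugin container. Git takes precedence in the search path.

- Add the `/source-crs` folder in the same directory as the `kustomization.yaml` file that includes the `PolicyGenTemplate` as a generator.

  Note: Alternative locations for the `/source-crs` directory are not supported in this context.

- The `extraManifestPath` field of the `SiteConfig` CR is deprecated in OpenShift Container Platform 4.15 and later. Use the new `extraManifests.searchPaths` field instead.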
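The sketch below shows the `extraManifests.searchPaths` field that replaces `extraManifestPath` in a `SiteConfig` CR. It includes only the fields relevant to this point. The site name, namespace, base domain, image set, and directory names are hypothetical, and other required fields are omitted. If the same file name appears in both directories, the file in the last listed path takes precedence.

```yaml
apiVersion: ran.openshift.io/v1
kind: SiteConfig
metadata:
  name: example-sno                  # hypothetical
  namespace: example-sno
spec:
  baseDomain: example.com            # hypothetical
  clusterImageSetNameRef: openshift-4.17
  # pullSecretRef, sshPublicKey, and other required fields omitted
  clusters:
    - clusterName: example-sno
      extraManifests:
        searchPaths:
          - extra-manifest/          # version-specific reference Day 0 manifests
          - custom-manifest/         # user manifests; later paths win on name clashes
      # nodes and other cluster fields omitted
```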

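- Engineering considerations

- For multi-node cluster upgrades, you can pause `MachineConfigPool` (`MCP`) CRs during maintenance windows by setting the `paused` field to `true`. You can increase the number of nodes per `MCP` that are updated simultaneously by configuring the `maxUnavailable` setting in the `MCP` CR. The `maxUnavailable` field defines the percentage of nodes in the pool that can be unavailable at the same time during a `MachineConfig` update. Set `maxUnavailable` to the maximum tolerable value. This reduces the number of reboots during upgrades, which shortens upgrade times. When you finally unpause the `MCP` CR, all the changed configurations are applied with a single reboot.

- During cluster installation, you can pause custom `MCP` CRs by setting the `paused` field to `true`, and set `maxUnavailable` to 100% to improve installation times.

- To avoid confusion or unintentional overwriting of files when you update content, use unique and distinguishable names for user-provided CRs in the `/source-crs` folder and for extra manifests in Git.

- The `SiteConfig` CR allows multiple extra-manifest paths. When files with the same name are found in multiple directory paths, the last file found takes precedence. This lets you put the full set of version-specific Day 0 manifests (extra-manifests) in Git and reference them from the `SiteConfig` CR. With this feature, you can deploy multiple OpenShift Container Platform versions to managed clusters simultaneously.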
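The fragment below sketches the maintenance window pattern described above. It pauses a custom `MachineConfigPool` and allows all of its nodes to update at once. The pool name is hypothetical, and the pool's existing selectors are omitted. In practice, you patch these two fields into an existing pool rather than create a new one.

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfigPool
metadata:
  name: example-custom-pool     # hypothetical existing custom pool
spec:
  paused: true                  # hold MachineConfig rollout until unpaused
  maxUnavailable: "100%"        # allow every node in the pool to update at once
  # machineConfigSelector and nodeSelector of the existing pool omitted
```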

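3.2.3.13.4. Agent-based Installer

- New in this release

- No reference design updates in this release

- Description

The Agent-based Installer (ABI) provides installation capabilities without centralized infrastructure. The installation program creates an ISO image that you mount to the server. When the server boots, it installs OpenShift Container Platform and the supplied extra manifests.

Note: You can also use ABI to install OpenShift Container Platform clusters without a hub cluster. When you use ABI this way, an image registry is still required.

The Agent-based Installer (ABI) is an optional component.

- Limits and requirements

- You can supply a limited set of additional manifests at installation time.

- You must include the `MachineConfiguration` CRs that the RAN DU use case requires.

- Engineering considerations

- ABI provides a baseline OpenShift Container Platform installation.

- After installation, you install the Day 2 Operators and the rest of the RAN DU use case configuration.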

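3.2.4. Telco RAN distributed unit (DU) reference configuration CRs

Use the following custom resources (CRs) to configure and deploy OpenShift Container Platform clusters with the Telco RAN DU profile. Some of the CRs are optional, depending on your requirements. CR fields that you can change are annotated in the CR with YAML comments.

You can extract a set of RAN DU CRs from the `ztp-site-generate` container image. For more information, see "Preparing the GitOps ZTP site configuration repository".

3.2.4.1. Day 2 Operators reference CRs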

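| Component | Reference CR | Optional | New in this release |
|---|---|---|---|
| Cluster logging | ClusterLogForwarder.yaml | No | No |
| Cluster logging | ClusterLogNS.yaml | No | No |
| Cluster logging | ClusterLogOperGroup.yaml | No | No |
| Cluster logging | ClusterLogServiceAccount.yaml | No | Yes |
| Cluster logging | ClusterLogServiceAccountAuditBinding.yaml | No | Yes |
| Cluster logging | ClusterLogServiceAccountInfrastructureBinding.yaml | No | Yes |
| Cluster logging | ClusterLogSubscription.yaml | No | No |
| Lifecycle Agent Operator | ImageBasedUpgrade.yaml | Yes | No |
| Lifecycle Agent Operator | LcaSubscription.yaml | Yes | No |
| Lifecycle Agent Operator | LcaSubscriptionNS.yaml | Yes | No |
| Lifecycle Agent Operator | LcaSubscriptionOperGroup.yaml | Yes | No |
| Local Storage Operator | StorageClass.yaml | Yes | No |
| Local Storage Operator | StorageLV.yaml | Yes | No |
| Local Storage Operator | StorageNS.yaml | Yes | No |
| Local Storage Operator | StorageOperGroup.yaml | Yes | No |
| Local Storage Operator | StorageSubscription.yaml | Yes | No |
| LVM Operator | LVMOperatorStatus.yaml | Yes | No |
| LVM Operator | StorageLVMCluster.yaml | Yes | No |
| LVM Operator | StorageLVMSubscription.yaml | Yes | No |
| LVM Operator | StorageLVMSubscriptionNS.yaml | Yes | No |
| LVM Operator | StorageLVMSubscriptionOperGroup.yaml | Yes | No |
| Node Tuning Operator | PerformanceProfile.yaml | No | No |
| Node Tuning Operator | TunedPerformancePatch.yaml | No | No |
| PTP fast event notifications | PtpConfigBoundaryForEvent.yaml | Yes | No |
| PTP fast event notifications | PtpConfigForHAForEvent.yaml | Yes | No |
| PTP fast event notifications | PtpConfigMasterForEvent.yaml | Yes | No |
| PTP fast event notifications | PtpConfigSlaveForEvent.yaml | Yes | No |
| PTP Operator - high availability | PtpConfigBoundary.yaml | No | No |
| PTP Operator - high availability | PtpConfigForHA.yaml | No | No |
| PTP Operator | PtpConfigDualCardGmWpc.yaml | No | No |
| PTP Operator | PtpConfigThreeCardGmWpc.yaml | No | No |
| PTP Operator | PtpConfigGmWpc.yaml | No | No |
| PTP Operator | PtpConfigSlave.yaml | No | No |
| PTP Operator | PtpOperatorConfig.yaml | No | No |
| PTP Operator | PtpOperatorConfigForEvent.yaml | No | No |
| PTP Operator | PtpSubscription.yaml | No | No |
| PTP Operator | PtpSubscriptionNS.yaml | No | No |
| PTP Operator | PtpSubscriptionOperGroup.yaml | No | No |
| SR-IOV FEC Operator | AcceleratorsNS.yaml | Yes | No |
| SR-IOV FEC Operator | AcceleratorsOperGroup.yaml | Yes | No |
| SR-IOV FEC Operator | AcceleratorsSubscription.yaml | Yes | No |
| SR-IOV FEC Operator | SriovFecClusterConfig.yaml | Yes | No |
| SR-IOV Operator | SriovNetwork.yaml | No | No |
| SR-IOV Operator | SriovNetworkNodePolicy.yaml | No | No |
| SR-IOV Operator | SriovOperatorConfig.yaml | No | No |
| SR-IOV Operator | SriovOperatorConfigForSNO.yaml | No | No |
| SR-IOV Operator | SriovSubscription.yaml | No | No |
| SR-IOV Operator | SriovSubscriptionNS.yaml | No | No |
| SR-IOV Operator | SriovSubscriptionOperGroup.yaml | No | No |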

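3.2.4.2. Cluster tuning reference CRs

| Component | Reference CR | Optional | New in this release |
|---|---|---|---|
| Composable OpenShift | example-sno.yaml | No | No |
| Console disable | ConsoleOperatorDisable.yaml | Yes | No |
| Disconnected registry | 09-openshift-marketplace-ns.yaml | No | No |
| Disconnected registry | DefaultCatsrc.yaml | No | No |
| Disconnected registry | DisableOLMPprof.yaml | No | No |
| Disconnected registry | DisconnectedICSP.yaml | No | No |
| Disconnected registry | OperatorHub.yaml | OperatorHub is required for single-node OpenShift and optional for multi-node clusters | No |
| Monitoring configuration | ReduceMonitoringFootprint.yaml | No | No |
| Network diagnostics disable | DisableSnoNetworkDiag.yaml | No | No |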

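3.2.4.3. Machine configuration reference CRs

| Component | Reference CR | Optional | New in this release |
|---|---|---|---|
| Container runtime (crun) | enable-crun-master.yaml | No | No |
| Container runtime (crun) | enable-crun-worker.yaml | No | No |
| Disable CRI-O wipe | 99-crio-disable-wipe-master.yaml | No | No |
| Disable CRI-O wipe | 99-crio-disable-wipe-worker.yaml | No | No |
| kdump enable | 06-kdump-master.yaml | No | No |
| kdump enable | 06-kdump-worker.yaml | No | No |
| kubelet configuration / container mount hiding | 01-container-mount-ns-and-kubelet-conf-master.yaml | No | No |
| kubelet configuration / container mount hiding | 01-container-mount-ns-and-kubelet-conf-worker.yaml | No | No |
| One-shot time sync | 99-sync-time-once-master.yaml | No | No |
| One-shot time sync | 99-sync-time-once-worker.yaml | No | No |
| SCTP | 03-sctp-machine-config-master.yaml | Yes | No |
| SCTP | 03-sctp-machine-config-worker.yaml | Yes | No |
| Set RCU normal | 08-set-rcu-normal-master.yaml | No | No |
| Set RCU normal | 08-set-rcu-normal-worker.yaml | No | No |
| SR-IOV-related kernel arguments | | No | No |
| SR-IOV-related kernel arguments | | No | No |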

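3.2.4.4. YAML reference

The following is a complete reference for all the custom resources (CRs) that make up the telco RAN DU 4.17 reference configuration.

3.2.4.4.1. Day 2 Operators reference YAML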

ClusterLogForwarder.yaml

ClusterLogNS.yaml

ClusterLogOperGroup.yaml

ClusterLogServiceAccount.yaml

ClusterLogServiceAccountAuditBinding.yaml

ClusterLogServiceAccountInfrastructureBinding.yaml

ClusterLogSubscription.yaml

ImageBasedUpgrade.yaml

LcaSubscription.yaml

LcaSubscriptionNS.yaml

LcaSubscriptionOperGroup.yaml

StorageClass.yaml

StorageLV.yaml

StorageNS.yaml

StorageOperGroup.yaml

StorageSubscription.yaml

LVMOperatorStatus.yaml

StorageLVMCluster.yaml

StorageLVMSubscription.yaml

StorageLVMSubscriptionNS.yaml

StorageLVMSubscriptionOperGroup.yaml

PerformanceProfile.yaml

TunedPerformancePatch.yaml

PtpConfigBoundaryForEvent.yaml

PtpConfigForHAForEvent.yaml

PtpConfigMasterForEvent.yaml

PtpConfigSlaveForEvent.yaml

PtpConfigBoundary.yaml

PtpConfigForHA.yaml

PtpConfigDualCardGmWpc.yaml

PtpConfigThreeCardGmWpc.yaml

PtpConfigGmWpc.yaml

PtpConfigSlave.yaml

PtpOperatorConfig.yaml

PtpOperatorConfigForEvent.yaml

PtpSubscription.yaml

PtpSubscriptionNS.yaml

PtpSubscriptionOperGroup.yaml

AcceleratorsNS.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: vran-acceleration-operators
  annotations: {}
```

AcceleratorsOperGroup.yaml

AcceleratorsSubscription.yaml

SriovFecClusterConfig.yaml

SriovNetwork.yaml

SriovNetworkNodePolicy.yaml

SriovOperatorConfig.yaml

SriovOperatorConfigForSNO.yaml

SriovSubscription.yaml

SriovSubscriptionNS.yaml

SriovSubscriptionOperGroup.yaml

3.2.4.4.2. Cluster tuning reference YAML

example-sno.yaml

ConsoleOperatorDisable.yaml

09-openshift-marketplace-ns.yaml

DefaultCatsrc.yaml

DisableOLMPprof.yaml

DisconnectedICSP.yaml

OperatorHub.yaml

ReduceMonitoringFootprint.yaml

DisableSnoNetworkDiag.yaml

3.2.4.4.3. Machine configuration reference YAML

enable-crun-master.yaml

enable-crun-worker.yaml

99-crio-disable-wipe-master.yaml

99-crio-disable-wipe-worker.yaml

06-kdump-master.yaml

06-kdump-worker.yaml

01-container-mount-ns-and-kubelet-conf-master.yaml

01-container-mount-ns-and-kubelet-conf-worker.yaml

99-sync-time-once-master.yaml

99-sync-time-once-worker.yaml

03-sctp-machine-config-master.yaml

03-sctp-machine-config-worker.yaml

08-set-rcu-normal-master.yaml

08-set-rcu-normal-worker.yaml

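3.2.5. Telco RAN DU reference configuration software specifications

The following information describes the software versions validated for the telco RAN DU reference design specification (RDS).

3.2.5.1. Telco RAN DU 4.17 validated software components

The Red Hat telco RAN DU 4.17 solution has been validated with the following Red Hat software products for OpenShift Container Platform managed clusters and hub clusters.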

| Component | Software version |
|---|---|
| Managed cluster version | 4.17 |
| Cluster Logging Operator | 6.0 |
| Local Storage Operator | 4.17 |
| OpenShift API for Data Protection (OADP) | 1.4.1 |
| PTP Operator | 4.17 |
| SRIOV Operator | 4.17 |
| SRIOV-FEC Operator | 2.9 |
| Lifecycle Agent | 4.17 |

| Component | Software version |
|---|---|
| Hub cluster version | 4.17 |
| Red Hat Advanced Cluster Management (RHACM) | 2.11, 2.12 |
| GitOps ZTP plugin | 4.17 |
| Red Hat OpenShift GitOps | 1.16 |
| Topology Aware Lifecycle Manager (TALM) | 4.17 |