Chapter 2. OpenShift Container Platform overview

OpenShift Container Platform is a cloud-based Kubernetes container platform. The foundation of OpenShift Container Platform is based on Kubernetes and therefore shares the same technology. It is designed to allow applications and the data centers that support them to expand from just a few machines and applications to thousands of machines that serve millions of clients.

OpenShift Container Platform enables you to do the following:

- Provide developers and IT organizations with cloud application platforms that can be used for deploying applications on secure and scalable resources.

- Require minimal configuration and management overhead.

- Bring the Kubernetes platform to customer data centers and the cloud.

- Meet security, privacy, compliance, and governance requirements.

With its foundation in Kubernetes, OpenShift Container Platform incorporates the same technology that serves as the engine for massive telecommunications, streaming video, gaming, banking, and other applications. Its implementation in open Red Hat technologies lets you extend your containerized applications beyond a single cloud to on-premise and multi-cloud environments.

2.1. Glossary of common terms for OpenShift Container Platform

This glossary defines common Kubernetes and OpenShift Container Platform terms.

- Kubernetes

- Kubernetes is an open source container orchestration engine for automating deployment, scaling, and management of containerized applications.

- Containers

- Containers are application instances and components that run in OCI-compliant containers on the worker nodes. A container is the runtime of an Open Container Initiative (OCI)-compliant image. An image is a binary application. A worker node can run many containers. A node's capacity depends on the memory and CPU capabilities of the underlying resources, whether they are cloud, hardware, or virtualized.

- Pod

A pod is one or more containers deployed together on one host. It consists of a colocated group of containers with shared resources such as volumes and IP addresses. A pod is also the smallest compute unit defined, deployed, and managed.

In OpenShift Container Platform, pods replace individual application containers as the smallest deployable unit.

Pods are the orchestrated unit in OpenShift Container Platform. OpenShift Container Platform schedules and runs all containers in a pod on the same node. Complex applications are made up of many pods, each with their own containers. They interact externally and also with one another inside the OpenShift Container Platform environment.
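The pod definition above can be sketched as a manifest. The following Python example builds one as a plain dictionary and prints it as JSON, which Kubernetes accepts as well as YAML. The pod name, container names, image references, and volume name are hypothetical and are used only for illustration.

```python
import json

# Hypothetical names: "web-with-logger", both image references, and the
# "shared-logs" volume are illustrative and not taken from the product docs.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-logger", "labels": {"app": "web"}},
    "spec": {
        # An emptyDir volume lives as long as the pod and can be mounted
        # by every container in it.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "registry.example.com/demo/web:1.0",
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/app"}
                ],
            },
            {
                "name": "log-forwarder",
                "image": "registry.example.com/demo/forwarder:1.0",
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/logs"}
                ],
            },
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod_manifest, indent=2))
```

Both containers are scheduled together on one node and mount the same volume, which is the kind of shared resource the definition refers to.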

- Replica set and replication controller

- The Kubernetes replica set and the OpenShift Container Platform replication controller are both available. The job of this component is to ensure the specified number of pod replicas are running at all times. If pods exit or are deleted, the replica set or replication controller starts more. If more pods are running than needed, the replica set deletes as many as necessary to match the specified number of replicas.
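The behavior described above amounts to comparing a desired replica count with the number of running pods. The following Python sketch models only that decision. The function name `reconcile_replicas` is hypothetical, and the real controllers also handle pod selection, failures, and timing that this sketch leaves out.

```python
from typing import Tuple


def reconcile_replicas(desired: int, running: int) -> Tuple[str, int]:
    """Return the action to take and how many pods it affects."""
    if desired < 0 or running < 0:
        raise ValueError("replica counts must be non-negative")
    if running < desired:
        # Pods exited or were deleted: start enough to reach the target.
        return ("start", desired - running)
    if running > desired:
        # Too many pods: delete the surplus.
        return ("delete", running - desired)
    return ("none", 0)
```

For example, `reconcile_replicas(3, 1)` reports that two pods should be started, and `reconcile_replicas(3, 5)` reports that two should be deleted.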

- Deployment and DeploymentConfig

OpenShift Container Platform implements both Kubernetes Deployment objects and OpenShift Container Platform DeploymentConfig objects. Users may select either.

Deployment objects control how an application is rolled out as pods. They identify the name of the container image to be taken from the registry and deployed as a pod on a node. They set the number of replicas of the pod to deploy, creating a replica set to manage the process. The labels indicated instruct the scheduler onto which nodes to deploy the pod. The set of labels is included in the pod definition that the replica set instantiates.

Deployment objects are able to update the pods deployed onto the worker nodes based on the version of the Deployment objects and the various rollout strategies for managing acceptable application availability.

OpenShift Container Platform DeploymentConfig objects add change triggers, which can automatically create new versions of the Deployment objects when new versions of the container image become available or other changes occur.

- Service

A service defines a logical set of pods and access policies. It provides permanent internal IP addresses and hostnames for other applications to use as pods are created and destroyed.

Service layers connect application components together. For example, a front-end web service connects to a database instance by communicating with its service. Services allow for simple internal load balancing across application components. OpenShift Container Platform automatically injects service information into running containers for ease of discovery.
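Two of the ideas above can be sketched in Python: a service picks its pods by label selector, and service information reaches containers through environment variables. The variable names follow the Kubernetes convention of upper-casing the service name and replacing dashes with underscores. The pod list, the service name `orders-db`, and both function names are hypothetical.

```python
from typing import Dict, List


def select_pods(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Return the names of pods whose labels contain every selector pair."""
    if not selector:
        # A service without a selector gets no automatically managed endpoints.
        return []
    return [
        pod["name"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


def service_env_names(service_name: str) -> List[str]:
    """Return the host and port variable names derived from a service name."""
    prefix = service_name.upper().replace("-", "_")
    return [f"{prefix}_SERVICE_HOST", f"{prefix}_SERVICE_PORT"]


# Hypothetical pods: two database replicas and one unrelated front end.
pods = [
    {"name": "db-1", "labels": {"app": "db", "tier": "backend"}},
    {"name": "db-2", "labels": {"app": "db", "tier": "backend"}},
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
]

backends = select_pods({"app": "db"}, pods)
env_names = service_env_names("orders-db")
```

Because the selector matches labels rather than pod names, the set of backends changes as pods are created and destroyed while the service address stays the same.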

- Route

- A route is a way to expose a service by giving it an externally reachable hostname, such as www.example.com. Each route consists of a route name, a service selector, and optionally a security configuration. A router can consume a defined route and the endpoints identified by its service to provide a name that lets external clients reach your applications. While it is easy to deploy a complete multi-tier application, traffic from anywhere outside the OpenShift Container Platform environment cannot reach the application without the routing layer.
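A route as described above combines a name, a target service, and an optional security configuration. The following Python sketch builds such a manifest as a dictionary, assuming the `route.openshift.io/v1` API. The helper name `make_route` and the example route and service names are hypothetical.

```python
from typing import Optional


def make_route(name: str, service: str, host: str,
               tls_termination: Optional[str] = None) -> dict:
    """Build a Route manifest that exposes a service at an external hostname."""
    route = {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            "host": host,
            # The route points at a service; the router forwards traffic
            # to the endpoints behind that service.
            "to": {"kind": "Service", "name": service},
        },
    }
    if tls_termination is not None:
        if tls_termination not in ("edge", "passthrough", "reencrypt"):
            raise ValueError(f"unknown TLS termination: {tls_termination}")
        route["spec"]["tls"] = {"termination": tls_termination}
    return route


# Hypothetical route and service names.
route = make_route("frontend", "frontend-svc", "www.example.com", "edge")
```

Omitting the last argument produces a route without a security configuration.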

- Build

A build is the process of transforming input parameters into a resulting object. Most often, the process is used to transform input parameters or source code into a runnable image. A BuildConfig object is the definition of the entire build process. OpenShift Container Platform leverages Kubernetes by creating containers from build images and pushing them to the integrated registry.

- Project

OpenShift Container Platform uses projects to allow groups of users or developers to work together, serving as the unit of isolation and collaboration. It defines the scope of resources, allows project administrators and collaborators to manage resources, and restricts and tracks the user’s resources with quotas and limits.

A project is a Kubernetes namespace with additional annotations. It is the central vehicle for managing access to resources for regular users. A project lets a community of users organize and manage their content in isolation from other communities. Users must receive access to projects from administrators. However, cluster administrators can allow developers to create their own projects, in which case users automatically have access to their own projects.

Each project has its own set of objects, policies, constraints, and service accounts.

Projects are also known as namespaces.

- Operators

An Operator is a Kubernetes-native application. The goal of an Operator is to put operational knowledge into software. Previously this knowledge only resided in the minds of administrators, in various combinations of shell scripts, or in automation software such as Ansible. It was outside your Kubernetes cluster and hard to integrate. With Operators, all of this changes.

Operators are purpose-built for your applications. They implement and automate common Day 1 activities such as installation and configuration, as well as Day 2 activities such as scaling up and down, reconfiguration, updates, backups, failovers, and restores, in a piece of software running inside your Kubernetes cluster by integrating natively with Kubernetes concepts and APIs. This is called a Kubernetes-native application.

With Operators, applications need not be treated as a collection of primitives, such as pods, deployments, services, or config maps. Instead, an application managed by an Operator can be treated as a single object that exposes the options that make sense for the application.
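The "single object" idea can be sketched with a custom resource. In the following Python example, the `example.com/v1alpha1` API group, the `Database` kind, every field under `spec`, and the `planned_objects` helper are invented for illustration and do not describe any real Operator.

```python
# Hypothetical custom resource: the API group, kind, and spec fields
# are invented for this sketch.
database = {
    "apiVersion": "example.com/v1alpha1",
    "kind": "Database",
    "metadata": {"name": "orders"},
    "spec": {"version": "15", "replicas": 3, "backupSchedule": "0 2 * * *"},
}


def planned_objects(resource: dict) -> list:
    """List the primitives a hypothetical Operator might manage for one resource."""
    name = resource["metadata"]["name"]
    spec = resource["spec"]
    objects = [
        ("Deployment", f"{name}-db"),
        ("Service", name),
        ("ConfigMap", f"{name}-config"),
    ]
    # Backups are optional, so the backup job exists only when requested.
    if spec.get("backupSchedule"):
        objects.append(("CronJob", f"{name}-backup"))
    return objects
```

The user edits only the few fields in `spec`, and the Operator is responsible for keeping the underlying primitives in line with them.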

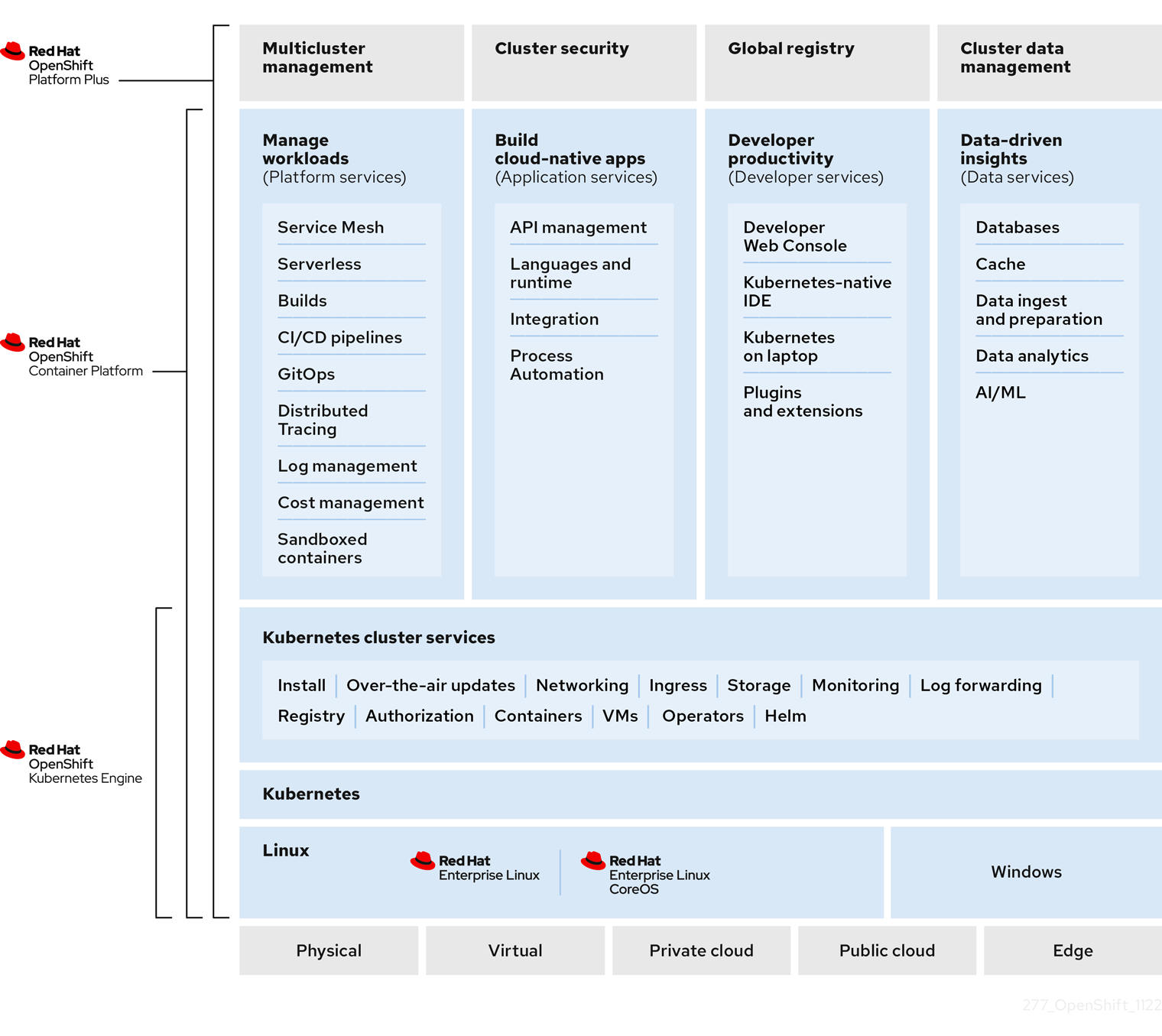

2.2. Understanding OpenShift Container Platform

OpenShift Container Platform is a Kubernetes environment for managing the lifecycle of container-based applications and their dependencies on various computing platforms, such as bare metal, virtualized, on-premise, and cloud. OpenShift Container Platform deploys, configures, and manages containers. OpenShift Container Platform offers usability, stability, and customization of its components.

OpenShift Container Platform uses a number of computing resources, known as nodes. A node has a lightweight, secure operating system based on Red Hat Enterprise Linux (RHEL), known as Red Hat Enterprise Linux CoreOS (RHCOS).

After a node is booted and configured, it obtains a container runtime, such as CRI-O or Docker, for managing and running the images of container workloads scheduled to it. The Kubernetes agent, or kubelet, starts and manages the container workloads assigned to the node. The kubelet is responsible for registering the node with the cluster and receiving the details of container workloads.

OpenShift Container Platform configures and manages the networking, load balancing and routing of the cluster. OpenShift Container Platform adds cluster services for monitoring the cluster health and performance, logging, and for managing upgrades.

The container image registry and OperatorHub provide Red Hat certified products and community-built software for providing various application services within the cluster. These applications and services manage the applications deployed in the cluster, databases, frontends and user interfaces, application runtimes and business automation, and developer services for development and testing of container applications.

You can manage applications within the cluster either manually by configuring deployments of containers running from pre-built images or through resources known as Operators. You can build custom images from pre-built images and source code, and store these custom images locally in an internal, private, or public registry.

The Multicluster Management layer can manage multiple clusters including their deployment, configuration, compliance and distribution of workloads in a single console.

2.3. Installing OpenShift Container Platform

The OpenShift Container Platform installation program offers you flexibility. You can use the installation program to deploy a cluster on infrastructure that the installation program provisions and the cluster maintains or deploy a cluster on infrastructure that you prepare and maintain.

For more information about the installation process, the supported platforms, and choosing a method of installing and preparing your cluster, see the following:

2.3.1. OpenShift Local overview

OpenShift Local supports rapid application development and helps you get started building OpenShift Container Platform clusters. OpenShift Local is designed to run on a local computer to simplify setup and testing, and to emulate the cloud development environment locally with all of the tools needed to develop container-based applications.

Regardless of the programming language you use, OpenShift Local hosts your application and brings a minimal, preconfigured Red Hat OpenShift Container Platform cluster to your local PC without the need for a server-based infrastructure.

On a hosted environment, OpenShift Local can create microservices, convert them into images, and run them in Kubernetes-hosted containers directly on your laptop or desktop running Linux, macOS, or Windows 10 or later.

For more information about OpenShift Local, see Red Hat OpenShift Local Overview.

2.4. Next Steps

2.4.1. For developers

OpenShift Container Platform is a platform for developing and deploying containerized applications. OpenShift Container Platform documentation helps you:

- Understand OpenShift Container Platform development: Learn the different types of containerized applications, from simple containers to advanced Kubernetes deployments and Operators.

- Work with projects: Create projects from the OpenShift Container Platform web console or OpenShift CLI (oc) to organize and share the software you develop.

- Work with applications:

Use the Developer perspective in the OpenShift Container Platform web console to create and deploy applications.

Use the Topology view to see your applications, monitor status, connect and group components, and modify your code base.

- Use the developer CLI tool (odo): The odo CLI tool lets developers create single or multi-component applications and automates deployment, build, and service route configurations. It abstracts complex Kubernetes and OpenShift Container Platform concepts, allowing you to focus on developing your applications.

- Create CI/CD Pipelines: Pipelines are serverless, cloud-native, continuous integration, and continuous deployment systems that run in isolated containers. They use standard Tekton custom resources to automate deployments and are designed for decentralized teams working on microservices-based architecture.

- Deploy Helm charts: Helm 3 is a package manager that helps developers define, install, and update application packages on Kubernetes. A Helm chart is a packaging format that describes an application that can be deployed using the Helm CLI.

- Understand image builds: Choose from different build strategies (Docker, S2I, custom, and pipeline) that can include different kinds of source materials (Git repositories, local binary inputs, and external artifacts). Then, follow examples of build types from basic builds to advanced builds.

- Create container images: A container image is the most basic building block in OpenShift Container Platform (and Kubernetes) applications. Defining image streams lets you gather multiple versions of an image in one place as you continue its development. S2I containers let you insert your source code into a base container that is set up to run code of a particular type, such as Ruby, Node.js, or Python.

- Create deployments: Use Deployment and DeploymentConfig objects to exert fine-grained management over applications. Manage deployments using the Workloads page or OpenShift CLI (oc). Learn rolling, recreate, and custom deployment strategies.

- Create templates: Use existing templates or create your own templates that describe how an application is built or deployed. A template can combine images with descriptions, parameters, replicas, exposed ports and other content that defines how an application can be run or built.

- Understand Operators: Operators are the preferred method for creating on-cluster applications for OpenShift Container Platform 4.16. Learn about the Operator Framework and how to deploy applications using installed Operators into your projects.

- Develop Operators: Operators are the preferred method for creating on-cluster applications for OpenShift Container Platform 4.16. Learn the workflow for building, testing, and deploying Operators. Then, create your own Operators based on Ansible or Helm, or configure built-in Prometheus monitoring using the Operator SDK.

- REST API reference: Learn about OpenShift Container Platform application programming interface endpoints.
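As an illustration of the Deployment objects and rolling strategy mentioned in the list above, the following Python sketch builds a Kubernetes `apps/v1` Deployment manifest as a dictionary. The helper name `make_deployment`, the application name, the image reference, and the surge settings are hypothetical choices, not recommended values.

```python
def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment manifest that rolls out `replicas` pods of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template.
            "selector": {"matchLabels": dict(labels)},
            "strategy": {
                "type": "RollingUpdate",
                # Add one new pod at a time and never drop below the
                # requested replica count during a rollout.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image reference.
deployment = make_deployment("web", "registry.example.com/demo/web:1.0", 3)
```

Changing the image in the pod template and reapplying the manifest is what triggers a new rollout under the chosen strategy.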

2.4.2. For administrators

- Understand OpenShift Container Platform management: Learn about components of the OpenShift Container Platform 4.16 control plane. See how OpenShift Container Platform control plane and worker nodes are managed and updated through the Machine API and Operators.

- Manage users and groups: Add users and groups with different levels of permissions to use or modify clusters.

- Manage authentication: Learn how user, group, and API authentication works in OpenShift Container Platform. OpenShift Container Platform supports multiple identity providers.

- Manage networking: The cluster network in OpenShift Container Platform is managed by the Cluster Network Operator (CNO). The Multus Container Network Interface adds the capability to attach multiple network interfaces to a pod. Using network policy features, you can isolate your pods or permit selected traffic.

- Manage storage: OpenShift Container Platform allows cluster administrators to configure persistent storage.

- Manage Operators: Lists of Red Hat, ISV, and community Operators can be reviewed by cluster administrators and installed on their clusters. After you install them, you can run, upgrade, back up, or otherwise manage the Operator on your cluster.

- Use custom resource definitions (CRDs) to modify the cluster: Cluster features implemented with Operators can be modified with CRDs. Learn to create a CRD and manage resources from CRDs.

- Set resource quotas: Choose from CPU, memory, and other system resources to set quotas.

- Prune and reclaim resources: Reclaim space by pruning unneeded Operators, groups, deployments, builds, images, registries, and cron jobs.

- Scale and tune clusters: Set cluster limits, tune nodes, scale cluster monitoring, and optimize networking, storage, and routes for your environment.

- Use the OpenShift Update Service in a disconnected environment: Learn about installing and managing a local OpenShift Update Service for recommending OpenShift Container Platform updates in disconnected environments.

- Monitor clusters: Learn to configure the monitoring stack. After configuring monitoring, use the web console to access monitoring dashboards. In addition to infrastructure metrics, you can also scrape and view metrics for your own services.

- Remote health monitoring: OpenShift Container Platform collects anonymized aggregated information about your cluster. Using Telemetry and the Insights Operator, this data is received by Red Hat and used to improve OpenShift Container Platform. You can view the data collected by remote health monitoring.
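As an illustration of the resource quotas mentioned in the list above, the following Python sketch defines a Kubernetes `ResourceQuota` manifest as a dictionary and checks how many more pods a project could hold. The quota name, namespace, limits, and the `pods_remaining` helper are hypothetical. The real quota enforcement happens in the cluster, not in client code.

```python
# Hypothetical quota: the name, namespace, and limits are illustrative.
quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "team-quota", "namespace": "team-a"},
    "spec": {
        "hard": {
            "pods": "10",
            "requests.cpu": "4",
            "requests.memory": "8Gi",
        }
    },
}


def pods_remaining(resource_quota: dict, running_pods: int) -> int:
    """Return how many more pods fit under the quota's pod limit."""
    hard_limit = int(resource_quota["spec"]["hard"]["pods"])
    return max(hard_limit - running_pods, 0)
```

Quota values are expressed as strings in the manifest, which is why the helper converts the pod limit before comparing it.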