Chapter 3. Reference design specifications

3.1. Telco core and RAN DU reference design specifications

The telco core reference design specification (RDS) describes OpenShift Container Platform 4.16 clusters running on commodity hardware that can support large scale telco applications including control plane and some centralized data plane functions.

The telco RAN RDS describes the configuration for clusters running on commodity hardware to host 5G workloads in the Radio Access Network (RAN).

3.1.1. Reference design specifications for telco 5G deployments

Red Hat and certified partners offer deep technical expertise and support for networking and operational capabilities required to run telco applications on OpenShift Container Platform 4.16 clusters.

Red Hat’s telco partners require a well-integrated, well-tested, and stable environment that can be replicated at scale for enterprise 5G solutions. The telco core and RAN DU reference design specifications (RDS) outline the recommended solution architecture based on a specific version of OpenShift Container Platform. Each RDS describes a tested and validated platform configuration for telco core and RAN DU use models. The RDS ensures an optimal experience when running your applications by defining the set of critical KPIs for telco 5G core and RAN DU. Following the RDS minimizes high severity escalations and improves application stability.

5G use cases are evolving and your workloads are continually changing. Red Hat is committed to iterating over the telco core and RAN DU RDS to support evolving requirements based on customer and partner feedback.

3.1.2. Reference design scope

The telco core and telco RAN reference design specifications (RDS) capture the recommended, tested, and supported configurations to get reliable and repeatable performance for clusters running the telco core and telco RAN profiles.

Each RDS includes the released features and supported configurations that are engineered and validated for clusters to run the individual profiles. The configurations provide a baseline OpenShift Container Platform installation that meets feature and KPI targets. Each RDS also describes expected variations for each individual configuration. Validation of each RDS includes many long duration and at-scale tests.

The validated reference configurations are updated for each major Y-stream release of OpenShift Container Platform. Z-stream patch releases are periodically re-tested against the reference configurations.

3.1.3. Deviations from the reference design

Deviating from the validated telco core and telco RAN DU reference design specifications (RDS) can have significant impact beyond the specific component or feature that you change. Deviations require analysis and engineering in the context of the complete solution.

All deviations from the RDS should be analyzed and documented with clear action tracking information. Due diligence is expected from partners to understand how to bring deviations into line with the reference design. This might require partners to provide additional resources to engage with Red Hat to work towards enabling their use case to achieve a best-in-class outcome with the platform. This is critical for the supportability of the solution and ensuring alignment across Red Hat and with partners.

Deviation from the RDS can have some or all of the following consequences:

- It can take longer to resolve issues.

- There is a risk of missing project service-level agreements (SLAs), project deadlines, end provider performance requirements, and so on.

Unapproved deviations may require escalation at executive levels.

Note: Red Hat prioritizes the servicing of requests for deviations based on partner engagement priorities.

3.2. Telco RAN DU reference design specification

3.2.1. Telco RAN DU 4.16 reference design overview

The Telco RAN distributed unit (DU) 4.16 reference design configures an OpenShift Container Platform 4.16 cluster running on commodity hardware to host telco RAN DU workloads. It captures the recommended, tested, and supported configurations to get reliable and repeatable performance for a cluster running the telco RAN DU profile.

3.2.1.1. Deployment architecture overview

You deploy the telco RAN DU 4.16 reference configuration to managed clusters from a centrally managed RHACM hub cluster. The reference design specification (RDS) includes configuration of the managed clusters and the hub cluster components.

Figure 3.1. Telco RAN DU deployment architecture overview

3.2.2. Telco RAN DU use model overview

Use the following information to plan telco RAN DU workloads, cluster resources, and hardware specifications for the hub cluster and managed single-node OpenShift clusters.

3.2.2.1. Telco RAN DU application workloads

DU worker nodes must have 3rd Generation Xeon (Ice Lake) 2.20 GHz or better CPUs with firmware tuned for maximum performance.

5G RAN DU user applications and workloads should conform to the following best practices and application limits:

- Develop cloud-native network functions (CNFs) that conform to the latest version of the CNF best practices guide.

- Use SR-IOV for high performance networking.

- Use exec probes sparingly and only when no other suitable options are available.

- Do not use exec probes if a CNF uses CPU pinning. Use other probe implementations, for example, httpGet or tcpSocket.

- When you need to use exec probes, limit the exec probe frequency and quantity. The maximum number of exec probes must be kept below 10, and frequency must not be set to less than 10 seconds.

- Avoid using exec probes unless there is absolutely no viable alternative.

Startup probes require minimal resources during steady-state operation. The limitation on exec probes applies primarily to liveness and readiness probes.
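As an illustration of the probe guidance above, the following sketch shows a container that uses httpGet and tcpSocket probes instead of exec probes, with a probe period of 10 seconds. The pod name, image, port, and /healthz path are hypothetical placeholders, not values from the reference design.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-du-app                           # hypothetical name
spec:
  containers:
    - name: du-app
      image: registry.example.com/du-app:latest  # hypothetical image
      ports:
        - containerPort: 8080
      # The kubelet sends the HTTP request itself; no process is spawned
      # inside the container, unlike an exec probe.
      livenessProbe:
        httpGet:
          path: /healthz                         # hypothetical health endpoint
          port: 8080
        periodSeconds: 10                        # not less than 10 seconds
      readinessProbe:
        tcpSocket:
          port: 8080
        periodSeconds: 10
```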

3.2.2.2. Telco RAN DU representative reference application workload characteristics

The representative reference application workload has the following characteristics:

- Has a maximum of 15 pods and 30 containers for the vRAN application including its management and control functions

- Uses a maximum of 2 ConfigMap and 4 Secret CRs per pod

- Uses a maximum of 10 exec probes with a frequency of not less than 10 seconds

- Incremental application load on the kube-apiserver is less than 10% of the cluster platform usage

Note: You can extract the CPU load from the platform metrics. For example:

```
query=avg_over_time(pod:container_cpu_usage:sum{namespace="openshift-kube-apiserver"}[30m])
```

- Application logs are not collected by the platform log collector

- Aggregate traffic on the primary CNI is less than 1 MBps

3.2.2.3. Telco RAN DU worker node cluster resource utilization

The maximum number of running pods in the system, inclusive of application workloads and OpenShift Container Platform pods, is 120.

- Resource utilization

OpenShift Container Platform resource utilization varies depending on many factors including application workload characteristics such as:

- Pod count

- Type and frequency of probes

- Messaging rates on primary CNI or secondary CNI with kernel networking

- API access rate

- Logging rates

- Storage IOPS

Cluster resource requirements are applicable under the following conditions:

- The cluster is running the described representative application workload.

- The cluster is managed with the constraints described in "Telco RAN DU worker node cluster resource utilization".

- Components noted as optional in the RAN DU use model configuration are not applied.

You will need to do additional analysis to determine the impact on resource utilization and ability to meet KPI targets for configurations outside the scope of the Telco RAN DU reference design. You might have to allocate additional resources in the cluster depending on your requirements.

3.2.2.4. Hub cluster management characteristics

Red Hat Advanced Cluster Management (RHACM) is the recommended cluster management solution. Configure it to the following limits on the hub cluster:

- Configure a maximum of 5 RHACM policies with a compliant evaluation interval of at least 10 minutes.

- Use a maximum of 10 managed cluster templates in policies. Where possible, use hub-side templating.

- Disable all RHACM add-ons except for the policy-controller and observability-controller add-ons. Set Observability to the default configuration.

Important: Configuring optional components or enabling additional features will result in additional resource usage and can reduce overall system performance.

For more information, see Reference design deployment components.

| Metric | Limit | Notes |
|---|---|---|
| CPU usage | Less than 4000 mc – 2 cores (4 hyperthreads) | Platform CPU is pinned to reserved cores, including both hyperthreads in each reserved core. The system is engineered to use 3 CPUs (3000mc) at steady-state to allow for periodic system tasks and spikes. |
| Memory used | Less than 16G | |

3.2.2.5. Telco RAN DU RDS components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure and deploy clusters to run telco RAN DU workloads.

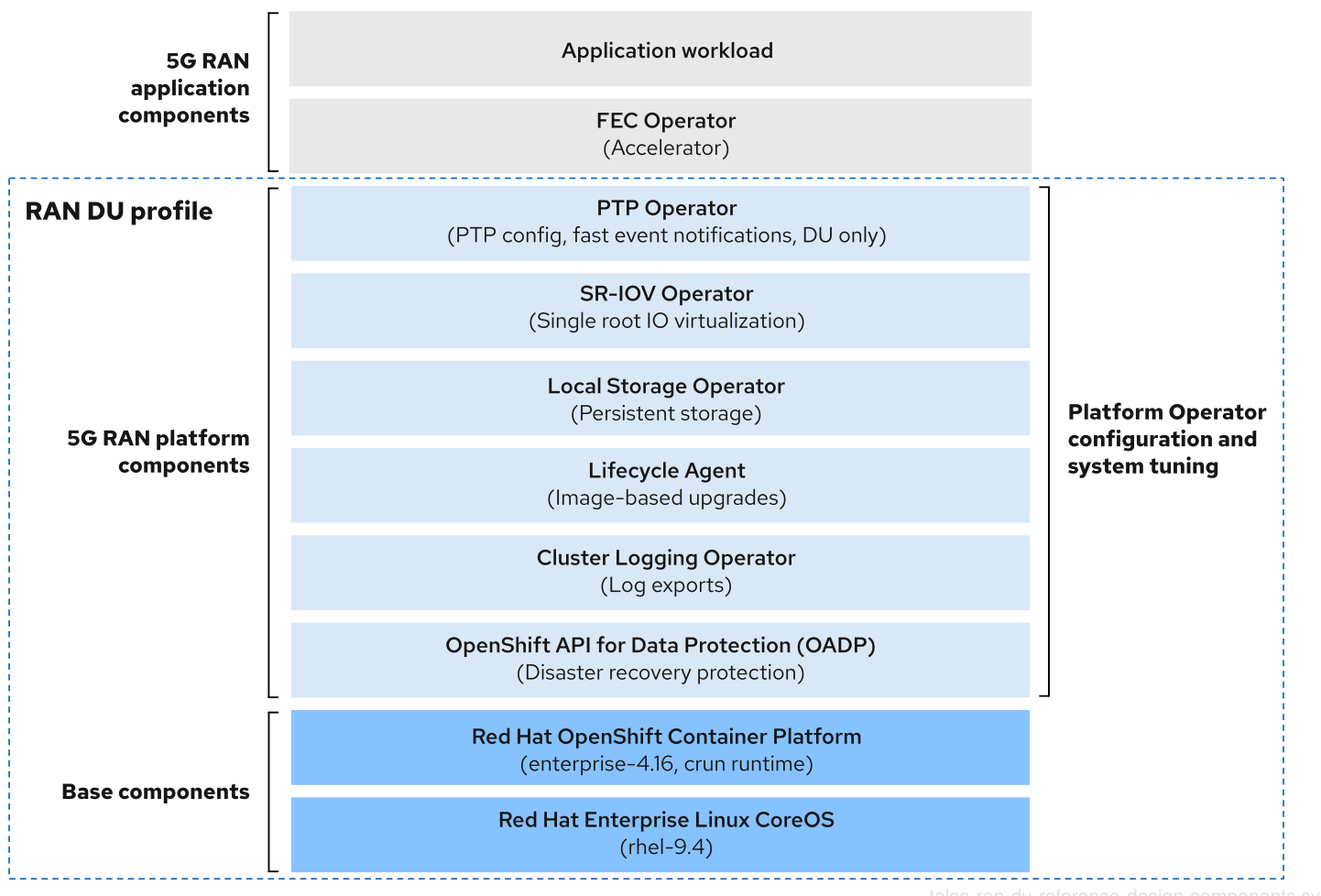

Figure 3.2. Telco RAN DU reference design components

Ensure that components that are not included in the telco RAN DU profile do not affect the CPU resources allocated to workload applications.

Out-of-tree drivers are not supported.

3.2.3. Telco RAN DU 4.16 reference design components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure and deploy clusters to run RAN DU workloads.

3.2.3.1. Host firmware tuning

- New in this release

- No reference design updates in this release

- Description

Configure system level performance. See Configuring host firmware for low latency and high performance for recommended settings.

If Ironic inspection is enabled, the firmware setting values are available from the per-cluster BareMetalHost CR on the hub cluster. You enable Ironic inspection with a label in the spec.clusters.nodes field in the SiteConfig CR that you use to install the cluster. For example:

```yaml
nodes:
  - hostName: "example-node1.example.com"
    ironicInspect: "enabled"
```

Note: The telco RAN DU reference SiteConfig does not enable the ironicInspect field by default.

- Limits and requirements

- Hyperthreading must be enabled

- Engineering considerations

Tune all settings for maximum performance

Note: You can tune firmware selections for power savings at the expense of performance as required.

3.2.3.2. Node Tuning Operator

- New in this release

- With this release, the Node Tuning Operator supports setting CPU frequencies in the PerformanceProfile for reserved and isolated core CPUs. This is an optional feature that you can use to define specific frequencies. Use this feature to set specific frequencies by enabling the intel_pstate CPUFreq driver in the Intel hardware. You must follow Intel’s recommendations on frequencies for FlexRAN-like applications, which require the default CPU frequency to be set to a lower value than the default running frequency.

- Previously, for the RAN DU profile, setting the realTime workload hint to true in the PerformanceProfile always disabled intel_pstate. With this release, the Node Tuning Operator detects the underlying Intel hardware using TuneD and appropriately sets the intel_pstate kernel parameter based on the processor’s generation.

- In this release, OpenShift Container Platform deployments with a performance profile now default to using cgroups v2 as the underlying resource management layer. If you run workloads that are not ready for this change, you can still revert to using the older cgroups v1 mechanism.

- Description

You tune the cluster performance by creating a performance profile. Settings that you configure with a performance profile include:

- Selecting the realtime or non-realtime kernel.

- Allocating cores to a reserved or isolated cpuset. OpenShift Container Platform processes allocated to the management workload partition are pinned to the reserved set.

- Enabling kubelet features (CPU manager, topology manager, and memory manager).

- Configuring huge pages.

- Setting additional kernel arguments.

- Setting per-core power tuning and max CPU frequency.

- Reserved and isolated core frequency tuning.

- Limits and requirements

The Node Tuning Operator uses the PerformanceProfile CR to configure the cluster. You need to configure the following settings in the RAN DU profile PerformanceProfile CR:

- Select reserved and isolated cores and ensure that you allocate at least 4 hyperthreads (equivalent to 2 cores) on Intel 3rd Generation Xeon (Ice Lake) 2.20 GHz CPUs or better with firmware tuned for maximum performance.

- Set the reserved cpuset to include both hyperthread siblings for each included core. Unreserved cores are available as allocatable CPU for scheduling workloads. Ensure that hyperthread siblings are not split across reserved and isolated cores.

- Configure reserved and isolated CPUs to include all threads in all cores based on what you have set as reserved and isolated CPUs.

- Set core 0 of each NUMA node to be included in the reserved CPU set.

- Set the huge page size to 1G.

You should not add additional workloads to the management partition. Only pods that are part of the OpenShift management platform should be annotated into the management partition.
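The following PerformanceProfile is a minimal sketch of how the settings above fit together. It assumes a hypothetical single-NUMA host with 32 cores (64 hyperthreads) where CPU N and CPU N+32 are hyperthread siblings; the CPU ranges, huge page count, and node selectors are assumptions to adapt to your hardware, and this is not the reference PerformanceProfile CR itself.

```yaml
apiVersion: performance.openshift.io/v2
kind: PerformanceProfile
metadata:
  name: openshift-node-performance-profile
spec:
  cpu:
    # Assumed topology: CPU N and CPU N+32 are siblings.
    # Reserved: cores 0 and 1 with both siblings (4 hyperthreads),
    # which also places core 0 of the NUMA node in the reserved set.
    reserved: "0-1,32-33"
    # Isolated: every remaining thread, so no sibling pair is split.
    isolated: "2-31,34-63"
  hugepages:
    defaultHugepagesSize: 1G
    pages:
      - size: 1G
        count: 32          # hypothetical; size to the application workload
        node: 0
  realTimeKernel:
    enabled: true          # RT kernel, as recommended for performance
  workloadHints:
    realTime: true
    highPowerConsumption: false
    perPodPowerManagement: false
  numa:
    topologyPolicy: restricted
  machineConfigPoolSelector:
    pools.operator.machineconfiguration.openshift.io/master: ""
  nodeSelector:
    node-role.kubernetes.io/master: ""
```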

- Engineering considerations

You should use the RT kernel to meet performance requirements.

Note: You can use the non-RT kernel if required.

- The number of huge pages that you configure depends on the application workload requirements. Variation in this parameter is expected and allowed.

- Variation is expected in the configuration of reserved and isolated CPU sets based on selected hardware and additional components in use on the system. Variation must still meet the specified limits.

- Hardware without IRQ affinity support impacts isolated CPUs. To ensure that pods with guaranteed whole CPU QoS have full use of the allocated CPU, all hardware in the server must support IRQ affinity. For more information, see About support of IRQ affinity setting.

cgroup v1 is a deprecated feature. Deprecated functionality is still included in OpenShift Container Platform and continues to be supported; however, it will be removed in a future release of this product and is not recommended for new deployments.

For the most recent list of major functionality that has been deprecated or removed within OpenShift Container Platform, refer to the Deprecated and removed features section of the OpenShift Container Platform release notes.

3.2.3.3. PTP Operator

- New in this release

- Configuring linuxptp services as grandmaster clock (T-GM) for dual Intel E810 Westport Channel NICs is now a generally available feature.

- You can configure the linuxptp services ptp4l and phc2sys as a highly available (HA) system clock for dual PTP boundary clocks (T-BC).

- Description

See PTP timing for details of support and configuration of PTP in cluster nodes. The DU node can run in the following modes:

- As an ordinary clock (OC) synced to a grandmaster clock or boundary clock (T-BC)

- As a grandmaster clock synced from GPS with support for single or dual card E810 Westport Channel NICs.

- As dual boundary clocks (one per NIC) with support for E810 Westport Channel NICs

- Allow for High Availability of the system clock when there are multiple time sources on different NICs.

- Optional: as a boundary clock for radio units (RUs)

Events and metrics for grandmaster clocks are a Tech Preview feature added in the 4.14 telco RAN DU RDS. For more information, see Using the PTP hardware fast event notifications framework.

You can subscribe applications to PTP events that happen on the node where the DU application is running.

- Limits and requirements

- Limited to two boundary clocks for dual NIC and HA

- Limited to two WPC card configuration for T-GM

- Engineering considerations

- Configurations are provided for ordinary clock, boundary clock, grandmaster clock, or PTP-HA

- PTP fast event notifications use ConfigMap CRs to store PTP event subscriptions

- Use Intel E810-XXV-4T Westport Channel NICs for PTP grandmaster clocks with GPS timing, minimum firmware version 4.40
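A minimal sketch of an ordinary clock (OC) PtpConfig is shown below. The CR name, interface name, profile name, and scheduling values are hypothetical, and a production profile normally also carries a full ptp4lConf, which is omitted here for brevity.

```yaml
apiVersion: ptp.openshift.io/v1
kind: PtpConfig
metadata:
  name: example-du-ptp-oc        # hypothetical name
  namespace: openshift-ptp
spec:
  profile:
    - name: oc-profile            # hypothetical profile name
      interface: ens5f0           # hypothetical PTP-capable NIC port
      # -2: IEEE 802.3 (layer 2) transport; -s: client-only mode (ordinary clock)
      ptp4lOpts: "-2 -s"
      # -a: configure automatically from ptp4l; -r: also synchronize the system clock
      phc2sysOpts: "-a -r"
      ptpSchedulingPolicy: SCHED_FIFO
      ptpSchedulingPriority: 10
  recommend:
    - profile: oc-profile
      priority: 4
      match:
        - nodeLabel: node-role.kubernetes.io/master
```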

3.2.3.4. SR-IOV Operator

- New in this release

- With this release, you can use the SR-IOV Network Operator to configure QinQ (802.1ad and 802.1q) tagging. QinQ tagging provides efficient traffic management by enabling the use of both inner and outer VLAN tags. Outer VLAN tagging is hardware accelerated, leading to faster network performance. The update extends beyond the SR-IOV Network Operator itself. You can now configure QinQ on externally managed VFs by setting the outer VLAN tag using nmstate. QinQ support varies across different NICs. For a comprehensive list of known limitations for specific NIC models, see Configuring QinQ support for SR-IOV enabled workloads in the Additional resources section.

- With this release, you can configure the SR-IOV Network Operator to drain nodes in parallel during network policy updates, dramatically accelerating the setup process. This translates to significant time savings, especially for large cluster deployments that previously took hours or even days to complete.

- Description

- The SR-IOV Operator provisions and configures the SR-IOV CNI and device plugins. Both netdevice (kernel VFs) and vfio (DPDK) devices are supported.

- Limits and requirements

- Use OpenShift Container Platform supported devices

- SR-IOV and IOMMU enablement in BIOS: The SR-IOV Network Operator will automatically enable IOMMU on the kernel command line.

- SR-IOV VFs do not receive link state updates from the PF. If link down detection is needed you must configure this at the protocol level.

- You can apply multi-network policies on netdevice driver types only. Multi-network policies require the iptables tool, which cannot manage vfio driver types.

- Engineering considerations

- SR-IOV interfaces with the vfio driver type are typically used to enable additional secondary networks for applications that require high throughput or low latency.

- Customer variation on the configuration and number of SriovNetwork and SriovNetworkNodePolicy custom resources (CRs) is expected.

- IOMMU kernel command-line settings are applied with a MachineConfig CR at install time. This ensures that the SriovOperator CR does not cause a reboot of the node when adding them.

- SR-IOV support for draining nodes in parallel is not applicable in a single-node OpenShift cluster.

- If you exclude the SriovOperatorConfig CR from your deployment, the CR will not be created automatically.

- In scenarios where you pin or restrict workloads to specific nodes, the SR-IOV parallel node drain feature will not result in the rescheduling of pods. In these scenarios, the SR-IOV Operator disables the parallel node drain functionality.
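The following sketch pairs a SriovNetworkNodePolicy that creates vfio-pci VFs for a DPDK application with a SriovNetwork that exposes them to an application namespace as a secondary network. The PF name, VF count, resource name, VLAN ID, and namespace are hypothetical; the number and configuration of these CRs is expected to vary by deployment.

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: example-du-fh                 # hypothetical policy name
  namespace: openshift-sriov-network-operator
spec:
  # vfio-pci binds the VFs to the vfio driver for DPDK;
  # use "netdevice" instead for kernel VFs.
  deviceType: vfio-pci
  nicSelector:
    pfNames:
      - ens5f0                        # hypothetical PF name
  nodeSelector:
    node-role.kubernetes.io/master: ""
  numVfs: 8                           # hypothetical VF count
  priority: 10
  resourceName: example_du_fh         # advertised to pods as openshift.io/example_du_fh
---
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: example-du-fh
  namespace: openshift-sriov-network-operator
spec:
  resourceName: example_du_fh         # must match the node policy resourceName
  networkNamespace: example-du-app    # hypothetical application namespace
  vlan: 140                           # hypothetical VLAN ID
```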

3.2.3.5. Logging

- New in this release

- Cluster Logging Operator 6.0 is new in this release. Update your existing implementation to adapt to the new version of the API. You must remove the old Operator artifacts by using policies. For more information, see Additional resources.

- Description

- Use logging to collect logs from the far edge node for remote analysis. The recommended log collector is Vector.

- Engineering considerations

- Handling logs beyond the infrastructure and audit logs, for example, from the application workload, requires additional CPU and network bandwidth based on the additional logging rate.

As of OpenShift Container Platform 4.14, Vector is the reference log collector.

Note: Use of fluentd in the RAN use model is deprecated.
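As a rough sketch of a Logging 6.0 ClusterLogForwarder that forwards only infrastructure and audit logs, in line with the engineering considerations above, consider the following. The Kafka URL, topic, and output name are hypothetical, and the collector service account is assumed to already hold the permissions needed to collect infrastructure and audit logs.

```yaml
apiVersion: observability.openshift.io/v1
kind: ClusterLogForwarder
metadata:
  name: instance
  namespace: openshift-logging
spec:
  serviceAccount:
    name: collector                   # assumed to exist with log-collection permissions
  outputs:
    - name: example-kafka             # hypothetical output name
      type: kafka
      kafka:
        url: tcp://192.0.2.10:9092/example-topic   # hypothetical broker and topic
  pipelines:
    - name: audit-and-infra
      # Application logs are intentionally not collected.
      inputRefs:
        - audit
        - infrastructure
      outputRefs:
        - example-kafka
```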

3.2.3.6. SRIOV-FEC Operator

- New in this release

- No reference design updates in this release

- Description

- SRIOV-FEC Operator is an optional 3rd party Certified Operator supporting FEC accelerator hardware.

- Limits and requirements

Starting with FEC Operator v2.7.0:

- SecureBoot is supported

- The vfio driver for the PF requires the usage of vfio-token that is injected into Pods. Applications in the pod can pass the VF token to DPDK by using the EAL parameter --vfio-vf-token.

- Engineering considerations

- The SRIOV-FEC Operator uses CPU cores from the isolated CPU set.

- You can validate FEC readiness as part of the pre-checks for application deployment, for example, by extending the validation policy.

3.2.3.7. Local Storage Operator

- New in this release

- No reference design updates in this release

- Description

- You can create persistent volumes that can be used as PVC resources by applications with the Local Storage Operator. The number and type of PV resources that you create depends on your requirements.

- Engineering considerations

- Create backing storage for PV CRs before creating the PV. This can be a partition, a local volume, LVM volume, or full disk.

- Refer to the device listing in LocalVolume CRs by the hardware path used to access each device to ensure correct allocation of disks and partitions. Logical names (for example, /dev/sda) are not guaranteed to be consistent across node reboots.

For more information, see the RHEL 9 documentation on device identifiers.
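To illustrate referencing devices by hardware path, the following LocalVolume sketch uses a /dev/disk/by-path entry instead of a logical name such as /dev/sda. The CR name, storage class name, and PCI path are hypothetical; look up the actual by-path names on your node.

```yaml
apiVersion: local.storage.openshift.io/v1
kind: LocalVolume
metadata:
  name: example-local-disks              # hypothetical name
  namespace: openshift-local-storage
spec:
  storageClassDevices:
    - storageClassName: example-local-sc # hypothetical storage class
      volumeMode: Filesystem
      fsType: xfs
      devicePaths:
        # Stable hardware path rather than a logical name such as /dev/sda.
        - /dev/disk/by-path/pci-0000:88:00.0-nvme-1   # hypothetical path
```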

3.2.3.8. LVMS Operator

- New in this release

- No reference design updates in this release

LVMS Operator is an optional component.

When you use the LVMS Operator as the storage solution, it replaces the Local Storage Operator, and the CPU required will be assigned to the management partition as platform overhead. The reference configuration must include one of these storage solutions but not both.

- Description

The LVMS Operator provides dynamic provisioning of block and file storage. The LVMS Operator creates logical volumes from local devices that can be used as PVC resources by applications. Volume expansion and snapshots are also possible.

The reference StorageLVMCluster.yaml configuration creates a vg1 volume group that leverages all available disks on the node except the installation disk.

- Limits and requirements

- In single-node OpenShift clusters, persistent storage must be provided by either LVMS or local storage, not both.

- Engineering considerations

- Ensure that sufficient disks or partitions are available for storage requirements.
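The reference StorageLVMCluster.yaml content is not reproduced in this section. As an approximation only, the following LVMCluster sketch defines a vg1 device class without a deviceSelector, which lets LVMS use the available unused disks on the node. The thin pool name and sizing values are assumptions.

```yaml
apiVersion: lvm.topolvm.io/v1alpha1
kind: LVMCluster
metadata:
  name: lvmcluster
  namespace: openshift-storage
spec:
  storage:
    deviceClasses:
      - name: vg1
        # No deviceSelector: LVMS uses the available unused disks on the node.
        thinPoolConfig:
          name: thin-pool-1          # hypothetical thin pool name
          sizePercent: 90            # assumed share of the volume group
          overprovisionRatio: 10     # assumed overprovisioning factor
```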

3.2.3.9. Workload partitioning

- New in this release

- No reference design updates in this release

- Description

Workload partitioning pins OpenShift platform and Day 2 Operator pods that are part of the DU profile to the reserved cpuset and removes the reserved CPU from node accounting. This leaves all unreserved CPU cores available for user workloads.

The method of enabling and configuring workload partitioning changed in OpenShift Container Platform 4.14.

- 4.14 and later

- Configure partitions by setting installation parameters:

```yaml
cpuPartitioningMode: AllNodes
```

- Configure management partition cores with the reserved CPU set in the PerformanceProfile CR

- 4.13 and earlier

- Configure partitions with extra MachineConfiguration CRs applied at install-time

- Limits and requirements

- Namespace and Pod CRs must be annotated to allow the pod to be applied to the management partition

- Pods with CPU limits cannot be allocated to the partition. This is because mutation can change the pod QoS.

- For more information about the minimum number of CPUs that can be allocated to the management partition, see Node Tuning Operator.

- Engineering considerations

- Workload Partitioning pins all management pods to reserved cores. A sufficient number of cores must be allocated to the reserved set to account for operating system, management pods, and expected spikes in CPU use that occur when the workload starts, the node reboots, or other system events happen.
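The annotation requirement described above can be sketched as follows for a Day 2 Operator namespace and one of its pods. The namespace, pod, and image names are hypothetical; the annotation keys are the ones workload partitioning matches on, and the pod sets CPU requests but no CPU limit because pods with CPU limits cannot be allocated to the partition.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: example-day2-operator            # hypothetical Day 2 Operator namespace
  annotations:
    # Allows pods in this namespace to opt in to the management partition.
    workload.openshift.io/allowed: management
---
apiVersion: v1
kind: Pod
metadata:
  name: example-operator-pod             # hypothetical pod name
  namespace: example-day2-operator
  annotations:
    # Requests placement on the reserved (management) CPU set.
    target.workload.openshift.io/management: '{"effect": "PreferredDuringScheduling"}'
spec:
  containers:
    - name: manager
      image: registry.example.com/example-operator:latest   # hypothetical image
      resources:
        requests:
          cpu: 50m
          memory: 64Mi
        # No CPU limit is set on purpose.
```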

3.2.3.10. Cluster tuning

- New in this release

- No reference design updates in this release

- Description

- See the Cluster capabilities section for a full list of optional components that you enable or disable before installation.

- Limits and requirements

- Cluster capabilities are not available for installer-provisioned installation methods.

You must apply all platform tuning configurations. The following table lists the required platform tuning configurations:

Table 3.2. Cluster capabilities configurations

| Feature | Description |
|---|---|
| Remove optional cluster capabilities | Reduce the OpenShift Container Platform footprint by disabling optional cluster Operators on single-node OpenShift clusters only. Remove all optional Operators except the Marketplace and Node Tuning Operators. |
| Configure cluster monitoring | Configure the monitoring stack for reduced footprint by doing the following: disable the local alertmanager and telemeter components; if you use RHACM observability, augment the CR with appropriate additionalAlertManagerConfigs CRs to forward alerts to the hub cluster; reduce the Prometheus retention period to 24h. Note: The RHACM hub cluster aggregates managed cluster metrics. |
| Disable networking diagnostics | Disable networking diagnostics for single-node OpenShift because they are not required. |
| Configure a single OperatorHub catalog source | Configure the cluster to use a single catalog source that contains only the Operators required for a RAN DU deployment. Each catalog source increases the CPU use on the cluster. Using a single CatalogSource fits within the platform CPU budget. |
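The following sketch shows how several of the tuning items in Table 3.2 are commonly expressed as CRs: a cluster-monitoring-config ConfigMap that disables the local Alertmanager and Telemeter client and sets 24h Prometheus retention, a Network operator CR that disables network diagnostics, and an OperatorHub CR that disables the default catalog sources so that a single CatalogSource can be used. The catalog source name and index image are hypothetical, and RHACM alert forwarding is not shown.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    alertmanagerMain:
      enabled: false
    telemeterClient:
      enabled: false
    prometheusK8s:
      retention: 24h
---
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  disableNetworkDiagnostics: true
---
apiVersion: config.openshift.io/v1
kind: OperatorHub
metadata:
  name: cluster
spec:
  disableAllDefaultSources: true   # leave only the single custom catalog below
---
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: example-du-catalog         # hypothetical name
  namespace: openshift-marketplace
spec:
  displayName: example-du-operators
  sourceType: grpc
  image: registry.example.com/example/du-operator-index:v4.16   # hypothetical index image
  publisher: Example
```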

- Engineering considerations

- In this release, OpenShift Container Platform deployments use Control Groups version 2 (cgroup v2) by default. As a consequence, performance profiles in a cluster use cgroups v2 for the underlying resource management layer. If workloads running on the cluster require cgroups v1, you can configure nodes to use cgroups v1. You can make this configuration as part of the initial cluster deployment.

3.2.3.11. Machine configuration

- New in this release

- No reference design updates in this release

- Limits and requirements

The CRI-O wipe disable MachineConfig assumes that images on disk are static other than during scheduled maintenance in defined maintenance windows. To ensure the images are static, do not set the pod imagePullPolicy field to Always.

Table 3.3. Machine configuration options

| Feature | Description |
|---|---|
| Container runtime | Sets the container runtime to crun for all node roles. |
| kubelet config and container mount hiding | Reduces the frequency of kubelet housekeeping and eviction monitoring to reduce CPU usage. Creates a container mount namespace, visible to kubelet and CRI-O, to reduce system mount scanning resource usage. |
| SCTP | Optional configuration (enabled by default). Enables SCTP. SCTP is required by RAN applications but disabled by default in RHCOS. |
| kdump | Optional configuration (enabled by default). Enables kdump to capture debug information when a kernel panic occurs. |
| CRI-O wipe disable | Disables automatic wiping of the CRI-O image cache after unclean shutdown. |
| SR-IOV-related kernel arguments | Includes additional SR-IOV related arguments in the kernel command line. |
| RCU Normal systemd service | Sets rcu_normal after the system is fully started. |
| One-shot time sync | Runs a one-time system time synchronization job for control plane or worker nodes. |
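Setting crun as the container runtime is typically done with a ContainerRuntimeConfig CR. The sketch below targets the master machine config pool, where single-node OpenShift workloads run; the CR name is hypothetical, and an equivalent CR that selects the worker pool would cover worker nodes.

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: ContainerRuntimeConfig
metadata:
  name: example-enable-crun-master     # hypothetical name
spec:
  machineConfigPoolSelector:
    matchLabels:
      pools.operator.machineconfiguration.openshift.io/master: ""
  containerRuntimeConfig:
    defaultRuntime: crun
```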

3.2.3.12. Lifecycle Agent

- New in this release

- Use the Lifecycle Agent to enable image-based upgrades for single-node OpenShift clusters.

- Description

- The Lifecycle Agent provides local lifecycle management services for single-node OpenShift clusters.

- Limits and requirements

- The Lifecycle Agent is not applicable in multi-node clusters or single-node OpenShift clusters with an additional worker.

- Requires a persistent volume.
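For orientation only, an image-based upgrade is driven by an ImageBasedUpgrade CR on the single-node OpenShift cluster. The sketch below shows the CR in its initial Idle stage; the seed image reference and version are hypothetical placeholders, and additional fields (for example, for backup content or extra manifests) are omitted.

```yaml
apiVersion: lca.openshift.io/v1
kind: ImageBasedUpgrade
metadata:
  name: upgrade
spec:
  stage: Idle
  seedImageRef:
    version: 4.16.0                                         # hypothetical target version
    image: registry.example.com/example/seed-image:4.16.0   # hypothetical seed image
```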

3.2.3.13. Reference design deployment components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure the hub cluster with Red Hat Advanced Cluster Management (RHACM).

3.2.3.13.1. Red Hat Advanced Cluster Management (RHACM)

- New in this release

- You can now use PolicyGenerator resources and Red Hat Advanced Cluster Management (RHACM) to deploy policies for managed clusters with GitOps ZTP. This is a Technology Preview feature.

- Description

RHACM provides Multi Cluster Engine (MCE) installation and ongoing lifecycle management functionality for deployed clusters. You declaratively specify configurations and upgrades with Policy CRs and apply the policies to clusters with the RHACM policy controller as managed by Topology Aware Lifecycle Manager.

- GitOps Zero Touch Provisioning (ZTP) uses the MCE feature of RHACM

- Configuration, upgrades, and cluster status are managed with the RHACM policy controller

During installation, RHACM can apply labels to individual nodes as configured in the SiteConfig custom resource (CR).

- Limits and requirements

- A single hub cluster supports up to 3500 deployed single-node OpenShift clusters with 5 Policy CRs bound to each cluster.

- Engineering considerations

- Use RHACM policy hub-side templating to better scale cluster configuration. You can significantly reduce the number of policies by using a single group policy or small number of general group policies where the group and per-cluster values are substituted into templates.

- Cluster specific configuration: managed clusters typically have some number of configuration values that are specific to the individual cluster. These configurations should be managed using RHACM policy hub-side templating with values pulled from ConfigMap CRs based on the cluster name.

- To save CPU resources on managed clusters, policies that apply static configurations should be unbound from managed clusters after GitOps ZTP installation of the cluster.
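The following PolicyGenTemplate sketch combines two of the practices described in this section: a 10-minute compliant evaluation interval and a hub-side template that resolves a per-cluster VLAN from a ConfigMap keyed by the managed cluster name. The PolicyGenTemplate name, namespace, binding label, ConfigMap name (site-data), and key format are hypothetical; the empty namespace argument assumes the ConfigMap lives in the same namespace as the generated policies.

```yaml
apiVersion: ran.openshift.io/v1
kind: PolicyGenTemplate
metadata:
  name: example-group-du-sno          # hypothetical name
  namespace: example-ztp-group        # hypothetical namespace
spec:
  bindingRules:
    example-group-du-sno: ""          # binds to clusters carrying this label
  mcp: "master"
  # Re-check compliant policies only every 10 minutes to save managed-cluster CPU.
  evaluationInterval:
    compliant: 10m
    noncompliant: 10s
  sourceFiles:
    - fileName: SriovNetwork.yaml
      policyName: "config-policy"
      metadata:
        name: "sriov-nw-du-fh"
      spec:
        resourceName: du_fh
        # Hub-side template: resolved on the hub, one value per managed cluster.
        vlan: '{{hub fromConfigMap "" "site-data" (printf "%s-fh-vlan" .ManagedClusterName) | toInt hub}}'
```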

3.2.3.13.2. Topology Aware Lifecycle Manager (TALM)

- New in this release

- No reference design updates in this release

- Description

- Managed updates

TALM is an Operator that runs only on the hub cluster for managing how changes (including cluster and Operator upgrades, configuration, and so on) are rolled out to the network. TALM does the following:

- Progressively applies updates to fleets of clusters in user-configurable batches by using Policy CRs.

- Adds ztp-done labels or other user-configurable labels on a per-cluster basis

- Precaching for single-node OpenShift clusters

TALM supports optional precaching of OpenShift Container Platform, OLM Operator, and additional user images to single-node OpenShift clusters before initiating an upgrade.

A PreCachingConfig custom resource is available for specifying optional precaching configurations.
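A minimal sketch of a PreCachingConfig CR follows, assuming the ran.openshift.io/v1alpha1 TALM API. The names, registry host, image digests, Operator channels, and space value are hypothetical placeholders, not values from the reference configuration.

```yaml
apiVersion: ran.openshift.io/v1alpha1
kind: PreCachingConfig
metadata:
  name: exampleconfig            # hypothetical
  namespace: exampleconfig-ns    # hypothetical
spec:
  overrides:
    # Optional overrides of what TALM would otherwise derive from the policies.
    platformImage: quay.io/openshift-release-dev/ocp-release@sha256:<digest>
    operatorsIndexes:
      - registry.example.com:5000/custom-redhat-operators:1.0.0
    operatorsPackagesAndChannels:
      - local-storage-operator: stable
      - ptp-operator: stable
      - sriov-network-operator: stable
  # Disk space that must be free on the cluster before precaching starts.
  spaceRequired: 30 Gi
  # Skip images whose names match these patterns.
  excludePrecachePatterns:
    - aws
    - vsphere
  # Extra user images, for example workload images, to precache.
  additionalImages:
    - quay.io/exampleconfig/application1@sha256:<digest>
```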

- Limits and requirements

- TALM supports concurrent cluster deployment in batches of 400

- Precaching and backup features are for single-node OpenShift clusters only.

- Engineering considerations

- The PreCachingConfig CR is optional and does not need to be created if you only want to precache platform-related (OpenShift and OLM Operator) images. The PreCachingConfig CR must be applied before you reference it in the ClusterGroupUpgrade CR.
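The following ClusterGroupUpgrade sketch shows how batching and precaching fit together. The cluster names, policy name, and PreCachingConfig reference are hypothetical; maxConcurrency sets how many clusters TALM remediates per batch, and timeout is the overall budget in minutes.

```yaml
apiVersion: ran.openshift.io/v1alpha1
kind: ClusterGroupUpgrade
metadata:
  name: cgu-platform-upgrade     # hypothetical
  namespace: default
spec:
  clusters:
    - example-sno-1
    - example-sno-2
  managedPolicies:
    - du-upgrade-platform-upgrade  # hypothetical Policy name
  # Precache images before the upgrade itself is enabled.
  preCaching: true
  # Must refer to a PreCachingConfig CR that already exists on the hub.
  preCachingConfigRef:
    name: exampleconfig
    namespace: exampleconfig-ns
  remediationStrategy:
    maxConcurrency: 2   # clusters remediated in parallel per batch
    timeout: 240        # minutes allowed for the whole rollout
  # Leave disabled while precaching runs; set to true to start remediation.
  enable: false
```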

3.2.3.13.3. GitOps and GitOps ZTP plugins

- New in this release

- No reference design updates in this release

- Description

GitOps and GitOps ZTP plugins provide a GitOps-based infrastructure for managing cluster deployment and configuration. Cluster definitions and configurations are maintained as a declarative state in Git. ZTP plugins provide support for generating installation CRs from the SiteConfig CR and for automatically wrapping configuration CRs in policies based on PolicyGenTemplate CRs.

You can deploy and manage multiple versions of OpenShift Container Platform on managed clusters using the baseline reference configuration CRs. You can also use custom CRs alongside the baseline CRs.

- Limits

- 300 SiteConfig CRs per ArgoCD application. You can use multiple applications to achieve the maximum number of clusters supported by a single hub cluster.

- Content in the /source-crs folder in Git overrides content provided in the GitOps ZTP plugin container. Git takes precedence in the search path. Add the /source-crs folder in the same directory as the kustomization.yaml file, which includes the PolicyGenTemplate as a generator.

Note: Alternative locations for the /source-crs directory are not supported in this context.
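A sketch of the expected layout, assuming a hypothetical policygentemplates directory and hypothetical PolicyGenTemplate file names: the /source-crs folder sits next to kustomization.yaml, and kustomization.yaml lists the PolicyGenTemplate files as generators.

```yaml
# Hypothetical repository layout (comments only):
#   policygentemplates/
#     kustomization.yaml          <- this file
#     common-ranGen.yaml          <- PolicyGenTemplate
#     group-du-sno-ranGen.yaml    <- PolicyGenTemplate
#     source-crs/                 <- user CRs; override plugin copies of the same name
#       MyCustomCR.yaml           <- hypothetical user-provided CR
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
generators:
  - common-ranGen.yaml
  - group-du-sno-ranGen.yaml
```

A PolicyGenTemplate in this directory can then reference the custom CR by file name in its sourceFiles list, exactly as it would reference a baseline CR.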

- Engineering considerations

- To avoid confusion or unintentional overwriting of files when updating content, use unique and distinguishable names for user-provided CRs in the /source-crs folder and extra manifests in Git.

- The SiteConfig CR allows multiple extra-manifest paths. When files with the same name are found in multiple directory paths, the last file found takes precedence. This allows you to put the full set of version-specific Day 0 manifests (extra-manifests) in Git and reference them from the SiteConfig CR. With this feature, you can deploy multiple OpenShift Container Platform versions to managed clusters simultaneously.

- The extraManifestPath field of the SiteConfig CR is deprecated in OpenShift Container Platform 4.15 and later. Use the new extraManifests.searchPaths field instead.
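The following partial SiteConfig sketch shows extraManifests.searchPaths in place of the deprecated extraManifestPath field. The cluster name, domain, image set, and directory names are hypothetical, and other required SiteConfig fields are omitted for brevity.

```yaml
apiVersion: ran.openshift.io/v1
kind: SiteConfig
metadata:
  name: "example-sno"
  namespace: "example-sno"
spec:
  baseDomain: "example.com"
  clusterImageSetNameRef: "openshift-4.16"
  clusters:
    - clusterName: "example-sno"
      extraManifests:
        # Directories are searched in order; when two directories contain a
        # file with the same name, the copy found last takes precedence.
        searchPaths:
          - extra-manifest/     # version-specific Day 0 manifests
          - custom-manifest/    # user overrides with distinct or overriding names
```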

3.2.3.13.4. Agent-based installer

- New in this release

- No reference design updates in this release

- Description

Agent-based installer (ABI) provides installation capabilities without centralized infrastructure. The installation program creates an ISO image that you mount to the server. When the server boots, it installs OpenShift Container Platform and the supplied extra manifests.

Note: You can also use ABI to install OpenShift Container Platform clusters without a hub cluster. An image registry is still required when you use ABI in this manner.

Agent-based installer (ABI) is an optional component.

- Limits and requirements

- You can supply a limited set of additional manifests at installation time.

- You must include MachineConfiguration CRs that are required by the RAN DU use case.

- Engineering considerations

- ABI provides a baseline OpenShift Container Platform installation.

- You install Day 2 Operators and the remainder of the RAN DU use case configurations after installation.

3.2.4. Telco RAN distributed unit (DU) reference configuration CRs

Use the following custom resources (CRs) to configure and deploy OpenShift Container Platform clusters with the telco RAN DU profile. Some of the CRs are optional depending on your requirements. CR fields you can change are annotated in the CR with YAML comments.

You can extract the complete set of RAN DU CRs from the ztp-site-generate container image. See Preparing the GitOps ZTP site configuration repository for more information.

3.2.4.1. Day 2 Operators reference CRs

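| Component | Reference CR | Optional | New in this release |
|---|---|---|---|
| Cluster logging | ClusterLogForwarder.yaml | No | No |
| Cluster logging | ClusterLogging.yaml | No | No |
| Cluster logging | ClusterLogNS.yaml | No | No |
| Cluster logging | ClusterLogOperGroup.yaml | No | No |
| Cluster logging | ClusterLogSubscription.yaml | No | No |
| Lifecycle Agent | ImageBasedUpgrade.yaml | Yes | Yes |
| Lifecycle Agent | LcaSubscription.yaml | Yes | Yes |
| Lifecycle Agent | LcaSubscriptionNS.yaml | Yes | Yes |
| Lifecycle Agent | LcaSubscriptionOperGroup.yaml | Yes | Yes |
| Local Storage Operator | StorageClass.yaml | Yes | No |
| Local Storage Operator | StorageLV.yaml | Yes | No |
| Local Storage Operator | StorageNS.yaml | Yes | No |
| Local Storage Operator | StorageOperGroup.yaml | Yes | No |
| Local Storage Operator | StorageSubscription.yaml | Yes | No |
| LVM Storage | LVMOperatorStatus.yaml | No | Yes |
| LVM Storage | StorageLVMCluster.yaml | No | Yes |
| LVM Storage | StorageLVMSubscription.yaml | No | Yes |
| LVM Storage | StorageLVMSubscriptionNS.yaml | No | Yes |
| LVM Storage | StorageLVMSubscriptionOperGroup.yaml | No | Yes |
| Node Tuning Operator | PerformanceProfile.yaml | No | No |
| Node Tuning Operator | TunedPerformancePatch.yaml | No | No |
| PTP fast event notifications | PtpConfigBoundaryForEvent.yaml | Yes | Yes |
| PTP fast event notifications | PtpConfigForHAForEvent.yaml | Yes | Yes |
| PTP fast event notifications | PtpConfigMasterForEvent.yaml | Yes | Yes |
| PTP fast event notifications | PtpConfigSlaveForEvent.yaml | Yes | Yes |
| PTP fast event notifications | PtpOperatorConfigForEvent.yaml | Yes | No |
| PTP Operator | PtpConfigBoundary.yaml | No | No |
| PTP Operator | PtpConfigDualCardGmWpc.yaml | No | No |
| PTP Operator | PtpConfigThreeCardGmWpc.yaml | No | No |
| PTP Operator | PtpConfigForHA.yaml | No | Yes |
| PTP Operator | PtpConfigGmWpc.yaml | No | No |
| PTP Operator | PtpConfigSlave.yaml | No | No |
| PTP Operator | PtpOperatorConfig.yaml | No | No |
| PTP Operator | PtpSubscription.yaml | No | No |
| PTP Operator | PtpSubscriptionNS.yaml | No | No |
| PTP Operator | PtpSubscriptionOperGroup.yaml | No | No |
| SR-IOV FEC Operator | AcceleratorsNS.yaml | Yes | No |
| SR-IOV FEC Operator | AcceleratorsOperGroup.yaml | Yes | No |
| SR-IOV FEC Operator | AcceleratorsSubscription.yaml | Yes | No |
| SR-IOV FEC Operator | SriovFecClusterConfig.yaml | Yes | No |
| SR-IOV Operator | SriovNetwork.yaml | No | No |
| SR-IOV Operator | SriovNetworkNodePolicy.yaml | No | No |
| SR-IOV Operator | SriovOperatorConfig.yaml | No | No |
| SR-IOV Operator | SriovOperatorConfigForSNO.yaml | No | Yes |
| SR-IOV Operator | SriovSubscription.yaml | No | No |
| SR-IOV Operator | SriovSubscriptionNS.yaml | No | No |
| SR-IOV Operator | SriovSubscriptionOperGroup.yaml | No | No |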

3.2.4.2. Cluster tuning reference CRs

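| Component | Reference CR | Optional | New in this release |
|---|---|---|---|
| Cluster capabilities | example-sno.yaml | No | No |
| Disabling network diagnostics | DisableSnoNetworkDiag.yaml | No | No |
| Monitoring configuration | ReduceMonitoringFootprint.yaml | No | No |
| OperatorHub | 09-openshift-marketplace-ns.yaml | No | No |
| OperatorHub | DefaultCatsrc.yaml | No | No |
| OperatorHub | DisableOLMPprof.yaml | No | No |
| OperatorHub | DisconnectedICSP.yaml | No | No |
| OperatorHub | OperatorHub.yaml | Yes | No |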

3.2.4.3. Machine configuration reference CRs

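| Component | Reference CR | Optional | New in this release |
|---|---|---|---|
| Container runtime (crun) | enable-crun-master.yaml | No | No |
| Container runtime (crun) | enable-crun-worker.yaml | No | No |
| Disabling CRI-O wipe | 99-crio-disable-wipe-master.yaml | No | No |
| Disabling CRI-O wipe | 99-crio-disable-wipe-worker.yaml | No | No |
| Enabling kdump | 06-kdump-master.yaml | No | No |
| Enabling kdump | 06-kdump-worker.yaml | No | No |
| Kubelet configuration and container mount hiding | 01-container-mount-ns-and-kubelet-conf-master.yaml | No | No |
| Kubelet configuration and container mount hiding | 01-container-mount-ns-and-kubelet-conf-worker.yaml | No | No |
| One-shot time sync | 99-sync-time-once-master.yaml | No | No |
| One-shot time sync | 99-sync-time-once-worker.yaml | No | No |
| SCTP | 03-sctp-machine-config-master.yaml | No | No |
| SCTP | 03-sctp-machine-config-worker.yaml | No | No |
| Set RCU Normal | 08-set-rcu-normal-master.yaml | No | No |
| Set RCU Normal | 08-set-rcu-normal-worker.yaml | No | No |
| SR-IOV related kernel arguments | | No | Yes |
| SR-IOV related kernel arguments | | No | No |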

3.2.4.4. YAML reference

The following is a complete reference for all the custom resources (CRs) that make up the telco RAN DU 4.16 reference configuration.

3.2.4.4.1. Day 2 Operators reference YAML

ClusterLogForwarder.yaml

ClusterLogging.yaml

ClusterLogNS.yaml

ClusterLogOperGroup.yaml

ClusterLogSubscription.yaml

ImageBasedUpgrade.yaml

LcaSubscription.yaml

LcaSubscriptionNS.yaml

LcaSubscriptionOperGroup.yaml

StorageClass.yaml

StorageLV.yaml

StorageNS.yaml

StorageOperGroup.yaml

StorageSubscription.yaml

LVMOperatorStatus.yaml

StorageLVMCluster.yaml

StorageLVMSubscription.yaml

StorageLVMSubscriptionNS.yaml

StorageLVMSubscriptionOperGroup.yaml

PerformanceProfile.yaml

TunedPerformancePatch.yaml

PtpConfigBoundaryForEvent.yaml

PtpConfigForHAForEvent.yaml

PtpConfigMasterForEvent.yaml

PtpConfigSlaveForEvent.yaml

PtpOperatorConfigForEvent.yaml

PtpConfigBoundary.yaml

PtpConfigDualCardGmWpc.yaml

PtpConfigThreeCardGmWpc.yaml

PtpConfigForHA.yaml

PtpConfigGmWpc.yaml

PtpConfigSlave.yaml

PtpOperatorConfig.yaml

PtpSubscription.yaml

PtpSubscriptionNS.yaml

PtpSubscriptionOperGroup.yaml

AcceleratorsNS.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: vran-acceleration-operators
  annotations: {}
```

AcceleratorsOperGroup.yaml

AcceleratorsSubscription.yaml

SriovFecClusterConfig.yaml

SriovNetwork.yaml

SriovNetworkNodePolicy.yaml

SriovOperatorConfig.yaml

SriovOperatorConfigForSNO.yaml

SriovSubscription.yaml

SriovSubscriptionNS.yaml

SriovSubscriptionOperGroup.yaml

3.2.4.4.2. Cluster tuning reference YAML

example-sno.yaml

DisableSnoNetworkDiag.yaml

ReduceMonitoringFootprint.yaml

09-openshift-marketplace-ns.yaml

DefaultCatsrc.yaml

DisableOLMPprof.yaml

DisconnectedICSP.yaml

OperatorHub.yaml

3.2.4.4.3. Machine configuration reference YAML

enable-crun-master.yaml

enable-crun-worker.yaml

99-crio-disable-wipe-master.yaml

99-crio-disable-wipe-worker.yaml

06-kdump-master.yaml

06-kdump-worker.yaml

01-container-mount-ns-and-kubelet-conf-master.yaml

01-container-mount-ns-and-kubelet-conf-worker.yaml

99-sync-time-once-master.yaml

99-sync-time-once-worker.yaml

03-sctp-machine-config-master.yaml

03-sctp-machine-config-worker.yaml

08-set-rcu-normal-master.yaml

08-set-rcu-normal-worker.yaml

3.2.5. Telco RAN DU reference configuration software specifications

The following information describes the telco RAN DU reference design specification (RDS) validated software versions.

3.2.5.1. Telco RAN DU 4.16 validated software components

The Red Hat telco RAN DU 4.16 solution has been validated using the following Red Hat software products for OpenShift Container Platform managed clusters and hub clusters.

| Component | Software version |
|---|---|
| Managed cluster version | 4.16 |
| Cluster Logging Operator | 6.0 |
| Local Storage Operator | 4.16 |
| PTP Operator | 4.16 |
| SRIOV Operator | 4.16 |
| Node Tuning Operator | 4.16 |
| Logging Operator | 4.16 |
| SRIOV-FEC Operator | 2.9 |

| Component | Software version |
|---|---|
| Hub cluster version | 4.16 |
| GitOps ZTP plugin | 4.16 |
| Red Hat Advanced Cluster Management (RHACM) | 2.10, 2.11 |
| Red Hat OpenShift GitOps | 1.16 |
| Topology Aware Lifecycle Manager (TALM) | 4.16 |

3.3. Telco core reference design specification

3.3.1. Telco core 4.16 reference design overview

The telco core reference design specification (RDS) configures an OpenShift Container Platform cluster running on commodity hardware to host telco core workloads.

3.3.2. Telco core 4.16 use model overview

The telco core reference design specification (RDS) describes a platform that supports large-scale telco applications including control plane functions such as signaling and aggregation. It also includes some centralized data plane functions, for example, user plane functions (UPF). These functions generally require scalability, complex networking support, and resilient software-defined storage, and they have performance requirements that are less stringent and constrained than those of far-edge deployments such as RAN.

Telco core use model architecture

The networking prerequisites for telco core functions are diverse and encompass an array of networking attributes and performance benchmarks. IPv6 is mandatory, with dual-stack configurations being prevalent. Certain functions demand maximum throughput and transaction rates, necessitating user plane networking support such as DPDK. Other functions adhere to conventional cloud-native patterns and can use solutions such as OVN-K, kernel networking, and load balancing.

Telco core clusters are configured as standard three control plane clusters with worker nodes configured with the stock non-real-time (non-RT) kernel. To support workloads with varying networking and performance requirements, worker nodes are segmented using MachineConfigPool CRs, for example, to separate non-user data plane nodes from high-throughput nodes. To support the required telco operational features, the clusters have a standard set of Operator Lifecycle Manager (OLM) Day 2 Operators installed.
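A minimal sketch of worker segmentation with a custom MachineConfigPool follows. The pool name worker-dpdk and its labels are hypothetical. The pool inherits the base worker MachineConfigs and adds any that target the worker-dpdk role, and nodes join it through a role label.

```yaml
# Hypothetical pool for high-throughput (DPDK) worker nodes.
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfigPool
metadata:
  name: worker-dpdk
  labels:
    # Label that a PerformanceProfile machineConfigPoolSelector can match.
    pools.operator.machineconfiguration.openshift.io/worker-dpdk: ""
spec:
  # Apply the base worker MachineConfigs plus worker-dpdk-specific ones.
  machineConfigSelector:
    matchExpressions:
      - key: machineconfiguration.openshift.io/role
        operator: In
        values: [worker, worker-dpdk]
  # Nodes carrying this role label are managed by this pool.
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/worker-dpdk: ""
```

A node is moved into the pool by labeling it, for example with oc label node <node_name> node-role.kubernetes.io/worker-dpdk="". Because the label change causes the node to be reconfigured by the new pool, plan pool membership before workloads are scheduled.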

3.3.2.1. Common baseline model

The following configurations and use model description are applicable to all telco core use cases.

- Cluster

The cluster conforms to these requirements:

- High-availability (3+ supervisor nodes) control plane

- Non-schedulable supervisor nodes

- Multiple MachineConfigPool resources

- Storage

- Core use cases require persistent storage as provided by external OpenShift Data Foundation. For more information, see the "Storage" subsection in "Reference core design components".

- Networking

Telco core clusters networking conforms to these requirements:

- Dual stack IPv4/IPv6

- Fully disconnected: Clusters do not have access to public networking at any point in their lifecycle.

- Multiple networks: Segmented networking provides isolation between OAM, signaling, and storage traffic.

- Cluster network type: OVN-Kubernetes is required for IPv6 support.

Core clusters have multiple layers of networking supported by underlying RHCOS, SR-IOV Operator, Load Balancer, and other components detailed in the following "Networking" section. At a high level these layers include:

Cluster networking: The cluster network configuration is defined and applied through the installation configuration. You can update the configuration on Day 2 by using the NMState Operator. You can use the initial configuration to establish:

- Host interface configuration

- Active/Active Bonding (Link Aggregation Control Protocol (LACP))

Secondary or additional networks: OpenShift CNI is configured through the Network additionalNetworks or NetworkAttachmentDefinition CRs.

- MACVLAN

- Application Workload: User plane networking is running in cloud-native network functions (CNFs).

- Service Mesh

- Use of Service Mesh by telco CNFs is very common. It is expected that all core clusters will include a Service Mesh implementation. Service Mesh implementation and configuration is outside the scope of this specification.

3.3.2.1.1. Engineering Considerations common use model

The following engineering considerations are relevant for the common use model.

- Worker nodes

- Intel 3rd Generation Xeon (IceLake) CPUs or better when supported by OpenShift Container Platform, or CPUs with the silicon security bug (Spectre and similar) mitigations turned off. Skylake and older CPUs can experience 40% transaction performance drops when Spectre and similar mitigations are enabled.

- AMD EPYC Zen 4 CPUs (Genoa, Bergamo) or AMD EPYC Zen 5 CPUs (Turin) when supported by OpenShift Container Platform.

- Intel Sierra Forest CPUs when supported by OpenShift Container Platform.

- IRQ balancing is enabled on worker nodes. The PerformanceProfile sets globallyDisableIrqLoadBalancing: false. Guaranteed QoS pods are annotated to ensure isolation as described in the "CPU partitioning and performance tuning" subsection in the "Reference core design components" section.

- All nodes

- Hyper-Threading is enabled on all nodes

- CPU architecture is x86_64 only

- Nodes are running the stock (non-RT) kernel

- Nodes are not configured for workload partitioning

The balance of node configuration between power management and maximum performance varies between MachineConfigPools in the cluster. This configuration is consistent for all nodes within a MachineConfigPool.

- CPU partitioning

- CPU partitioning is configured using the PerformanceProfile and applied on a per MachineConfigPool basis. See the "CPU partitioning and performance tuning" subsection in "Reference core design components".
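The following PerformanceProfile is a sketch of per-pool CPU partitioning under stated assumptions: a hypothetical worker-dpdk pool and a hypothetical 2-socket host with 32 cores per socket and Hyper-Threading, where CPU N and CPU N+64 are sibling threads. Real CPU numbering differs between platforms, so check it with lscpu -e before copying any CPU list, and size the reserved set by using the systemReserved guidance rather than this one-core-per-NUMA-node minimum.

```yaml
apiVersion: performance.openshift.io/v2
kind: PerformanceProfile
metadata:
  name: worker-dpdk               # hypothetical
spec:
  cpu:
    # Core 0 of each NUMA node (CPU 0 on node 0, CPU 32 on node 1) plus
    # their sibling threads (64 and 96), so siblings stay in one pool.
    reserved: "0,32,64,96"
    # All remaining CPUs; reserved + isolated together cover every CPU.
    isolated: "1-31,33-63,65-95,97-127"
  # Telco core keeps IRQ balancing on; guaranteed pods opt out per pod.
  globallyDisableIrqLoadBalancing: false
  # Stock (non-RT) kernel on telco core worker nodes.
  realTimeKernel:
    enabled: false
  workloadHints:
    realTime: false               # set to true if the workload is real-time capable
    highPowerConsumption: false
    perPodPowerManagement: false
  # Must match a label on the target MachineConfigPool.
  machineConfigPoolSelector:
    pools.operator.machineconfiguration.openshift.io/worker-dpdk: ""
  nodeSelector:
    node-role.kubernetes.io/worker-dpdk: ""
```

Applying or changing this profile drains and reboots the nodes in the pool, so a separate profile per MachineConfigPool keeps each change scoped to one class of workers.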

3.3.2.1.2. Application workloads

Application workloads running on core clusters might include a mix of high-performance networking CNFs and traditional best-effort or burstable pod workloads.

Guaranteed QoS scheduling is available to pods that require exclusive or dedicated use of CPUs due to performance or security requirements. Typically pods hosting high-performance and low-latency-sensitive Cloud Native Functions (CNFs) utilizing user plane networking with DPDK necessitate the exclusive utilization of entire CPUs. This is accomplished through node tuning and guaranteed Quality of Service (QoS) scheduling. For pods that require exclusive use of CPUs, be aware of the potential implications of hyperthreaded systems and configure them to request multiples of 2 CPUs when the entire core (2 hyperthreads) must be allocated to the pod.

Pods running network functions that do not require the high throughput and low latency networking are typically scheduled with best-effort or burstable QoS and do not require dedicated or isolated CPU cores.

- Description of limits

  - CNF applications should conform to the latest version of the Red Hat Best Practices for Kubernetes guide.

  - For a mix of best-effort and burstable QoS pods:

    - Guaranteed QoS pods might be used but require correct configuration of reserved and isolated CPUs in the PerformanceProfile.

    - Guaranteed QoS pods must include annotations for fully isolating CPUs.

    - Best-effort and burstable pods are not guaranteed exclusive use of a CPU. Workloads might be preempted by other workloads, operating system daemons, or kernel tasks.

  - Avoid exec probes unless there is no viable alternative.

    - Do not use exec probes if a CNF is using CPU pinning.

    - Use other probe implementations, for example httpGet or tcpSocket.

Note: Startup probes require minimal resources during steady-state operation. The limitation on exec probes applies primarily to liveness and readiness probes.
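As a sketch of the probe guidance, the following container uses an httpGet liveness probe and a tcpSocket readiness probe instead of exec probes. The pod name, image, port, and /healthz path are hypothetical and depend on what the CNF actually serves.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-cnf               # hypothetical
spec:
  containers:
    - name: app
      image: registry.example.com/example-cnf:latest   # hypothetical
      ports:
        - containerPort: 8080
      # Kubelet performs the HTTP check itself; no process is spawned
      # inside the container, unlike an exec probe.
      livenessProbe:
        httpGet:
          path: /healthz
          port: 8080
        periodSeconds: 10
      # A TCP connect check is the lightest option when no HTTP endpoint exists.
      readinessProbe:
        tcpSocket:
          port: 8080
        periodSeconds: 10
```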

- Signaling workload

- Signaling workloads typically use SCTP, REST, gRPC, or similar TCP or UDP protocols.

- The transactions per second (TPS) are on the order of hundreds of thousands using secondary CNI (multus) configured as MACVLAN or SR-IOV.

- Signaling workloads run in pods with either guaranteed or burstable QoS.

3.3.3. Telco core reference design components

The following sections describe the various OpenShift Container Platform components and configurations that you use to configure and deploy clusters to run telco core workloads.

3.3.3.1. CPU partitioning and performance tuning

- New in this release

- In this release, OpenShift Container Platform deployments use Control Groups version 2 (cgroup v2) by default. As a consequence, performance profiles in a cluster use cgroups v2 for the underlying resource management layer.

- Description

- CPU partitioning allows for the separation of sensitive workloads from general-purpose workloads, auxiliary processes, interrupts, and driver work queues to achieve improved performance and latency. The CPUs allocated to those auxiliary processes are referred to as reserved in the following sections. In hyperthreaded systems, a CPU is one hyperthread.

- Limits and requirements

The operating system needs a certain amount of CPU to perform all the support tasks including kernel networking.

- A system with only user plane networking applications (DPDK) needs at least one core (2 hyperthreads when enabled) reserved for the operating system and the infrastructure components.

- A system with Hyper-Threading enabled must always put all core sibling threads to the same pool of CPUs.

- The set of reserved and isolated cores must include all CPU cores.

- Core 0 of each NUMA node must be included in the reserved CPU set.

Isolated cores might be impacted by interrupts. The following annotations must be attached to the pod if guaranteed QoS pods require full use of the CPU:

```yaml
cpu-load-balancing.crio.io: "disable"
cpu-quota.crio.io: "disable"
irq-load-balancing.crio.io: "disable"
```

When per-pod power management is enabled with PerformanceProfile.workloadHints.perPodPowerManagement, the following annotations must also be attached to the pod if guaranteed QoS pods require full use of the CPU:

```yaml
cpu-c-states.crio.io: "disable"
cpu-freq-governor.crio.io: "performance"
```
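The following pod is a minimal sketch of how these annotations combine with a Guaranteed QoS resource specification. It assumes a PerformanceProfile named worker-dpdk (hypothetical), for which the Node Tuning Operator creates a RuntimeClass named performance-worker-dpdk; the pod name, node label, image, and sizes are also hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-dpdk-cnf          # hypothetical
  annotations:
    # Fully isolate the pod's exclusive CPUs.
    cpu-load-balancing.crio.io: "disable"
    cpu-quota.crio.io: "disable"
    irq-load-balancing.crio.io: "disable"
    # Add only when perPodPowerManagement is enabled in the PerformanceProfile.
    cpu-c-states.crio.io: "disable"
    cpu-freq-governor.crio.io: "performance"
spec:
  # RuntimeClass named performance-<PerformanceProfile name>.
  runtimeClassName: performance-worker-dpdk
  nodeSelector:
    node-role.kubernetes.io/worker-dpdk: ""
  containers:
    - name: dpdk-app
      image: registry.example.com/example-dpdk-cnf:latest   # hypothetical
      resources:
        # Equal integer CPU requests and limits give Guaranteed QoS with
        # exclusive CPUs; an even count keeps both hyperthreads of each core.
        requests:
          cpu: "4"
          memory: 4Gi
        limits:
          cpu: "4"
          memory: 4Gi
```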

- Engineering considerations

- The minimum reserved capacity (systemReserved) required can be found by following the guidance in "Which amount of CPU and memory are recommended to reserve for the system in OpenShift 4 nodes?".

- The actual required reserved CPU capacity depends on the cluster configuration and workload attributes.

- This reserved CPU value must be rounded up to a full core (2 hyperthreads) alignment.

- Changes to the CPU partitioning drain and reboot the nodes in the MCP.

- The reserved CPUs reduce the pod density, because the reserved CPUs are removed from the allocatable capacity of the OpenShift node.

- The real-time workload hint should be enabled if the workload is real-time capable.

- Hardware without Interrupt Request (IRQ) affinity support impacts isolated CPUs. To ensure that pods with guaranteed CPU QoS have full use of allocated CPU, all hardware in the server must support IRQ affinity.

- OVS dynamically manages its cpuset configuration to adapt to network traffic needs. You do not need to reserve additional CPUs for handling high network throughput on the primary CNI.

- If workloads running on the cluster require cgroups v1, you can configure nodes to use cgroups v1. You can make this configuration as part of initial cluster deployment. For more information, see Enabling Linux cgroup v1 during installation in the Additional resources section.

3.3.3.2. Service Mesh

- Description

- Telco core CNFs typically require a service mesh implementation. The specific features and performance required are dependent on the application. The selection of service mesh implementation and configuration is outside the scope of this documentation. The impact of service mesh on cluster resource utilization and performance, including additional latency introduced into pod networking, must be accounted for in the overall solution engineering.

3.3.3.3. Networking

OpenShift Container Platform networking is an ecosystem of features, plugins, and advanced networking capabilities that extend Kubernetes networking with the advanced networking-related features that your cluster needs to manage its network traffic for one or multiple hybrid clusters.

3.3.3.3.1. Cluster Network Operator

- New in this release

- No reference design updates in this release

- Description

The Cluster Network Operator (CNO) deploys and manages the cluster network components including the default OVN-Kubernetes network plugin during cluster installation. The CNO allows for configuring primary interface MTU settings, OVN gateway configurations to use node routing tables for pod egress, and additional secondary networks such as MACVLAN.

In support of network traffic separation, multiple network interfaces are configured through the CNO. Traffic steering to these interfaces is configured through static routes applied by using the NMState Operator. To ensure that pod traffic is properly routed, OVN-K is configured with the routingViaHost option enabled. This setting uses the kernel routing table and the applied static routes rather than OVN for pod egress traffic.

The Whereabouts CNI plugin is used to provide dynamic IPv4 and IPv6 addressing for additional pod network interfaces without the use of a DHCP server.
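A sketch of the two pieces described above follows: the relevant fragment of the cluster Network operator configuration with routingViaHost enabled, and a MACVLAN secondary network that gets its addresses from Whereabouts. The namespace, the bond0.100 parent interface, and the IPv6 range are hypothetical; a dual-stack deployment would add an IPv4 range as well.

```yaml
# Fragment of the cluster-scoped Network operator configuration.
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  defaultNetwork:
    type: OVNKubernetes
    ovnKubernetesConfig:
      gatewayConfig:
        # Send pod egress through the host kernel routing table and its
        # static routes instead of OVN.
        routingViaHost: true
---
# Hypothetical MACVLAN secondary network with Whereabouts IPAM (no DHCP).
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-signaling
  namespace: example-cnf
spec:
  config: |-
    {
      "cniVersion": "0.3.1",
      "name": "macvlan-signaling",
      "type": "macvlan",
      "master": "bond0.100",
      "mode": "bridge",
      "ipam": {
        "type": "whereabouts",
        "range": "fd00:10:100::/64"
      }
    }
```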

- Limits and requirements

- OVN-Kubernetes is required for IPv6 support.

- Large MTU cluster support requires connected network equipment to be set to the same or larger value.

- MACVLAN and IPVLAN cannot co-locate on the same main interface due to their reliance on the same underlying kernel mechanism, specifically the rx_handler. This handler allows a third-party module to process incoming packets before the host processes them, and only one such handler can be registered per network interface. Because both MACVLAN and IPVLAN need to register their own rx_handler to function, they conflict and cannot coexist on the same interface. See ipvlan/ipvlan_main.c#L82 and net/macvlan.c#L1260 for details. Alternative NIC configurations include splitting the shared NIC into multiple NICs or using a single dual-port NIC.

Important: Splitting the shared NIC into multiple NICs or using a single dual-port NIC has not been validated with the telco core reference design.

- Single-stack IP clusters are not validated.

- Engineering considerations

- Pod egress traffic is handled by the kernel routing table with the routingViaHost option. Appropriate static routes must be configured in the host.
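A minimal sketch of a host static route applied with the NMState Operator follows. The policy name, node selector, prefixes, next hop, and bond0.100 interface are hypothetical; table-id 254 is the Linux main routing table, which is the table that routingViaHost egress consults.

```yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: worker-signaling-routes   # hypothetical
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    routes:
      config:
        # Steer traffic for the remote signaling prefix out of the
        # signaling VLAN instead of the default gateway.
        - destination: 2001:db8:200::/64
          next-hop-address: 2001:db8:100::1
          next-hop-interface: bond0.100
          metric: 150
          table-id: 254
```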

3.3.3.3.2. Load balancer

- New in this release

- In OpenShift Container Platform 4.17, frr-k8s is now the default and fully supported Border Gateway Protocol (BGP) backend. The deprecated frr BGP mode is still available. You should upgrade clusters to use the frr-k8s backend.

- Description

MetalLB is a load-balancer implementation that uses standard routing protocols for bare-metal clusters. It enables a Kubernetes service to get an external IP address which is also added to the host network for the cluster.

Note: Some use cases might require features not available in MetalLB, for example stateful load balancing. Where necessary, use an external third-party load balancer. Selection and configuration of an external load balancer is outside the scope of this document. When you use an external third-party load balancer, ensure that it meets all performance and resource utilization requirements.

- Limits and requirements

- Stateful load balancing is not supported by MetalLB. An alternate load balancer implementation must be used if this is a requirement for workload CNFs.

- The networking infrastructure must ensure that the external IP address is routable from clients to the host network for the cluster.

- Engineering considerations

- MetalLB is used in BGP mode only for core use case models.

- For core use models, MetalLB is supported only when you set routingViaHost=true in the ovnKubernetesConfig.gatewayConfig specification of the OVN-Kubernetes network plugin.

- BGP configuration in MetalLB varies depending on the requirements of the network and peers.

- Address pools can be configured as needed, allowing variation in addresses, aggregation length, auto assignment, and other relevant parameters.

- MetalLB uses BGP for announcing routes only. Only the transmitInterval and minimumTtl parameters are relevant in this mode. Other parameters in the BFD profile should remain close to the default settings. Shorter values might lead to errors and impact performance.
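A sketch of a BGP-mode MetalLB configuration with a BFD profile follows, assuming the metallb-system namespace. The pool, peer, ASNs, and addresses are hypothetical. The BFD profile sets only transmitInterval and minimumTtl, to values intended to match the MetalLB defaults, so that everything else stays at default.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: signaling-pool
  namespace: metallb-system
spec:
  addresses:
    - 2001:db8:300::/120          # hypothetical; a pool can also list IPv4 ranges
  autoAssign: false               # services must request this pool explicitly
---
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: signaling-adv
  namespace: metallb-system
spec:
  ipAddressPools:
    - signaling-pool
  aggregationLengthV6: 128        # announce individual /128 service addresses
---
apiVersion: metallb.io/v1beta1
kind: BFDProfile
metadata:
  name: core-bfd
  namespace: metallb-system
spec:
  # Only these two are tuned in BGP-only mode; keep them near the defaults.
  transmitInterval: 300           # milliseconds
  minimumTtl: 254
---
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: core-router
  namespace: metallb-system
spec:
  peerAddress: 2001:db8:100::1    # hypothetical upstream router
  peerASN: 64501
  myASN: 64500
  bfdProfile: core-bfd
```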

3.3.3.3.3. SR-IOV

- New in this release

- With this release, you can use the SR-IOV Network Operator to configure QinQ (802.1ad and 802.1q) tagging. QinQ tagging provides efficient traffic management by enabling the use of both inner and outer VLAN tags. Outer VLAN tagging is hardware accelerated, leading to faster network performance. The update extends beyond the SR-IOV Network Operator itself. You can now configure QinQ on externally managed VFs by setting the outer VLAN tag using nmstate. QinQ support varies across different NICs. For a comprehensive list of known limitations for specific NIC models, see the official documentation.

- With this release, you can configure the SR-IOV Network Operator to drain nodes in parallel during network policy updates, which speeds up the setup process. This can save significant time, especially for large cluster deployments that previously took hours or even days to complete.
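Parallel draining is configured per group of nodes. The following is a sketch, assuming the SriovNetworkPoolConfig CR with a maxUnavailable field; the pool name, node selector, and value are hypothetical. Here up to two selected nodes can be drained for SR-IOV policy updates at the same time.

```yaml
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkPoolConfig
metadata:
  name: pool-1                    # hypothetical
  namespace: openshift-sriov-network-operator
spec:
  # Nodes in this group that may be drained concurrently.
  maxUnavailable: 2
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/worker: ""
```

A higher value shortens rollouts but removes more capacity at once, so size it against the spare capacity of the selected nodes.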

- Description

- SR-IOV enables physical network interfaces (PFs) to be divided into multiple virtual functions (VFs). VFs can then be assigned to multiple pods to achieve higher throughput performance while keeping the pods isolated. The SR-IOV Network Operator provisions and manages SR-IOV CNI, network device plugin, and other components of the SR-IOV stack.

- Limits and requirements

- The network interface controllers supported are listed in Supported devices

- SR-IOV and IOMMU enablement in BIOS: The SR-IOV Network Operator automatically enables IOMMU on the kernel command line.

- SR-IOV VFs do not receive link state updates from PF. If link down detection is needed, it must be done at the protocol level.

- MultiNetworkPolicy CRs can be applied to netdevice networks only. This is because the implementation uses the iptables tool, which cannot manage vfio interfaces.

- Engineering considerations

- SR-IOV interfaces in vfio mode are typically used to enable additional secondary networks for applications that require high throughput or low latency.

- If you exclude the SriovOperatorConfig CR from your deployment, the CR will not be created automatically.
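A minimal sketch of a vfio-mode secondary network follows. The policy and network names, resource name, node label, PF name ens1f0, VF count, VLAN, and target namespace are hypothetical. The vfio-pci device type shown suits NICs whose DPDK drivers bind through vfio; some vendors' NICs use a different device type for DPDK, so check the supported devices list.

```yaml
# Create 8 VFs on PF ens1f0 of the selected nodes and bind them to vfio-pci.
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: dpdk-nic1
  namespace: openshift-sriov-network-operator
spec:
  resourceName: dpdk_nic1
  nodeSelector:
    node-role.kubernetes.io/worker-dpdk: ""
  numVfs: 8
  nicSelector:
    pfNames:
      - ens1f0
  deviceType: vfio-pci
---
# Expose the VF pool as a secondary network in the CNF namespace.
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetwork
metadata:
  name: dpdk-net1
  namespace: openshift-sriov-network-operator
spec:
  resourceName: dpdk_nic1
  networkNamespace: example-cnf
  vlan: 200
```

Because these interfaces are vfio devices, MultiNetworkPolicy CRs do not apply to this network.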

3.3.3.3.4. NMState Operator

- New in this release

- No reference design updates in this release

- Description

- The NMState Operator provides a Kubernetes API for performing network configurations across the cluster’s nodes. It enables network interface configurations, static IPs and DNS, VLANs, trunks, bonding, static routes, MTU, and enabling promiscuous mode on the secondary interfaces. The cluster nodes periodically report on the state of each node’s network interfaces to the API server.

- Limits and requirements

- Not applicable

- Engineering considerations

- The initial networking configuration is applied using NMStateConfig content in the installation CRs. The NMState Operator is used only when needed for network updates.

- When SR-IOV virtual functions are used for host networking, use the NMState Operator with NodeNetworkConfigurationPolicy CRs to configure those VF interfaces, for example, VLANs and the MTU.
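As a sketch of configuring a host-networking VF, the following policy brings up a VLAN on a VF and sets the MTU on both interfaces. The VF name ens1f0v0, the VLAN ID, the MTU, and the node selector are hypothetical. The connected network equipment must accept the same or a larger MTU.

```yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: worker-vf-vlan300         # hypothetical
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
      # The VF itself carries the larger MTU so the VLAN on top can use it.
      - name: ens1f0v0
        type: ethernet
        state: up
        mtu: 9000
      # Tagged host interface on VLAN 300 over the VF.
      - name: ens1f0v0.300
        type: vlan
        state: up
        mtu: 9000
        vlan:
          base-iface: ens1f0v0
          id: 300
```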

3.3.3.4. Logging

- New in this release

- Cluster Logging Operator 6.0 is new in this release. Update your existing implementation to adapt to the new version of the API. You must remove the old Operator artifacts by using policies. For more information, see Additional resources.

- Description

- The Cluster Logging Operator enables collection and shipping of logs off the node for remote archival and analysis. The reference configuration ships audit and infrastructure logs to a remote archive by using Kafka.

- Limits and requirements

- Not applicable

- Engineering considerations

- The impact on cluster CPU use depends on the number or size of logs generated and the amount of log filtering configured.

- The reference configuration does not include shipping of application logs. Inclusion of application logs in the configuration requires evaluation of the application logging rate and sufficient additional CPU resources allocated to the reserved set.
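A minimal sketch of a Logging 6.0 ClusterLogForwarder that ships audit and infrastructure logs (but not application logs) to Kafka follows, assuming the observability.openshift.io/v1 API. The forwarder name, service account, output name, broker URL, and topic are hypothetical, and the role bindings that let the service account collect these log types are not shown.

```yaml
apiVersion: observability.openshift.io/v1
kind: ClusterLogForwarder
metadata:
  name: instance
  namespace: openshift-logging
spec:
  # Service account the collector runs as (hypothetical name).
  serviceAccount:
    name: collector
  outputs:
    - name: kafka-remote
      type: kafka
      kafka:
        # Placeholder broker address; the path selects the topic.
        url: tcp://kafka.example.com:9092/telco-logs
  pipelines:
    - name: audit-infra-to-kafka
      # Application logs are intentionally not collected here.
      inputRefs:
        - audit
        - infrastructure
      outputRefs:
        - kafka-remote
```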

3.3.3.5. Power Management

- New in this release

- No reference design updates in this release

- Description

The Performance Profile can be used to configure a cluster in a high power, low power, or mixed mode. The choice of power mode depends on the characteristics of the workloads running on the cluster, particularly how sensitive they are to latency. Configure the maximum latency for a low-latency pod by using the per-pod power management C-states feature.

For more information, see Configuring power saving for nodes.
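One way to express the three power modes is through the workloadHints fields of the PerformanceProfile. The mapping in the following sketch is an assumption based on the workload hint combinations described in Configuring power saving for nodes. It is not a validated RDS setting. The function returns a merge patch for an existing profile. Applying such a change can trigger a node reboot.

```python
import json

# Assumed mapping of the power modes onto PerformanceProfile workload hints.
WORKLOAD_HINTS = {
    # High power: lowest latency; deep C-states are disabled on the node.
    "high-power": {"realTime": True, "highPowerConsumption": True, "perPodPowerManagement": False},
    # Low power: low-latency tuning with C-states and frequency scaling left enabled.
    "low-power": {"realTime": True, "highPowerConsumption": False, "perPodPowerManagement": False},
    # Mixed: power saving by default; individual pods opt in through CRI-O annotations.
    "mixed": {"realTime": True, "highPowerConsumption": False, "perPodPowerManagement": True},
}


def performance_profile_patch(mode: str) -> dict:
    """Return a spec.workloadHints merge patch for one of the modes above."""
    if mode not in WORKLOAD_HINTS:
        raise ValueError(f"unknown power mode {mode!r}; expected one of {sorted(WORKLOAD_HINTS)}")
    hints = dict(WORKLOAD_HINTS[mode])
    # Guard against edits to the table: per-pod power management relies on
    # C-states staying available, so it is not combined with high power.
    if hints["perPodPowerManagement"] and hints["highPowerConsumption"]:
        raise ValueError("perPodPowerManagement cannot be combined with highPowerConsumption")
    return {"spec": {"workloadHints": hints}}


if __name__ == "__main__":
    # Usable with: oc patch performanceprofile <name> --type merge -p '<json>'
    print(json.dumps(performance_profile_patch("mixed")))
```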

- Limits and requirements

- Power configuration relies on appropriate BIOS configuration, for example, enabling C-states and P-states. Configuration varies between hardware vendors.

- Engineering considerations

- Latency: To ensure that latency-sensitive workloads meet their requirements, you need either a high-power configuration or a per-pod power management configuration. Per-pod power management is only available for Guaranteed QoS pods with dedicated pinned CPUs.
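The following sketch illustrates the per-pod power management requirement. It checks whether a pod spec is Guaranteed QoS with whole, pinnable CPUs, and shows a pod carrying the CRI-O power annotations. The pod name, image, runtime class name performance-example-profile, and the 10 µs maximum latency value are hypothetical. The check is simplified: it inspects only regular containers and compares memory quantities textually, so "1Gi" and "1024Mi" are treated as different.

```python
from decimal import Decimal


def millicores(q: str) -> int:
    """Convert a CPU quantity such as "4", "1.5" or "500m" to millicores."""
    if q.endswith("m"):
        return int(q[:-1])
    return int(Decimal(q) * 1000)


def eligible_for_per_pod_power(pod: dict) -> bool:
    """True if every container is Guaranteed QoS with a whole number of CPUs."""
    for c in pod["spec"]["containers"]:
        res = c.get("resources", {})
        req, lim = res.get("requests", {}), res.get("limits", {})
        if "cpu" not in lim or "memory" not in lim:
            return False
        # Omitted requests default to the limits in Kubernetes.
        if millicores(req.get("cpu", lim["cpu"])) != millicores(lim["cpu"]):
            return False
        if req.get("memory", lim["memory"]) != lim["memory"]:
            return False
        if millicores(lim["cpu"]) % 1000:  # fractional CPUs cannot be pinned
            return False
    return True


# Hypothetical pod; the annotations are consumed by CRI-O when per-pod power management is enabled.
low_latency_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
        "name": "latency-sensitive",
        "annotations": {
            "cpu-c-states.crio.io": "max_latency:10",
            "cpu-freq-governor.crio.io": "performance",
        },
    },
    "spec": {
        "runtimeClassName": "performance-example-profile",
        "containers": [{
            "name": "app",
            "image": "registry.example.com/app:latest",
            "resources": {"limits": {"cpu": "4", "memory": "2Gi"}},
        }],
    },
}

if __name__ == "__main__":
    print(eligible_for_per_pod_power(low_latency_pod))
```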


3.3.3.6. Storage

- Overview

Cloud native storage services can be provided by multiple solutions, including OpenShift Data Foundation from Red Hat or third-party solutions.

OpenShift Data Foundation is a Ceph-based software-defined storage solution for containers. It provides block storage, file system storage, and on-premises object storage, which can be dynamically provisioned for both persistent and non-persistent data requirements. Telco core applications require persistent storage.

Note: Storage data might not be encrypted in flight. To reduce risk, isolate the storage network from other cluster networks. The storage network must not be reachable, or routable, from other cluster networks. Only nodes directly attached to the storage network should be allowed to gain access to it.

3.3.3.6.1. OpenShift Data Foundation

- New in this release

- No reference design updates in this release

- Description

- Red Hat OpenShift Data Foundation is a software-defined storage service for containers. For telco core clusters, storage support is provided by OpenShift Data Foundation storage services running externally to the application workload cluster. OpenShift Data Foundation supports separation of storage traffic using secondary CNI networks.

- Limits and requirements

- In an IPv4/IPv6 dual-stack networking environment, OpenShift Data Foundation uses IPv4 addressing. For more information, see Support OpenShift dual stack with OpenShift Data Foundation using IPv4.

- Engineering considerations

- OpenShift Data Foundation network traffic should be isolated from other traffic on a dedicated network, for example, by using VLAN isolation.
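A secondary CNI network on a dedicated VLAN is one way to keep storage traffic isolated. The sketch below builds a NetworkAttachmentDefinition that attaches pods to a host VLAN sub-interface with macvlan and whereabouts IPAM. The network name, namespace, VLAN interface ens2f0.300, and subnet are hypothetical. This shows the general mechanism and does not cover how a particular OpenShift Data Foundation deployment consumes the network.

```python
import ipaddress
import json


def storage_nad(name: str, namespace: str, vlan_iface: str, cidr: str) -> dict:
    """NetworkAttachmentDefinition for an isolated storage VLAN (macvlan + whereabouts)."""
    ipaddress.ip_network(cidr)  # raises ValueError on a malformed subnet or host bits set
    cni_config = {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "macvlan",
        "master": vlan_iface,  # host VLAN sub-interface dedicated to storage traffic
        "mode": "bridge",
        "ipam": {"type": "whereabouts", "range": cidr},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # The CNI configuration is embedded as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


if __name__ == "__main__":
    # Hypothetical names and addressing.
    print(json.dumps(storage_nad("storage-vlan", "example-ns", "ens2f0.300", "192.168.30.0/24"), indent=2))
```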

3.3.3.6.2. Other Storage

Other storage solutions can be used to provide persistent storage for core clusters. The configuration and integration of these solutions is outside the scope of the telco core RDS. Integration of the storage solution into the core cluster must include correct sizing and performance analysis to ensure the storage meets overall performance and resource utilization requirements.

3.3.3.7. Monitoring

- New in this release

- No reference design updates in this release

- Description

The Cluster Monitoring Operator (CMO) is included by default on all OpenShift clusters and provides monitoring (metrics, dashboards, and alerting) for the platform components and, optionally, for user projects.

Configuration of the monitoring operator allows for customization, including:

- Default retention period

- Custom alert rules

The default handling of pod CPU and memory metrics is based on upstream Kubernetes cAdvisor and makes a tradeoff that prefers handling of stale data over metric accuracy. This leads to spiky data that can falsely trigger alerts over user-specified thresholds. OpenShift supports an opt-in dedicated service monitor feature that creates an additional set of pod CPU and memory metrics that do not suffer from the spiky behavior. For additional information, see this solution guide.

In addition to the default configuration, the following metrics are expected to be configured for telco core clusters:

- Pod CPU and memory metrics and alerts for user workloads

- Limits and requirements

- Monitoring configuration must enable the dedicated service monitor feature for accurate representation of pod metrics
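The following sketch generates the cluster-monitoring-config ConfigMap that carries both the retention period and the dedicated service monitor opt-in. It assumes the k8sPrometheusAdapter.dedicatedServiceMonitors field. That field has changed across releases, so verify it against the monitoring configuration reference for your version. The 15d retention value is hypothetical.

```python
import json


def monitoring_configmap(retention: str, enable_user_workload: bool = True) -> dict:
    """cluster-monitoring-config with retention and dedicated service monitors enabled."""
    config = {
        "enableUserWorkload": enable_user_workload,  # user-workload pod metrics and alerts
        "prometheusK8s": {"retention": retention},
        # Assumed field path for the opt-in dedicated service monitors.
        "k8sPrometheusAdapter": {"dedicatedServiceMonitors": {"enabled": True}},
    }
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "cluster-monitoring-config", "namespace": "openshift-monitoring"},
        # config.yaml is parsed as YAML; JSON is a subset of YAML, so json.dumps suffices.
        "data": {"config.yaml": json.dumps(config, indent=2)},
    }


if __name__ == "__main__":
    print(json.dumps(monitoring_configmap("15d"), indent=2))  # hypothetical retention
```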

- Engineering considerations

- The Prometheus retention period is specified by the user. The value used is a tradeoff between operational requirements for maintaining historical data on the cluster against CPU and storage resources. Longer retention periods increase the need for storage and require additional CPU to manage the indexing of data.
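To make the retention tradeoff concrete, the upstream Prometheus storage documentation gives a rough sizing rule: disk space ≈ retention time in seconds × ingested samples per second × bytes per sample, with roughly 1 to 2 bytes per sample. The sketch below applies that rule to one Prometheus replica. The 50,000 samples/s ingestion rate is a hypothetical figure. On a real cluster the rate can be measured, for example with rate(prometheus_tsdb_head_samples_appended_total[1h]).

```python
def prometheus_disk_bytes(retention_days: float, samples_per_second: float,
                          bytes_per_sample: float = 2.0) -> float:
    """Upstream Prometheus rule of thumb for local TSDB size of one replica."""
    retention_seconds = retention_days * 86400
    return retention_seconds * samples_per_second * bytes_per_sample


if __name__ == "__main__":
    # Hypothetical ingestion rate; doubling retention doubles the estimate.
    for days in (7, 15, 30):
        gib = prometheus_disk_bytes(days, 50_000) / 2**30
        print(f"{days:>2} days: ~{gib:.0f} GiB per replica")
```

Because the estimate is linear in retention, halving the retention period halves the storage needed, while the CPU cost of indexing grows with the amount of data kept.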

3.3.3.8. Scheduling

- New in this release

- No reference design updates in this release

- Description

- The scheduler is a cluster-wide component responsible for selecting the right node for a given workload. It is a core part of the platform and does not require any specific configuration in the common deployment scenarios. However, there are a few specific use cases described in the following section. NUMA-aware scheduling can be enabled through the NUMA Resources Operator. For more information, see Scheduling NUMA-aware workloads.

- Limits and requirements

- The default scheduler does not understand the NUMA locality of workloads. It only knows about the sum of all free resources on a worker node. This might cause workloads to be rejected when scheduled to a node with the Topology Manager policy set to single-numa-node or restricted. For example, consider a pod requesting 6 CPUs that is scheduled to an empty node with 4 CPUs per NUMA node. The total allocatable capacity of the node is 8 CPUs, so the scheduler places the pod there. However, node-local admission fails because only 4 CPUs are available in each NUMA node.

- All clusters with multi-NUMA nodes are required to use the NUMA Resources Operator. The machineConfigPoolSelector of the NUMA Resources Operator must select all nodes where NUMA-aligned scheduling is needed.

- All machine config pools must have a consistent hardware configuration; for example, all nodes are expected to have the same NUMA zone count.
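The 6-CPU example above can be reproduced with a toy model. The model contrasts the default scheduler's node-wide view with the per-NUMA check that the kubelet Topology Manager applies under the single-numa-node policy. It is a deliberate simplification: it models CPUs only, ignores the restricted policy's exact semantics, and stands in for, rather than reproduces, the NUMA Resources Operator's scheduler.

```python
from dataclasses import dataclass


@dataclass
class WorkerNode:
    # Free CPUs on each NUMA node, for example [4, 4] for two 4-CPU NUMA nodes.
    free_cpus_per_numa: list[int]

    def default_scheduler_fits(self, cpus: int) -> bool:
        """Default scheduler view: only the node-wide total is considered."""
        return sum(self.free_cpus_per_numa) >= cpus

    def single_numa_node_admits(self, cpus: int) -> bool:
        """Kubelet Topology Manager (single-numa-node): all CPUs must come from one NUMA node."""
        return any(free >= cpus for free in self.free_cpus_per_numa)

    def numa_aware_fits(self, cpus: int) -> bool:
        """NUMA-aware scheduling filters on the same per-NUMA view the kubelet enforces."""
        return self.single_numa_node_admits(cpus)


if __name__ == "__main__":
    node = WorkerNode(free_cpus_per_numa=[4, 4])
    print(node.default_scheduler_fits(6))   # True: 8 free CPUs in total
    print(node.single_numa_node_admits(6))  # False: at most 4 CPUs per NUMA node
    print(node.numa_aware_fits(6))          # False: the pod is never placed here
```

The mismatch between the first two checks is exactly the rejection case described above; a NUMA-aware scheduler avoids it by filtering nodes with the per-NUMA view before binding.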

- Engineering considerations

- Pods might require annotations for correct scheduling and isolation. For more information on annotations, see CPU partitioning and performance tuning.

- You can configure SR-IOV virtual function NUMA affinity to be ignored during scheduling by using the excludeTopology field in the SriovNetworkNodePolicy CR.
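A minimal sketch of a SriovNetworkNodePolicy with excludeTopology set follows. The policy name, PF name ens5f0, VF count, and resource name are hypothetical. The node selector label is one commonly used with the SR-IOV Network Operator, but yours may differ.

```python
import json


def sriov_policy(name: str, pf: str, num_vfs: int, resource_name: str,
                 exclude_topology: bool = True) -> dict:
    """SriovNetworkNodePolicy whose VFs are advertised without NUMA affinity."""
    return {
        "apiVersion": "sriovnetwork.openshift.io/v1",
        "kind": "SriovNetworkNodePolicy",
        "metadata": {"name": name, "namespace": "openshift-sriov-network-operator"},
        "spec": {
            "resourceName": resource_name,
            "nodeSelector": {"feature.node.kubernetes.io/network-sriov.capable": "true"},
            "numVfs": num_vfs,
            "nicSelector": {"pfNames": [pf]},
            "deviceType": "netdevice",
            # Ignore the VF NUMA node when aligning resources for the pod.
            "excludeTopology": exclude_topology,
        },
    }


if __name__ == "__main__":
    # Hypothetical policy name, PF, VF count, and resource name.
    print(json.dumps(sriov_policy("telco-vfs", "ens5f0", 8, "telco_vfs"), indent=2))
```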

3.3.3.9. Installation

- New in this release

- No reference design updates in this release

- Description

Telco core clusters can be installed using the Agent-based Installer (ABI). This method allows users to install OpenShift Container Platform on bare-metal servers without requiring additional servers or VMs to manage the installation. The ABI installer can be run on any system, for example, a laptop, to generate an ISO installation image. This ISO is used as the installation media for the cluster supervisor nodes. Progress can be monitored using the ABI tool from any system with network connectivity to the supervisor node’s API interfaces.

The ABI installation method has the following characteristics:

- Installation from declarative CRs

- Does not require additional servers to support installation

- Supports install in disconnected environment

- Limits and requirements

- Disconnected installation requires a reachable registry with all required content mirrored.

- Engineering considerations

- Networking configuration should be applied as NMState configuration during installation in preference to day-2 configuration by using the NMState Operator.
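Install-time NMState configuration for ABI is supplied per host in the networkConfig section of agent-config.yaml. The sketch below generates a single-host AgentConfig with a static IPv4 address, DNS server, and default route. It also checks that the gateway lies inside the host subnet. The host name, interface, MAC address, and addresses are hypothetical. The file is then consumed by openshift-install agent create image together with install-config.yaml.

```python
import ipaddress
import json


def agent_host(hostname, iface, mac, cidr_ip, gateway, dns):
    """One hosts[] entry whose networkConfig is NMState applied at install time."""
    addr = ipaddress.ip_interface(cidr_ip)  # for example "192.168.111.80/24"
    if ipaddress.ip_address(gateway) not in addr.network:
        raise ValueError(f"gateway {gateway} is outside {addr.network}")
    return {
        "hostname": hostname,
        "interfaces": [{"name": iface, "macAddress": mac}],
        "networkConfig": {
            "interfaces": [{
                "name": iface,
                "type": "ethernet",
                "state": "up",
                "mac-address": mac,
                "ipv4": {
                    "enabled": True,
                    "dhcp": False,
                    "address": [{"ip": str(addr.ip), "prefix-length": addr.network.prefixlen}],
                },
            }],
            "dns-resolver": {"config": {"server": [dns]}},
            "routes": {"config": [{
                "destination": "0.0.0.0/0",
                "next-hop-address": gateway,
                "next-hop-interface": iface,
            }]},
        },
    }


def agent_config(cluster_name, hosts):
    first_ip = hosts[0]["networkConfig"]["interfaces"][0]["ipv4"]["address"][0]["ip"]
    return {
        "apiVersion": "v1beta1",
        "kind": "AgentConfig",
        "metadata": {"name": cluster_name},
        "rendezvousIP": first_ip,  # the first host coordinates the installation
        "hosts": hosts,
    }


if __name__ == "__main__":
    # Hypothetical host values; save the output as agent-config.yaml (JSON is valid YAML).
    hosts = [agent_host("control-plane-0", "eno1", "00:ef:44:21:e6:a5",
                        "192.168.111.80/24", "192.168.111.1", "192.168.111.1")]
    print(json.dumps(agent_config("telco-core", hosts), indent=2))
```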

3.3.3.10. Security

- New in this release

- No reference design updates in this release

- Description

Telco operators are security conscious and require clusters to be hardened against multiple attack vectors. Within OpenShift Container Platform, there is no single component or feature responsible for securing a cluster. This section provides details of security-oriented features and configuration for the use models covered in this specification.

- SecurityContextConstraints: All workload pods should be run with the restricted-v2 or restricted SCC.

- Seccomp: All pods should be run with the RuntimeDefault (or stronger) seccomp profile.

- Rootless DPDK pods: Many user-plane networking (DPDK) CNFs require pods to run with root privileges. With this feature, a conformant DPDK pod can be run without requiring root privileges. Rootless DPDK pods create a TAP device in a rootless pod that injects traffic from a DPDK application to the kernel.

- Storage: The storage network should be isolated and non-routable to other cluster networks. See the "Storage" section for additional details.
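As a pre-deployment aid, the following sketch flags container security settings that a restricted-style policy rejects. It checks for a missing RuntimeDefault or Localhost seccomp profile, missing runAsNonRoot, privilege escalation, capabilities that are not dropped, and privileged mode. It is a simplified check modeled on the Kubernetes restricted Pod Security Standard, not the exact admission logic of the restricted-v2 SCC, which can default some of these fields for you. The example pod is hypothetical.

```python
def restricted_violations(pod: dict) -> list[str]:
    """Return settings that a restricted-style policy would reject (simplified)."""
    problems = []
    pod_ctx = pod["spec"].get("securityContext", {})
    for c in pod["spec"]["containers"]:
        ctx = c.get("securityContext", {})
        name = c["name"]
        # Container-level settings override pod-level ones.
        seccomp = ctx.get("seccompProfile", pod_ctx.get("seccompProfile", {}))
        if seccomp.get("type") not in ("RuntimeDefault", "Localhost"):
            problems.append(f"{name}: seccompProfile.type must be RuntimeDefault or Localhost")
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            problems.append(f"{name}: runAsNonRoot must be true")
        if ctx.get("allowPrivilegeEscalation", True):
            problems.append(f"{name}: allowPrivilegeEscalation must be false")
        if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
            problems.append(f"{name}: capabilities.drop must include ALL")
        if ctx.get("privileged", False):
            problems.append(f"{name}: privileged must not be set")
    return problems


# Hypothetical compliant pod.
compliant_pod = {
    "spec": {
        "securityContext": {"runAsNonRoot": True, "seccompProfile": {"type": "RuntimeDefault"}},
        "containers": [{
            "name": "cnf",
            "securityContext": {
                "allowPrivilegeEscalation": False,
                "capabilities": {"drop": ["ALL"]},
            },
        }],
    }
}

if __name__ == "__main__":
    print(restricted_violations(compliant_pod) or "no violations")
```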

- Limits and requirements

Rootless DPDK pods require the following additional configuration steps:

- Configure the TAP plugin with the container_t SELinux context.

- Enable the container_use_devices SELinux boolean on the hosts.
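The first step, configuring the TAP plugin with the container_t SELinux context, can be sketched as a NetworkAttachmentDefinition for the tap CNI plugin. The network name and namespace are hypothetical. The selinuxcontext and multiQueue keys follow the tap plugin configuration used for rootless DPDK in OpenShift, but confirm them against the documentation for your release.

```python
import json


def tap_nad(name: str, namespace: str) -> dict:
    """NetworkAttachmentDefinition for the tap CNI plugin used by rootless DPDK pods."""
    cni_config = {
        "cniVersion": "0.4.0",
        "name": name,
        "type": "tap",
        "multiQueue": True,
        # container_t lets the unprivileged DPDK process open the TAP device.
        "selinuxcontext": "system_u:system_r:container_t:s0",
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"config": json.dumps(cni_config)},
    }


if __name__ == "__main__":
    print(json.dumps(tap_nad("tap-one", "example-dpdk"), indent=2))  # hypothetical names
```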


- Engineering considerations

- For rootless DPDK pod support, the container_use_devices SELinux boolean must be enabled on the host for the TAP device to be created. This introduces a security risk that is acceptable for short- to mid-term use. Other solutions will be explored.
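One way to enable the boolean on every host in a pool is a MachineConfig that runs setsebool from a oneshot systemd unit at boot, before the kubelet starts. The sketch below generates such a MachineConfig. The MachineConfig and unit names are hypothetical, and the worker role is an assumption. Because setsebool runs without -P, the setting is not persisted by SELinux and is re-applied on every boot.

```python
import json


def selinux_boolean_mc(role: str = "worker") -> dict:
    """MachineConfig with a oneshot unit that enables container_use_devices at boot."""
    unit = "\n".join([
        "[Unit]",
        "Description=Enable the container_use_devices SELinux boolean",
        "Before=kubelet.service",
        "",
        "[Service]",
        "Type=oneshot",
        "ExecStart=/usr/sbin/setsebool container_use_devices on",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "MachineConfig",
        "metadata": {
            "name": f"99-{role}-setsebool",  # hypothetical name
            "labels": {"machineconfiguration.openshift.io/role": role},
        },
        "spec": {
            "config": {
                "ignition": {"version": "3.2.0"},
                "systemd": {"units": [
                    {"name": "setsebool.service", "enabled": True, "contents": unit}
                ]},
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(selinux_boolean_mc(), indent=2))
```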


3.3.3.11. Scalability

- New in this release

- No reference design updates in this release

- Description

Clusters will scale to the sizing listed in the limits and requirements section.

Scaling of workloads is described in the use model section.

- Limits and requirements

- Cluster scales to at least 120 nodes

- Engineering considerations

- Not applicable

3.3.3.12. Additional configuration

3.3.3.12.1. Disconnected environment

- Description

Telco core clusters are expected to be installed in networks without direct access to the internet. All container images needed to install, configure, and operate the cluster must be available in a disconnected registry. This includes OpenShift Container Platform images, day-2 Operator Lifecycle Manager (OLM) Operator images, and application workload images. The use of a disconnected environment provides multiple benefits, for example:

- Limiting access to the cluster for security

- Curated content: The registry is populated based on curated and approved updates for the clusters

- Limits and requirements

- A unique name is required for all custom CatalogSources. Do not reuse the default catalog names.

- A valid time source must be configured as part of cluster installation.
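The unique-name requirement can be enforced when CatalogSources are generated. The sketch below rejects the names of the default Red Hat catalogs and builds a gRPC CatalogSource that points at a mirrored index image. The catalog name, display name, and registry path are hypothetical. In disconnected clusters the default sources are typically also turned off through the OperatorHub resource.

```python
import json

# Default catalog names shipped with OpenShift; custom catalogs must not reuse them.
DEFAULT_CATALOGS = {"redhat-operators", "certified-operators",
                    "community-operators", "redhat-marketplace"}


def catalog_source(name: str, index_image: str, display_name: str) -> dict:
    """gRPC CatalogSource for a mirrored index image, with a unique name."""
    if name in DEFAULT_CATALOGS:
        raise ValueError(f"{name!r} reuses a default catalog name; choose a unique name")
    return {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "CatalogSource",
        "metadata": {"name": name, "namespace": "openshift-marketplace"},
        "spec": {"sourceType": "grpc", "image": index_image, "displayName": display_name},
    }


if __name__ == "__main__":
    # Hypothetical mirror registry path and catalog name.
    cs = catalog_source("telco-core-operators",
                        "mirror.example.com:5000/redhat/redhat-operator-index:v4.16",
                        "Telco core mirrored Operators")
    print(json.dumps(cs, indent=2))
```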

- Engineering considerations

- Not applicable

3.3.3.12.2. Kernel

- New in this release

- No reference design updates in this release

- Description

The user can install the following kernel modules by using MachineConfig to provide extended kernel functionality to CNFs:

- sctp

- ip_gre

- ip6_tables

- ip6t_REJECT

- ip6table_filter

- ip6table_mangle

- iptable_filter

- iptable_mangle

- iptable_nat

- xt_multiport

- xt_owner

- xt_REDIRECT

- xt_statistic

- xt_TCPMSS
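A minimal sketch of such a MachineConfig follows. It writes the module names to a file under /etc/modules-load.d, which systemd-modules-load reads at boot, one module per line. When sctp is requested, it also adds an empty /etc/modprobe.d/sctp-blacklist.conf, because SCTP is blacklisted by default on RHCOS. The MachineConfig name and the cnf-modules.conf file name are hypothetical, and the worker role is an assumption.

```python
import json
from urllib.parse import quote


def kernel_modules_mc(modules: list[str], role: str = "worker") -> dict:
    """MachineConfig that loads the given kernel modules at boot."""
    files = []
    if "sctp" in modules:
        # An empty file replaces the default SCTP blacklist.
        files.append({"path": "/etc/modprobe.d/sctp-blacklist.conf", "mode": 0o644,
                      "overwrite": True, "contents": {"source": "data:,"}})
    files.append({
        "path": "/etc/modules-load.d/cnf-modules.conf",  # hypothetical file name
        "mode": 0o644,
        "overwrite": True,
        # Ignition takes a data URL; newlines between module names are percent-encoded.
        "contents": {"source": "data:," + quote("".join(f"{m}\n" for m in modules))},
    })
    return {
        "apiVersion": "machineconfiguration.openshift.io/v1",
        "kind": "MachineConfig",
        "metadata": {
            "name": f"99-{role}-load-cnf-modules",  # hypothetical name
            "labels": {"machineconfiguration.openshift.io/role": role},
        },
        "spec": {"config": {"ignition": {"version": "3.2.0"}, "storage": {"files": files}}},
    }


if __name__ == "__main__":
    print(json.dumps(kernel_modules_mc(["sctp", "ip_gre", "xt_TCPMSS"]), indent=2))
```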

- Limits and requirements