9.5. AWS 中托管集群的灾难恢复

您可以将托管集群恢复到 Amazon Web Services (AWS) 中的同一区域。例如,当升级管理集群时,需要灾难恢复,且托管集群处于只读状态。

托管的 control plane 只是一个技术预览功能。技术预览功能不受红帽产品服务等级协议(SLA)支持,且功能可能并不完整。红帽不推荐在生产环境中使用它们。这些技术预览功能可以使用户提早试用新的功能,并有机会在开发阶段提供反馈意见。

有关红帽技术预览功能支持范围的更多信息,请参阅以下链接:

灾难恢复过程涉及以下步骤:

- 在源集群中备份托管集群

- 在目标管理集群中恢复托管集群

- 从源管理集群中删除托管的集群

您的工作负载在此过程中保持运行。集群 API 可能会在一段时间内不可用,但不会影响 worker 节点上运行的服务。

源管理集群和目标管理集群必须具有 --external-dns 标志才能维护 API 服务器 URL。这样,服务器 URL 以 https://api-sample-hosted.sample-hosted.aws.openshift.com 结尾。请参见以下示例:

示例:外部 DNS 标记

--external-dns-provider=aws \ --external-dns-credentials=<path_to_aws_credentials_file> \ --external-dns-domain-filter=<basedomain>

--external-dns-provider=aws \

--external-dns-credentials=<path_to_aws_credentials_file> \

--external-dns-domain-filter=<basedomain>

如果您没有包含 --external-dns 标志来维护 API 服务器 URL,则无法迁移托管集群。

9.5.1. 备份和恢复过程概述

备份和恢复过程按如下方式工作:

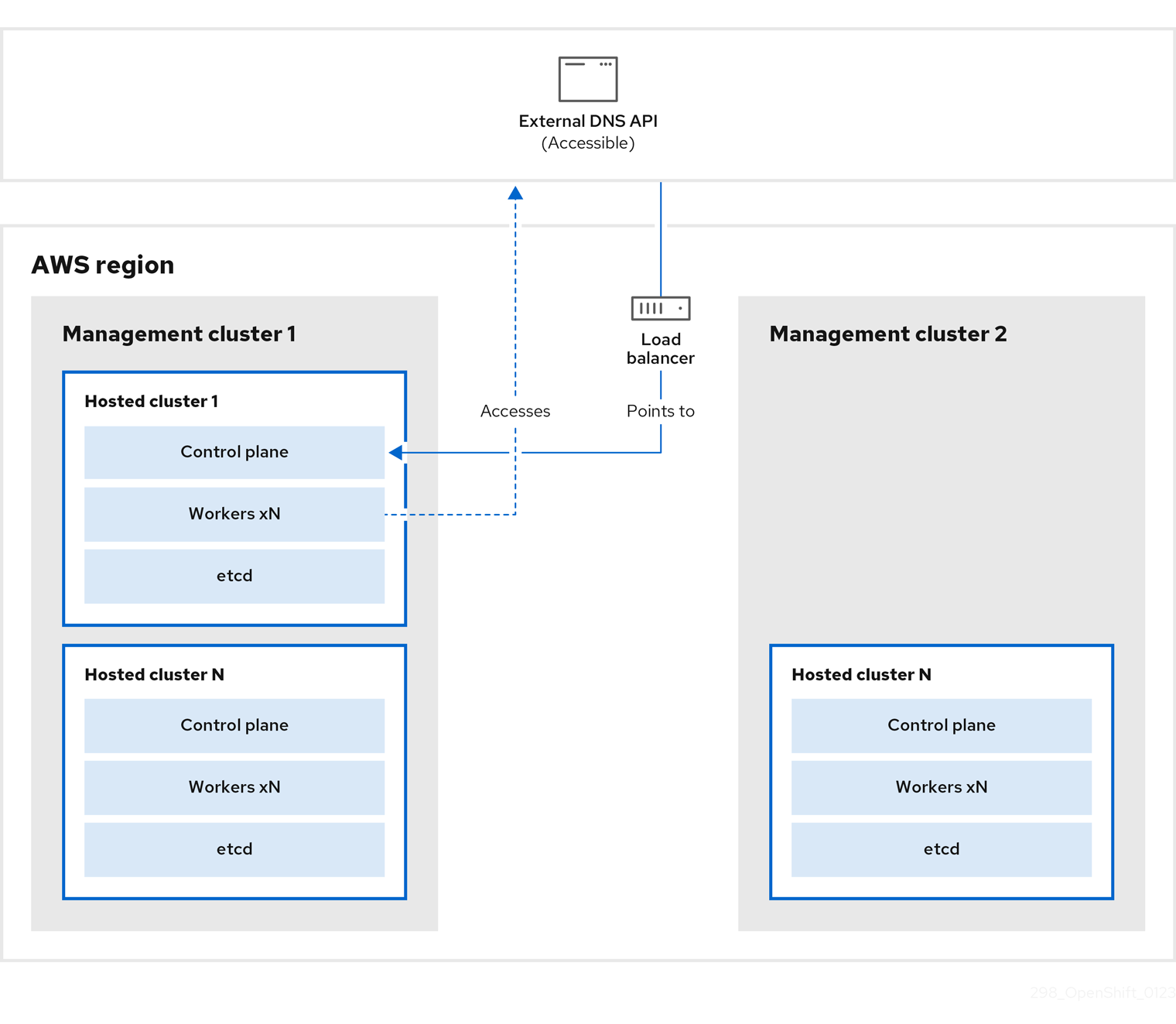

在管理集群 1 中,您可以将其视为源管理集群,control plane 和 worker 使用 ExternalDNS API 进行交互。可以访问外部 DNS API,并且一个负载均衡器位于管理集群之间。

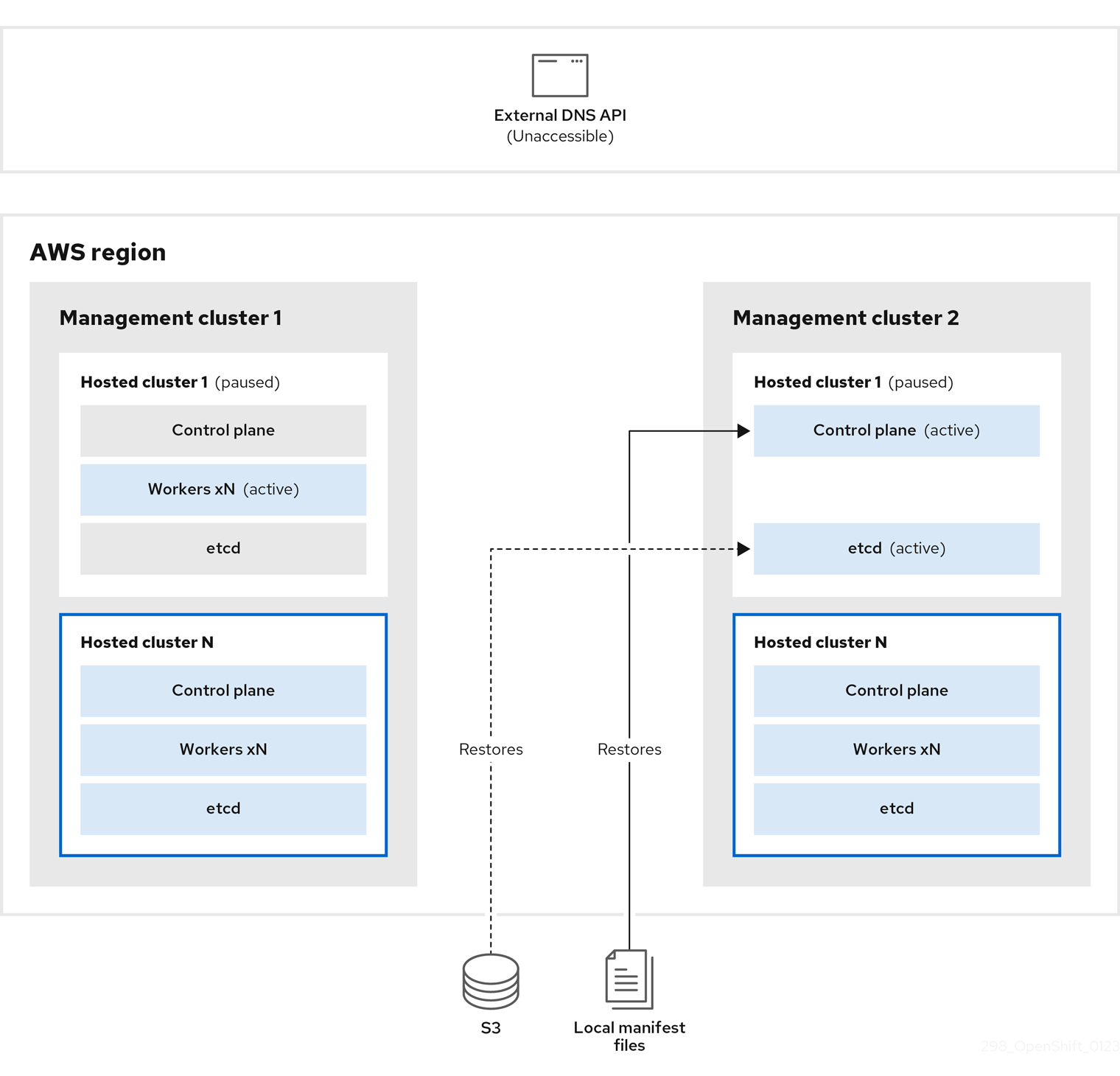

您为托管集群执行快照,其中包括 etcd、control plane 和 worker 节点。在此过程中,worker 节点仍然会尝试访问外部 DNS API,即使无法访问它,工作负载正在运行,control plane 存储在本地清单文件中,etcd 会备份到 S3 存储桶。data plane 处于活跃状态,control plane 已暂停。

在管理集群 2 中,您可以将其视为目标管理集群,您可以从 S3 存储桶中恢复 etcd,并从本地清单文件恢复 control plane。在此过程中,外部 DNS API 会停止,托管集群 API 变得不可访问,任何使用 API 的 worker 都无法更新其清单文件,但工作负载仍在运行。

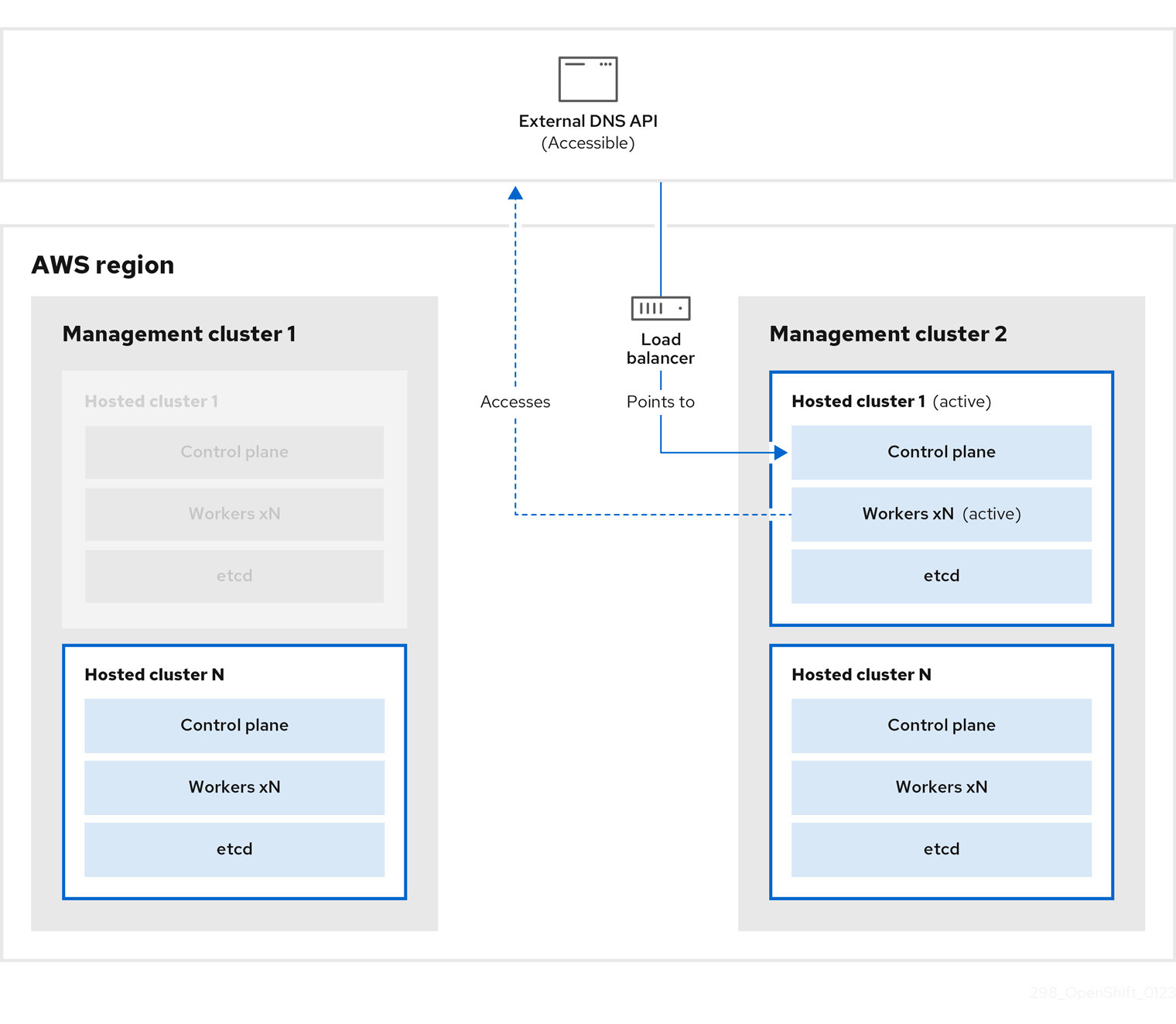

外部 DNS API 可以再次访问,worker 节点使用它来移至管理集群 2。外部 DNS API 可以访问指向 control plane 的负载均衡器。

在管理集群 2 中,control plane 和 worker 节点使用外部 DNS API 进行交互。资源从管理集群 1 中删除,但 etcd 的 S3 备份除外。如果您尝试在 mangagement 集群 1 上再次设置托管集群,它将无法正常工作。

9.5.2. 备份托管集群

要在目标管理集群中恢复托管集群,首先需要备份所有相关数据。

流程

输入以下命令创建 configmap 文件来声明源管理集群:

oc create configmap mgmt-parent-cluster -n default --from-literal=from=${MGMT_CLUSTER_NAME}$ oc create configmap mgmt-parent-cluster -n default --from-literal=from=${MGMT_CLUSTER_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow 输入这些命令,在托管集群和节点池中关闭协调:

PAUSED_UNTIL="true" oc patch -n ${HC_CLUSTER_NS} hostedclusters/${HC_CLUSTER_NAME} -p '{"spec":{"pausedUntil":"'${PAUSED_UNTIL}'"}}' --type=merge oc scale deployment -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 kube-apiserver openshift-apiserver openshift-oauth-apiserver control-plane-operator$ PAUSED_UNTIL="true" $ oc patch -n ${HC_CLUSTER_NS} hostedclusters/${HC_CLUSTER_NAME} -p '{"spec":{"pausedUntil":"'${PAUSED_UNTIL}'"}}' --type=merge $ oc scale deployment -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 kube-apiserver openshift-apiserver openshift-oauth-apiserver control-plane-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow PAUSED_UNTIL="true" oc patch -n ${HC_CLUSTER_NS} hostedclusters/${HC_CLUSTER_NAME} -p '{"spec":{"pausedUntil":"'${PAUSED_UNTIL}'"}}' --type=merge oc patch -n ${HC_CLUSTER_NS} nodepools/${NODEPOOLS} -p '{"spec":{"pausedUntil":"'${PAUSED_UNTIL}'"}}' --type=merge oc scale deployment -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 kube-apiserver openshift-apiserver openshift-oauth-apiserver control-plane-operator$ PAUSED_UNTIL="true" $ oc patch -n ${HC_CLUSTER_NS} hostedclusters/${HC_CLUSTER_NAME} -p '{"spec":{"pausedUntil":"'${PAUSED_UNTIL}'"}}' --type=merge $ oc patch -n ${HC_CLUSTER_NS} nodepools/${NODEPOOLS} -p '{"spec":{"pausedUntil":"'${PAUSED_UNTIL}'"}}' --type=merge $ oc scale deployment -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 kube-apiserver openshift-apiserver openshift-oauth-apiserver control-plane-operatorCopy to Clipboard Copied! Toggle word wrap Toggle overflow 运行此 bash 脚本备份 etcd 并将数据上传到 S3 存储桶:

提示将此脚本嵌套在函数中,并从主功能调用它。

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 有关备份 etcd 的更多信息,请参阅 "Backing and restore etcd on a hosted cluster"。

输入以下命令备份 Kubernetes 和 OpenShift Container Platform 对象。您需要备份以下对象:

-

来自 HostedCluster 命名空间的

HostedCluster和NodePool对象 -

来自 HostedCluster 命名空间中的

HostedClustersecret -

来自 Hosted Control Plane 命名空间中的

HostedControlPlane -

来自 Hosted Control Plane 命名空间的

Cluster -

来自 Hosted Control Plane 命名空间的

AWSCluster,AWSMachineTemplate, 和AWSMachine -

来自 Hosted Control Plane 命名空间的

MachineDeployments,MachineSets, 和Machines。 来自 Hosted Control Plane 命名空间的

ControlPlaneCopy to Clipboard Copied! Toggle word wrap Toggle overflow

-

来自 HostedCluster 命名空间的

输入以下命令清理

ControlPlane路由:oc delete routes -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --all$ oc delete routes -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --allCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入该命令,您可以启用 ExternalDNS Operator 来删除 Route53 条目。

运行该脚本验证 Route53 条目是否清理:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

验证

检查所有 OpenShift Container Platform 对象和 S3 存储桶,以验证所有内容是否如预期。

后续步骤

恢复托管集群。

9.5.3. 恢复托管集群

收集您备份和恢复目标管理集群中的所有对象。

先决条件

您已从源集群备份数据。

确保目标管理集群的 kubeconfig 文件放置在 KUBECONFIG 变量中,或者在 MGMT2_KUBECONFIG 变量中设置。使用 export KUBECONFIG=<Kubeconfig FilePath>,或者使用 export KUBECONFIG=${MGMT2_KUBECONFIG}。

流程

输入以下命令验证新管理集群是否不包含您要恢复的集群中的任何命名空间:

export KUBECONFIG=${MGMT2_KUBECONFIG}$ export KUBECONFIG=${MGMT2_KUBECONFIG}Copy to Clipboard Copied! Toggle word wrap Toggle overflow BACKUP_DIR=${HC_CLUSTER_DIR}/backup$ BACKUP_DIR=${HC_CLUSTER_DIR}/backupCopy to Clipboard Copied! Toggle word wrap Toggle overflow 目标管理集群中的命名空间删除

oc delete ns ${HC_CLUSTER_NS} || true$ oc delete ns ${HC_CLUSTER_NS} || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete ns ${HC_CLUSTER_NS}-{HC_CLUSTER_NAME} || true$ oc delete ns ${HC_CLUSTER_NS}-{HC_CLUSTER_NAME} || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令重新创建已删除的命名空间:

命名空间创建命令

oc new-project ${HC_CLUSTER_NS}$ oc new-project ${HC_CLUSTER_NS}Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc new-project ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}$ oc new-project ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令恢复 HC 命名空间中的 secret:

oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}/secret-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}/secret-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令恢复

HostedClustercontrol plane 命名空间中的对象:恢复 secret 命令

oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/secret-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/secret-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow 集群恢复命令

oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/hcp-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/hcp-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/cl-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/cl-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow 如果您要恢复节点和节点池来重复利用 AWS 实例,请输入以下命令恢复 HC control plane 命名空间中的对象:

AWS 命令

oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/awscl-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/awscl-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/awsmt-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/awsmt-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/awsm-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/awsm-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow 机器的命令

oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/machinedeployment-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/machinedeployment-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/machineset-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/machineset-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/machine-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}/machine-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow 运行此 bash 脚本恢复 etcd 数据和托管集群:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow 如果您要恢复节点和节点池来重复利用 AWS 实例,请输入以下命令恢复节点池:

oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}/np-*$ oc apply -f ${BACKUP_DIR}/namespaces/${HC_CLUSTER_NS}/np-*Copy to Clipboard Copied! Toggle word wrap Toggle overflow

验证

要验证节点是否已完全恢复,请使用此功能:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

后续步骤

关闭并删除集群。

9.5.4. 从源集群中删除托管集群

备份托管集群并将其恢复到目标管理集群后,您将关闭并删除源管理集群中的托管集群。

先决条件

您备份了数据并将其恢复到源管理集群。

确保目标管理集群的 kubeconfig 文件放置在 KUBECONFIG 变量中,或者在 MGMT_KUBECONFIG 变量中设置。使用 export KUBECONFIG=<Kubeconfig FilePath> 或使用脚本,请使用 export KUBECONFIG=${MGMT_KUBECONFIG}。

流程

输入以下命令来扩展

deployment和statefulset对象:重要如果其

spec.persistentVolumeClaimRetentionPolicy.whenScaled字段被设置为Delete,则不要扩展有状态的集合,因为这可能会导致数据丢失。作为临时解决方案,将

spec.persistentVolumeClaimRetentionPolicy.whenScaled字段的值更新为Retain。确保不存在协调有状态集的控制器,并将值返回为Delete,这可能会导致丢失数据。export KUBECONFIG=${MGMT_KUBECONFIG}$ export KUBECONFIG=${MGMT_KUBECONFIG}Copy to Clipboard Copied! Toggle word wrap Toggle overflow 缩减部署命令

oc scale deployment -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 --all$ oc scale deployment -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 --allCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc scale statefulset.apps -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 --all$ oc scale statefulset.apps -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --replicas=0 --allCopy to Clipboard Copied! Toggle word wrap Toggle overflow sleep 15

$ sleep 15Copy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令来删除

NodePool对象:NODEPOOLS=$(oc get nodepools -n ${HC_CLUSTER_NS} -o=jsonpath='{.items[?(@.spec.clusterName=="'${HC_CLUSTER_NAME}'")].metadata.name}') if [[ ! -z "${NODEPOOLS}" ]];then oc patch -n "${HC_CLUSTER_NS}" nodepool ${NODEPOOLS} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]' oc delete np -n ${HC_CLUSTER_NS} ${NODEPOOLS} fiNODEPOOLS=$(oc get nodepools -n ${HC_CLUSTER_NS} -o=jsonpath='{.items[?(@.spec.clusterName=="'${HC_CLUSTER_NAME}'")].metadata.name}') if [[ ! -z "${NODEPOOLS}" ]];then oc patch -n "${HC_CLUSTER_NS}" nodepool ${NODEPOOLS} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]' oc delete np -n ${HC_CLUSTER_NS} ${NODEPOOLS} fiCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令删除

machine和machineset对象:# Machines for m in $(oc get machines -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} -o name); do oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]' || true oc delete -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} || true done# Machines for m in $(oc get machines -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} -o name); do oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]' || true oc delete -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} || true doneCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete machineset -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --all || true$ oc delete machineset -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --all || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令删除集群对象:

C_NAME=$(oc get cluster -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} -o name)$ C_NAME=$(oc get cluster -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} -o name)Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${C_NAME} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]'$ oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${C_NAME} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]'Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete cluster.cluster.x-k8s.io -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --all$ oc delete cluster.cluster.x-k8s.io -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --allCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入这些命令来删除 AWS 机器 (Kubernetes 对象)。不用担心删除实际的 AWS 机器。云实例不会受到影响。

for m in $(oc get awsmachine.infrastructure.cluster.x-k8s.io -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} -o name) do oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]' || true oc delete -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} || true donefor m in $(oc get awsmachine.infrastructure.cluster.x-k8s.io -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} -o name) do oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]' || true oc delete -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} ${m} || true doneCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令删除

HostedControlPlane和ControlPlaneHC 命名空间对象:删除 HCP 和 ControlPlane HC NS 命令

oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} hostedcontrolplane.hypershift.openshift.io ${HC_CLUSTER_NAME} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]'$ oc patch -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} hostedcontrolplane.hypershift.openshift.io ${HC_CLUSTER_NAME} --type=json --patch='[ { "op":"remove", "path": "/metadata/finalizers" }]'Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete hostedcontrolplane.hypershift.openshift.io -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --all$ oc delete hostedcontrolplane.hypershift.openshift.io -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} --allCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete ns ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} || true$ oc delete ns ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME} || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow 输入以下命令删除

HostedCluster和 HC 命名空间对象:删除 HC 和 HC 命名空间命令

oc -n ${HC_CLUSTER_NS} patch hostedclusters ${HC_CLUSTER_NAME} -p '{"metadata":{"finalizers":null}}' --type merge || true$ oc -n ${HC_CLUSTER_NS} patch hostedclusters ${HC_CLUSTER_NAME} -p '{"metadata":{"finalizers":null}}' --type merge || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete hc -n ${HC_CLUSTER_NS} ${HC_CLUSTER_NAME} || true$ oc delete hc -n ${HC_CLUSTER_NS} ${HC_CLUSTER_NAME} || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc delete ns ${HC_CLUSTER_NS} || true$ oc delete ns ${HC_CLUSTER_NS} || trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow

验证

要验证所有内容是否正常工作,请输入以下命令:

验证命令

export KUBECONFIG=${MGMT2_KUBECONFIG}$ export KUBECONFIG=${MGMT2_KUBECONFIG}Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get hc -n ${HC_CLUSTER_NS}$ oc get hc -n ${HC_CLUSTER_NS}Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get np -n ${HC_CLUSTER_NS}$ oc get np -n ${HC_CLUSTER_NS}Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get pod -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}$ oc get pod -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get machines -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}$ oc get machines -n ${HC_CLUSTER_NS}-${HC_CLUSTER_NAME}Copy to Clipboard Copied! Toggle word wrap Toggle overflow HostedCluster 内的命令

export KUBECONFIG=${HC_KUBECONFIG}$ export KUBECONFIG=${HC_KUBECONFIG}Copy to Clipboard Copied! Toggle word wrap Toggle overflow oc get clusterversion

$ oc get clusterversionCopy to Clipboard Copied! Toggle word wrap Toggle overflow oc get nodes

$ oc get nodesCopy to Clipboard Copied! Toggle word wrap Toggle overflow

后续步骤

删除托管的集群中的 OVN pod,以便您可以连接到新管理集群中运行的新 OVN control plane:

-

使用托管的集群的 kubeconfig 路径加载

KUBECONFIG环境变量。 输入这个命令:

oc delete pod -n openshift-ovn-kubernetes --all

$ oc delete pod -n openshift-ovn-kubernetes --allCopy to Clipboard Copied! Toggle word wrap Toggle overflow